Hi @JunJian Xia,

Thanks for your query. Unfortunately, ADF does not have an out-of-the-box feature to partition data by file size. I recommend logging a feature request in the ADF feedback forum: https://feedback.azure.com/forums/270578-azure-data-factory All feedback shared in this forum is actively monitored and reviewed by the ADF engineering team. Please share the link to the feedback thread once you have posted it, so that other users with a similar idea can upvote and comment on your suggestion.

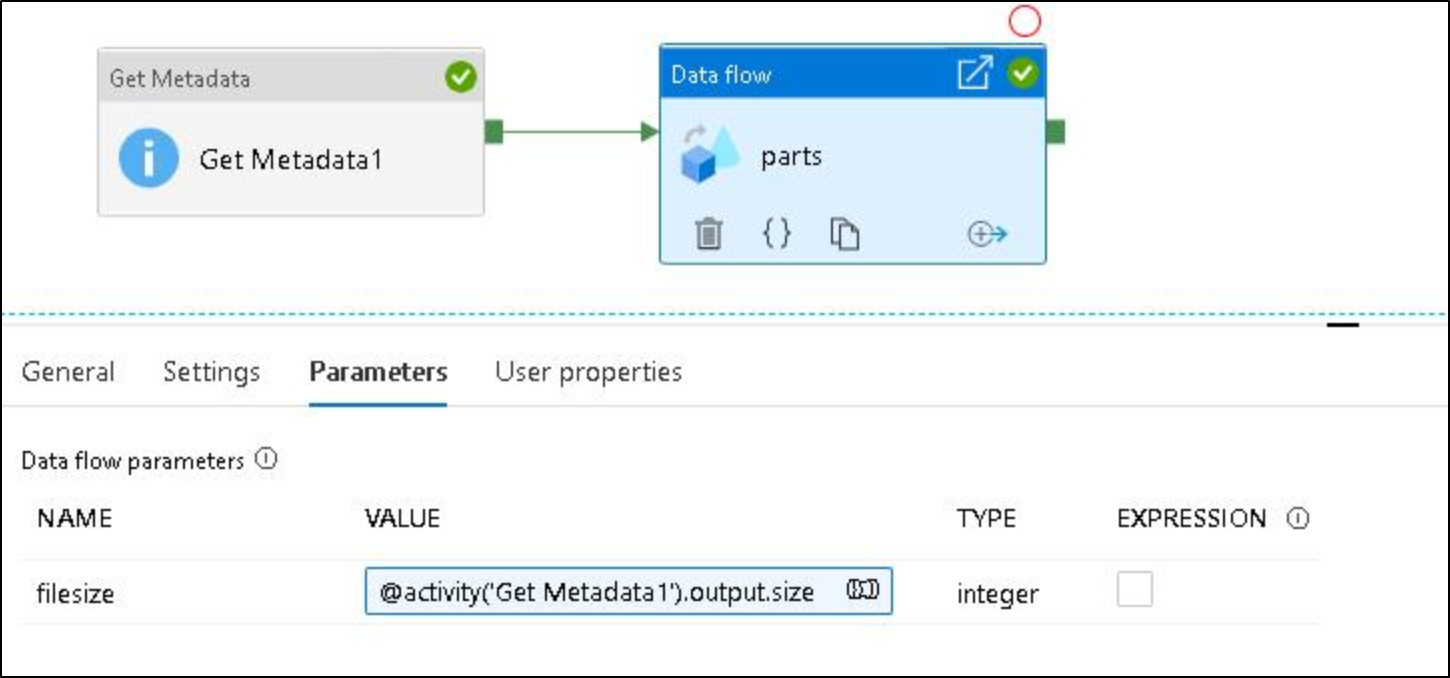

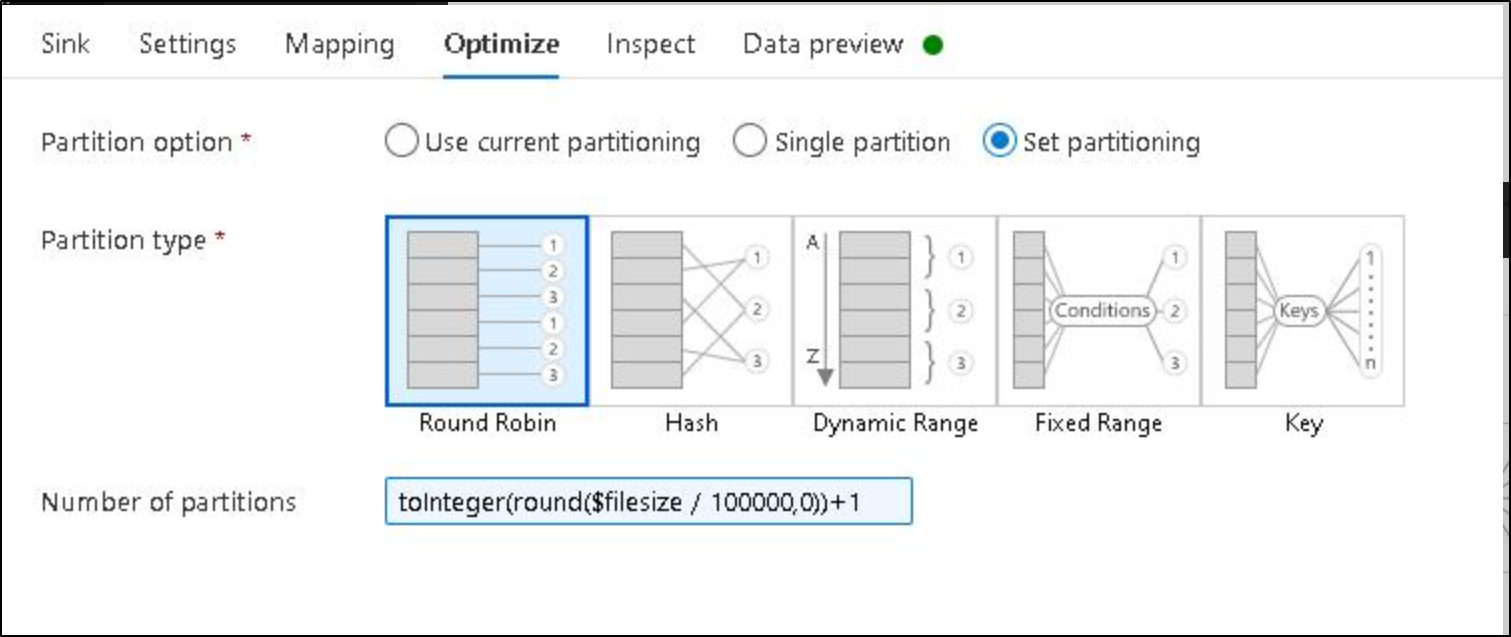

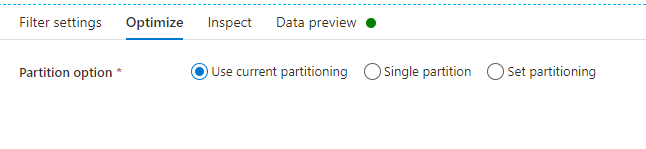

If your source is a file, you can parameterize the number of partitions based on the size of the incoming source file. To do that, use a Get Metadata activity to get the source file size and pass it as a parameter to the data flow, which then calculates the number of partitions. The screenshots above show an example.
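The calculation itself is a ceiling division of the file size by a target size per partition. The Get Metadata activity can return the file size in bytes through its `size` field. Below is a minimal Python sketch of that arithmetic, assuming a hypothetical target of 100 MiB per partition. In ADF itself you would express the same calculation in the pipeline or data flow expression language, not in Python.

```python
# Hypothetical target size per output partition (an assumption; tune for your data).
TARGET_PARTITION_BYTES = 100 * 1024 * 1024  # 100 MiB


def partition_count(file_size_bytes: int, target_bytes: int = TARGET_PARTITION_BYTES) -> int:
    """Return how many partitions are needed so that each one holds at most
    roughly target_bytes of the source file. Always returns at least 1."""
    if file_size_bytes < 0:
        raise ValueError("file size must be non-negative")
    if target_bytes <= 0:
        raise ValueError("target size must be positive")
    # Integer ceiling division avoids floating-point rounding on large sizes.
    return max(1, (file_size_bytes + target_bytes - 1) // target_bytes)
```

For example, a 250 MiB file with the 100 MiB target gives `partition_count(250 * 1024 * 1024) == 3`. An empty file still gets one partition, so the data flow never receives 0.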

If the source is a database table, you can use a Lookup activity to get the row count and partition based on that, as explained in this Stack Overflow thread: Azure Data Factory split file by file size
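This does not reproduce the exact method from that thread. It is a minimal Python sketch of one way to turn a row count into partitions. It assumes the Lookup returns the count from a query such as `SELECT COUNT(*) AS row_count FROM <your table>`, and it uses a hypothetical value of 1,000,000 rows per partition. Each `(offset, length)` pair could then drive one ranged read, for example one iteration of a ForEach loop.

```python
from typing import List, Tuple

# Hypothetical number of rows per output partition (an assumption).
ROWS_PER_PARTITION = 1_000_000


def row_ranges(row_count: int, rows_per_partition: int = ROWS_PER_PARTITION) -> List[Tuple[int, int]]:
    """Split row_count rows into consecutive (offset, length) chunks.

    Every chunk has rows_per_partition rows except possibly the last one.
    The number of partitions is len(result)."""
    if row_count < 0:
        raise ValueError("row count must be non-negative")
    if rows_per_partition <= 0:
        raise ValueError("rows per partition must be positive")
    return [
        (start, min(rows_per_partition, row_count - start))
        for start in range(0, row_count, rows_per_partition)
    ]
```

For 2,500,000 rows this yields three ranges: two full ranges of 1,000,000 rows and a final range of 500,000 rows.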

Another option is to create a Custom activity with your own data movement or transformation logic and use that activity in a pipeline.
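A Custom activity runs a command you supply, so the splitting logic can be an ordinary script. Below is a minimal Python sketch of such logic. It splits a line-oriented text file (for example CSV) into parts of roughly `max_bytes` each and never cuts a line in half. The function name, the part-naming scheme and the local paths are hypothetical. Downloading the source from storage and uploading the parts back are not shown.

```python
import os
from typing import List


def split_file_by_size(src_path: str, out_dir: str, max_bytes: int) -> List[str]:
    """Split a line-oriented file into parts of at most about max_bytes each.

    Lines are never split, so a part exceeds max_bytes only when a single
    line is longer than max_bytes. Returns the paths of the parts written."""
    if max_bytes <= 0:
        raise ValueError("max_bytes must be positive")
    os.makedirs(out_dir, exist_ok=True)
    base = os.path.basename(src_path)
    parts: List[str] = []
    out = None
    written = 0
    try:
        with open(src_path, "rb") as src:
            for line in src:
                # Open a new part for the first line, or when adding this line
                # would push a non-empty part past the size limit.
                if out is None or (written > 0 and written + len(line) > max_bytes):
                    if out is not None:
                        out.close()
                    path = os.path.join(out_dir, f"{base}.part{len(parts):04d}")
                    out = open(path, "wb")
                    parts.append(path)
                    written = 0
                out.write(line)
                written += len(line)
    finally:
        if out is not None:
            out.close()
    return parts
```

This sketch does not repeat a CSV header row in each part. If downstream consumers expect a header in every file, you would need to add that handling.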

Hope this info helps.

----------

Thank you

Please consider clicking "Accept Answer" and "Upvote" on the post that helps you, as it can benefit other community members.