Here is the other solution, @Ramamoorthy, Kesavan . It is quite long, but I provided pictures and videos to help.

In this solution I synchronize and record the file location / datetime for use in subsequent runs. To do this I leverage two key ideas/features.

First, I synchronize all references to the current datetime by creating a variable and setting its value to utcnow(). All other occurrences of utcnow() are replaced by references to this variable. There are a number of side-benefits to this. The side-benefits include making code simpler and easier to read, and avoiding discrepancies, and avoiding the midnight-to-next-day edge case.

Second, I leverage the csv dataset's Additional columns feature. By taking an (almost) empty source file, and adding the aforementioned utcnow() variable, I effectively can record the datetime used in creating the file name and folder path. In subsequent runs, I use the lookup activity to retrieve this value.

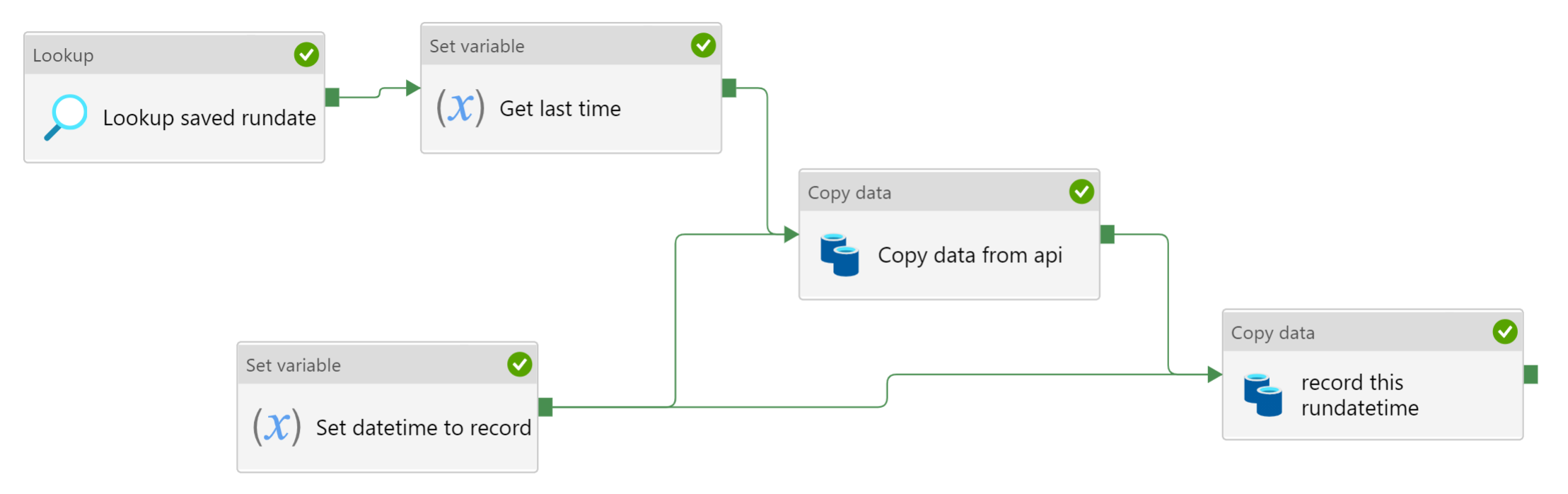

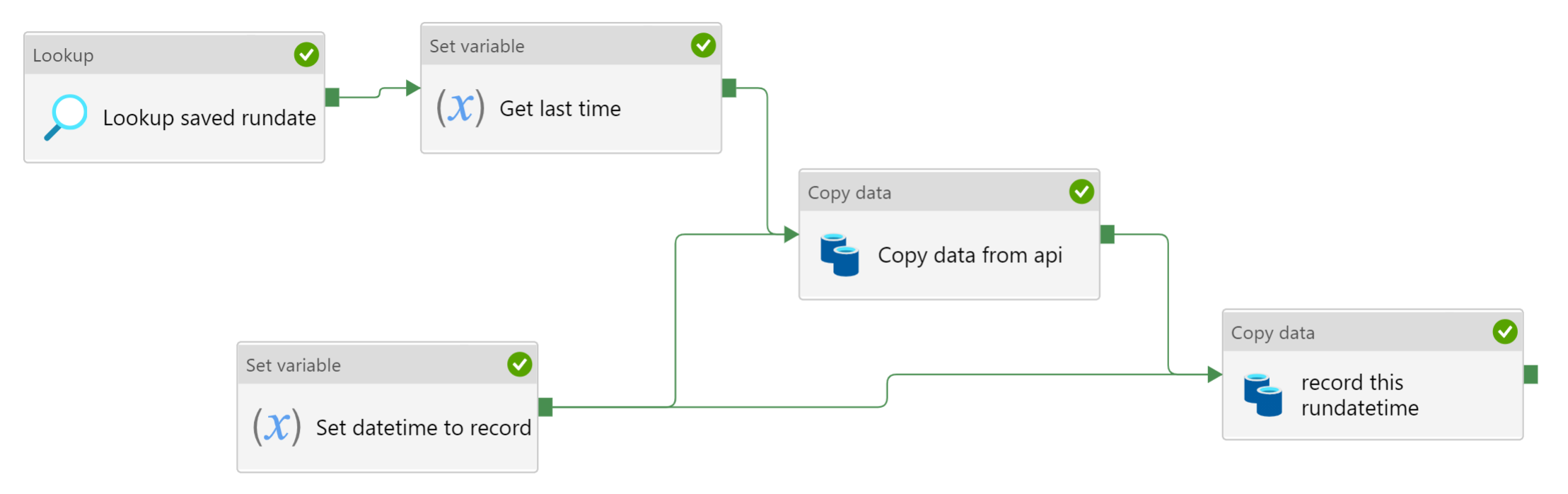

Overview:

Preparation work:

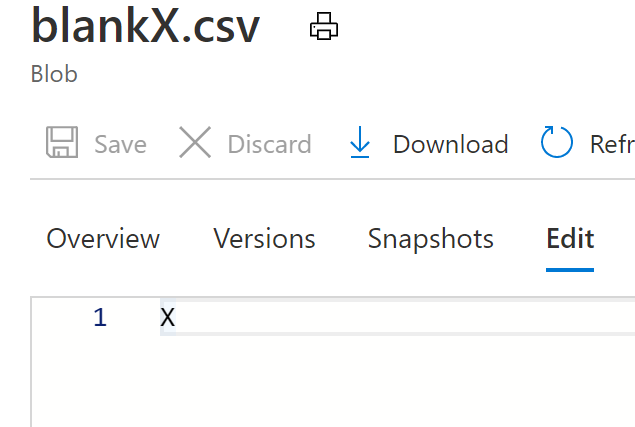

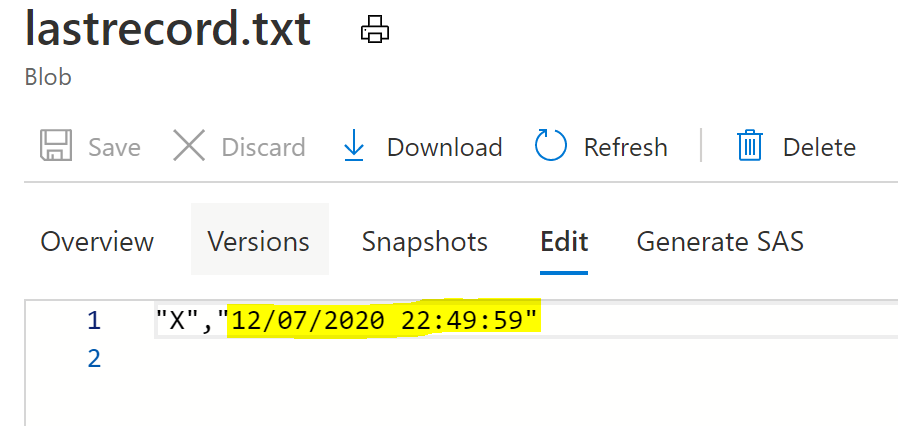

Create and upload an almost empty text file. This will be used as source when recording the datetime. In my example, I typed the letter X and left at that. The reason for leaving something is to ensure Data Factory interprests this as having 1 row rather than 0 rows.

Create a dataset for this blank file. Create a dataset for the file we will use to store the last successful run datetime ("LastRecord"). Both locations are permanent and unchanging. There is nothing special in these two delimited text datasets.

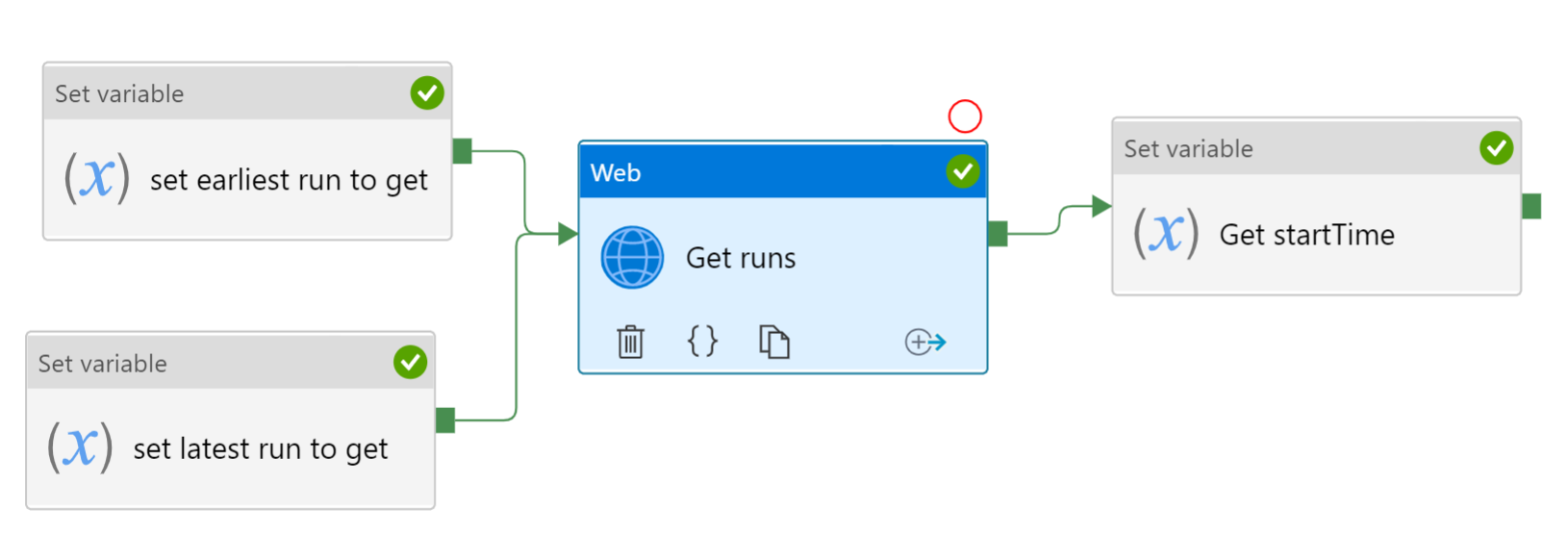

Create two variables, one for fetching the past run datetime ("Last_Time"), the other for storing this run datetime ("This_Time").

Create a Set Variable activity using the variable for storing this run datetime ("This_Time"). Assign it the expression @utcnow().

Create a Copy Activity, whose source is the blank file, and sink is the other dataset. In the Copy activity Source options, go to the bottom and add an "Additional column". The name can be anything you want, but the value should be @variables('This_Time')

Do a debug run to test. A new file should be written , and its contents should contain the datetime of your test.

If all this is workign correctly, you are ready to move on to the next step.

Note: before running the pipeline in production for the first time, you may need to manually edit this stored datetiem to line up with any data you wrote previously.

Re-arrange the activities from the above preparation steps into this image repeated from the overview.

First the lookup activity. The lookup activity uses the same dataset as in the prep-work's sink ("LastRecord"). We only need one row, so enable "first row only".

On success of the Lookup Activity, we have a Set Variable activity. To the variable "Last_Time" it fetches the datetime from the Lookup using expression @activity('Lookup saved rundate').output.firstRow.Prop_1

@activity('Lookup saved rundate').output.firstRow.Prop_1

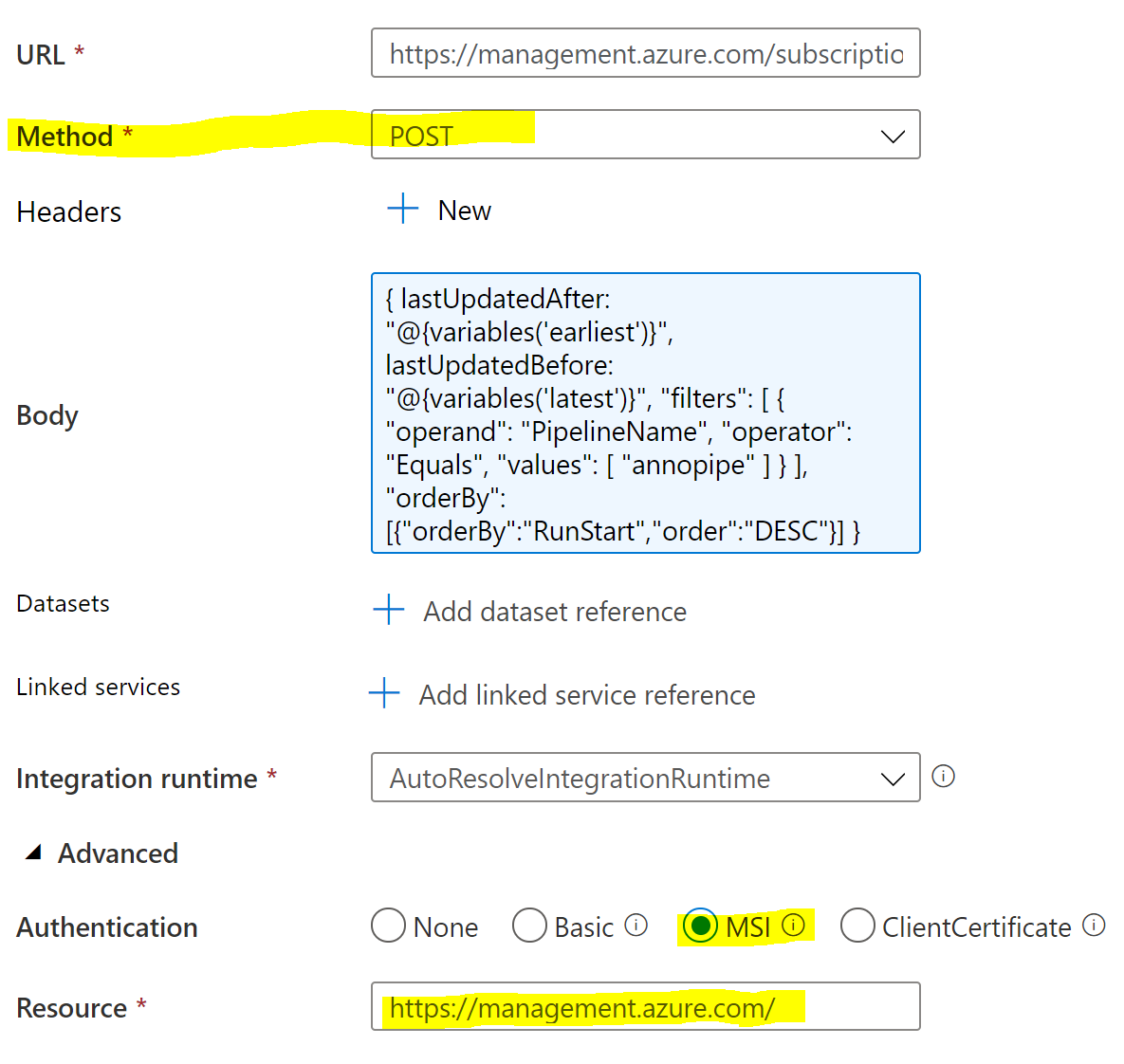

The first copy activity "Copy data from API", depends upon the success of both of the Set Variable activities. I assume you use the "Last_Time" variable in the source when calling the api.

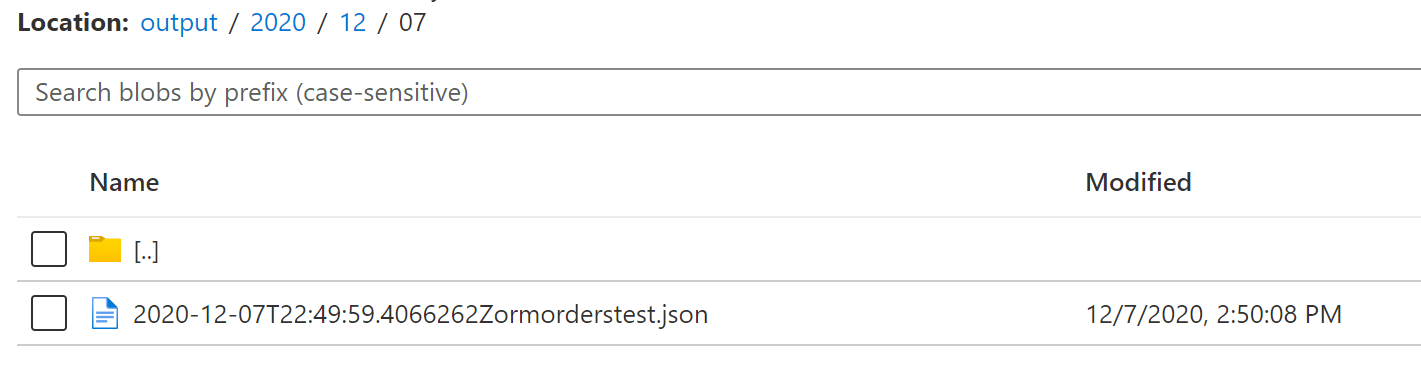

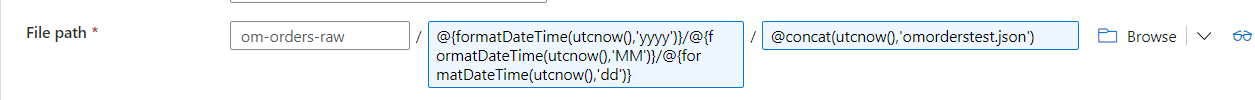

In the sink, I have re-written your dataset to use the "This_Time" variable passed in thru the Copy Activity.

With the dataset owning a parameter "dateparam",

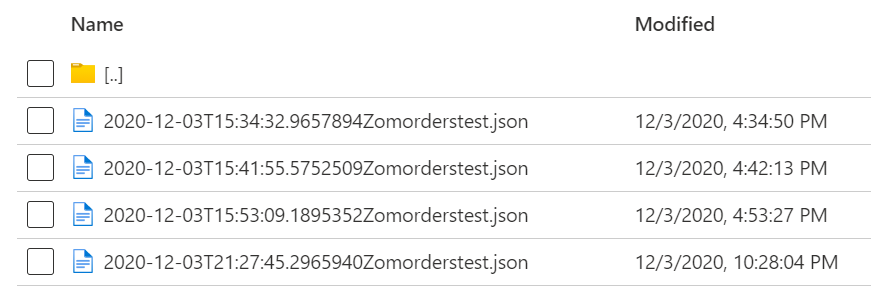

The folderpath @formatdatetime(dataset().dateparam,'yyyy/MM/dd')

The filename @concat(dataset().dateparam,'ormorderstest.json')

The second copy activity, ("record this rundatetime") which we set up in the preparation section, depends upon the success of the first copy activity.

If all goes well, your data should be copied into the corresponding folder, and filename. When that is done successfully, the datetime should be overwritten in that "LastRecord" blob/file.