You are looping over the records 100,000 records at a time. Try changing that to 1,000,000.

You will need to experiment and determine the best batch size for your process.

This browser is no longer supported.

Upgrade to Microsoft Edge to take advantage of the latest features, security updates, and technical support.

I have store procedure which delete data from sql server table. We store ticker wise data. Some ticker’s has high volume of data. Suppose for a ticker X which has 4 million data. When deleting 4 million data in batch then it takes lots of time.

Anyone Can have a look and tell me how to optimize this delete routine as a result 10 million data will be removing in few second.

Here is a snippet of my store procedure which is taking long time to delete 4 million data.

CREATE PROC USP_RemoveDataForCSMReport

(

@Ticker VARCHAR(20),

@Earning VARCHAR(10),

@PrePost VARCHAR(10)

)

AS

BEGIN TRY

DECLARE @r INT;

DECLARE @TickerName VARCHAR(20)

SET @TickerName=@Ticker

SET @r = 1;

WHILE @r > 0

BEGIN

BEGIN TRANSACTION;

DELETE TOP (100000) FROM tblOutputDetl_csmtuner where Ticker=@TickerName

SET @r = @@ROWCOUNT;

COMMIT TRANSACTION;

END

SET @r = 1;

WHILE @r > 0

BEGIN

BEGIN TRANSACTION;

DELETE TOP (100000) FROM tblQCComment_CSMTuner where Ticker=@TickerName

SET @r = @@ROWCOUNT;

COMMIT TRANSACTION;

END

SET @r = 1;

WHILE @r > 0

BEGIN

BEGIN TRANSACTION;

DELETE TOP (100000) FROM tblOutputDetl_Period_CSMTuner where Ticker=@TickerName

SET @r = @@ROWCOUNT;

COMMIT TRANSACTION;

END

Select * from tblearnings WHERE EarningTickerID IN

(

Select MAX(EarningTickerID) from tblearnings where Ticker=@TickerName and Earning=@Earning and PrePost=@PrePost

)

END TRY

BEGIN CATCH

ROLLBACK TRAN

END CATCH

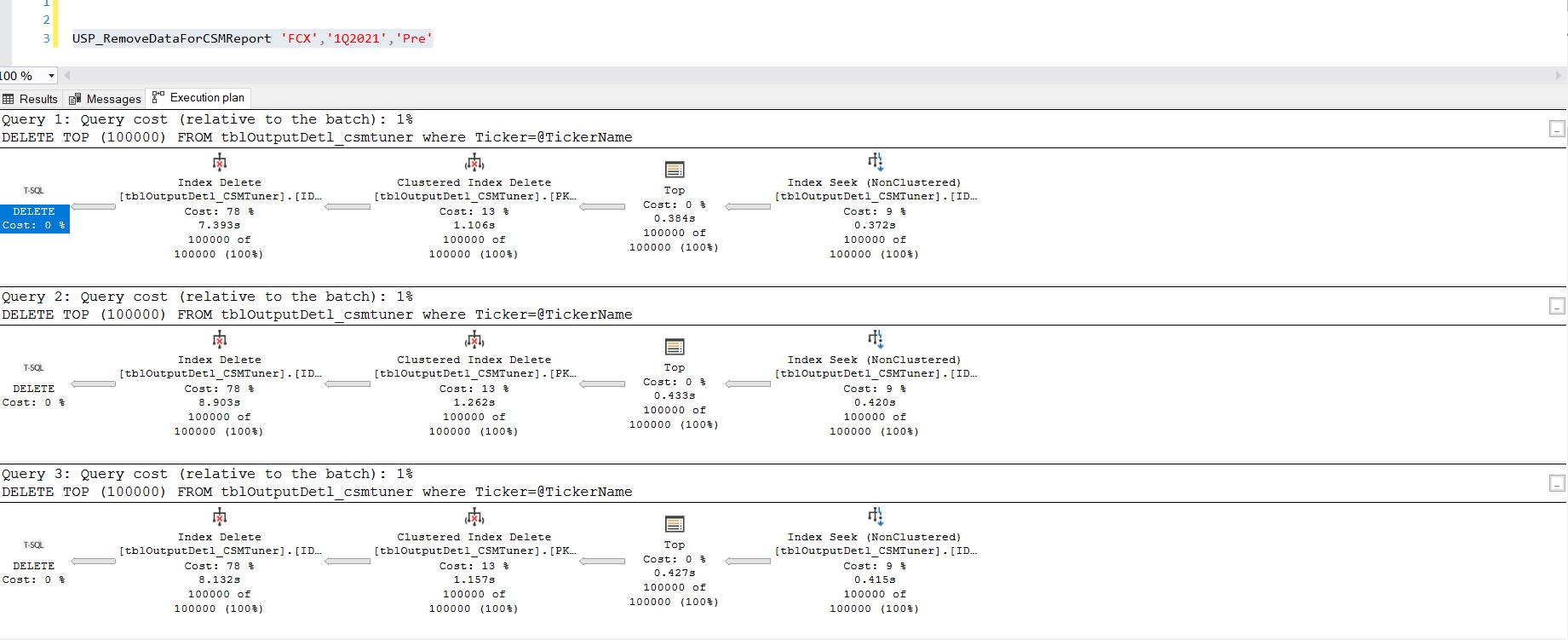

Execution Plan Attached.  Waiting for guide line to speed up high volume of data deletion operation. Thanks

Waiting for guide line to speed up high volume of data deletion operation. Thanks

You are looping over the records 100,000 records at a time. Try changing that to 1,000,000.

You will need to experiment and determine the best batch size for your process.

Tom is wrong when he says that nothing can be optimized with a DELETE statement. Although, these plans look good, they are all index seeks. A common error with batching is that people don't have an index to support the batching, and therefore each iteration requires a scan.

Then again, that is only one of the tables.

One thing to consider though is there are other indexes on the table, beside the one that is used in the query and the clustered index. In that case, it may pay off to disable those indexes and rebuild once you are done.

And of course, you need to check for blocking - that could be the reason things are going slow. You should have the database for yourself for this type of operations.

Finally a small optimization with the WHILE statement. Initiate @r to 100000 and change it to:

WHILE @r = 1000000