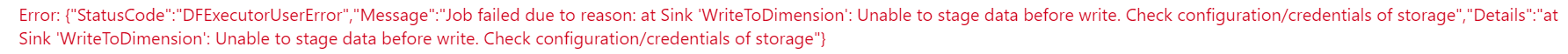

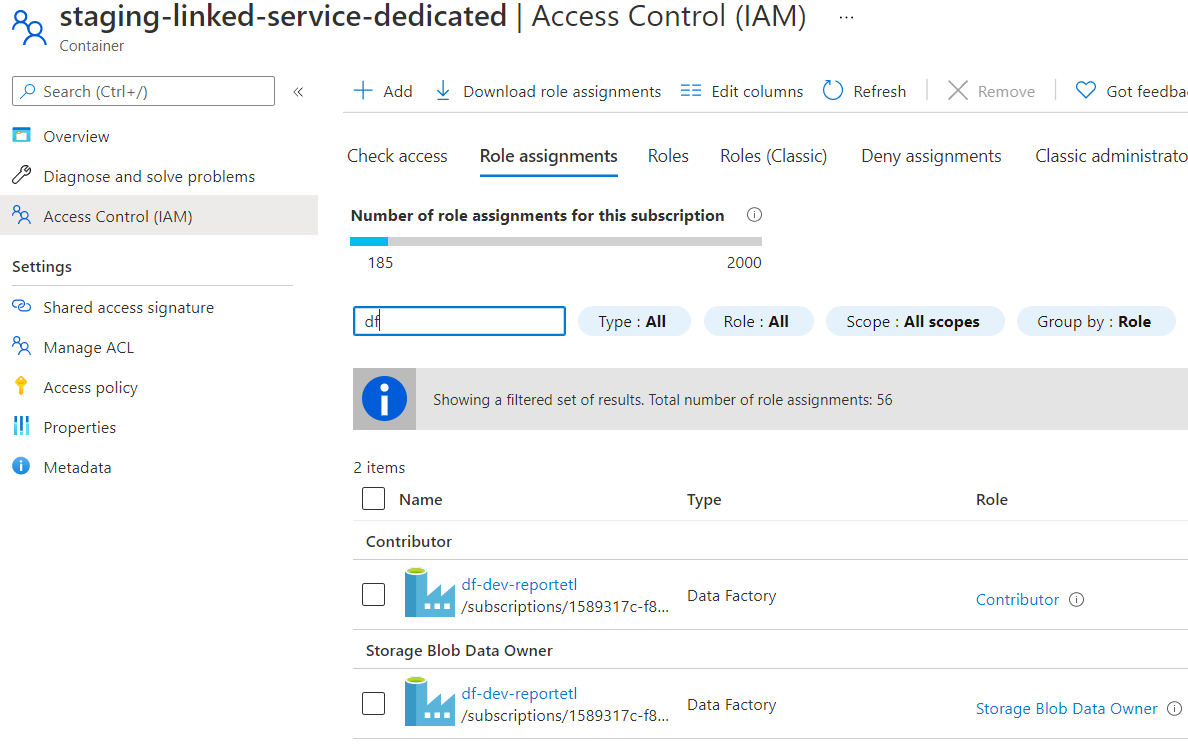

I worked through this with Microsoft support. The error I was receiving was completely misleading and had nothing to do with the issue that caused it. There was no credential issue, and nothing was wrong with the data flow object the error identified (it seems to have defaulted to the last object). The case went through to the DW/Synapse team, who examined what was being run in the background. It looks like it was an unhandled exception that didn't surface the right error.

The issue was that I was reading data from a dimension table in Synapse. The dimension has an "Active" field indicating whether the row is active. It is a boolean on the SQL DW side, and I needed to keep only the rows where Active = true. In the "Filter On" expression of the Filter transformation I had:

toBoolean(byName('Active'))==true()

This was the problem. For some reason the underlying engine doesn't know what to do with this expression and cannot equate the true value coming from DW with Data Factory's definition of true. As advised by the support team, I changed it to the following:

toString(byName('Active'))=='true'

which worked.
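For anyone editing the data flow script directly rather than through the expression builder, here is a rough sketch of what the Filter transformation might look like with the workaround applied. The stream names `source1` and `FilterActive` are hypothetical placeholders, not names from my actual flow, so substitute your own:

```
source1 filter(toString(byName('Active')) == 'true') ~> FilterActive
```

The only part that matters is the expression inside `filter(...)`: the boolean column is converted to a string and compared against the literal `'true'` instead of being compared against `true()`.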

This was a pretty simple but obscure solution, really a workaround. It was pretty much a mystery that the filter was causing the issue, as the filter was never mentioned in any of the error messages. I have told the support specialists at Microsoft that this isn't really acceptable: the appropriate error must be surfaced for the appropriate transformation, otherwise we are just guessing at solutions. My data flow has 20 objects in it, and guessing which one is causing an error (when I know the error returned may or may not be the actual error) isn't particularly enjoyable. Symptomatic of a "not quite ready for production" immature software product...