How to run .NET for Apache Spark using a Docker image?

- I created a Dockerfile based on the .NET SDK image.

- I copied the Debug folder of my console application into the image.

- I created a package folder containing all the software required to run the .NET for Apache Spark application and copied it into the image as well.

The Dockerfile is shown below:

FROM mcr.microsoft.com/dotnet/sdk:5.0

WORKDIR /app/Debug

COPY ./Debug .

WORKDIR /app/DotnetSpark.Package

COPY ./DotnetSpark.Package .

ENV HADOOP_HOME=C:/app/DotnetSpark.Package/hadoop

ENV SPARK_HOME=C:/app/DotnetSpark.Package/spark-3.0.0-bin-hadoop2.7

ENV DOTNET_WORKER_DIR=C:/app/DotnetSpark.Package/Microsoft.Spark.Worker-1.0.0

ENV DOTNET_ASSEMBLY_SEARCH_PATHS=C:/app/Debug

ENV JAVA_HOME=C:/app/DotnetSpark.Package/java

ENV DOTNET="C:/Program Files/dotnet"

ENV TEMP=C:/Windows/Temp

ENV TMP=C:/Windows/Temp

ENV PATH="${HADOOP_HOME}/bin;${SPARK_HOME}/bin;${DOTNET_WORKER_DIR};${JAVA_HOME}/bin;${DOTNET};C:/Windows/system32;C:/Windows;${TEMP};${TMP}"

ENTRYPOINT ["cmd.exe"]

After running the container, a command prompt opens, and I then tried to execute the command below:

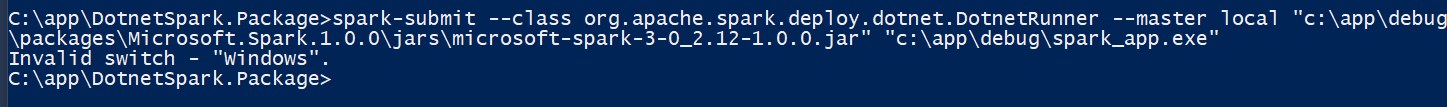

spark-submit --class org.apache.spark.deploy.dotnet.DotnetRunner --master local "c:\app\debug\packages\Microsoft.Spark.1.0.0\jars\microsoft-spark-3-0_2.12-1.0.0.jar" "c:\app\debug\spark_app.exe"

However, this command neither throws an exception nor produces a result; I only get the output shown in the screenshot.
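To narrow down whether the environment itself is the problem, checks along the following lines could be run at the same prompt inside the container. This is only a sketch: it assumes the variables from the Dockerfile are visible in the session and that `java`, `dotnet`, and `spark-submit` are meant to resolve through `PATH`. These checks are not part of what I originally ran.

```bat
rem Print the variables set in the Dockerfile to confirm they are visible here.
echo %SPARK_HOME%
echo %JAVA_HOME%
echo %DOTNET_WORKER_DIR%
echo %PATH%

rem Confirm that each tool resolves through PATH and starts at all.
java -version
dotnet --info
spark-submit --version
```

If one of these prints nothing or reports that the command is not recognized, the matching path in the image is the first thing to check.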

Can anyone help me resolve this problem or suggest a way to run .NET for Apache Spark using Docker?