Hello @Goldner, Eli,

Apologies for the delayed response.

Your scenario is interesting. We realize that the key column choice is not optimal in some cases, and we will look into how to improve this in future releases.

In the meantime, for these scenarios, it may be best to create the table manually and then select it as an existing table when configuring the output.
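If it helps, below is a minimal sketch of the manual approach. It uses Python only to generate the T-SQL `CREATE TABLE` statement, so you control the key and the column sizes yourself. The helper `build_create_table`, the `Column` type, the table name `Telemetry` and its columns are all hypothetical; adjust them to your job's output schema, run the printed statement against your database, and then pick that table as the existing table in the output.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Column:
    """Hypothetical column description: name, T-SQL type, nullability."""
    name: str
    sql_type: str
    nullable: bool = True


def build_create_table(table: str, columns: list[Column], key: list[str]) -> str:
    """Hypothetical helper that returns a CREATE TABLE statement with an
    explicit clustered primary key on the columns you choose."""
    names = {c.name for c in columns}
    missing = [k for k in key if k not in names]
    if missing:
        raise ValueError(f"key columns not in table: {missing}")

    lines = []
    for c in columns:
        # Columns in a PRIMARY KEY must be NOT NULL, so key columns are
        # forced to NOT NULL regardless of the nullable flag.
        null = "NULL" if c.nullable and c.name not in key else "NOT NULL"
        lines.append(f"    [{c.name}] {c.sql_type} {null}")

    key_cols = ", ".join(f"[{k}]" for k in key)
    lines.append(f"    CONSTRAINT [PK_{table}] PRIMARY KEY CLUSTERED ({key_cols})")
    body = ",\n".join(lines)
    return f"CREATE TABLE [dbo].[{table}] (\n{body}\n);"


if __name__ == "__main__":
    # Example schema (assumed): a short key column and a wide payload column.
    ddl = build_create_table(
        "Telemetry",
        [
            Column("DeviceId", "NVARCHAR(64)", nullable=False),
            Column("EventTime", "DATETIME2", nullable=False),
            Column("Payload", "NVARCHAR(4000)"),
        ],
        key=["DeviceId", "EventTime"],
    )
    print(ddl)
```

Keeping the key columns narrow (here `NVARCHAR(64)` rather than `NVARCHAR(4000)`) is deliberate: SQL Server limits the size of an index key, so wide text columns are usually poor key candidates.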

The ASA portal does not create a key for the new table, only an index. Also, when ASA creates a table, the largest nvarchar size it sets is 4000, not 8000 as you described, so I wonder whether the behavior you saw was caused by another product.

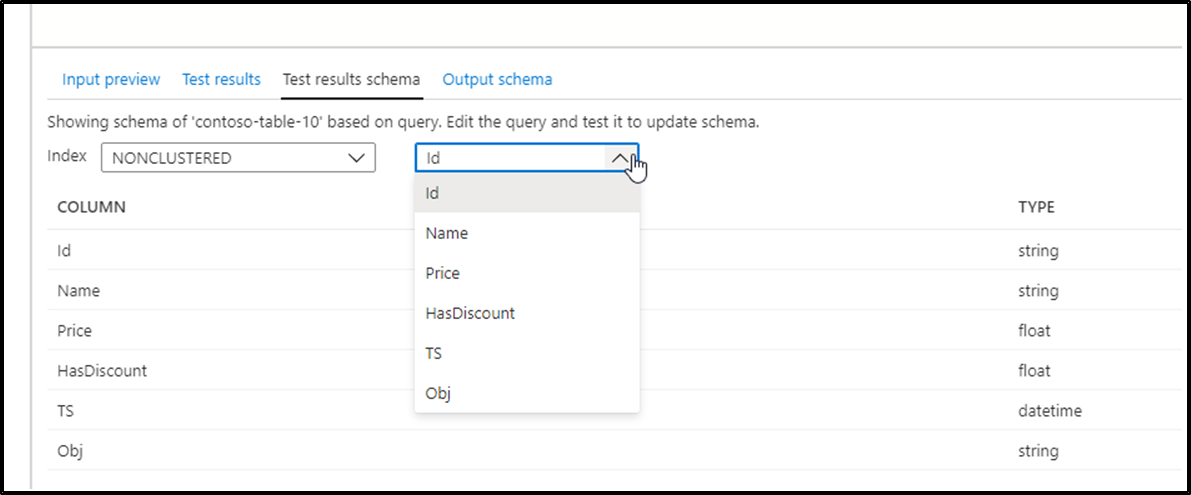

As for the index column, you can pick which column to use before creating the table.

Hope this helps. Do let us know if you have any further queries.
