Hi,

I'm building my first "web scraper". There are probably a dozen ways to do this, but I want all of it to run in Azure, which leads me to function apps and logic apps.

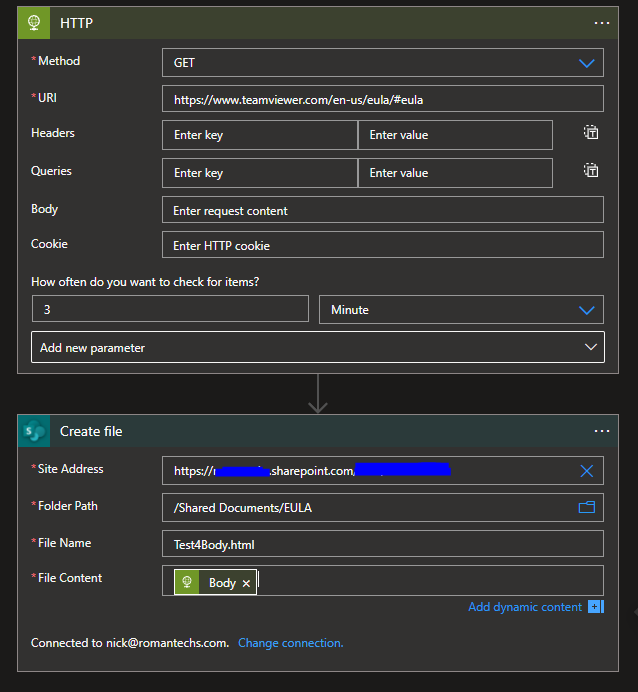

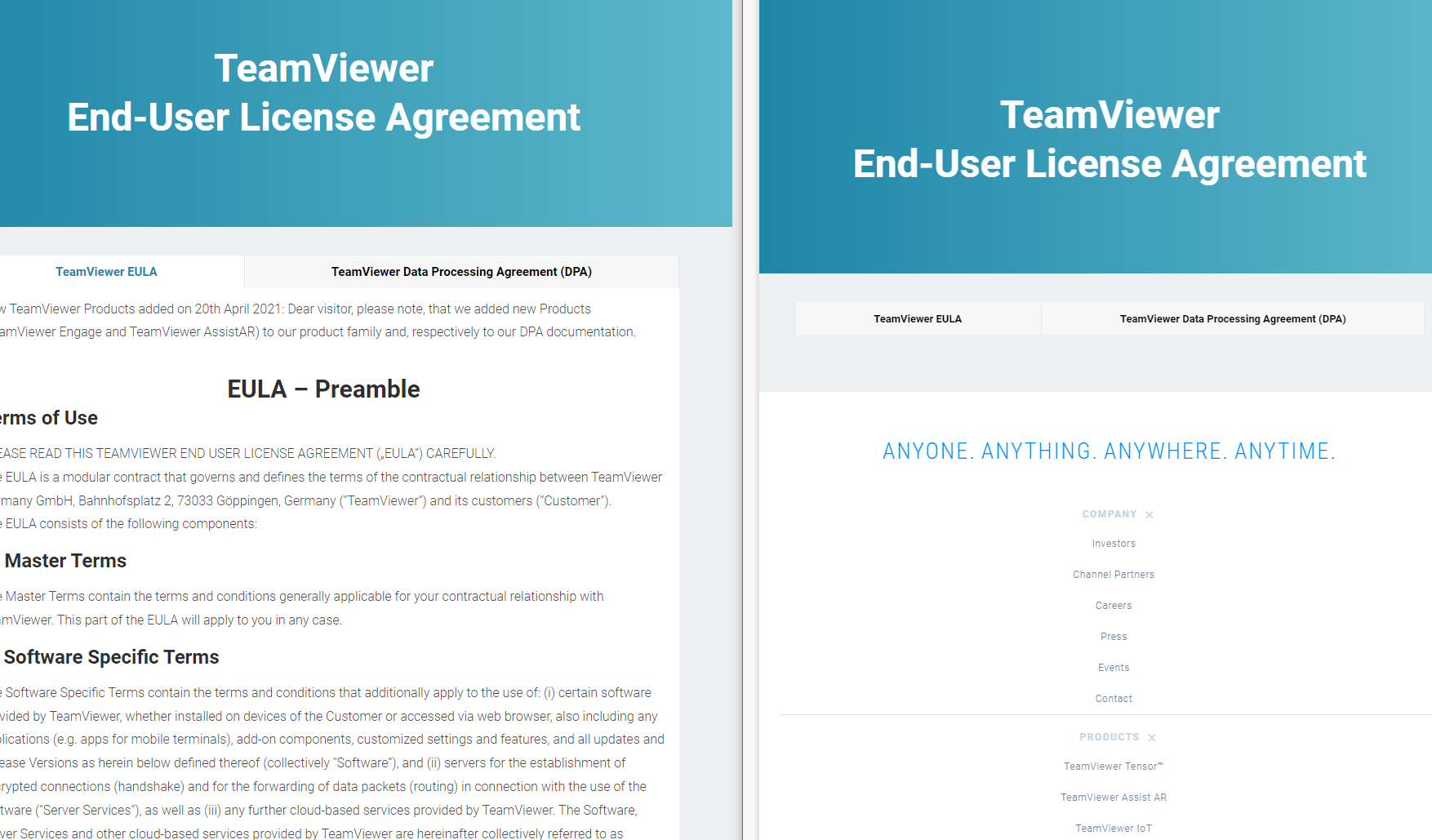

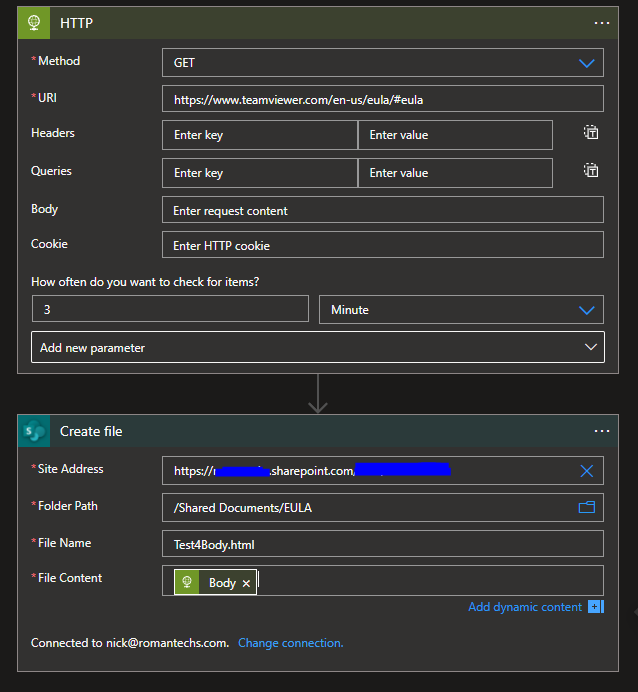

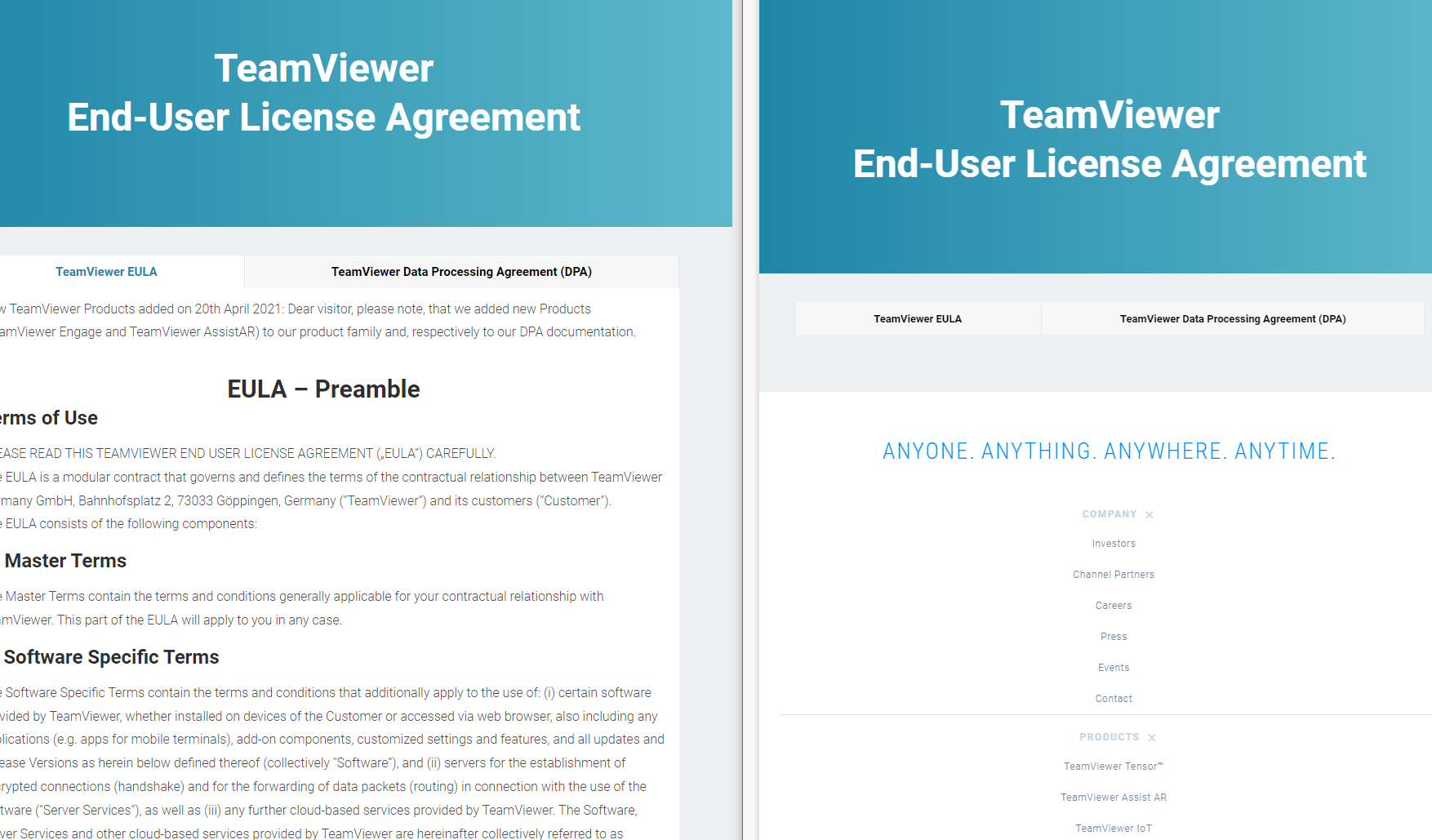

I built a logic app that runs an HTTP GET against a URL and then creates an HTML file in a SharePoint document library. This got me very close, but because of the way the page (https://www.teamviewer.com/en-us/eula/#eula) is formatted, the resulting file does not contain all of the text on the webpage.

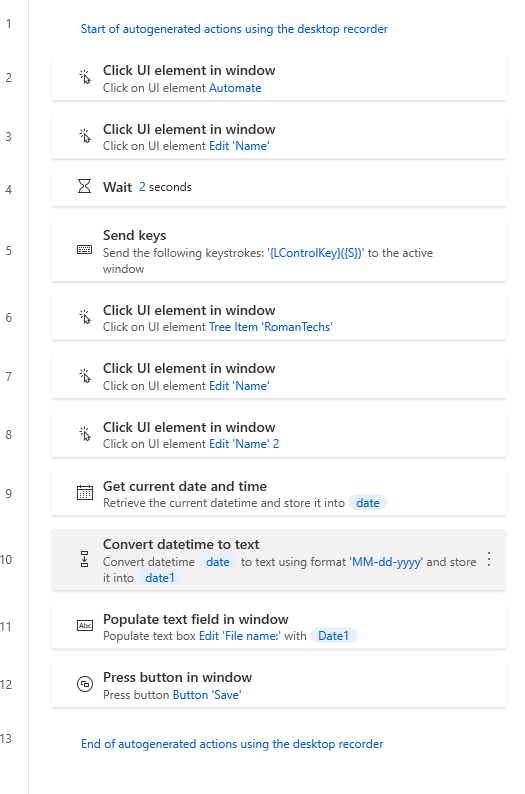

The other way I could accomplish this is by simply downloading the HTML file from the URL. If I right-click and save the page in a browser, the saved file displays everything I want, but I can't find a way to download a webpage as a file using a logic app or a function app.
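To make "downloading the HTML file" concrete, here is a minimal sketch of what I imagine the function app doing, assuming a Python function app is an option. The function name `download_page`, the destination path, and the browser-like `User-Agent` header are my own placeholders, not anything from Azure. It only saves the raw response body that the server returns, so if the missing text is added by JavaScript after the page loads, I would expect this to have the same gap as my logic app.

```python
import urllib.request
from pathlib import Path


def download_page(url: str, dest: str, timeout: float = 30.0) -> int:
    """Fetch the raw response body at `url` and write it unchanged to `dest`.

    Returns the number of bytes written. No JavaScript is executed, so the
    saved file contains only what the server sends in the initial response.
    """
    # Assumption: a browser-like User-Agent, since some sites respond
    # differently to clients they do not recognise.
    request = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = response.read()

    # Write bytes as-is so the page's original encoding is preserved.
    Path(dest).write_bytes(body)
    return len(body)
```

Calling `download_page("https://www.teamviewer.com/en-us/eula/", "eula.html")` would be the equivalent of the HTTP GET plus "create file" steps in my logic app, only with the file written locally instead of to SharePoint.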

I'm attaching two pictures: one of the logic app that I made, and one showing the file the logic app creates in SharePoint next to how the original site looks.