Hi @Yash Tamakuwala,

Thank you for using the Microsoft Q&A platform and posting your query.

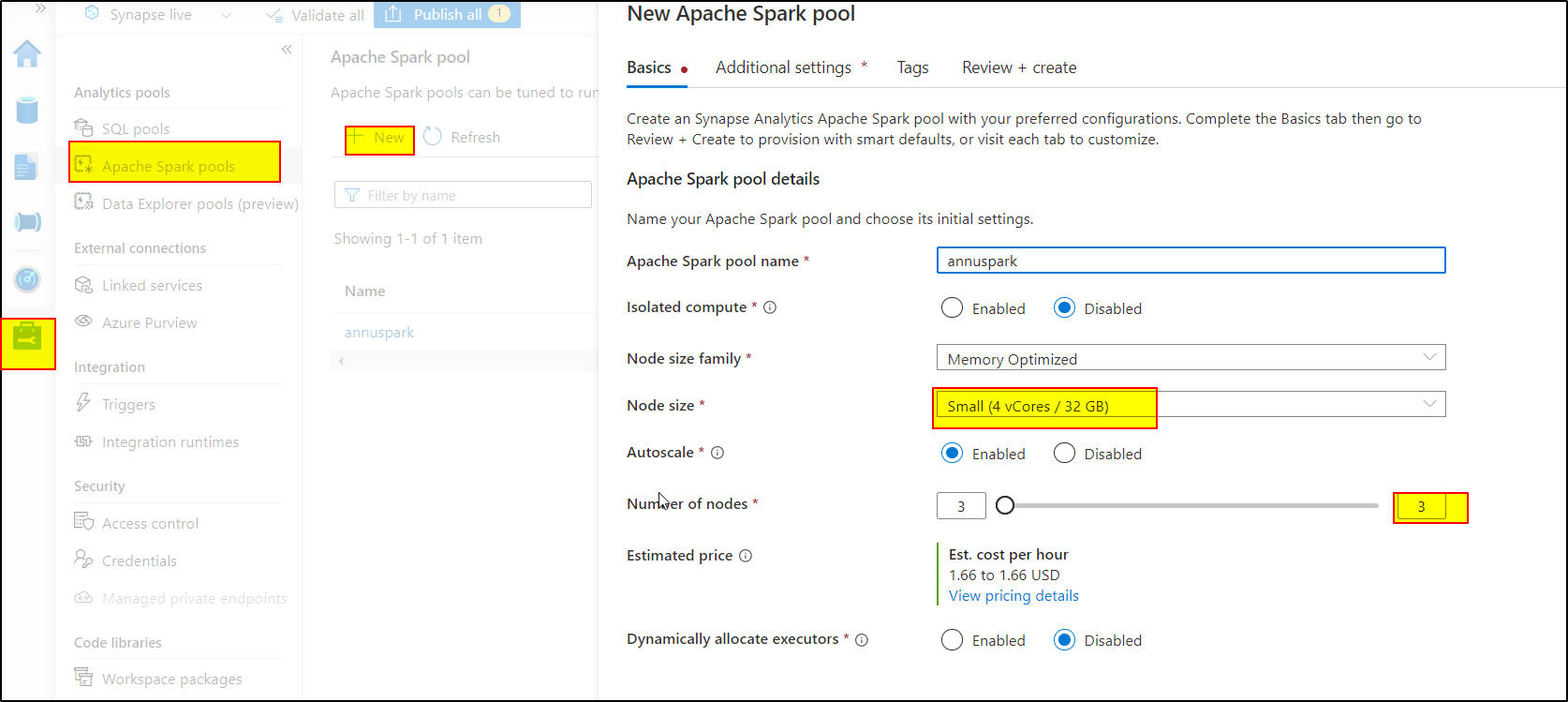

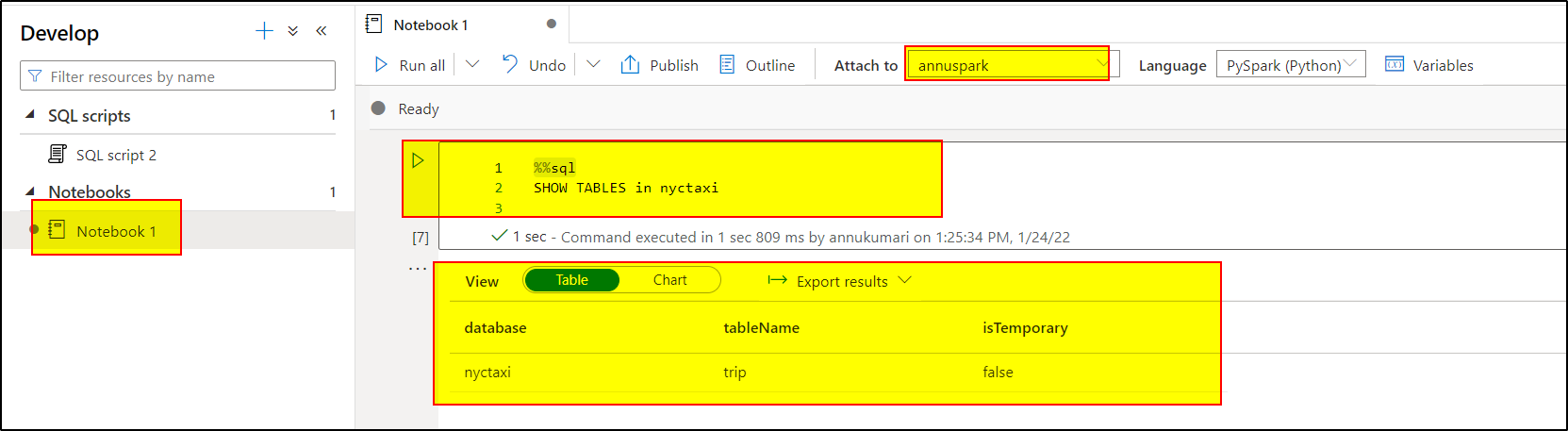

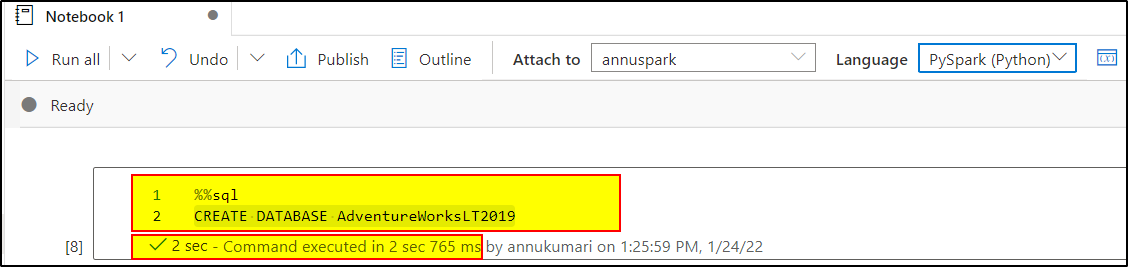

In your Azure Synapse workspace, go to the Develop tab and create a new notebook to run these queries. The notebook must be attached to an Apache Spark pool. You can create the Spark pool in the Manage tab of the Synapse workspace and then attach your notebook to it.

Note: Spark pools are a provisioned service, so you pay for the resources you provision. To keep the charge as low as possible, choose the Small node size and set the maximum number of nodes to 3.

1. SHOW TABLES

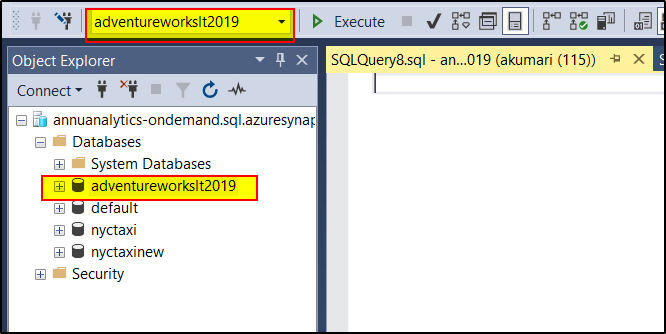

2. CREATE DATABASE AdventureWorksLT2019

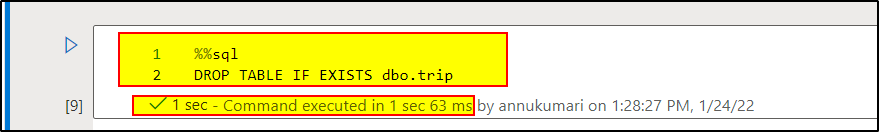

3. DROP TABLE IF EXISTS table_name
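If you prefer a PySpark cell to separate SQL cells, the three statements above can be sketched as below. This is only a minimal sketch: it assumes the `spark` session that a Synapse notebook attached to a Spark pool provides, and the function name `run_spark_sql_examples` and its parameters are hypothetical, chosen for illustration.

```python
def run_spark_sql_examples(spark, database="AdventureWorksLT2019", table="table_name"):
    """Run the three statements listed above, in order, and return them.

    `spark` is assumed to be the SparkSession that the notebook exposes
    once it is attached to a Spark pool. `table_name` is the placeholder
    from the list above; replace it with a real table name.
    """
    statements = [
        "SHOW TABLES",
        f"CREATE DATABASE {database}",
        f"DROP TABLE IF EXISTS {table}",
    ]
    for statement in statements:
        result = spark.sql(statement)
        # Only SHOW TABLES returns rows worth displaying.
        if statement.startswith("SHOW"):
            result.show()
    return statements
```

In a notebook cell you would call `run_spark_sql_examples(spark)`. Note that `CREATE DATABASE` fails if the database already exists; `CREATE DATABASE IF NOT EXISTS` avoids that if you plan to rerun the cell.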

Hope this helps. Please let us know if you have any further queries.
