Dear Team,

We have a requirement to ingest network user log traffic data and build a Power BI report on top of it.

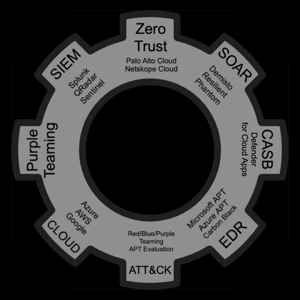

Since it is log data, we don't want to store it in our Azure SQL Warehouse DB. We would like to implement a different, more effective architecture in Azure to ingest and store this data.

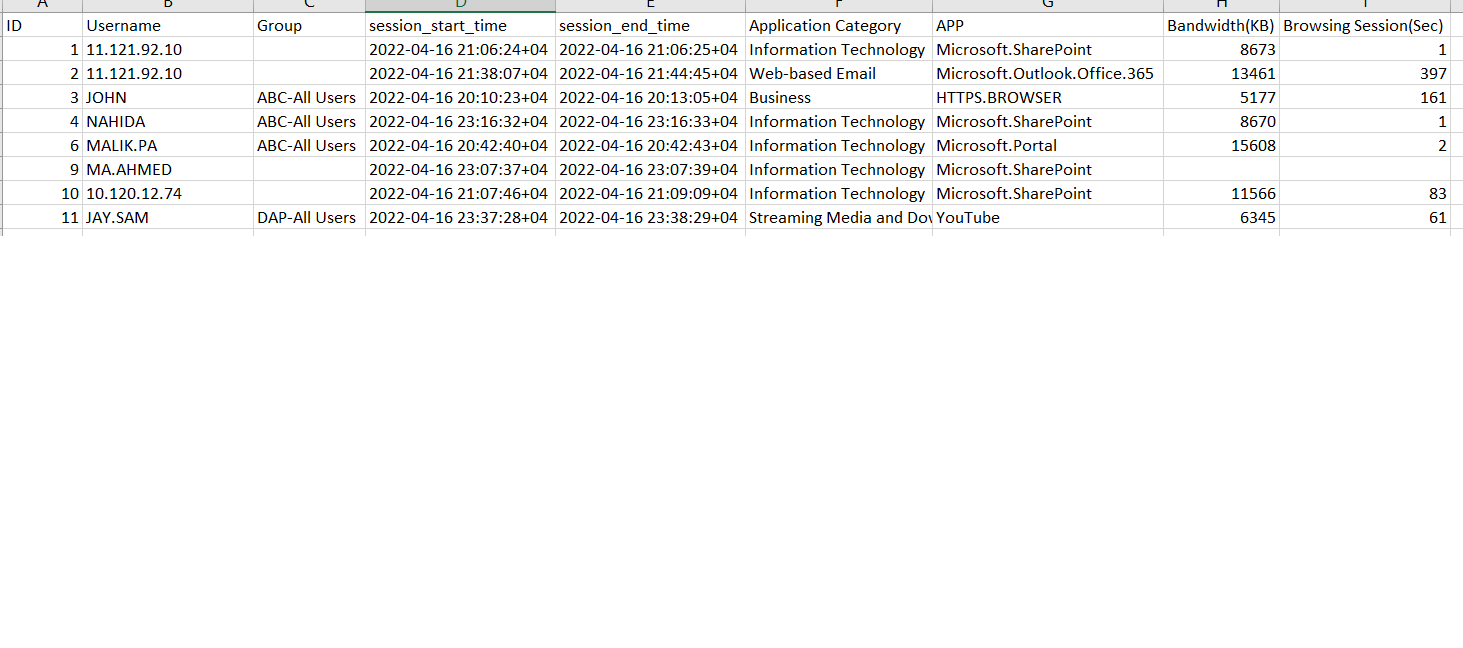

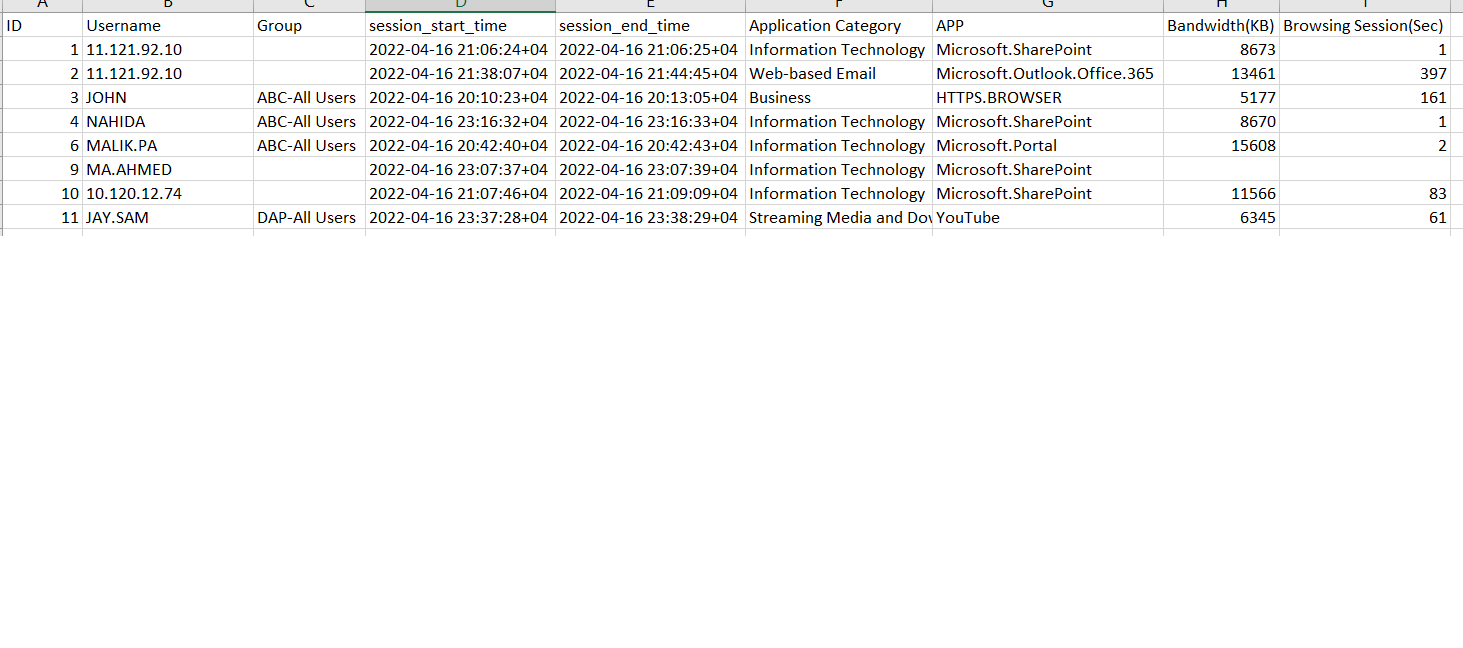

Log Data Sample Below:

Source for this file:

The vendor's network application generates this file on an hourly basis and transfers it to our SFTP server. So we need to process 24 files each day, with a huge volume of log data in each file.

Please advise which architecture and which Azure service best fit this scenario. I would prefer to use some form of live streaming to ingest the data. Kindly share your suggestions.

Thanks,

Divakar
