Error:

    {"message":"Job failed due to reason: at Sink 'MetaSink': org.apache.spark.sql.AnalysisException: The column number of the existing table default.phaseone(struct<>) doesn't match the data schema(struct<Id:int,Name:string>);. Details:org.apache.spark.sql.AnalysisException: The column number of the existing table default.phaseone(struct<>) doesn't match the data schema(struct<Id:int,Name:string>);\n\tat org.apache.spark.sql.execution.datasources.PreprocessTableCreation$$anonfun$apply$2.applyOrElse(rules.scala:131)\n\tat org.apache.spark.sql.execution.datasources.PreprocessTableCreation$$anonfun$apply$2.applyOrElse(rules.scala:76)\n\tat org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper$$anonfun$resolveOperatorsDown$1$$anonfun$2.apply(AnalysisHelper.scala:108)\n\tat org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper$$anonfun$resolveOperatorsDown$1$$anonfun$2.apply(AnalysisHelper.scala:108)\n\tat org.apache.spark.sql.catalyst.trees.CurrentOrigin$.withOrigin(TreeNode.scala:69)\n\tat org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper$$anonfun$resolveOperatorsDown$1.apply(AnalysisHelper.scala:107)\n\tat org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper$$anonfun$resolveOperatorsDown$1.apply(AnalysisHelper.scala:106)\n\tat org.ap","failureType":"UserError","target":"DataFlow_SourceToLanding","errorCode":"DFExecutorUserError"}
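The message is raised by Spark's `PreprocessTableCreation` analysis rule (the `rules.scala` frames in the trace). As far as I can tell from Spark's source, when data is appended to a table that already exists, that rule compares the number of columns recorded for the table in the catalog with the number of columns in the incoming data. The sketch below is a simplified Python model of that one comparison, not Spark's actual code; the `check_append` and `catalog_string` helpers are hypothetical. It shows why a table recorded as `struct<>` (zero columns) fails against a two-column data schema even when the names and types would otherwise line up.

```python
from typing import List, Tuple

# A schema is modelled as an ordered list of (column_name, type) pairs.
Schema = List[Tuple[str, str]]


def catalog_string(schema: Schema) -> str:
    """Render a schema the way the error message does, e.g. struct<Id:int>."""
    return "struct<" + ",".join(f"{name}:{dtype}" for name, dtype in schema) + ">"


def check_append(table_name: str, existing: Schema, data: Schema) -> None:
    """Simplified model (hypothetical helper, not Spark's implementation).

    Only the column counts are compared, which is the comparison that
    produces this particular error message.
    """
    if len(existing) != len(data):
        raise ValueError(
            f"The column number of the existing table {table_name}"
            f"({catalog_string(existing)}) doesn't match the data schema"
            f"({catalog_string(data)})"
        )


data_schema = [("Id", "int"), ("Name", "string")]

# The catalog entry for default.phaseone has no columns, as in the error.
try:
    check_append("default.phaseone", [], data_schema)
except ValueError as err:
    print(err)

# With both columns registered for the table, the check passes.
check_append("default.phaseone", [("Id", "int"), ("Name", "string")], data_schema)
print("ok")
```

The zero-column `struct<>` is the detail worth checking: it suggests the catalog entry for `default.phaseone` has no columns recorded, even though the Delta table is described below as `(Id INT, Name STRING)`.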

Here are the steps I performed:

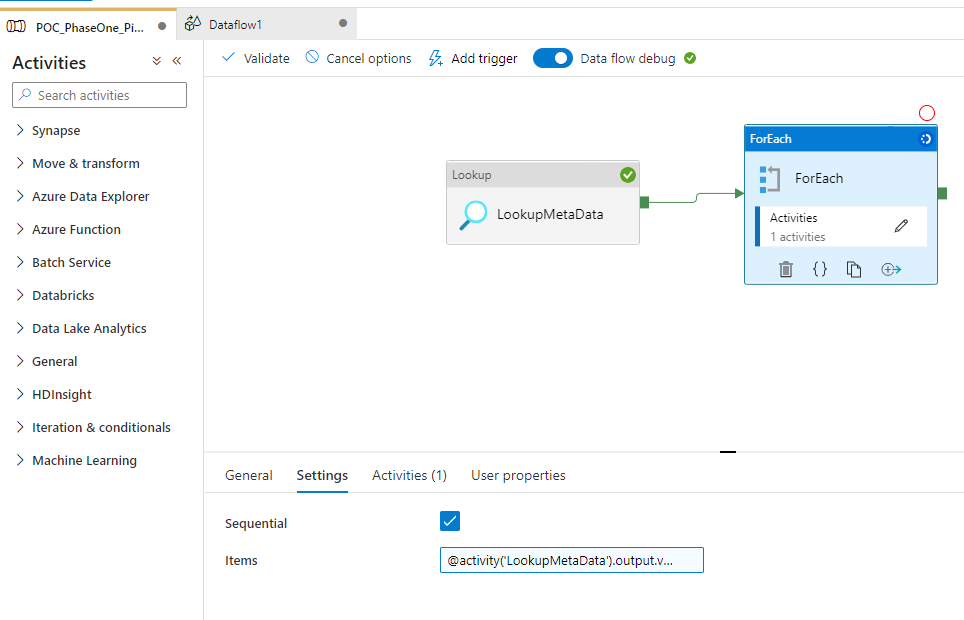

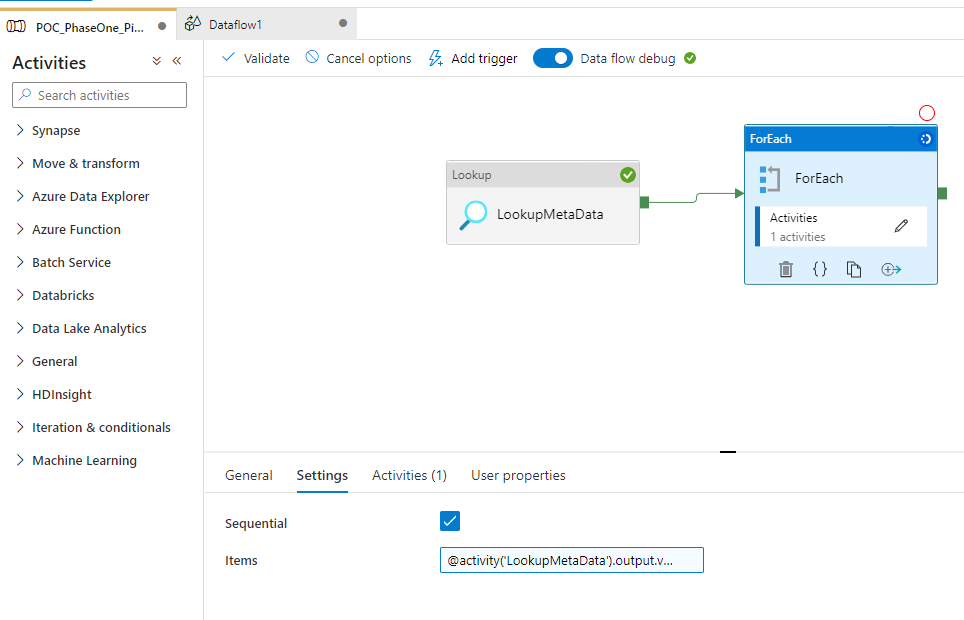

Step 1: Got the metadata using a Lookup activity.

Step 2: Passed the values to a ForEach loop so that the tables are processed one by one.
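Steps 1 and 2 amount to a loop over the Lookup activity's output. The sketch below models that in plain Python, assuming the Lookup runs with "First row only" turned off, so its output carries the rows in a `value` array (which a ForEach would typically take as `@activity('...').output.value`). The `TableName` and `ColumnList` field names, the second table, and the parameter names `tableName` and `columns` are all hypothetical; substitute whatever the metadata query and data flow actually use.

```python
from typing import Any, Dict, Iterator

# Hypothetical Lookup activity output with "First row only" disabled:
# the rows returned by the metadata query sit under "value".
lookup_output: Dict[str, Any] = {
    "count": 2,
    "value": [
        {"TableName": "phaseone", "ColumnList": "Id,Name"},
        {"TableName": "phasetwo", "ColumnList": "Id,Name"},  # hypothetical table
    ],
}


def foreach_items(output: Dict[str, Any]) -> Iterator[Dict[str, str]]:
    """Yield one set of data flow parameters per table, like a ForEach over output.value."""
    for item in output["value"]:
        # Each iteration would hand these values to the data flow parameters.
        yield {"tableName": item["TableName"], "columns": item["ColumnList"]}


for params in foreach_items(lookup_output):
    print(params)
```

Processing the tables strictly one at a time corresponds to the ForEach activity's Sequential option; the Python loop is sequential by nature.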

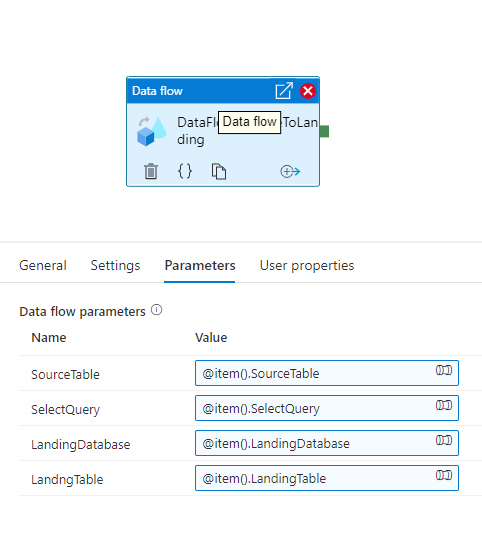

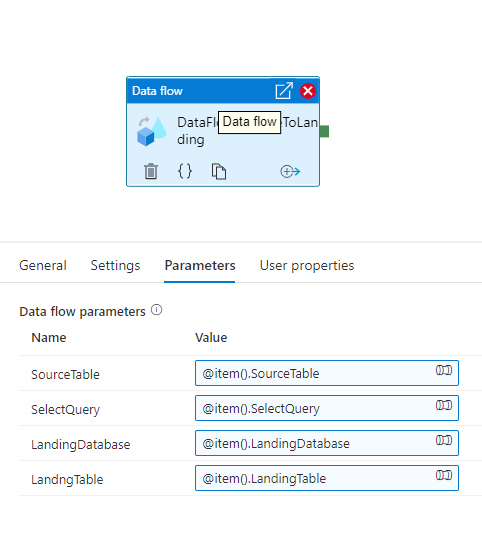

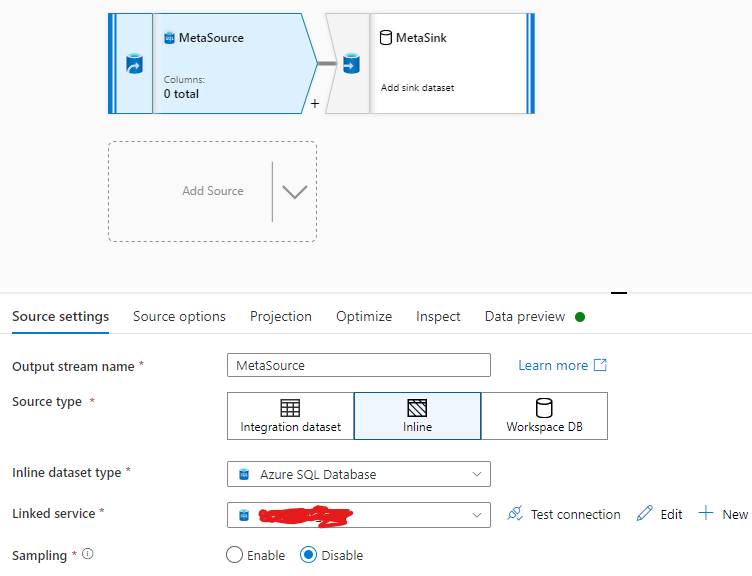

Step 3: Created parameters for the metadata in the data flow.

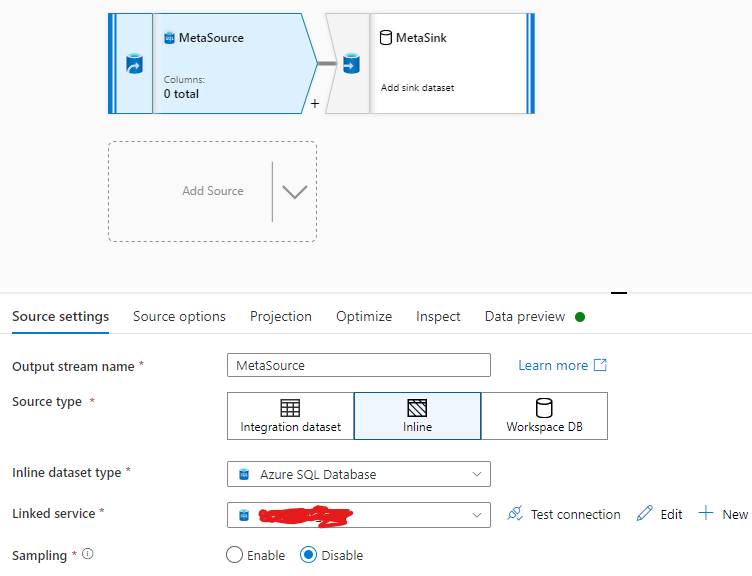

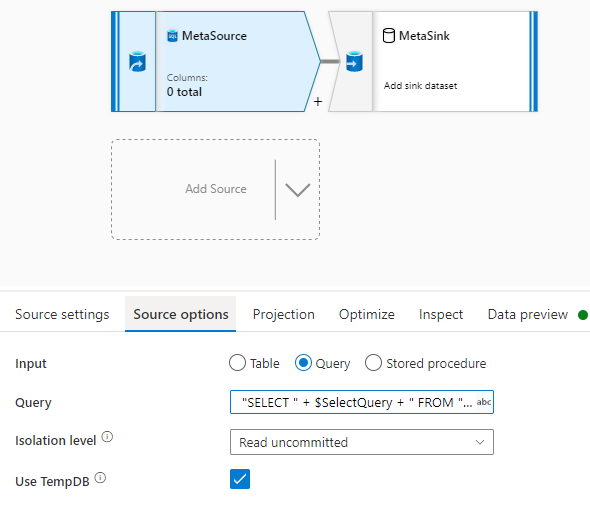

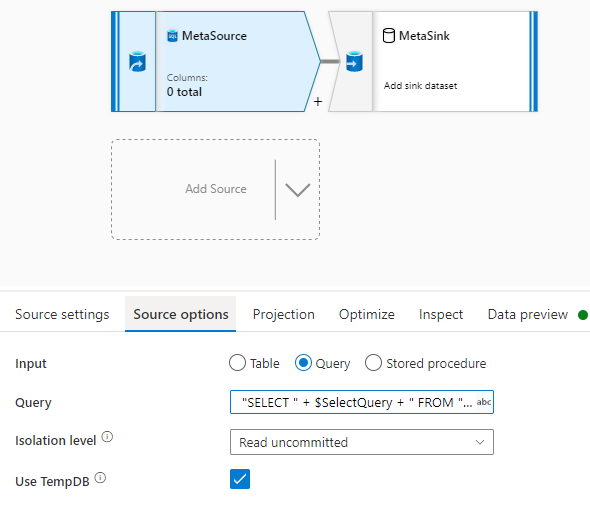

Step 4: Passed the table name and columns to the data flow source via an inline query.
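Step 4 turns the two parameters into the source query. In the data flow itself this is an expression over the parameters; the Python sketch below only shows the string such an expression should produce. It assumes a `dbo` schema and a comma-separated column list, and it assumes the source table is also called `phaseone`; all three are hypothetical. Bracket-quoting each identifier (doubling any `]`, as T-SQL `QUOTENAME` does) is a defensive choice, not something the original setup is known to do.

```python
def build_inline_query(table_name: str, columns: str, schema: str = "dbo") -> str:
    """Build the SELECT statement the data flow source would run for one table.

    `columns` is a comma-separated list such as "Id,Name"; the default
    `dbo` schema is an assumption.
    """
    names = [c.strip() for c in columns.split(",") if c.strip()]
    if not names:
        # An empty list would yield "SELECT  FROM ...", which is invalid SQL.
        raise ValueError("column list is empty")

    def quote(identifier: str) -> str:
        # T-SQL bracket quoting: a literal ']' inside the name is doubled.
        return "[" + identifier.replace("]", "]]") + "]"

    select_list = ", ".join(quote(n) for n in names)
    return f"SELECT {select_list} FROM {quote(schema)}.{quote(table_name)}"


print(build_inline_query("phaseone", "Id,Name"))
```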

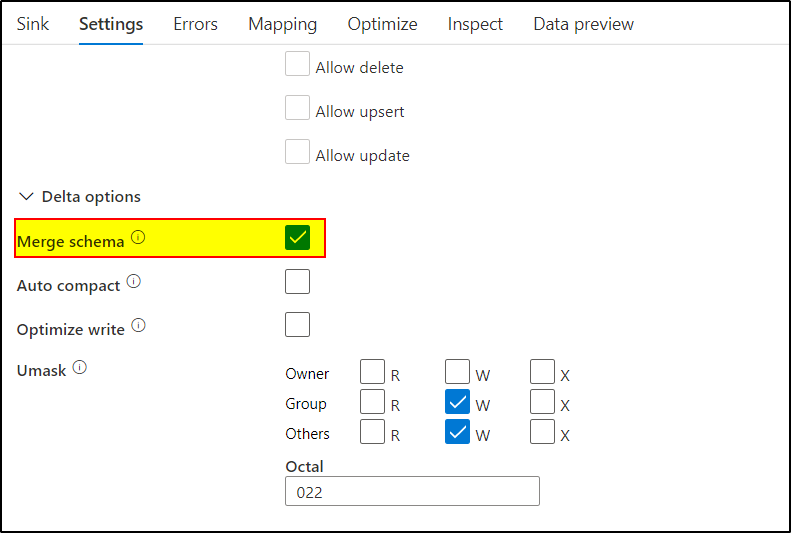

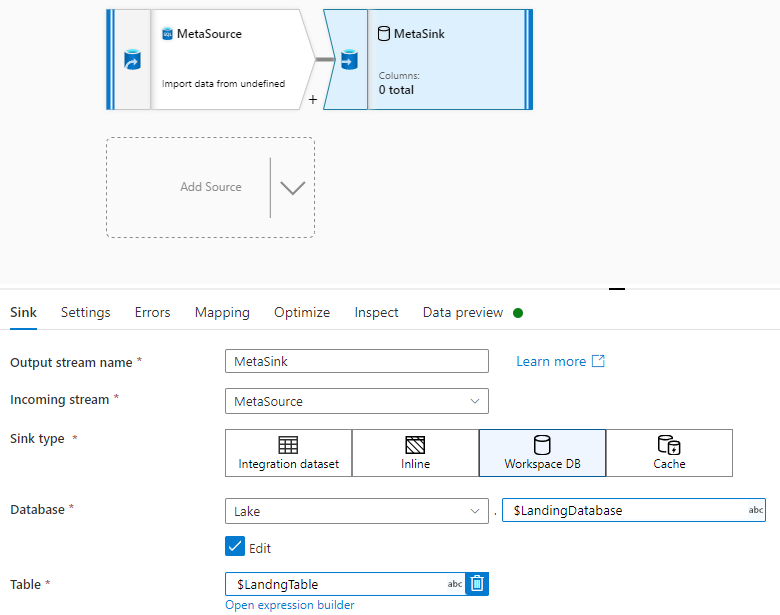

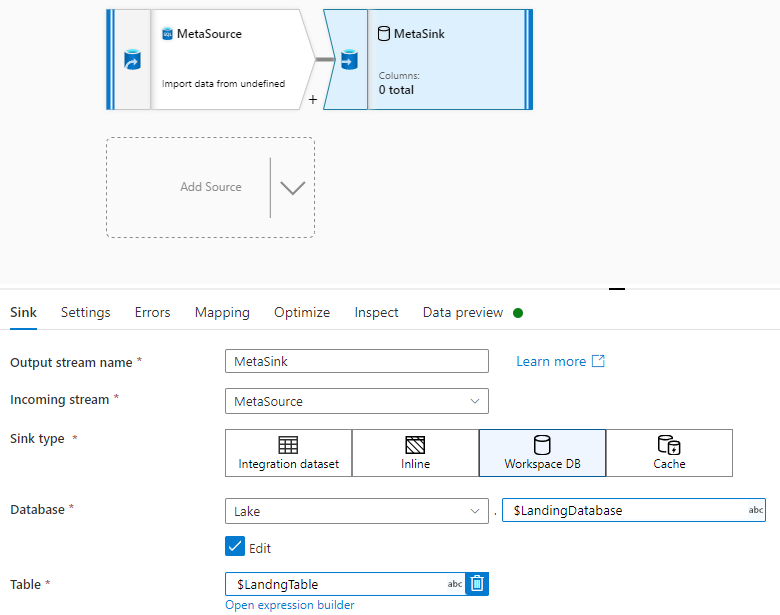

Step 5: Used the Delta table from WorkspaceDb (Lake database) in the sink.

The Azure SQL source table and the Delta Lake table have the same two columns:

Source table: `(Id int, Name varchar(20))`

Destination table: `(Id INT, Name STRING)`
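The two column lists correspond as expected: SQL Server `int` becomes `INT` and `varchar(20)` becomes `STRING` on the Delta side. The sketch below makes that mapping explicit and emits a `CREATE TABLE IF NOT EXISTS ... USING DELTA` statement that would record both columns for the table. The mapping dictionary is partial and illustrative, the helper names are hypothetical, and whether the Lake database designer defines the table in this way is an assumption; the point is only that a correctly registered table has two columns, not the zero in `struct<>`.

```python
import re
from typing import List, Tuple

# Partial, illustrative mapping (assumption) from SQL Server base types
# to Spark SQL types.
SQL_TO_SPARK = {
    "int": "INT",
    "bigint": "BIGINT",
    "bit": "BOOLEAN",
    "varchar": "STRING",
    "nvarchar": "STRING",
    "datetime2": "TIMESTAMP",
}


def to_spark_type(sql_type: str) -> str:
    """Drop any length or precision, e.g. varchar(20) -> varchar, then map it."""
    base = re.sub(r"\(.*\)", "", sql_type).strip().lower()
    try:
        return SQL_TO_SPARK[base]
    except KeyError:
        raise ValueError(f"no mapping for SQL Server type {sql_type!r}") from None


def delta_ddl(table: str, columns: List[Tuple[str, str]]) -> str:
    """Build a CREATE TABLE statement for a Delta table with the mapped columns."""
    cols = ", ".join(f"{name} {to_spark_type(t)}" for name, t in columns)
    return f"CREATE TABLE IF NOT EXISTS {table} ({cols}) USING DELTA"


source_columns = [("Id", "int"), ("Name", "varchar(20)")]
print(delta_ddl("default.phaseone", source_columns))
```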