Overview of Azure Monitor pipeline

The Azure Monitor pipeline is part of an ETL-like data collection process that improves on legacy data collection methods for Azure Monitor. This process uses a common data ingestion pipeline for all data sources and a standard method of configuration that's more manageable and scalable than other methods. Specific advantages of data collection using the pipeline include the following:

- Common set of destinations for different data sources.

- Ability to apply a transformation to filter or modify incoming data before it's stored.

- Consistent method for configuration of different data sources.

- Scalable configuration options supporting infrastructure as code and DevOps processes.

- Option of an edge pipeline in your own environment to provide high-end scalability and to support layered network configurations and periodic connectivity.

Note

When implementation is complete, all data collected by Azure Monitor will use the pipeline. Currently, only certain data collection methods are supported, and they may have limited configuration options. There's no difference between data collected with the Azure Monitor pipeline and data collected using other methods. The data is all stored together as Logs and Metrics, supporting Azure Monitor features such as log queries, alerts, and workbooks. The only difference is in the method of collection.

Components of pipeline data collection

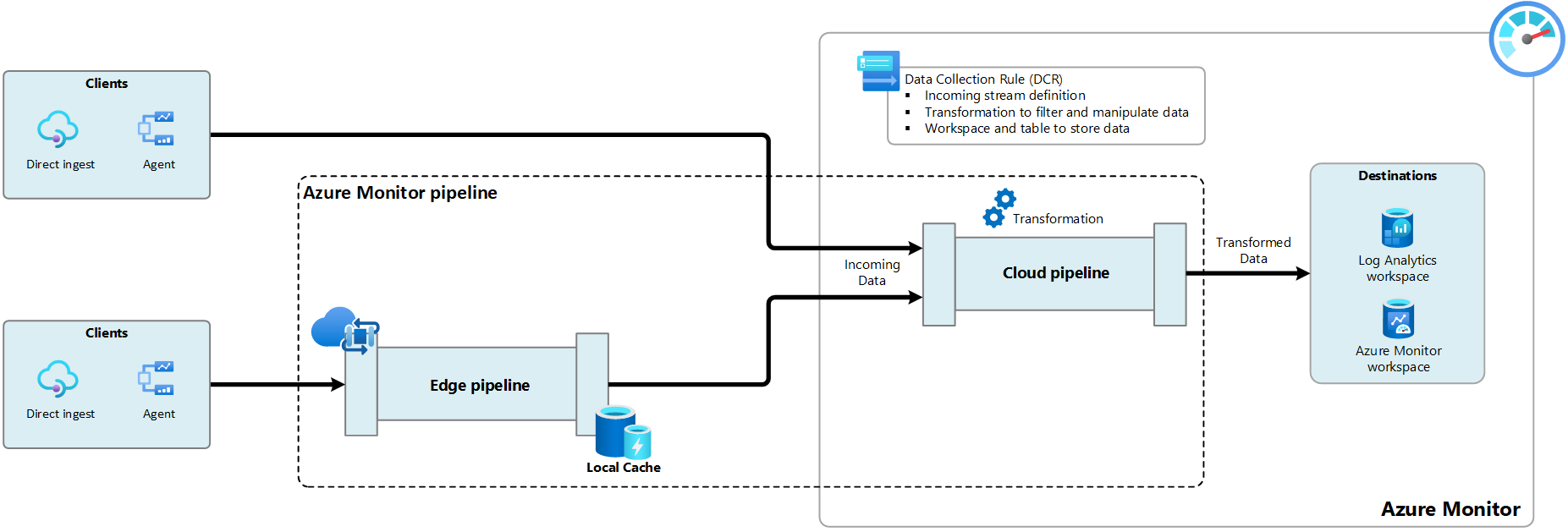

The diagram above shows data collection using the Azure Monitor pipeline. All data is processed through the cloud pipeline, which is automatically available in your subscription and needs no configuration. Each collection scenario is configured in a data collection rule (DCR), which is a set of instructions describing details such as the schema of the incoming data, an optional transformation to modify the data, and the destination where the data should be sent.

In some environments, you may choose to implement a local edge pipeline to manage data collection before it's sent to the cloud. See edge pipeline for details on this option.

Data collection rules

Data collection rules (DCRs) are sets of instructions supporting data collection using the Azure Monitor pipeline. Depending on the scenario, DCRs specify such details as what data should be collected, how to transform that data, and where to send it. In some scenarios, you can use the Azure portal to configure data collection, while other scenarios may require you to create and manage your own DCR. See Data collection rules in Azure Monitor for details on how to create and work with DCRs.
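To make the parts of a DCR concrete, the following is a minimal sketch of a rule body expressed as a Python dictionary. It shows the three details described above: the schema of the incoming data (`streamDeclarations`), the destination (`destinations`), and a data flow that connects them with an optional transformation (`transformKql`). The stream name `Custom-MyAppLogs`, the table `MyAppLogs_CL`, the column names, the location, and the workspace resource ID are hypothetical placeholders, and the helper `flow_summary` exists only for this illustration.

```python
import json

# Hypothetical placeholder: replace with the resource ID of your own workspace.
WORKSPACE_RESOURCE_ID = (
    "/subscriptions/<subscription-id>/resourceGroups/<resource-group>"
    "/providers/Microsoft.OperationalInsights/workspaces/<workspace-name>"
)

dcr = {
    "location": "eastus",  # assumed region, for illustration only
    "properties": {
        # Schema of the incoming data (hypothetical custom stream).
        "streamDeclarations": {
            "Custom-MyAppLogs": {
                "columns": [
                    {"name": "Time", "type": "datetime"},
                    {"name": "Level", "type": "string"},
                    {"name": "Message", "type": "string"},
                ]
            }
        },
        # Where the data can be sent.
        "destinations": {
            "logAnalytics": [
                {"workspaceResourceId": WORKSPACE_RESOURCE_ID, "name": "myWorkspace"}
            ]
        },
        # Connects a stream to a destination, optionally transforming it on the way.
        "dataFlows": [
            {
                "streams": ["Custom-MyAppLogs"],
                "destinations": ["myWorkspace"],
                "transformKql": "source | extend TimeGenerated = Time | project-away Time",
                "outputStream": "Custom-MyAppLogs_CL",
            }
        ],
    },
}


def flow_summary(rule):
    """Return one 'stream -> destination (output stream)' line per data flow."""
    lines = []
    for flow in rule["properties"]["dataFlows"]:
        for stream in flow["streams"]:
            for destination in flow["destinations"]:
                lines.append(f"{stream} -> {destination} ({flow['outputStream']})")
    return lines


if __name__ == "__main__":
    print(json.dumps(dcr, indent=2))
    print("\n".join(flow_summary(dcr)))
```

Treat this as an outline of the general shape rather than a complete rule: DCRs for agent-based scenarios also carry a `dataSources` section, and the exact properties required depend on the collection scenario.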

Transformations

Transformations allow you to modify incoming data before it's stored in Azure Monitor. They are KQL queries defined in the DCR that run in the cloud pipeline. See Data collection transformations in Azure Monitor for details on how to create and use transformations.

Specific use cases for transformations in the Azure Monitor pipeline are:

- Reduce costs. Remove unneeded records or columns to save on ingestion costs.

- Remove sensitive data. Filter or obfuscate private data.

- Enrich data. Add a calculated column to simplify log queries.

- Format data. Change the format of incoming data to match the schema of the destination table.
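The use cases above can be sketched as one transformation query each. The KQL strings below are illustrative only: every column name (`SeverityLevel`, `RawPayload`, `UserEmail`, `DurationMs`, `Timestamp`, `Body`) is a hypothetical example, and `source` is the virtual table that represents the incoming data in a transformation. The helper `with_transformation` is also an assumption made for this sketch; it shows where such a query would sit in a DCR data flow.

```python
# One hypothetical transformation query per use case. Column names are examples only.
TRANSFORMATIONS = {
    # Reduce costs: drop unneeded records and an unneeded column.
    "reduce_costs": 'source | where SeverityLevel != "Verbose" | project-away RawPayload',
    # Remove sensitive data: overwrite a private value before it's stored.
    "remove_sensitive_data": 'source | extend UserEmail = "redacted"',
    # Enrich data: add a calculated column to simplify later log queries.
    "enrich_data": "source | extend DurationSeconds = DurationMs / 1000.0",
    # Format data: reshape incoming columns to match the destination table.
    "format_data": (
        "source | project TimeGenerated = todatetime(Timestamp), "
        "Message = tostring(Body)"
    ),
}


def with_transformation(data_flow, kql):
    """Return a copy of a DCR data flow with its transformKql set to the given query."""
    updated = dict(data_flow)
    updated["transformKql"] = kql
    return updated


if __name__ == "__main__":
    flow = {"streams": ["Custom-MyAppLogs"], "destinations": ["myWorkspace"]}
    print(with_transformation(flow, TRANSFORMATIONS["reduce_costs"]))
```

Transformations support only a subset of KQL, so check any query against the supported operators and functions before relying on it.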

Edge pipeline

The edge pipeline extends the Azure Monitor pipeline to your own data center. It enables at-scale collection and routing of telemetry data before it's delivered to Azure Monitor in the Azure cloud. See Configure an edge pipeline in Azure Monitor for details on how to set up an edge pipeline.

Specific use cases for the Azure Monitor edge pipeline are:

- Scalability. The edge pipeline can handle large volumes of data from monitored resources that may be limited by other collection methods such as Azure Monitor agent.

- Periodic connectivity. Some environments may have unreliable connectivity to the cloud, or may have long unexpected periods without connection. The edge pipeline can cache data locally and sync with the cloud when connectivity is restored.

- Layered network. In some environments, the network is segmented and data cannot be sent directly to the cloud. The edge pipeline can be used to collect data from monitored resources without cloud access and manage the connection to Azure Monitor in the cloud.
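The periodic connectivity case follows a general store-and-forward pattern: records are held locally while the link is down and delivered in order once it returns. The sketch below illustrates that pattern only. It is not the edge pipeline's implementation, and the class `StoreAndForwardBuffer`, the `send` callable, and the simulated `link` are all hypothetical names invented for this example.

```python
from collections import deque


class StoreAndForwardBuffer:
    """Hold records locally and deliver them in order when sending succeeds."""

    def __init__(self, send, max_records=10_000):
        self._send = send  # callable(record) -> True if the record was delivered
        # When the buffer is full, the oldest record is discarded to make room.
        self._pending = deque(maxlen=max_records)

    def submit(self, record):
        """Queue a record, then try to deliver everything that is pending."""
        self._pending.append(record)
        return self.flush()

    def flush(self):
        """Deliver pending records until one fails; return how many were delivered."""
        delivered = 0
        while self._pending:
            if not self._send(self._pending[0]):
                break  # still offline: keep the record for a later attempt
            self._pending.popleft()
            delivered += 1
        return delivered

    def __len__(self):
        return len(self._pending)


# Simulated cloud link for demonstration purposes.
link = {"online": False}
received = []


def send(record):
    """Pretend to send a record; succeeds only while the simulated link is online."""
    if not link["online"]:
        return False
    received.append(record)
    return True
```

With the simulated link offline, `submit` leaves records in the buffer; setting `link["online"] = True` and calling `flush()` delivers them in their original order. A production design would also persist the buffer to disk so that cached data survives a restart.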

Data collection scenarios

The following table describes the data collection scenarios that are currently supported using the Azure Monitor pipeline. See the links in each entry for details.

| Scenario | Description |
|---|---|
| Virtual machines | Install the Azure Monitor agent on a VM and associate it with one or more DCRs that define the events and performance data to collect from the client operating system. You can perform this configuration using the Azure portal so you don't have to directly edit the DCR. See Collect events and performance counters from virtual machines with Azure Monitor Agent. |
| | When you enable VM insights on a virtual machine, it deploys the Azure Monitor agent to collect telemetry from the VM client. The DCR is created for you automatically to collect a predefined set of performance data. See Enable VM Insights overview. |
| Container insights | When you enable Container insights on your Kubernetes cluster, it deploys a containerized version of the Azure Monitor agent to send logs from the cluster to a Log Analytics workspace. The DCR is created for you automatically, but you may need to modify it to customize your collection settings. See Configure data collection in Container insights using data collection rule. |
| Logs ingestion API | The Logs ingestion API allows you to send data to a Log Analytics workspace from any REST client. The API call specifies the DCR that accepts its data and the DCR's endpoint. The DCR understands the structure of the incoming data, includes a transformation that ensures that the data is in the format of the target table, and specifies the workspace and table to send the transformed data to. See Logs Ingestion API in Azure Monitor. |
| Azure Event Hubs | Send data to a Log Analytics workspace from Azure Event Hubs. The DCR defines the incoming stream and the transformation that formats the data for its destination workspace and table. See Tutorial: Ingest events from Azure Event Hubs into Azure Monitor Logs (Public Preview). |
| Workspace transformation DCR | The workspace transformation DCR is a special DCR that's associated with a Log Analytics workspace and allows you to perform transformations on data being collected using other methods. You create a single DCR for the workspace and add a transformation to one or more tables. The transformation is applied to any data sent to those tables through a method that doesn't use a DCR. See Workspace transformation DCR in Azure Monitor. |
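
For the Logs ingestion API scenario, the sketch below shows how a REST client might assemble a call that names both the DCR and its endpoint. It builds the request with the Python standard library but doesn't send it. The endpoint host, the DCR immutable ID, the stream name, and the token are hypothetical placeholders, the function `build_logs_ingestion_request` is invented for this example, and the `api-version` value is an assumption that you should verify against the current API reference.

```python
import json
import urllib.request

API_VERSION = "2023-01-01"  # assumed version; confirm against the API reference


def build_logs_ingestion_request(endpoint, dcr_immutable_id, stream_name,
                                 records, bearer_token):
    """Build (but don't send) a POST request that uploads records through a DCR."""
    url = (
        f"{endpoint.rstrip('/')}/dataCollectionRules/{dcr_immutable_id}"
        f"/streams/{stream_name}?api-version={API_VERSION}"
    )
    # The body is a JSON array whose objects match the stream declared in the DCR.
    body = json.dumps(records).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {bearer_token}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    request = build_logs_ingestion_request(
        endpoint="https://<endpoint-host>",        # hypothetical ingestion endpoint
        dcr_immutable_id="dcr-<immutable-id>",     # hypothetical DCR immutable ID
        stream_name="Custom-MyAppLogs",            # hypothetical stream in the DCR
        records=[{"Time": "2024-01-01T00:00:00Z", "Level": "Info", "Message": "started"}],
        bearer_token="<access-token>",             # obtain from Microsoft Entra ID
    )
    print(request.get_method(), request.full_url)
    # To send the request: urllib.request.urlopen(request)
```

In practice, a client library that handles authentication, batching, and retries is usually a better choice than hand-built requests; the raw request is shown here only to make the roles of the endpoint, DCR, and stream visible.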
