tiled_extent::truncate in C++ AMP

This is the third and last post in the series about the tiled extent divisibility requirement. The first post presented the requirement, the second post showed how to deal with the requirement using tiled_extent::pad, and this post will show how to deal with the requirement using tiled_extent::truncate.

First I’ll describe the function, then we’ll attempt to use it with one approach, and then I’ll show you a second better approach. Then we’ll conclude with a comparison of all of the approaches I presented.

Using the tiled_extent::truncate function

Truncating a tiled_extent follows the same pattern as padding: call .truncate() on a potentially unevenly-divisible tiled extent and that will yield a tiled_extent instance which is truncated, or rounded downwards, to a tile-size multiple, in each dimension. This is demonstrated by the following code and its output:

extent<2> e(999,666);

tiled_extent<16,16> tiled_e = e.tile<16,16>();

tiled_extent<16,16> truncated_e = tiled_e.truncate();

std::cout << "tile_e extents are: (" << tiled_e[0] << "," << tiled_e[1] << ")" << std::endl;

std::cout << "truncated_e extents are: (" << truncated_e[0] << "," << truncated_e[1] << ")" << std::endl;

Output:

tile_e extents are: (999,666)

truncated_e extents are: (992,656)

Example – matrix transpose – using truncate

As we have discussed before, in the matrix transpose sample we are computing a transpose matrix of an input matrix. In the sample, a matrix which is evenly divisible by the tiles size is transposed with this function:

template <typename value_type>

void transpose_tiled_even(

const array_view<const value_type,2>& A,

const array_view<value_type,2>& Atranspose)

{

assert(A.extent == transpose(Atranspose.extent));

assert(A.extent % tile_size == extent<2>(0,0));

Atranspose.discard_data();

parallel_for_each(

A.extent.tile<tile_size,tile_size>(),

[=] (tiled_index<tile_size,tile_size> tidx) restrict(amp) {

tile_static float t1[tile_size][tile_size];

t1[tidx.local[1]][tidx.local[0]] = A[tidx.global];

tidx.barrier.wait();

index<2> idxdst(transpose(tidx.tile_origin) + tidx.local);

Atranspose[idxdst] = t1[tidx.local[0]][tidx.local[1]];

}

);

}

In order to come up with a truncation strategy we have to decide which remaining threads will carry out what leftover work. Let’s look at this from a quantitative perspective. Suppose we wanted to transpose a 999 by 666 matrix. We have a 999 times 666 cells to transform. That’s a total of 665,334 cells. Then we have a 992 times 656 threads thrown at the problem---a total of 650,752 threads. So we’re only about 15,000 threads short. Two things follow:

- The amount of leftover work is less than 2.5% of the total amount of work. This means it doesn’t have to be done in the most efficient manner. It’s just important it doesn’t get in the way of the rest of the processing.

- Testing whether a thread will need to do leftover work will be done by 100% of the threads, so we better ensure that the testing is done in an efficient manner.

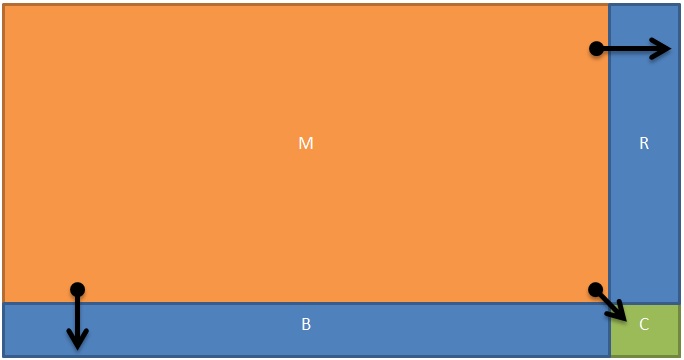

Following these guidelines, the image below depicts the chosen strategy:

- The entire rectangular shape (M+B+R+C) represents the matrix to be transposed.

- The area denoted by M is the Main area corresponding to the truncated extent. Each cell in the area M will be transposed by the corresponding thread in the same area.

- The area to the Right denoted by R will be covered by threads lying on the rightmost column of M. The right-pointing arrow represents the operation of one such thread. It will cover an entire row-section out of R.

- The area to the Bottom denoted by B will be covered by threads lying on the bottommost row of M. The down-pointing arrow represents the operation of one such thread. It will cover an entire column-section out of B.

- Finally, the small Corner area C will be handled by the right-most-bottom-most thread in M.

In order to check whether a particular thread has any extra work to do, we perform the following test, where e_truncated is the compute extent:

bool is_bottom_thread = tidx.global[0] == e_truncated[0]-1;

bool is_right_thread = tidx.global[1] == e_truncated[1]-1;

if (is_right_thread | is_bottom_thread)

// do leftover work here...

That’s a fairly simple test to perform, so hopefully it wouldn’t encumber normal thread operation in the 97.7% case it evaluates to false. Note that we are using the bit-wise OR operator rather than the logical OR operator because it is more efficient on the GPU especially in the case where both sub-expressions are going to be false, which as we saw is the case almost all of the time (and hence C++’s short-circuiting evaluation rules don’t help at all).

Now we are ready to see the code inside the if condition:

// Right leftover band ‘R’

if (is_right_thread)

{

idx0 = tidx.global[0];

for (idx1=e_truncated[1]; idx1<A.extent[1]; idx1++)

{

Atranspose(idx1, idx0) = A(idx0, idx1);

}

}

// Bottom leftover band ‘B’

if (is_bottom_thread)

{

idx1 = tidx.global[1];

for (idx0=e_truncated[0]; idx0<A.extent[0]; idx0++)

{

Atranspose(idx1, idx0) = A(idx0, idx1);

}

}

// Bottom right leftover corner ‘C’

if (is_bottom_thread && is_right_thread)

{

for (idx0 = e_truncated[0]; idx0<A.extent[0]; idx0++)

{

for (idx1 = e_truncated[1]; idx1<A.extent[1]; idx1++)

{

Atranspose(idx1, idx0) = A(idx0, idx1);

}

}

}

This is a lot more code to add to the kernel, compared with what we were able to achieve with padding, and not at all straight forward! You may ask whether this approach performs better than the padding approach---is the pain worth the gain?---my measurements show that this is not the case.

At least in the transpose case, the option of truncation doesn’t seem like the way to go. This one is definitely not a keeper! But now you have another tool under your belt, which may turn out useful in other cases.

Let’s examine a different approach.

Example – matrix transpose – using divide-and-conquer

The second attempt assumes that we have already created

- A simple algorithm which can handle all matrix sizes, and,

- A tiled algorithm, which can handle matrix sizes that are evenly-divisible by the tile size.

These two algorithms correspond to functions transpose_simple and transpose_tiled_even, in the matrix transpose sample code.

Because we have been careful to use array_views in these algorithmic building-blocks, we can now reuse these algorithms for sections of the data, by employing the array_view::section function to obtain the sections of the matrix corresponding to the different regions of the diagram above, and feed them into the algorithms which we are using as building blocks. We will make use of the truncate function to calculate the sizes and offsets of the sections.

Now we are ready to present the transpose_tiled_truncate_option_b function.

template <typename value_type>

void transpose_tiled_truncate_option_b(

const array_view<const value_type,2>& A,

const array_view<value_type,2>& Atranspose)

{

tiled_extent<16,16> e_tiled(A.extent.tile<16,16>());

tiled_extent<16,16> e_truncated(e_tiled.truncate());

// Transpose area ‘M’ – main area

{

auto B = A.section(index<2>(0,0), e_truncated);

auto Bt = Atranspose.section(

index<2>(0,0),

transpose(static_cast<extent<2>>(e_truncated)));

transpose_tiled_even(B, Bt);

}

// Transpose area ‘B’ – bottom leftover band

if (e_truncated[0] < e_tiled[0])

{

index<2> offset(e_truncated[0],0);

extent<2> e(A.extent[0]-e_truncated[0], e_truncated[1]);

auto B = A.section(offset, e);

auto Bt = Atranspose.section(transpose(offset), transpose(e));

transpose_simple(B, Bt);

}

// Transpose areas ‘R’ and ‘BR’– right leftover band

if (e_truncated[1] < e_tiled[1])

{

index<2> offset(0, e_truncated[1]);

auto B = A.section(offset);

auto Bt = Atranspose.section(transpose(offset));

transpose_simple(B, Bt);

}

}

The algorithm ends up invoking between one and three kernels, depending if there are leftover bands to the bottom and/or to the right, in addition to the main area.

Conclusion and recommendations

Overall we have presented four approaches to dealing with C++ AMP’s tiled_extent divisibility requirement in the context of transposing matrices of arbitrary sizes

- Using the simple model (i.e. not explicitly tiling-the parallel_for_each) – see the matrix transpose sample blog post.

- Using the tiled model and a padding algorithm.

- Using the tiled model and truncating with tiled_extent::truncate (in this post).

- Using a combination of #1 and (a simplified) #3 in a divide-and-conquer algorithm (in this post).

Stacking those four options against each other, it could be argued that the padding approach is the simplest to understand and implement and contains the fewest gotchas. Based on my experimentation, at least in this experiment, not only is the padding approach easier to understand and maintain, it also offers the most performance-portability.

Of course, findings for other workloads could be different, but as a justification for the almost-universal applicability of the padding approach consider the following argument. Tiling already involves the process of eliminating redundant reads and writes outside of your kernel. Thus the global memory accesses which remain are the ones that you must perform, and they are likely to be expensive because they are not redundant (and hence not cached by the hardware). If they are expensive, say in the order of a thousand cycles, then it doesn’t do much damage to preface them with a range check, which is what the padding approach does.

That concludes our series on the transpose workload in consideration of the divisibility requirement. As always if you have any feedback or questions please feel free to provide them at the C++ AMP forum or right here in the comments section.