Library management in Spark

Important

This feature is currently in preview. The Supplemental Terms of Use for Microsoft Azure Previews include more legal terms that apply to Azure features that are in beta, in preview, or otherwise not yet released into general availability. For information about this specific preview, see Azure HDInsight on AKS preview information. For questions or feature suggestions, please submit a request on AskHDInsight with the details and follow us for more updates on Azure HDInsight Community.

Library management makes open-source or custom code available to notebooks and jobs running on your clusters. You can install Python libraries from PyPI repositories. This article focuses on managing libraries in the cluster UI. Azure HDInsight on AKS already includes many common libraries in the cluster. To see which libraries are included in an HDInsight on AKS cluster, review the library management page.

Install libraries

You can install libraries in two modes:

- Cluster-installed

- Notebook-scoped

Cluster-installed

All notebooks running on a cluster can use cluster libraries. You can install a cluster library directly from a public repository such as PyPI. Installing libraries from Maven repositories and uploading custom libraries from cloud storage are on the roadmap.

Notebook-scoped

Notebook-scoped libraries, available for Python and Scala, let you install libraries and create an environment scoped to a notebook session. These libraries don't affect other notebooks running on the same cluster. Notebook-scoped libraries don't persist and must be reinstalled for each session.

Note

Use notebook-scoped libraries when you need a custom environment for a specific notebook.
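In a Jupyter notebook, a notebook-scoped install is usually done with the IPython `%pip install` magic, which installs into the environment of the running kernel. The sketch below shows the same idea in plain Python. It assumes that the notebook kernel is the Python interpreter at `sys.executable` and that the cluster can reach PyPI. The helper names `pip_install_command` and `install_for_session` are hypothetical and are not part of HDInsight on AKS. The article doesn't say how a session environment reaches Spark executors, so treat this as affecting the kernel's interpreter only.

```python
import subprocess
import sys
from typing import List, Optional


# Hypothetical helpers: assumes the notebook kernel runs the interpreter at sys.executable.
def pip_install_command(package: str, version: Optional[str] = None) -> List[str]:
    """Build a pip command that targets the interpreter running this kernel."""
    # Pin an exact version when one is given, for example "requests==2.31.0".
    spec = f"{package}=={version}" if version else package
    # Using sys.executable installs into this kernel's environment rather than
    # into whichever "pip" happens to be first on PATH.
    return [sys.executable, "-m", "pip", "install", spec]


def install_for_session(package: str, version: Optional[str] = None) -> None:
    """Run the install. Raises subprocess.CalledProcessError if pip fails."""
    subprocess.check_call(pip_install_command(package, version))
```

For example, `install_for_session("requests", "2.31.0")` runs pip for `requests==2.31.0` with the kernel's interpreter. The package and version are illustrative only. Because notebook-scoped libraries don't persist, you must repeat the call in each new session.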

Modes of Library Installation

PyPI: Fetch libraries from the open-source PyPI repository by specifying the library name and version in the installation UI.

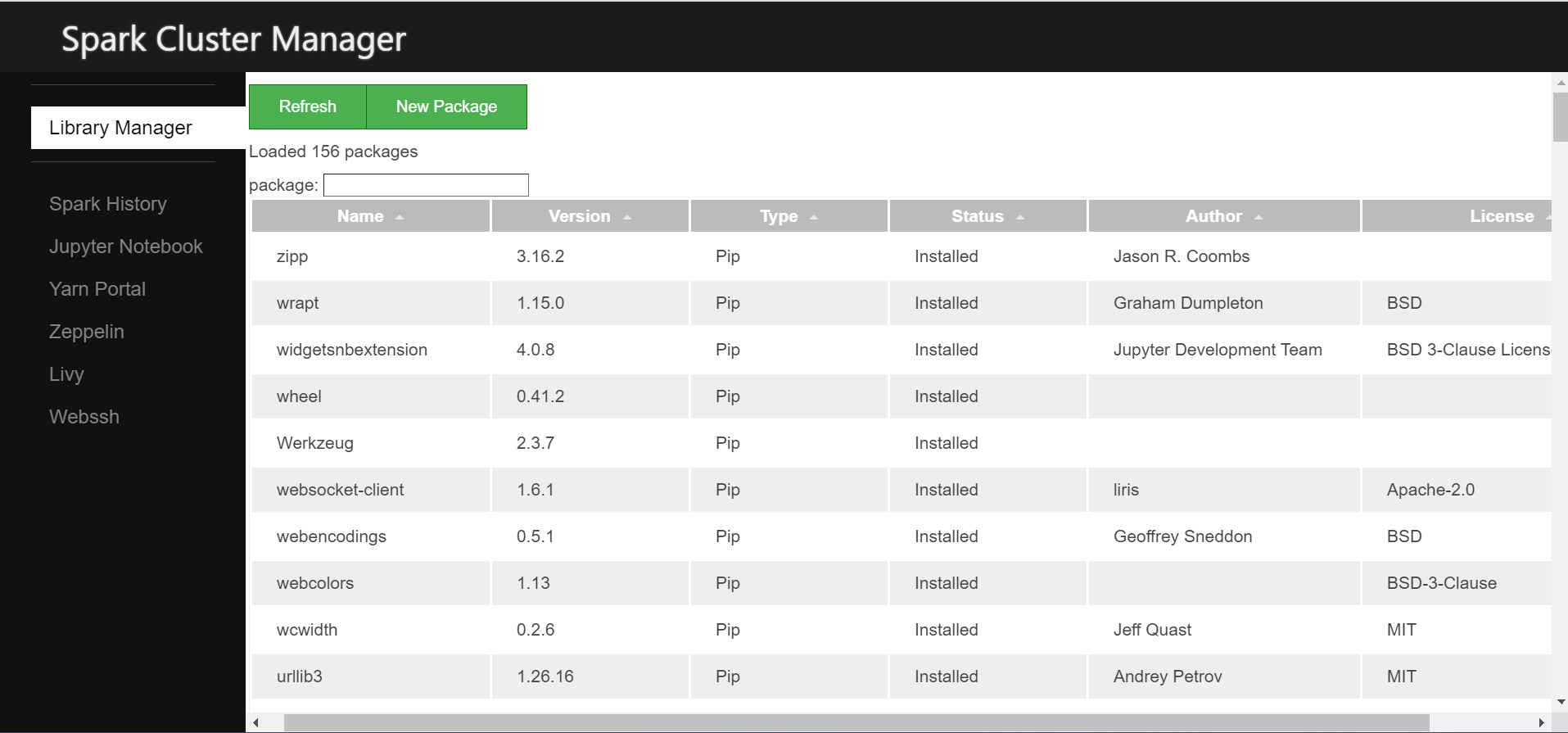

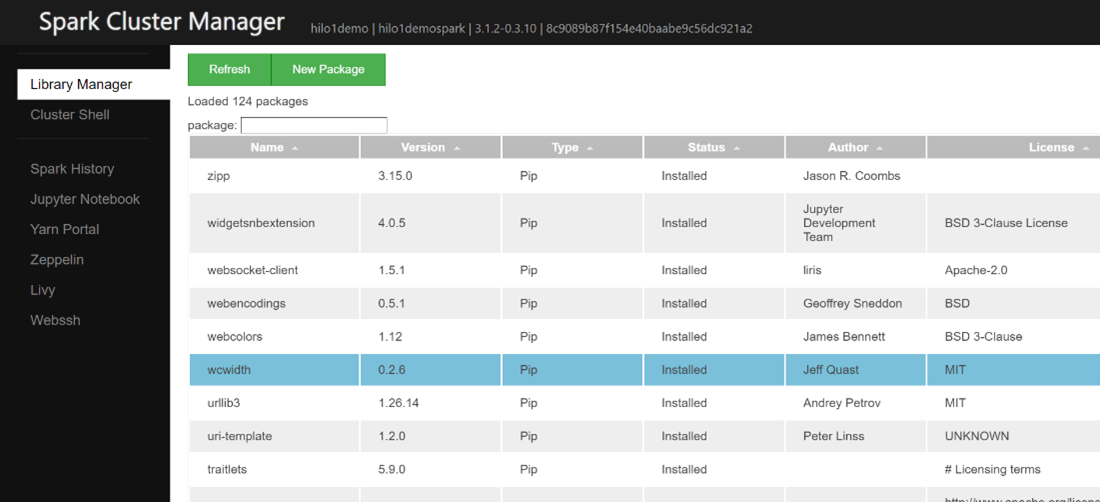

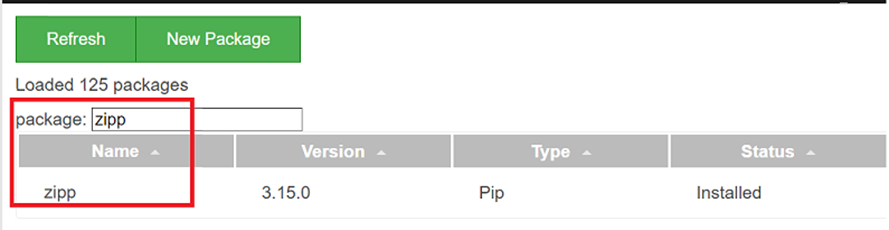
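The PyPI mode takes a package name and a version. Suppose the library manager pins exactly the version you enter. The article doesn't say how it builds the request, so this is an assumption. Under it, the pair corresponds to the pip requirement specifier `name==version`. The minimal sketch below builds that string. It also normalizes the name as described in PEP 503, under which project names are case-insensitive and runs of `-`, `_`, and `.` are equivalent. `normalize_name` and `to_requirement` are hypothetical helpers for illustration.

```python
import re


def normalize_name(name: str) -> str:
    """Normalize a PyPI project name as described in PEP 503."""
    # Runs of "-", "_" and "." are equivalent, and names compare case-insensitively.
    return re.sub(r"[-_.]+", "-", name).lower()


def to_requirement(name: str, version: str) -> str:
    """Hypothetical helper: combine a package name and an exact version into a pip specifier."""
    name, version = name.strip(), version.strip()
    if not name or not version:
        raise ValueError("both a package name and a version are required")
    return f"{normalize_name(name)}=={version}"
```

For example, `to_requirement("Scikit_Learn", "1.3.2")` returns `"scikit-learn==1.3.2"`.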

View the installed libraries

From the Overview page, navigate to Library Manager.

From Spark Cluster Manager, click Library Manager.

The page lists the installed libraries.

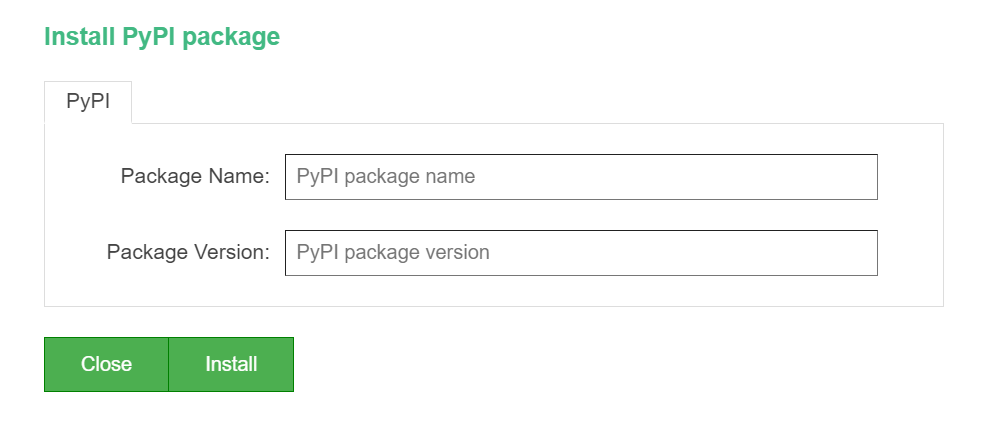
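The Library Manager page lists the cluster-installed libraries. You may also want to check, from inside a notebook, which distributions the current Python session can see, including anything installed in that session. One option is the standard-library `importlib.metadata` module (Python 3.8 and later). This is a sketch that assumes it runs on the notebook kernel. It reports only that interpreter's environment, so its output may not match the Library Manager list. `installed_packages` is a hypothetical helper.

```python
from importlib.metadata import distributions
from typing import Dict


def installed_packages() -> Dict[str, str]:
    """Hypothetical helper: map each distribution visible to this interpreter to its version."""
    packages: Dict[str, str] = {}
    for dist in distributions():
        name = dist.metadata.get("Name")
        version = dist.version
        # Skip entries whose name or version is missing from the metadata.
        if not name or not version:
            continue
        # Keep the first match found while scanning sys.path.
        packages.setdefault(name, version)
    # Sort case-insensitively so the listing is easy to scan.
    return dict(sorted(packages.items(), key=lambda item: item[0].lower()))
```

For example, `installed_packages().get("pandas")` returns the version string if pandas is visible to the session, and `None` otherwise.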

Add library widget

PyPI

From the PyPI tab, enter the Package Name and Package Version.

Click Install.

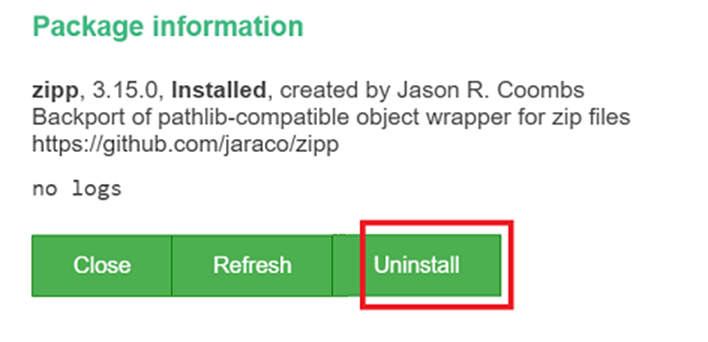

Uninstalling Libraries

If you no longer need a library, you can delete its package with the Uninstall button on the library management page.

Select the library name.

Click Uninstall in the widget.

Note

- Packages installed from a Jupyter notebook can only be uninstalled from Jupyter Notebook.

- Packages installed from Library Manager can only be uninstalled from Library Manager.

- To upgrade a library, uninstall the current version and reinstall the required version.

- Libraries installed from a Jupyter notebook are specific to that session and don't persist.

- Installing large packages can take some time because of their size and complexity.
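An upgrade means uninstalling the current version and reinstalling the required one. It can therefore help to confirm which version a session actually sees before and after the change. This minimal sketch uses `importlib.metadata.version` from the standard library. `installed_version` and `needs_reinstall` are hypothetical helpers. The sketch compares versions as exact strings, so `2.0` and `2.0.0` count as different. If you need PEP 440 comparisons, the third-party `packaging.version.Version` class handles them.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Hypothetical helper: return the version this interpreter sees, or None if absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def needs_reinstall(package: str, required: str) -> bool:
    """Hypothetical helper: True unless the session already sees exactly the required version."""
    # Plain string comparison: "2.0" and "2.0.0" are treated as different versions.
    return installed_version(package) != required
```

For example, `needs_reinstall("pandas", "2.1.4")` returns `False` only if the session already sees pandas 2.1.4 exactly.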
