Use multiple LUIS and QnA models with Orchestrator

APPLIES TO: SDK v4

Note

Azure AI QnA Maker will be retired on 31 March 2025. Beginning 1 October 2022, you won't be able to create new QnA Maker resources or knowledge bases. A newer version of the question and answering capability is now available as part of Azure AI Language.

Custom question answering, a feature of Azure AI Language, is the updated version of the QnA Maker service. For more information about question-and-answer support in the Bot Framework SDK, see Natural language understanding.

Note

Language Understanding (LUIS) will be retired on 1 October 2025. Beginning 1 April 2023, you won't be able to create new LUIS resources. A newer version of language understanding is now available as part of Azure AI Language.

Conversational language understanding (CLU), a feature of Azure AI Language, is the updated version of LUIS. For more information about language understanding support in the Bot Framework SDK, see Natural language understanding.

If a bot uses multiple Language Understanding (LUIS) models and QnA Maker knowledge bases, you can use Bot Framework Orchestrator to determine which LUIS model or QnA Maker knowledge base best matches the user input. You can use the bf orchestrator CLI command to create an Orchestrator snapshot file, then use the snapshot file to route user input to the correct model at run time.

This article describes how to use an existing QnA Maker knowledge base with Orchestrator.

- For new bots, consider using the question answering and orchestration workflow features of Azure AI Language.

- For more information about Orchestrator, see Intent recognition with Orchestrator in Composer.

- For more information about the bf orchestrator command, see the Bot Framework CLI README.

Prerequisites

- A luis.ai account to author LUIS apps.

- A QnA Maker account and an existing QnA Maker knowledge base.

- A copy of the NLP with Orchestrator sample in C# (archived) or JavaScript (archived).

- Knowledge of bot basics, LUIS, and QnA Maker.

- Install the command-line BF CLI.

About this sample

This sample is based on a predefined set of LUIS and QnA Maker projects. However, to use QnA Maker in your bot, you need an existing knowledge base in the QnA Maker portal. Your bot can then use the knowledge base to answer the user's questions.

For new bot development, consider using Power Virtual Agents. If you need to create a new knowledge base for a Bot Framework SDK bot, see the following Azure AI services articles:

- What is question answering?

- Create an FAQ bot

- Azure Cognitive Language Services Question Answering client library for .NET

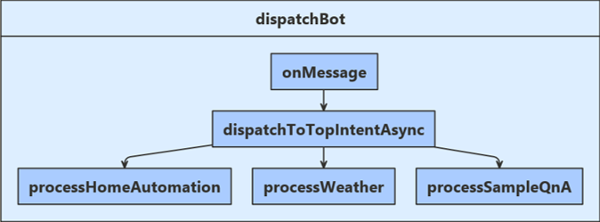

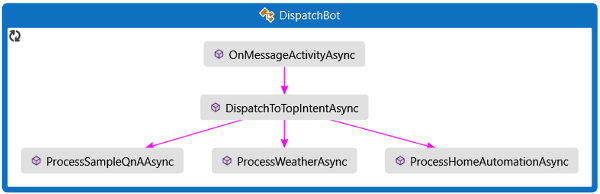

OnMessageActivityAsync is called for each user input received. This method finds the top-scoring user intent and passes that result to DispatchToTopIntentAsync, which in turn calls the appropriate app handler:

- ProcessSampleQnAAsync - for bot FAQ questions.
- ProcessWeatherAsync - for weather queries.
- ProcessHomeAutomationAsync - for home lighting commands.

The handler calls the LUIS or QnA Maker service and returns the generated result back to the user.
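The routing described above amounts to a lookup from intent name to handler. The following Python sketch is a framework-free illustration of that idea, not the sample's code. The handler functions are hypothetical stand-ins for ProcessSampleQnAAsync, ProcessWeatherAsync, and ProcessHomeAutomationAsync, and they return strings instead of calling LUIS or QnA Maker. The fallback reply for an unknown intent is also an assumption.

```python
from typing import Callable, Dict

# Hypothetical stand-ins for the sample's handlers. A real bot would call
# the QnA Maker knowledge base or the matching LUIS app at this point.
def process_sample_qna(text: str) -> str:
    return f"QnA Maker handles: {text}"

def process_weather(text: str) -> str:
    return f"Weather LUIS app handles: {text}"

def process_home_automation(text: str) -> str:
    return f"HomeAutomation LUIS app handles: {text}"

# Keys match the intent labels produced from the CognitiveModels folder.
HANDLERS: Dict[str, Callable[[str], str]] = {
    "QnAMaker": process_sample_qna,
    "Weather": process_weather,
    "HomeAutomation": process_home_automation,
}

FALLBACK_REPLY = "Sorry, I didn't understand that."  # hypothetical text

def dispatch_to_top_intent(top_intent: str, text: str) -> str:
    """Call the handler registered for the top intent, or reply with a fallback."""
    handler = HANDLERS.get(top_intent)
    if handler is None:
        return FALLBACK_REPLY
    return handler(text)

print(dispatch_to_top_intent("Weather", "what's the forecast for london"))
```

With a dictionary, supporting another LUIS app or knowledge base means adding one entry. The dispatch function itself doesn't change.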

Create LUIS apps

Before you can create an Orchestrator snapshot file, you need LUIS apps and QnA knowledge bases created and published. The sample bot referenced in this article uses the following models, included with the NLP with Orchestrator sample in the \CognitiveModels folder:

| Name | Description |
|---|---|
| HomeAutomation | A LUIS app that recognizes a home automation intent with associated entity data. |
| Weather | A LUIS app that recognizes weather-related intents with location data. |
| QnAMaker | A QnA Maker knowledge base that provides answers to simple questions about the bot. |

Create the LUIS apps

Create LUIS apps from the HomeAutomation and Weather .lu files in the sample's CognitiveModels directory.

Run the following command to import, train, and publish the apps to the production environment.

bf luis:build --in CognitiveModels --authoringKey <YOUR-KEY> --botName <YOUR-BOT-NAME>

Record the application IDs, display names, authoring key, and location.

For more information, see Create a LUIS app in the LUIS portal and Obtain values to connect to your LUIS app in Add natural language understanding to your bot. For how to train and publish an app to the production environment, see the LUIS documentation.

Obtain values to connect your bot to the knowledge base


You need an existing knowledge base, its knowledge base ID, and your QnA Maker hostname and endpoint key.

Tip

The QnA Maker documentation has instructions on how to create, train, and publish your knowledge base.

Create the Orchestrator snapshot file

The Orchestrator commands in the BF CLI create the snapshot file that the bot uses at run time to route user input to the correct LUIS app or QnA Maker knowledge base.

1. Install the latest supported version of the Visual C++ Redistributable package.
2. Open a command prompt or terminal window, and change directories to the sample directory.
3. Make sure you have the current version of npm and the Bot Framework CLI.

   npm i -g npm
   npm i -g @microsoft/botframework-cli

4. Download the Orchestrator base model file.

   mkdir model
   bf orchestrator:basemodel:get --out ./model

5. Create the Orchestrator snapshot file.

   mkdir generated
   bf orchestrator:create --hierarchical --in ./CognitiveModels --out ./generated --model ./model
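You might rebuild the snapshot every time you edit the .lu or .qna files. In that case, the mkdir and bf orchestrator commands above can be scripted. The following Python sketch only wraps those same commands and invents no new flags. It assumes you run it from the sample directory with bf on your PATH. The snapshot_commands and build_snapshot names are hypothetical. With dry_run=True, the script prints the commands without running them.

```python
import shutil
import subprocess
from pathlib import Path
from typing import List

def snapshot_commands(lu_dir: str = "./CognitiveModels",
                      model_dir: str = "./model",
                      out_dir: str = "./generated") -> List[List[str]]:
    """Return the bf commands that download the base model and build the snapshot."""
    return [
        ["bf", "orchestrator:basemodel:get", "--out", model_dir],
        ["bf", "orchestrator:create", "--hierarchical",
         "--in", lu_dir, "--out", out_dir, "--model", model_dir],
    ]

def build_snapshot(dry_run: bool = True) -> None:
    for cmd in snapshot_commands():
        print(" ".join(cmd))
        if dry_run:
            continue
        # Equivalent of `mkdir model` / `mkdir generated` for this command's --out folder.
        Path(cmd[cmd.index("--out") + 1]).mkdir(parents=True, exist_ok=True)
        # Resolve bf to a full path so that bf.cmd is also found on Windows.
        exe = shutil.which(cmd[0])
        if exe is None:
            raise FileNotFoundError("bf CLI not found on PATH")
        # check=True stops the script if a bf command fails.
        subprocess.run([exe, *cmd[1:]], check=True)

if __name__ == "__main__":
    build_snapshot(dry_run=True)
```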

Installing packages

Before you run this app for the first time, make sure the following NuGet packages are installed:

- Microsoft.Bot.Builder

- Microsoft.Bot.Builder.AI.Luis

- Microsoft.Bot.Builder.AI.QnA

- Microsoft.Bot.Builder.AI.Orchestrator

Manually update your appsettings.json file

After you create all of your service apps, add the information for each one to your appsettings.json file. The initial code for the C# (archived) sample contains an empty appsettings.json file:

appsettings.json

For each of the settings shown below, add the values you recorded earlier in these instructions:

"QnAKnowledgebaseId": "<knowledge-base-id>",
"QnAEndpointKey": "<qna-maker-resource-key>",
"QnAEndpointHostName": "<your-hostname>",
"LuisHomeAutomationAppId": "<app-id-for-home-automation-app>",
"LuisWeatherAppId": "<app-id-for-weather-app>",
"LuisAPIKey": "<your-luis-endpoint-key>",
"LuisAPIHostName": "<your-dispatch-app-region>",

When all changes are complete, save this file.
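Before you start the bot, you can check that no setting was left empty or still holds a placeholder. The following Python sketch is a hypothetical helper, not part of the sample. It assumes that any value wrapped in angle brackets, such as <knowledge-base-id>, is an unfilled placeholder. It reads the file with utf-8-sig so that a byte-order mark saved by Visual Studio doesn't break parsing. Python's json module also rejects comments and trailing commas, which .NET configuration files may contain, so strip those first if your file has them.

```python
import json
from typing import Dict, List

# The keys this article asks you to fill in.
REQUIRED_KEYS = [
    "QnAKnowledgebaseId",
    "QnAEndpointKey",
    "QnAEndpointHostName",
    "LuisHomeAutomationAppId",
    "LuisWeatherAppId",
    "LuisAPIKey",
    "LuisAPIHostName",
]

def missing_settings(settings: Dict[str, str]) -> List[str]:
    """Return required keys that are absent, empty, or still <placeholders>."""
    missing = []
    for key in REQUIRED_KEYS:
        value = str(settings.get(key, "")).strip()
        if not value or (value.startswith("<") and value.endswith(">")):
            missing.append(key)
    return missing

def load_settings(path: str = "appsettings.json") -> Dict[str, str]:
    # utf-8-sig also accepts files that start with a byte-order mark.
    with open(path, encoding="utf-8-sig") as f:
        return json.load(f)
```

For example, missing_settings(load_settings()) returns a list of keys that still need values. An empty list means every required setting is filled in.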

Connect to the services from your bot

To connect to the LUIS and QnA Maker services, your bot pulls information from the settings file.

In BotServices.cs, the information in the appsettings.json configuration file is used to connect your Orchestrator bot to the HomeAutomation, Weather, and SampleQnA services. The constructors use the values you provided to connect to these services.

BotServices.cs
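BotServices.cs turns the settings into one connection per service. As a rough Python analogue of that idea, the sketch below groups the settings into one configuration object per service, so the rest of the bot never reads raw keys. The LuisApp, QnAKnowledgeBase, and BotServices classes and the build_services function are hypothetical. They only hold connection values and don't call the Bot Framework SDK.

```python
from dataclasses import dataclass
from typing import Dict

@dataclass(frozen=True)
class LuisApp:
    app_id: str
    endpoint_key: str
    host_name: str

@dataclass(frozen=True)
class QnAKnowledgeBase:
    knowledge_base_id: str
    endpoint_key: str
    host_name: str

@dataclass(frozen=True)
class BotServices:
    home_automation: LuisApp
    weather: LuisApp
    sample_qna: QnAKnowledgeBase

def build_services(settings: Dict[str, str]) -> BotServices:
    """Build per-service configs; a missing key raises KeyError at startup."""
    # Both LUIS apps share one endpoint key and host name, as in appsettings.json.
    key, host = settings["LuisAPIKey"], settings["LuisAPIHostName"]
    return BotServices(
        home_automation=LuisApp(settings["LuisHomeAutomationAppId"], key, host),
        weather=LuisApp(settings["LuisWeatherAppId"], key, host),
        sample_qna=QnAKnowledgeBase(
            settings["QnAKnowledgebaseId"],
            settings["QnAEndpointKey"],
            settings["QnAEndpointHostName"],
        ),
    )
```

Building these objects once at startup means a missing setting fails immediately rather than on the first user message.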

Call the services from your bot

For each input from your user, the bot logic passes the user input to the Orchestrator recognizer, finds the top returned intent, and uses that information to call the appropriate service for the input.

In the DispatchBot.cs file, whenever the OnMessageActivityAsync method is called, the bot checks the incoming user message and gets the top intent from the Orchestrator recognizer. It then passes topIntent and recognizerResult to the correct method, which calls the service and returns the result.

bots\DispatchBot.cs
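The top intent is the highest-scoring entry in the recognizer result's intents. The following Python sketch picks it from a plain dictionary shaped like the intents map in the Orchestrator Recognition trace, where each intent maps to a {"score": ...} object. It illustrates the idea and isn't the SDK's own implementation. Returning "None" when there are no intents is an assumption, borrowed from the LUIS None-intent convention. The scores are the ones recorded for the utterance hi in this article's test trace.

```python
from typing import Dict, Tuple

def top_intent(intents: Dict[str, Dict[str, float]]) -> Tuple[str, float]:
    """Return (intent name, score) for the highest-scoring intent."""
    if not intents:
        return ("None", 0.0)  # assumed fallback when nothing was recognized
    name = max(intents, key=lambda k: intents[k]["score"])
    return name, intents[name]["score"]

# Scores from the Orchestrator trace for the utterance "hi".
intents = {
    "QnAMaker": {"score": 0.9987310956576168},
    "HomeAutomation": {"score": 0.3402091165577196},
    "Weather": {"score": 0.24092200496795158},
}
name, score = top_intent(intents)
print(name)  # QnAMaker
```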

Work with the recognition results

When the Orchestrator recognizer produces a result, it indicates which service can most appropriately process the utterance. The bot uses the returned intent to route the request to the corresponding LUIS model or QnA Maker service, and then summarizes the response from that service.

bots\DispatchBot.cs

The ProcessHomeAutomationAsync and ProcessWeatherAsync methods use the user input contained within the turn context to get the top intent and entities from the correct LUIS model.

The ProcessSampleQnAAsync method uses the user input contained within the turn context to generate an answer from the knowledge base and display that result to the user.
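The QnA step comes down to taking the best answer the knowledge base returns, or falling back when nothing comes back. The Python sketch below works on plain dictionaries shaped like the QnA Maker result in this article's test trace, which carries answer and score fields. The best_answer function, its min_score parameter, and the fallback text are hypothetical.

```python
from typing import Dict, List

FALLBACK = "No QnA Maker answers were found."  # hypothetical fallback text

def best_answer(results: List[Dict], min_score: float = 0.0) -> str:
    """Return the highest-scoring answer above min_score, or the fallback text."""
    candidates = [r for r in results if r.get("score", 0) > min_score]
    if not candidates:
        return FALLBACK
    return max(candidates, key=lambda r: r["score"])["answer"]

# A result shaped like the QnA Maker trace for "hi".
results = [{"questions": ["hi", "greetings"], "answer": "Hello!", "score": 1}]
print(best_answer(results))  # Hello!
```

Raising min_score is one way to avoid replying with a weak match. The right threshold depends on your knowledge base.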

Note

If this were a production application, this is where the selected LUIS methods would connect to their specified service, pass in the user input, and process the returned LUIS intent and entity data.

Test your bot

1. Using your development environment, start the sample code. Note the localhost address shown in the address bar of the browser window opened by your app: https://localhost:<Port_Number>.
2. Open Bot Framework Emulator, and select Open Bot.
3. In the Open a bot dialog box, enter your bot endpoint URL, such as http://localhost:3978/api/messages. Select Connect.
4. For your reference, here are some of the questions and commands that are covered by the services built for your bot:

- QnA Maker
  - hi, good morning
  - what are you, what do you do
- LUIS (home automation)
  - turn on bedroom light
  - turn off bedroom light
  - make some coffee
- LUIS (weather)
  - whats the weather in redmond washington
  - what's the forecast for london
  - show me the forecast for nebraska

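You can rerun these checks after you regenerate the snapshot by keeping the sample utterances as a small routing table. This Python sketch is hypothetical. recognize stands for whatever call returns the top intent name for an utterance in your setup, such as a wrapper you write around your bot's recognizer. The sketch only compares those results against the expected intents listed above.

```python
from typing import Callable, Dict, List, Tuple

# Expected top intent for each sample utterance listed above.
EXPECTED: Dict[str, str] = {
    "hi": "QnAMaker",
    "good morning": "QnAMaker",
    "what are you": "QnAMaker",
    "what do you do": "QnAMaker",
    "turn on bedroom light": "HomeAutomation",
    "turn off bedroom light": "HomeAutomation",
    "make some coffee": "HomeAutomation",
    "whats the weather in redmond washington": "Weather",
    "what's the forecast for london": "Weather",
    "show me the forecast for nebraska": "Weather",
}

def routing_failures(recognize: Callable[[str], str]) -> List[Tuple[str, str, str]]:
    """Return (utterance, expected, actual) for every misrouted utterance."""
    failures = []
    for utterance, expected in EXPECTED.items():
        actual = recognize(utterance)
        if actual != expected:
            failures.append((utterance, expected, actual))
    return failures
```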

Route user utterance to QnA Maker

In the Emulator, enter the text hi and submit the utterance. The bot submits this query to Orchestrator and gets back a response indicating which child app should handle this utterance for further processing.

Select the Orchestrator Recognition Trace line in the Emulator log to see the JSON response. The Orchestrator result is displayed in the Inspector.

{ "type": "trace", "timestamp": "2021-05-01T06:26:04.067Z", "serviceUrl": "http://localhost:58895", "channelId": "emulator", "from": { "id": "36b2a460-aa43-11eb-920f-7da472b36492", "name": "Bot", "role": "bot" }, "conversation": { "id": "17ef3f40-aa46-11eb-920f-7da472b36492|livechat" }, "recipient": { "id": "5f8c6123-2596-45df-928c-566d44426556", "role": "user" }, "locale": "en-US", "replyToId": "1a3f70d0-aa46-11eb-8b97-2b2a779de581", "label": "Orchestrator Recognition", "valueType": "OrchestratorRecognizer", "value": { "text": "hi", "alteredText": null, "intents": { "QnAMaker": { "score": 0.9987310956576168 }, "HomeAutomation": { "score": 0.3402091165577196 }, "Weather": { "score": 0.24092200496795158 } }, "entities": {}, "result": [ { "Label": { "Type": 1, "Name": "QnAMaker", "Span": { "Offset": 0, "Length": 2 } }, "Score": 0.9987310956576168, "ClosestText": "hi" }, { "Label": { "Type": 1, "Name": "HomeAutomation", "Span": { "Offset": 0, "Length": 2 } }, "Score": 0.3402091165577196, "ClosestText": "make some coffee" }, { "Label": { "Type": 1, "Name": "Weather", "Span": { "Offset": 0, "Length": 2 } }, "Score": 0.24092200496795158, "ClosestText": "soliciting today's weather" } ] }, "name": "OrchestratorRecognizerResult", "id": "1ae65f30-aa46-11eb-8b97-2b2a779de581", "localTimestamp": "2021-04-30T23:26:04-07:00" }

Because the utterance hi matches the Orchestrator's QnAMaker intent, which is the top-scoring intent, the bot makes a second request, this time to the QnA Maker app, with the same utterance.

Select the QnAMaker Trace line in the Emulator log. The QnA Maker result is displayed in the Inspector.

{ "questions": [ "hi", "greetings", "good morning", "good evening" ], "answer": "Hello!", "score": 1, "id": 96, "source": "QnAMaker.tsv", "metadata": [], "context": { "isContextOnly": false, "prompts": [] } }
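For each intent, the result array in the Orchestrator trace also records the closest example utterance from the snapshot (ClosestText). That field helps when a query routes somewhere unexpected. The Python sketch below ranks those entries from a trace copied out of the Inspector. It relies only on the field names visible in the trace above: value.result, Label.Name, Score, and ClosestText. The summarize_trace name is hypothetical.

```python
import json
from typing import List, Tuple

def summarize_trace(trace_json: str) -> List[Tuple[str, float, str]]:
    """Return (intent, score, closest example) sorted from best to worst."""
    trace = json.loads(trace_json)
    rows = [
        (item["Label"]["Name"], item["Score"], item["ClosestText"])
        for item in trace["value"]["result"]
    ]
    return sorted(rows, key=lambda row: row[1], reverse=True)

# Trimmed copy of the "hi" trace, with entries deliberately out of order.
sample = json.dumps({"value": {"result": [
    {"Label": {"Name": "Weather"}, "Score": 0.24092200496795158,
     "ClosestText": "soliciting today's weather"},
    {"Label": {"Name": "QnAMaker"}, "Score": 0.9987310956576168,
     "ClosestText": "hi"},
    {"Label": {"Name": "HomeAutomation"}, "Score": 0.3402091165577196,
     "ClosestText": "make some coffee"},
]}})

for name, score, closest in summarize_trace(sample):
    print(f"{name:15} {score:.3f}  closest: {closest!r}")
```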

