Yet a solution. This one is based on a performance test I did, and where I tried the solutions posted by Yitzhak, Ronen and me with a fairly large data set. I gave all ten minutes to run before I killed them. Looking at the plans, Ronen's is probably the one that would have completed first, but in how many hours, I don't know.

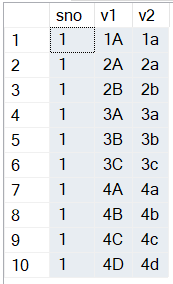

While I was waiting, I wrote a new solution which completed in three minutes. With a faster string splitter the time can be reduced further. But the keys is that the two strings need to split separately, so that we can join over an index.

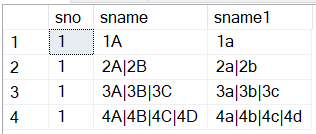

SQLWhisper will have to accept that the names have changed. id = sno, ShipName = sname, ShipAddress = sname1

CREATE TABLE #t1 (id int NOT NULL,

pos int NOT NULL,

ShipName nvarchar(60) NOT NULL,

PRIMARY KEY (id, pos))

CREATE TABLE #t2 (id int NOT NULL,

pos int NOT NULL,

ShipAddress nvarchar(60) NOT NULL,

PRIMARY KEY (id, pos))

INSERT #t1(id, pos, ShipName)

SELECT t.id, s.listpos, s.nstr

FROM PipeData t

CROSS APPLY iter_charlist_to_tbl(t.ShipName, '|') AS s

INSERT #t2(id, pos, ShipAddress)

SELECT t.id, s.listpos, s.nstr

FROM PipeData t

CROSS APPLY iter_charlist_to_tbl(t.ShipAddress, '|') AS s

SELECT t1.id, t1.ShipName + ' ' + t2.ShipAddress as str

FROM #t1 t1

JOIN #t2 t2 ON t1.id = t2.id

AND t1.pos = t2.pos

For the curious, my test script is here: 34739-pipedatasplt.txt. The BigDB database that I load the data from is on http://www.sommarskog.se/present/BigDB.bak. Warning! This is a 3GB download, and the full database size is 20 GB. SQL 2016 or later is needed. (This is a demo database that I had uploaded already.)