Hi @SQL Guy10 ,

If you know that the data stored in the column or variable will never contain Unicode characters, you can use varchar. However, some experts recommend always using nvarchar: because all modern operating systems and development platforms use Unicode internally, using nvarchar rather than varchar avoids encoding conversions every time you read from or write to the database.

The major differences between varchar and nvarchar:

- Nvarchar stores Unicode data. If you need to store Unicode or multilingual data, nvarchar is the choice. Varchar stores non-Unicode data, encoded in the code page of the column's collation (not strictly ASCII), and is a reasonable choice when the data fits that code page.

- With nvarchar, each character in the Unicode range 0-65,535 is stored in one byte-pair (2 bytes), whereas each character in the higher range (65,536-1,114,111) takes two byte-pairs (4 bytes). With varchar, each character typically takes 1 byte under a single-byte code page; double-byte code pages and UTF-8 collations can use more.
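To make the byte counts above concrete, here is a small Python sketch. It is only an illustration, not SQL Server itself: it assumes nvarchar storage behaves like UTF-16 and that varchar uses the Windows-1252 code page (`cp1252`), which is just one possible collation code page. The helper names are made up for this example.

```python
# Illustration only: approximates nvarchar with UTF-16 and varchar with
# the Windows-1252 code page (an assumed collation code page).

def nvarchar_like_bytes(text: str) -> int:
    """Bytes needed for text in UTF-16 (little-endian, no byte-order mark)."""
    return len(text.encode("utf-16-le"))


def varchar_like_bytes(text: str, code_page: str = "cp1252") -> bytes:
    """Encode text in a single-byte code page; unmappable characters
    become '?', similar to what happens when Unicode data is put into
    a varchar column whose code page cannot represent it."""
    return text.encode(code_page, errors="replace")


samples = {
    "Latin letter A": "A",
    "e with acute accent": "\u00e9",
    "CJK character": "\u4e2d",
    "emoji (supplementary character)": "\U0001F600",
}

for label, ch in samples.items():
    code_points = " ".join(f"U+{ord(c):04X}" for c in ch)
    print(
        f"{label} ({code_points}): "
        f"UTF-16 bytes = {nvarchar_like_bytes(ch)}, "
        f"cp1252 bytes = {varchar_like_bytes(ch)!r}"
    )
```

The first three characters take one byte-pair each in UTF-16, the emoji takes two byte-pairs, and the CJK character and the emoji cannot be represented in the single-byte code page at all.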

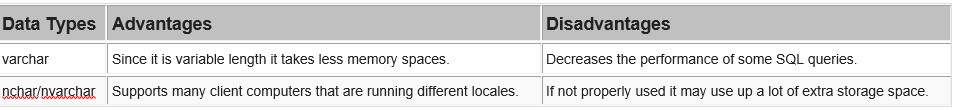

Advantages and Disadvantages of varchar and nvarchar in SQL Server.

By the way, starting with SQL Server 2019 (15.x), consider using a UTF-8 enabled collation to support Unicode and minimize character conversion issues.
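As a rough illustration of the size trade-off with a UTF-8 enabled collation, the Python sketch below compares UTF-8 and UTF-16 byte counts for a few strings. It assumes that varchar under a UTF-8 collation stores UTF-8 bytes and that nvarchar stores UTF-16; the function name is hypothetical and nothing here talks to SQL Server.

```python
# Illustration only: compares raw encoded sizes, assuming UTF-8 for
# varchar under a UTF-8 collation and UTF-16 for nvarchar.

def encoded_sizes(text: str) -> dict:
    """Return the number of bytes text needs in UTF-8 and in UTF-16."""
    return {
        "utf8": len(text.encode("utf-8")),
        "utf16": len(text.encode("utf-16-le")),
    }


examples = {
    "ASCII text": "hello",
    "accented Latin": "caf\u00e9",
    "CJK text": "\u4e2d\u6587",
    "emoji": "\U0001F600",
}

for label, text in examples.items():
    sizes = encoded_sizes(text)
    print(f"{label}: UTF-8 = {sizes['utf8']} bytes, UTF-16 = {sizes['utf16']} bytes")
```

Mostly-ASCII data is smaller in UTF-8, while East Asian text is smaller in UTF-16, so the better choice depends on what the column actually holds.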

Please refer to the links below for more information.

Difference Between Sql Server VARCHAR and NVARCHAR Data Type

What is the difference between varchar and nvarchar?

SQL Server differences of char, nchar, varchar and nvarchar data types

Best regards,

Cathy
