@Matzof Thanks for the question. You can use the code below to write (upload) files to a datastore.

datastore = ...  # replace with the Datastore defined in your Workspace

datastore.upload(src_dir='./files/to/copy/...',
                 target_path='target/directory',
                 overwrite=True)

Note that Datastore.upload only supports Azure Blob and Azure File Share datastores. To upload to ADLS Gen2, you can try our new dataset upload API:

from azureml.core import Dataset, Datastore

datastore = Datastore.get(workspace, 'mayadlsgen2')
Dataset.File.upload_directory(src_dir='./data', target=(datastore, 'data'))

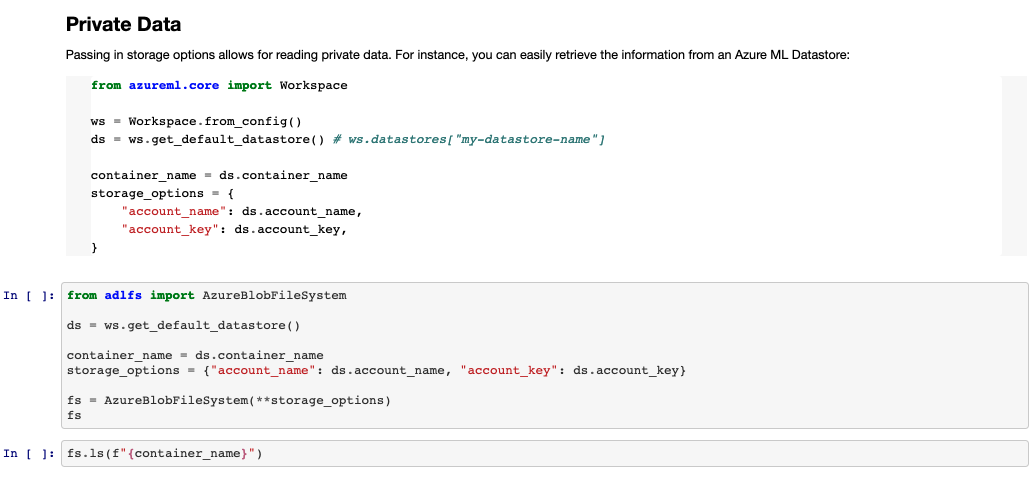

Pandas is integrated with fsspec, which provides a Pythonic interface to filesystems including S3, GCS, and Azure. You can check the source for the Azure implementation here: dask/adlfs: fsspec-compatible Azure Datalake and Azure Blob Storage access (github.com). With this you can use normal filesystem operations such as ls, glob, and info.
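As a rough sketch of those filesystem operations, the snippet below lists files matching a pattern and looks up their sizes. The helper names `open_azure_fs` and `file_sizes` are made up for illustration, and it assumes adlfs is installed and that you authenticate with an account name and key.

```python
def open_azure_fs(account_name, account_key):
    """Return an fsspec filesystem for Azure storage (assumes adlfs is installed)."""
    import fsspec  # imported lazily so the helper below works with any fsspec filesystem

    # "abfs" is the protocol that adlfs registers with fsspec
    return fsspec.filesystem("abfs", account_name=account_name, account_key=account_key)


def file_sizes(fs, pattern):
    """Map every path matching `pattern` to its size in bytes, using fs.glob and fs.info."""
    return {path: fs.info(path)["size"] for path in fs.glob(pattern)}


# Example usage (hypothetical account, container and folder names):
# fs = open_azure_fs("<account_name>", "<account_key>")
# print(fs.ls("<container>/data"))
# print(file_sizes(fs, "<container>/data/*.csv"))
```

Because `file_sizes` only relies on `glob` and `info`, the same helper should work against any other fsspec filesystem as well.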

You can find an example (for reading data) here: azureml-examples/1.intro-to-dask.ipynb at main · Azure/azureml-examples (github.com)

Writing is essentially the same as reading: you need to switch the protocol to abfs (or az), slightly modify how you access the data, and provide credentials unless your blob container has public write access.
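A minimal sketch of such a write with pandas, assuming adlfs is installed and that you authenticate with a storage account name and key. The helper names `build_abfs_target` and `write_csv` and all container and path names are hypothetical.

```python
def build_abfs_target(container, blob_path, account_name, account_key):
    """Build the abfs:// URL and the storage_options dict that pandas passes on to fsspec/adlfs."""
    url = f"abfs://{container}/{blob_path.lstrip('/')}"
    storage_options = {"account_name": account_name, "account_key": account_key}
    return url, storage_options


def write_csv(df, container, blob_path, account_name, account_key):
    """Write a pandas DataFrame to Azure storage as CSV (needs pandas >= 1.2 and adlfs)."""
    url, storage_options = build_abfs_target(container, blob_path, account_name, account_key)
    # pandas hands the URL and storage_options to fsspec, which dispatches to adlfs
    df.to_csv(url, index=False, storage_options=storage_options)
    return url


# Example usage (hypothetical names):
# import pandas as pd
# df = pd.DataFrame({"a": [1, 2], "b": [3, 4]})
# write_csv(df, "<container>", "data/output.csv", "<account_name>", "<account_key>")
```

Reading works the same way in reverse, for example by passing the same URL and `storage_options` to `pd.read_csv`.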

You can use the Azure ML Datastore to retrieve the credentials like this (taken from the example):