Hi KranthiPakala-MSFT,

I think it would be better to give a very direct suggestion to illustrate what I want to achieve here. (Sorry for this approach. I hope it isn't inappropriate; I'm doing it with good intentions, to give you a different perspective.)

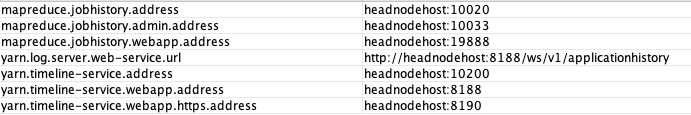

- Please configure JobHistory, Timeline Service services in a way that the above mentioned properties are referring to <clustername>.headnodehost (following my example above would result eg:

mapreduce.jobhistory.address=hdi101.headnodehost:10020). It is important that Ambari must report this value. - Please add the

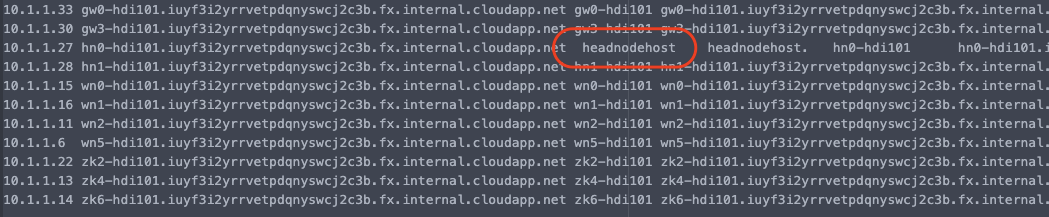

<clustername>.headnodehostalias to networking. You might as well maintain its IP address similarly to the headnodehost alias during failovers. This will result an additional, externally referable and unique hostname alias for the master node which won't collide even if I happen to have multiple HDInsight4.0 clusters in the network at any given point in time. - You can keep all the existing hostname aliases as indicated in my screenshot (including the headnodehost alias as well to maintain backward compatibility for you)
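To make the first two requests concrete, here is a minimal sketch. It assumes the usual Hadoop `<property>`/`<name>`/`<value>` layout used in `mapred-site.xml`. `cluster_alias` and `jobhistory_property` are hypothetical helpers for illustration only, and only `mapreduce.jobhistory.address` is shown because it is the one property spelled out above. The sketch also shows why per-cluster aliases don't collide the way the shared `headnodehost` alias does.

```python
import xml.etree.ElementTree as ET

# Port taken from the example above (hdi101.headnodehost:10020).
JOBHISTORY_PORT = 10020


def cluster_alias(cluster_name: str) -> str:
    """Hypothetical helper: proposed per-cluster alias, e.g. 'hdi101' -> 'hdi101.headnodehost'."""
    return f"{cluster_name}.headnodehost"


def jobhistory_property(cluster_name: str) -> ET.Element:
    """Hypothetical helper: build a Hadoop-style <property> element for mapreduce.jobhistory.address."""
    prop = ET.Element("property")
    ET.SubElement(prop, "name").text = "mapreduce.jobhistory.address"
    ET.SubElement(prop, "value").text = f"{cluster_alias(cluster_name)}:{JOBHISTORY_PORT}"
    return prop


if __name__ == "__main__":
    # Serialize the property as it might appear in mapred-site.xml.
    print(ET.tostring(jobhistory_property("hdi101"), encoding="unicode"))

    # Two clusters on the same network get distinct aliases,
    # whereas both would answer to the generic 'headnodehost' alias.
    print(sorted(cluster_alias(name) for name in ("hdi101", "hdi102")))
```

Running it for `hdi101` yields a `<value>` of `hdi101.headnodehost:10020`, matching the example value in the first bullet.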

Cheers