Hello @Evans, Matt,

Thanks for the question and for using the Microsoft Q&A platform.

I did dig into this a bit more and I think you can use the dynamic expression to get the

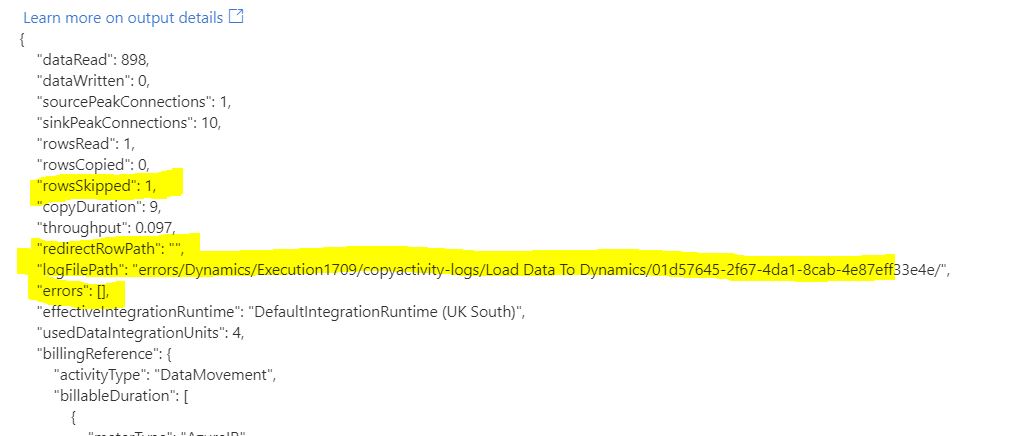

@activity('Copy data1').output.logFilePath . This is what i tried created a destination table with the datalenth less then tha of source , the idea was to trigger a failure .

CREATE TABLE T1

(

ID INT ,

NAME VARCHAR(10)

)

INSERT INTO T1 VALUES (1, 'Evan')

INSERT INTO T1 VALUES (2, 'John')

INSERT INTO T1 VALUES (3, 'James')

Sink:

CREATE TABLE T2

(

ID INT ,

NAME VARCHAR(2) -- deliberately shorter than the source column

)

I added a copy activity to copy data from the source to the sink and enabled fault tolerance. I also created a variable to capture the log file path (this is not required, but I kept it for clarity).

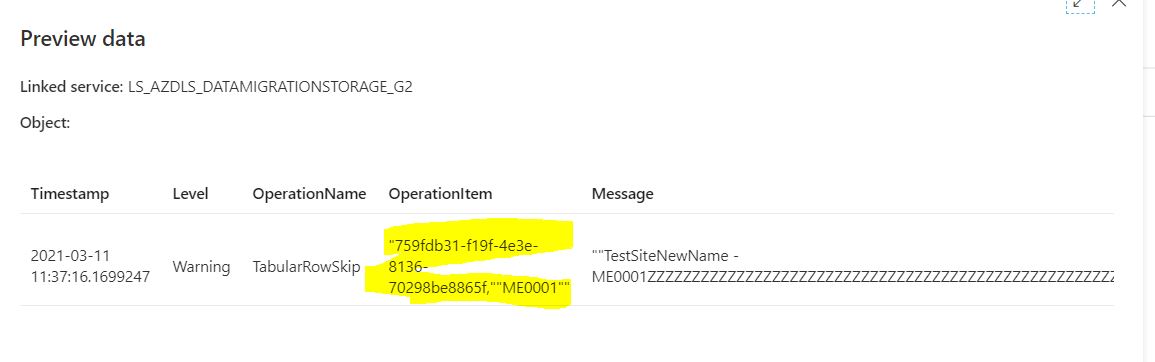
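For reference, the two activities above could look roughly like this in the pipeline JSON. This is only a sketch: the linked service name `AzureBlobStorage1`, the log folder `copylogs`, the variable name `logFilePath`, and the source/sink types are assumptions on my side, so replace them with whatever your pipeline uses. The variable also has to be declared as a String variable on the pipeline.

```json
[
  {
    "name": "Copy data1",
    "description": "Sketch only: copy T1 to T2 with fault tolerance and session logging enabled",
    "type": "Copy",
    "typeProperties": {
      "source": { "type": "AzureSqlSource" },
      "sink": { "type": "AzureSqlSink" },
      "enableSkipIncompatibleRow": true,
      "logSettings": {
        "enableCopyActivityLog": true,
        "copyActivityLogSettings": {
          "logLevel": "Warning",
          "enableReliableLogging": false
        },
        "logLocationSettings": {
          "linkedServiceName": {
            "referenceName": "AzureBlobStorage1",
            "type": "LinkedServiceReference"
          },
          "path": "copylogs"
        }
      }
    }
  },
  {
    "name": "Set variable1",
    "description": "Sketch only: keep the log file path in a pipeline variable (optional)",
    "type": "SetVariable",
    "dependsOn": [
      { "activity": "Copy data1", "dependencyConditions": [ "Succeeded" ] }
    ],
    "typeProperties": {
      "variableName": "logFilePath",
      "value": {
        "value": "@activity('Copy data1').output.logFilePath",
        "type": "Expression"
      }
    }
  }
]
```

The copy activity still reports success when incompatible rows are skipped, which is why the Set Variable activity depends on "Succeeded" here.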

I added one more copy activity; the intent here is to copy the data from the log file in blob storage to a SQL logging table. I used a dataset parameter and passed in the log file path. The animation linked below should make that clearer.
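As a rough sketch, the second copy activity could pass the log file path into the dataset parameter like this. The activity name, the dataset names `LogFileDataset` and `SqlLoggingTable`, the parameter name `logFilePath`, and the source/sink types are hypothetical; the source dataset is assumed to be a delimited text dataset on blob storage that declares a string parameter and uses it in its file path.

```json
{
  "name": "Copy log to SQL",
  "description": "Sketch only: load the fault tolerance log file into the SQL logging table",
  "type": "Copy",
  "dependsOn": [
    { "activity": "Copy data1", "dependencyConditions": [ "Succeeded" ] }
  ],
  "inputs": [
    {
      "referenceName": "LogFileDataset",
      "type": "DatasetReference",
      "parameters": {
        "logFilePath": {
          "value": "@activity('Copy data1').output.logFilePath",
          "type": "Expression"
        }
      }
    }
  ],
  "outputs": [
    { "referenceName": "SqlLoggingTable", "type": "DatasetReference" }
  ],
  "typeProperties": {
    "source": { "type": "DelimitedTextSource" },
    "sink": { "type": "AzureSqlSink" }
  }
}
```

If you keep the variable from the previous step, the parameter value can be `@variables('logFilePath')` instead of the activity output (using whatever name you gave the variable).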

I agree that the data in SQL is not in a perfect format, but it is something I was able to work around:

select * from dbo.logging

where operationItem is not null

Animation: https://imgur.com/a/EFCoii6

Please let me know how it goes.

Thanks

Himanshu

Please consider clicking "Accept Answer" and "Up-vote" on the post that helps you, as it can benefit other community members.