@Joaquin Chemile Welcome to the Microsoft Q&A platform.

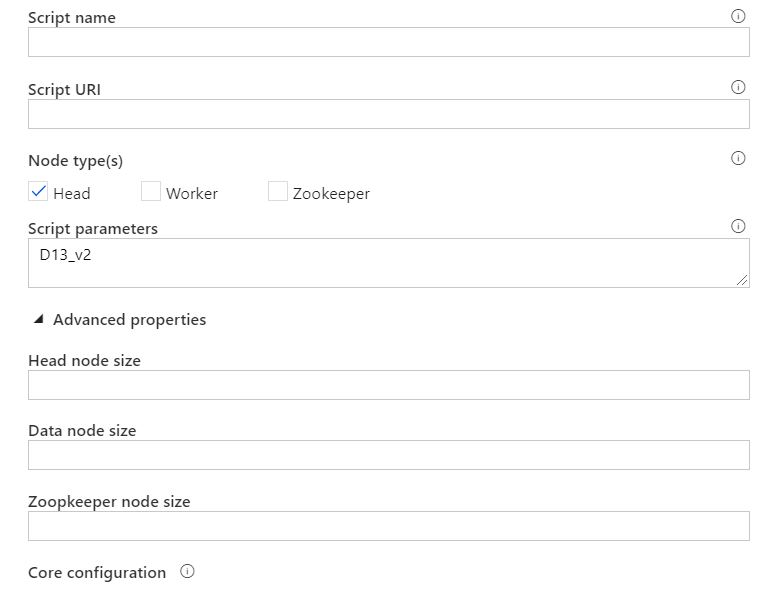

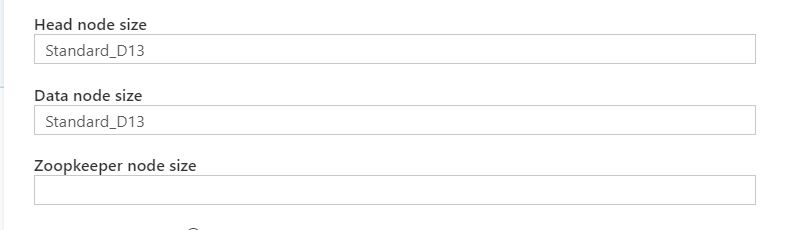

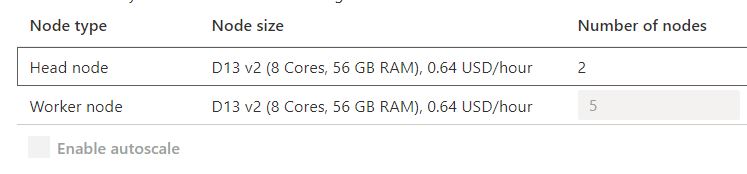

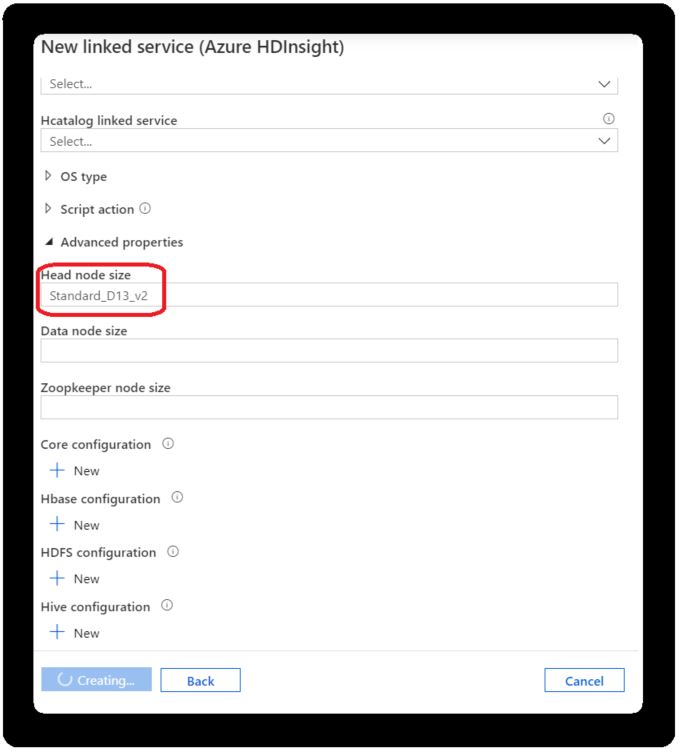

Note: If you want to create Standard_D13_v2-sized head nodes, specify `Standard_D13_v2` as the value for Head node size under Advanced properties.

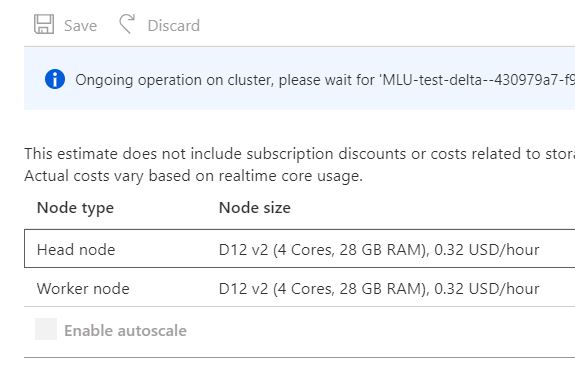

Specifying node sizes: See the "Sizes of Virtual Machines" article for the string values you need to specify for the properties mentioned in the previous section. The values must conform to the cmdlets and APIs referenced in that article. As the article shows, a data node of the Large (default) size has 7 GB of memory, which may not be enough for your scenario.
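If you define the linked service in JSON rather than through the UI, the same settings map to the `headNodeSize` and `dataNodeSize` properties of an on-demand HDInsight linked service. The Python sketch below only builds such a definition as a dict and prints it as JSON. The linked service name, the storage linked service reference, and the cluster values are hypothetical placeholders. The required subscription and authentication properties (for example `hostSubscriptionId`, `servicePrincipalId`, `tenant`, `clusterResourceGroup`) are left out for brevity, so treat this as an illustration, not a deployable definition.

```python
import json


def hdinsight_on_demand_linked_service(name, head_node_size, data_node_size, cluster_size=1):
    """Build an illustrative HDInsightOnDemand linked service definition.

    Only the node-size properties are the point here; every other value is a
    hypothetical placeholder that you would replace with your own settings.
    """
    return {
        "name": name,
        "properties": {
            "type": "HDInsightOnDemand",
            "typeProperties": {
                "clusterType": "hadoop",
                "clusterSize": cluster_size,
                "timeToLive": "00:15:00",
                # VM size strings, as listed in the "Sizes of Virtual Machines" article
                "headNodeSize": head_node_size,
                "dataNodeSize": data_node_size,
                # Hypothetical placeholder: the Azure Storage linked service used by the cluster
                "linkedServiceName": {
                    "referenceName": "<AzureStorageLinkedService>",
                    "type": "LinkedServiceReference",
                },
                # Subscription/service principal properties omitted for brevity
            },
        },
    }


definition = hdinsight_on_demand_linked_service(
    "HDInsightOnDemandLinkedService",  # hypothetical name
    head_node_size="Standard_D13_v2",
    data_node_size="Standard_D13_v2",
)
print(json.dumps(definition, indent=2))
```

Choosing a larger data node size than the Large default is what addresses the 7 GB memory limit mentioned above; check the VM sizes article for the memory of the size you pick.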

For more details, refer to "Compute environments supported by Azure Data Factory - Node Sizes".

Hope this helps. Do let us know if you have any further queries.

----------------------------------------------------------------------------------------

Do click "Accept Answer" and upvote the post that helps you; this can be beneficial to other community members.