Hello @KNP,

Welcome to the Microsoft Q&A platform.

Here are the steps to connect an Azure Synapse notebook to Azure SQL Database:

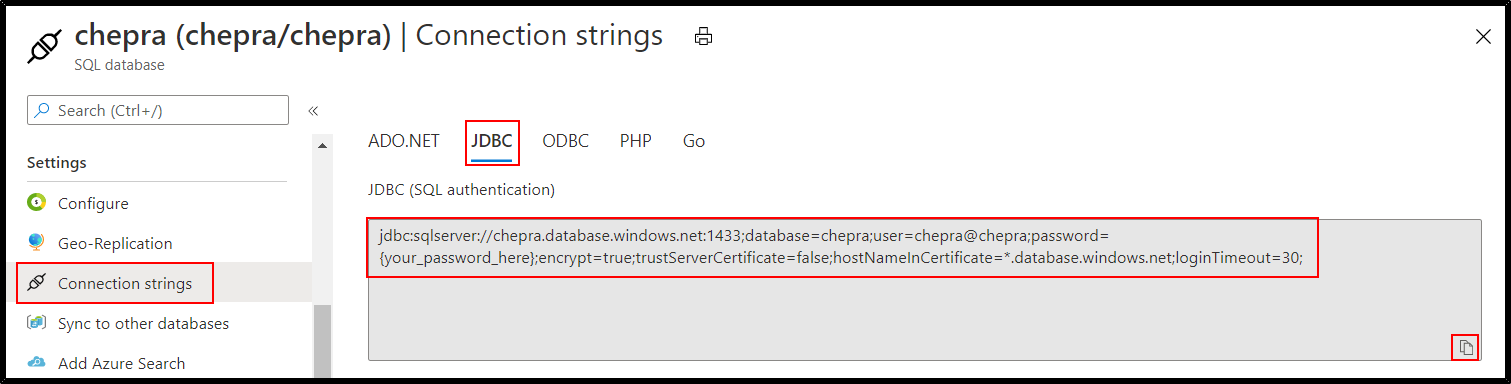

Step 1: From the Azure portal, get the JDBC connection string for the Azure SQL Database.

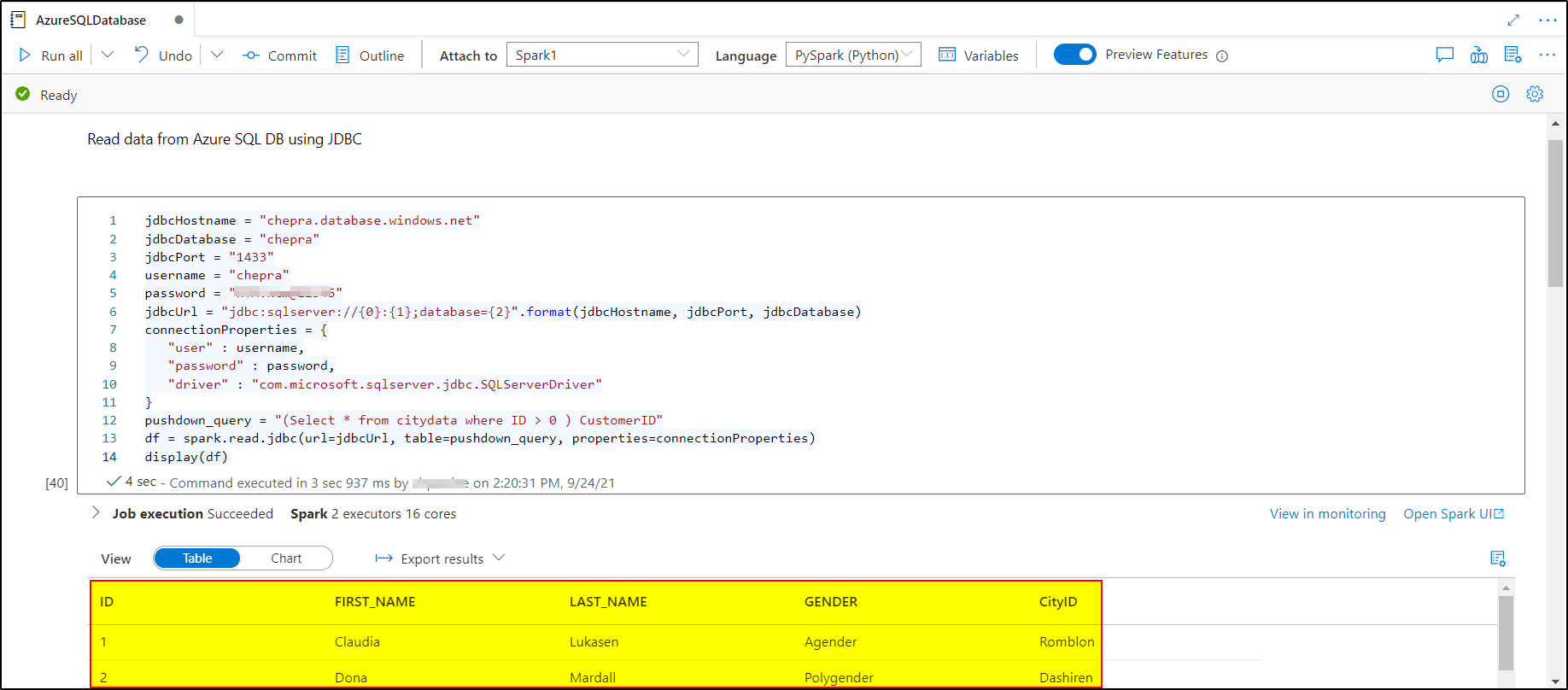
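If it helps to pull the individual values out of the string you copied, here is a minimal sketch. It assumes the connection string has the usual `jdbc:sqlserver://<host>:<port>;key=value;...` shape; the function name `parse_jdbc_url` and the sample string are hypothetical and only for illustration.

```python
def parse_jdbc_url(url: str) -> dict:
    """Split a SQL Server JDBC URL into host, port, and key=value properties."""
    prefix = "jdbc:sqlserver://"
    if not url.startswith(prefix):
        raise ValueError("not a SQL Server JDBC URL")

    # Everything before the first ';' is "<host>:<port>"
    server_part, _, rest = url[len(prefix):].partition(";")
    host, _, port = server_part.partition(":")

    # The remainder is a ';'-separated list of key=value pairs
    properties = {}
    for item in rest.split(";"):
        if "=" in item:
            key, _, value = item.partition("=")
            properties[key.strip()] = value.strip()

    # Assumption: fall back to 1433 (the SQL Server default) when no port is given
    return {"host": host, "port": port or "1433", "properties": properties}


# Hypothetical sample string, not a real server
sample = "jdbc:sqlserver://example.database.windows.net:1433;database=exampledb;encrypt=true;loginTimeout=30;"
parsed = parse_jdbc_url(sample)
```

The `host`, `port`, and `properties["database"]` values map onto the hostname, port, and database variables used in the next step.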

Step 2: Use the following Python code to connect to the Azure SQL Database over JDBC and read from it:

    # Connection details for the Azure SQL Database (replace with your own values)
    jdbcHostname = "chepra.database.windows.net"
    jdbcDatabase = "chepra"
    jdbcPort = "1433"
    username = "chepra"
    password = "XXXXXXXXXX"

    # Build the JDBC URL from the host, port, and database name
    jdbcUrl = "jdbc:sqlserver://{0}:{1};database={2}".format(jdbcHostname, jdbcPort, jdbcDatabase)

    connectionProperties = {
        "user": username,
        "password": password,
        "driver": "com.microsoft.sqlserver.jdbc.SQLServerDriver"
    }

    # The parenthesized subquery (with an alias) is executed by the database
    pushdown_query = "(Select * from citydata where ID > 0) CustomerID"

    df = spark.read.jdbc(url=jdbcUrl, table=pushdown_query, properties=connectionProperties)
    display(df)

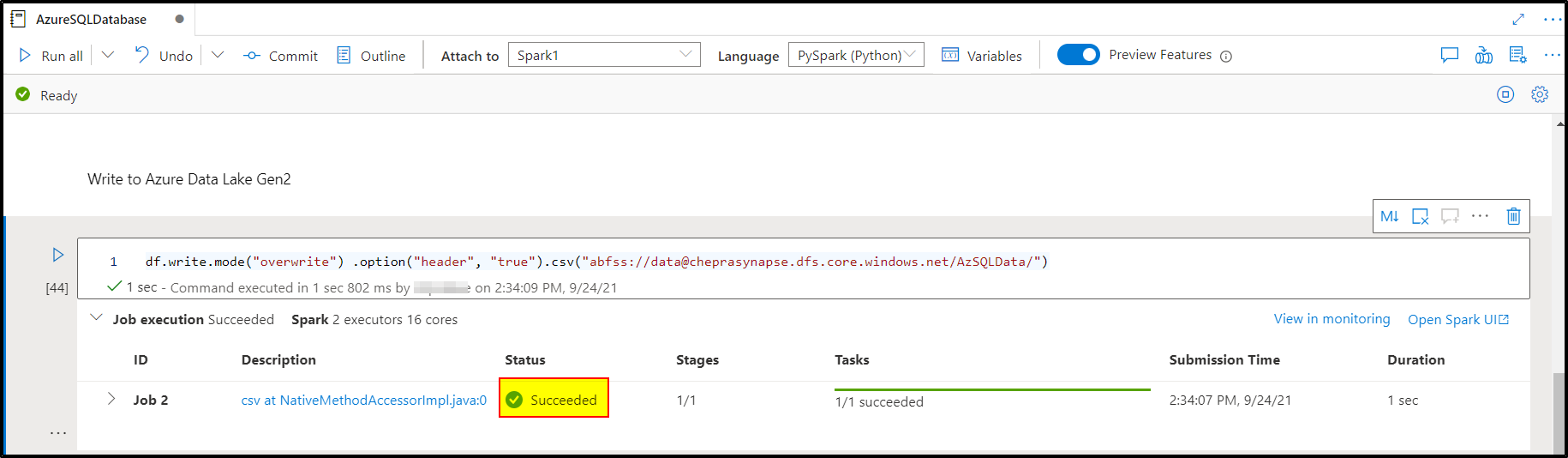
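As an optional variation on the connection setup, here is a minimal sketch that appends extra JDBC properties to the URL and keeps the password out of the notebook text. The helper name `build_jdbc_url` and the environment variable `SQL_PASSWORD` are assumptions for illustration; `encrypt`, `trustServerCertificate`, and `loginTimeout` are standard SQL Server JDBC driver properties, but check which ones your own connection string uses.

```python
import os


def build_jdbc_url(host: str, port: int, database: str, **options: str) -> str:
    """Build a SQL Server JDBC URL, appending any extra key=value properties."""
    base = f"jdbc:sqlserver://{host}:{port};database={database}"
    extras = "".join(f";{key}={value}" for key, value in options.items())
    return base + extras


jdbc_url = build_jdbc_url(
    "chepra.database.windows.net", 1433, "chepra",
    encrypt="true", trustServerCertificate="false", loginTimeout="30",
)

connection_properties = {
    "user": "chepra",
    # Assumption: the password is supplied via an environment variable
    # (hypothetical name) instead of being hard-coded in the notebook
    "password": os.environ.get("SQL_PASSWORD", ""),
    "driver": "com.microsoft.sqlserver.jdbc.SQLServerDriver",
}
```

These two values can be passed to `spark.read.jdbc` as the `url` and `properties` arguments in the same way as in the code above.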

Step 3: Write the DataFrame to an Azure Storage account.

- To write to Azure Blob Storage, use: wasbs://<Container>@<StorageName>.blob.core.windows.net/<Folder>/

- To write to Azure Data Lake Gen2, use: abfss://<Container>@<StorageName>.dfs.core.windows.net/<Folder>/

I wrote the DataFrame to Azure Data Lake Gen2:

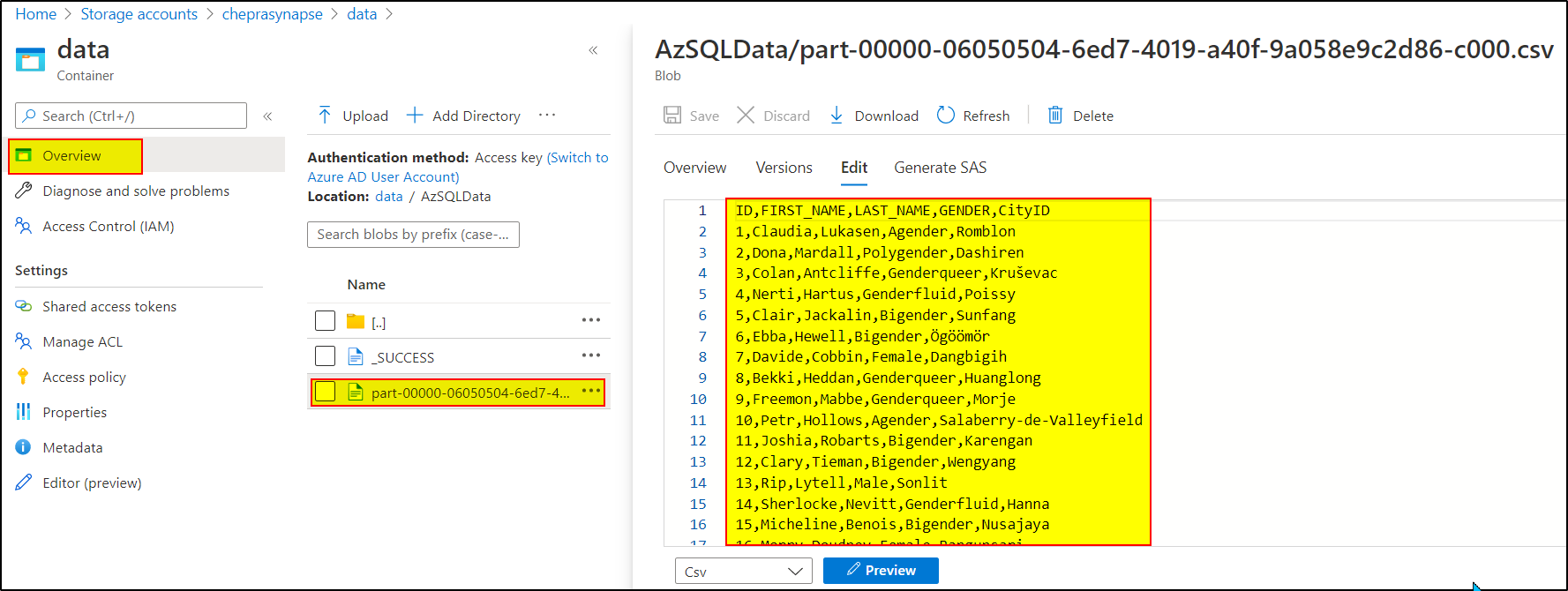
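In case the screenshot is hard to read, here is a minimal text sketch of a write of this kind. It is not necessarily the exact code pictured: the helper names `adls_gen2_path` and `write_parquet`, the Parquet format, the `overwrite` mode, and the sample container, account, and folder names are all assumptions for illustration.

```python
def adls_gen2_path(container: str, storage_account: str, folder: str) -> str:
    """Build an abfss:// URI in the Data Lake Gen2 format listed in Step 3."""
    return f"abfss://{container}@{storage_account}.dfs.core.windows.net/{folder.strip('/')}/"


def write_parquet(df, path: str, mode: str = "overwrite") -> None:
    """Write a Spark DataFrame to the given path as Parquet files."""
    # mode="overwrite" replaces any existing data at the path (assumed choice)
    df.write.mode(mode).parquet(path)


# Hypothetical container, storage account, and folder names
target_path = adls_gen2_path("mycontainer", "mystorageaccount", "citydata")
```

In the notebook you would then call `write_parquet(df, target_path)` with the DataFrame read in Step 2. The Synapse workspace identity or your user account needs write access to the storage account for this to succeed.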

Check out the output written to the ADLS Gen2 account:

Hope this helps. Please let us know if you have any further queries.
