Hello @Raj D ,

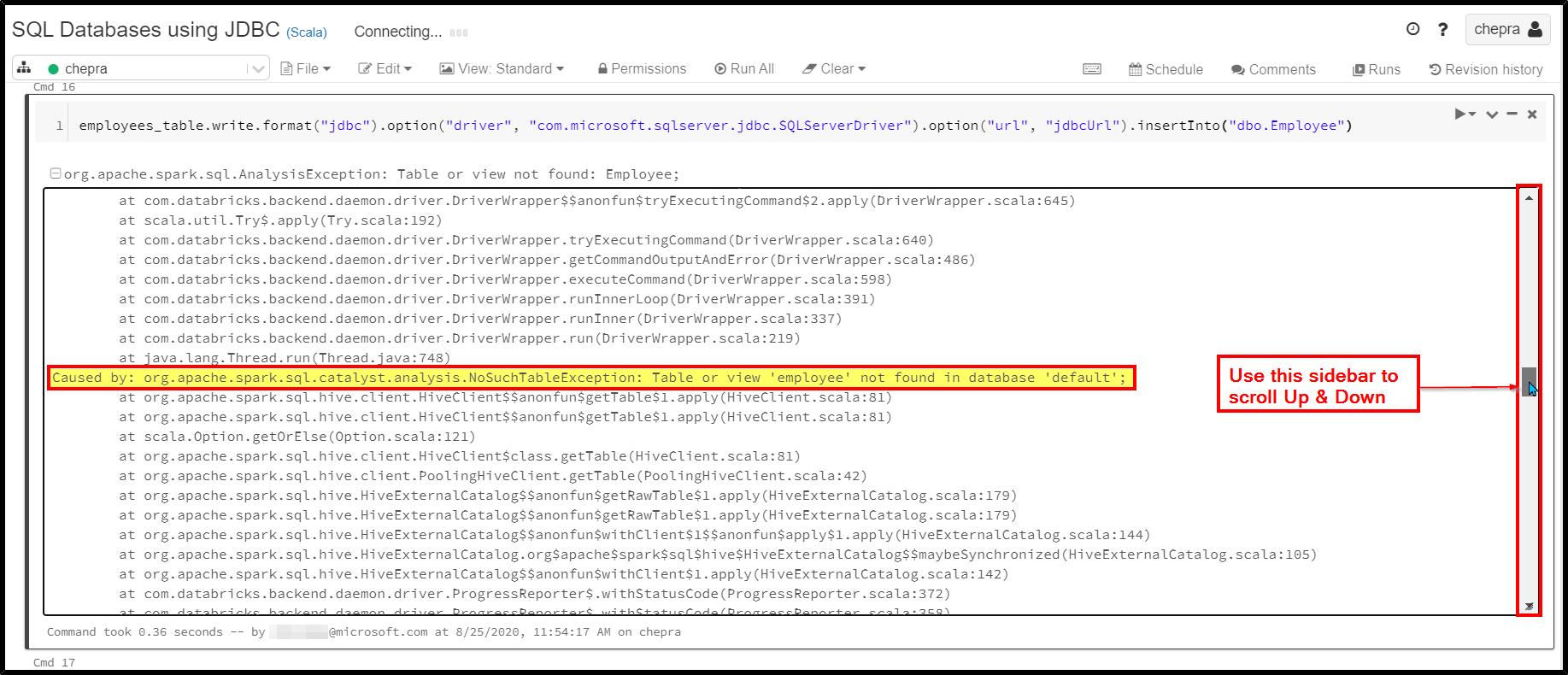

To find the exact reason, please check the complete stack trace and look for the root cause of the issue.

If you scroll down through the error message, you will find one or more lines that start with "Caused by".

How do you check the complete stack trace and find the "Caused by" reason?

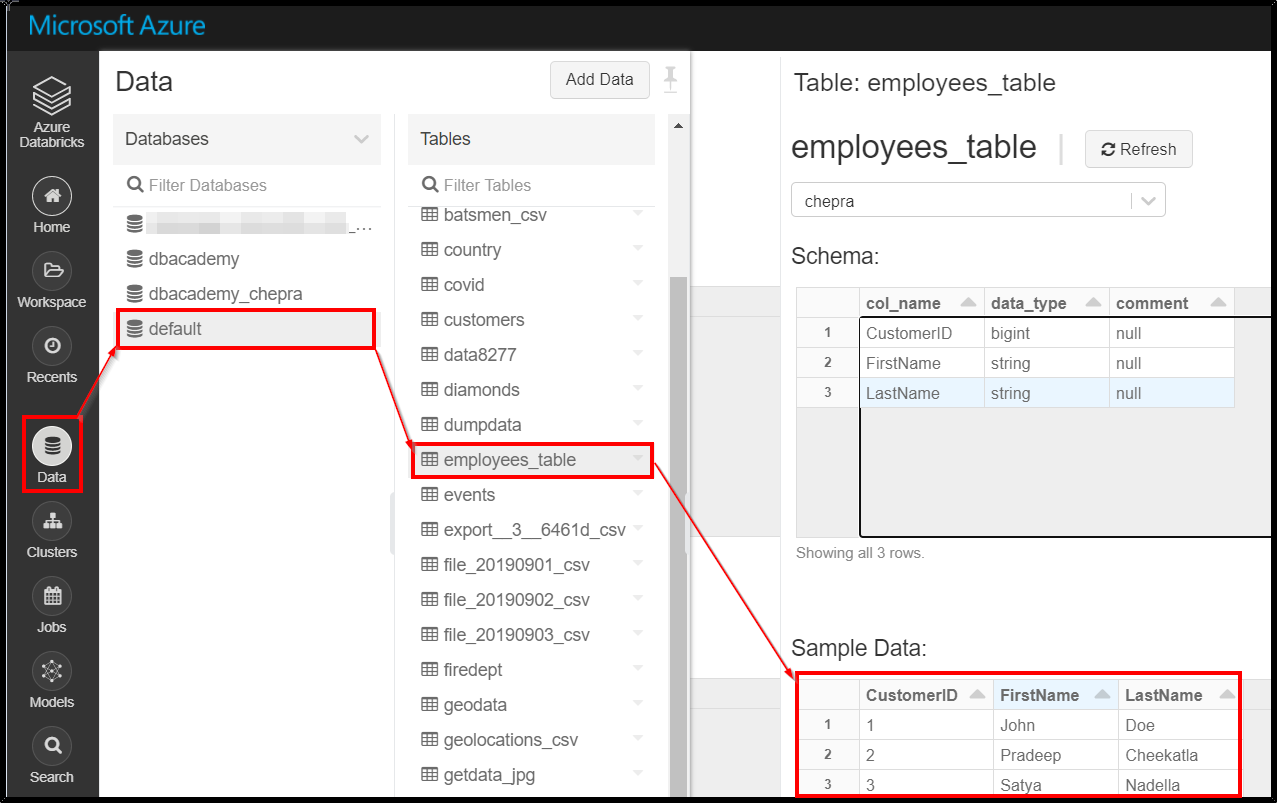
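If the trace is long, a small helper can pull out the "Caused by" lines for you. This is only a minimal sketch: `find_caused_by` and `sample_trace` are hypothetical names, and the trace text is made up for illustration, not the actual error from your notebook.

```python
from typing import List, Optional


def find_caused_by(stack_trace: str) -> List[str]:
    """Return every 'Caused by:' line of a JVM-style stack trace, in order."""
    return [
        line.strip()
        for line in stack_trace.splitlines()
        if line.strip().startswith("Caused by:")
    ]


# Hypothetical, shortened trace text used only to demonstrate the helper.
sample_trace = """\
org.apache.spark.SparkException: Job aborted.
    at org.example.Writer.write(Writer.scala:10)
Caused by: java.lang.IllegalStateException: wrapper failure
    at org.example.Writer.flush(Writer.scala:42)
Caused by: java.io.IOException: underlying failure
    at org.example.Disk.write(Disk.scala:7)
"""

causes = find_caused_by(sample_trace)
# The last "Caused by" line is usually the closest to the root cause.
root_cause: Optional[str] = causes[-1] if causes else None
print(root_cause)
```

In a notebook you could pass the text of the caught exception (for example `str(e)`) to the helper instead of `sample_trace`.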

When you use “.insertInto” with a dataframe, it inserts the data into a table in the underlying database, which in this case is the Databricks default database.

To insert data into the default database successfully, make sure the target table or view already exists.
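As a rough sketch of that idea, the helper below creates the table on the first write and uses `insertInto` afterwards. The function name `insert_into_default` and the table name in the usage note are hypothetical; `spark` is assumed to be the session that a Databricks notebook already provides, and `df` is your dataframe.

```python
def insert_into_default(spark, df, table_name):
    """Append df to a table in the current database, creating it on first use.

    Returns "created" or "inserted" so the caller can see which path ran.
    """
    # Table names are stored in lower case in the metastore, so compare in lower case.
    existing = [t.name.lower() for t in spark.catalog.listTables()]

    if table_name.lower() not in existing:
        # saveAsTable creates the table from the dataframe's schema.
        df.write.saveAsTable(table_name)
        return "created"

    # insertInto needs an existing table and matches columns by position,
    # not by name, so the column order of df must match the table.
    df.write.insertInto(table_name)
    return "inserted"
```

In a notebook this could be called as `insert_into_default(spark, df, "my_table")`, where `my_table` is a placeholder for your own table name.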

Check out the dataframe written to the default database.

For more details, refer to “Azure Databricks – Create a table”.

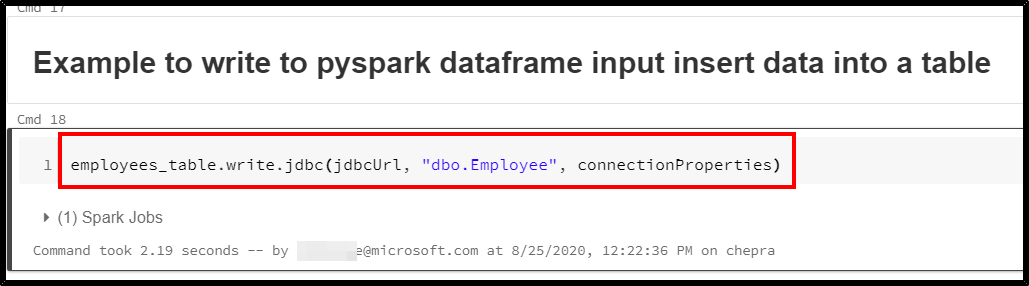

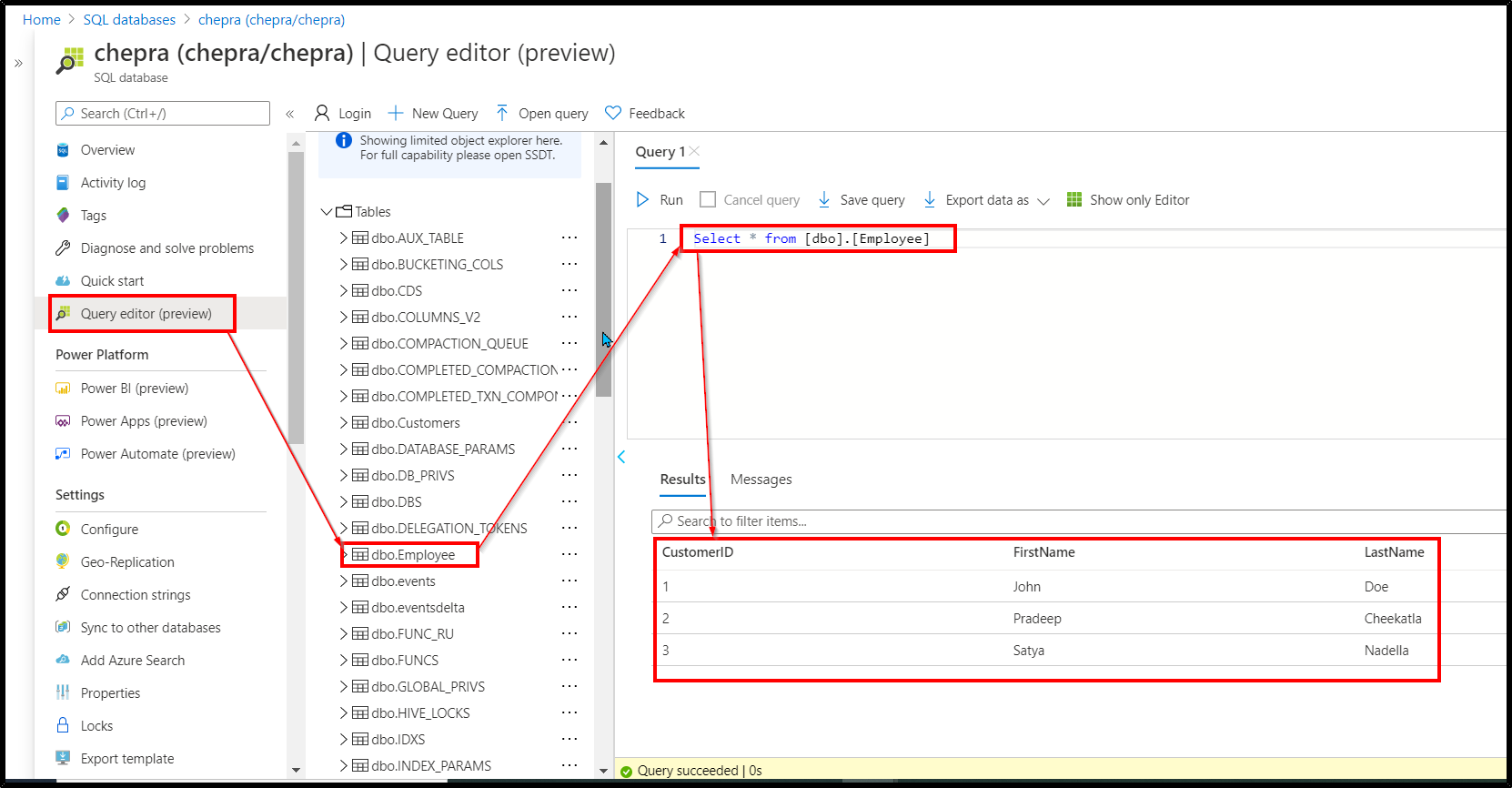

Here is an example of how to write data from a dataframe to Azure SQL Database.
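In text form, such a write could look roughly like the sketch below. The helper names (`build_sqlserver_jdbc_url`, `write_to_azure_sql`) and every server, database, table, and credential value are assumptions for illustration only; it also assumes the Microsoft SQL Server JDBC driver is available on the cluster.

```python
def build_sqlserver_jdbc_url(server, database, port=1433):
    """Build a SQL Server JDBC URL for the given server and database."""
    return f"jdbc:sqlserver://{server}:{port};databaseName={database}"


def write_to_azure_sql(df, server, database, table, user, password, mode="append"):
    """Write df to an Azure SQL Database table over JDBC and return the URL used."""
    url = build_sqlserver_jdbc_url(server, database)
    properties = {
        # In practice, read credentials from a secret store instead of hard-coding them.
        "user": user,
        "password": password,
        "driver": "com.microsoft.sqlserver.jdbc.SQLServerDriver",
    }
    # mode="append" adds rows to the table; "overwrite" would replace its contents.
    df.write.jdbc(url=url, table=table, mode=mode, properties=properties)
    return url
```

A call might look like `write_to_azure_sql(df, "myserver.database.windows.net", "mydb", "dbo.mytable", user, password)`, with all of those values replaced by your own.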

Check out the dataframe written to Azure SQL Database.

For more details, refer to “Azure Databricks – Write to JDBC”.

Hope this helps. Do let us know if you have any further queries.

----------------------------------------------------------------------------------------

Do click on "Accept Answer" and upvote the post that helps you; this can be beneficial to other community members.