Hello @Sateesh Battu and welcome to Microsoft Q&A.

It sounds like you want to retrieve the output stream/dataset name from a given run or definition. Is that right?

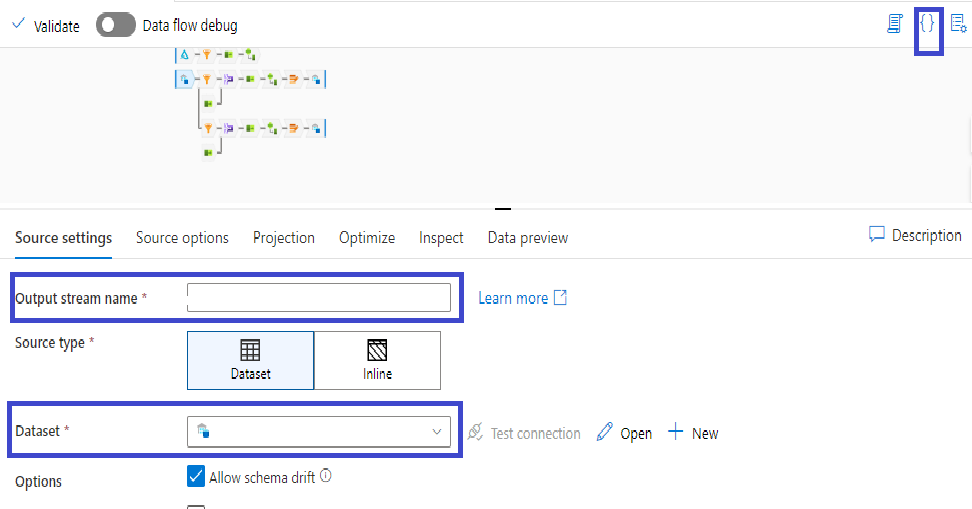

Since the stream is defined in the Data Flow definition, and the Dataset is defined in the pipeline definition, these are not dynamic values, so I am not sure what your end goal is. If you know which Data Flow or Pipeline was used, you already know which Dataset was used. Also, knowing the stream name doesn't yield any actionable details. It isn't like you could hook up another service to the output stream.

But if you really wanted to, I suppose you would first take the pipeline run ID and get the run details. From those you get the pipeline name. With the pipeline name, you fetch the pipeline definition. Inside the pipeline definition, find the relevant Copy activity. The output dataset name is under that activity, at `outputs[0].referenceName`.
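As a rough illustration of that last step, here is a minimal Python sketch. It assumes you have already fetched the pipeline definition as JSON and parsed it into a dict. The function name `copy_output_datasets` and the sample pipeline below are hypothetical, made up for this example. It only looks at top-level activities, so a Copy activity nested inside a ForEach or If Condition would need extra traversal.

```python
def copy_output_datasets(pipeline_definition):
    """Map each top-level Copy activity name to its output dataset names."""
    activities = pipeline_definition.get("properties", {}).get("activities", [])
    result = {}
    for activity in activities:
        if activity.get("type") == "Copy":
            # Each entry in "outputs" is a dataset reference with a referenceName.
            result[activity["name"]] = [
                output["referenceName"] for output in activity.get("outputs", [])
            ]
    return result


# Hypothetical pipeline definition, trimmed to the fields used above.
sample_pipeline = {
    "name": "ExamplePipeline",
    "properties": {
        "activities": [
            {
                "name": "CopyToSink",
                "type": "Copy",
                "inputs": [{"referenceName": "SourceDataset", "type": "DatasetReference"}],
                "outputs": [{"referenceName": "SinkDataset", "type": "DatasetReference"}],
            },
            {"name": "Wait1", "type": "Wait", "typeProperties": {"waitTimeInSeconds": 1}},
        ]
    },
}

print(copy_output_datasets(sample_pipeline))
```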

For the data flow, once you have located the Data Flow activity in the pipeline definition, get the data flow name under `typeProperties.dataflow.referenceName`. With this name, fetch the data flow definition. There, look under `properties.typeProperties.sinks[x].name` for the sink (output stream) names.
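The data flow lookup could be sketched the same way. Again, this assumes both definitions are already fetched and parsed into dicts. The function names and the sample definitions are hypothetical, and only top-level activities in the pipeline are checked.

```python
def data_flow_names(pipeline_definition):
    """Return the data flow names referenced by top-level Data Flow activities."""
    activities = pipeline_definition.get("properties", {}).get("activities", [])
    return [
        activity["typeProperties"]["dataflow"]["referenceName"]
        for activity in activities
        if activity.get("type") == "ExecuteDataFlow"
    ]


def sink_names(data_flow_definition):
    """Return the sink (output stream) names from a data flow definition."""
    type_properties = data_flow_definition.get("properties", {}).get("typeProperties", {})
    return [sink["name"] for sink in type_properties.get("sinks", [])]


# Hypothetical definitions, trimmed to the fields used above.
sample_pipeline_with_flow = {
    "name": "ExamplePipeline",
    "properties": {
        "activities": [
            {
                "name": "RunDataFlow",
                "type": "ExecuteDataFlow",
                "typeProperties": {
                    "dataflow": {"referenceName": "ExampleDataFlow", "type": "DataFlowReference"}
                },
            }
        ]
    },
}

sample_data_flow = {
    "name": "ExampleDataFlow",
    "properties": {
        "type": "MappingDataFlow",
        "typeProperties": {
            "sources": [{"name": "source1"}],
            "sinks": [{"name": "sink1"}, {"name": "sink2"}],
        },
    },
}

print(data_flow_names(sample_pipeline_with_flow))
print(sink_names(sample_data_flow))
```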