Hello @Felipe Regis E Silva ,

Thanks for the question and for using the Microsoft Q&A platform.

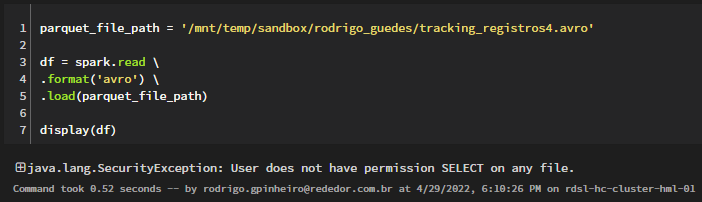

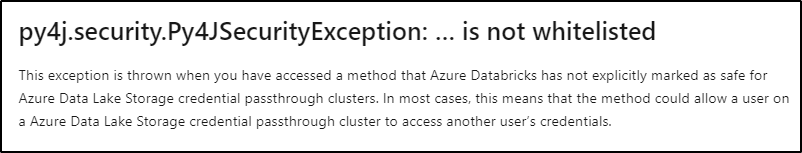

This error occurs with some library methods when you use a High Concurrency cluster with credential passthrough enabled. If that is your scenario, one possible workaround is to use a different cluster mode.

For more details, refer to Access Azure Data Lake Storage using Azure Active Directory credential passthrough.

Below are the three workarounds to resolve this issue:

- Update `spark.databricks.pyspark.enableProcessIsolation` to `false`
- Update `spark.databricks.pyspark.enablePy4JSecurity` to `false`
- Use standard clusters.
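The first two workarounds are cluster-level Spark settings. As a rough sketch, assuming they are entered in the cluster's Spark config box (one `key value` pair per line) and the cluster is restarted afterwards, the lines could be generated and checked like this. The helper names `as_spark_config_lines` and `read_settings` are hypothetical, used only for illustration, and whether the values can be read back from a notebook on your cluster is an assumption.

```python
# Settings named in the workarounds above; both are set to "false".
WORKAROUND_CONF = {
    "spark.databricks.pyspark.enableProcessIsolation": "false",
    "spark.databricks.pyspark.enablePy4JSecurity": "false",
}


def as_spark_config_lines(conf):
    """Render settings as 'key value' lines, one per setting."""
    return "\n".join(f"{key} {value}" for key, value in conf.items())


def read_settings(runtime_conf, keys):
    """Return the current value of each key, or '<not set>' if it is missing.

    runtime_conf is assumed to expose get(key, default), as spark.conf does.
    """
    return {key: runtime_conf.get(key, "<not set>") for key in keys}


# Text to paste into the cluster's Spark config box.
print(as_spark_config_lines(WORKAROUND_CONF))

# In a notebook attached to the restarted cluster (not runnable elsewhere):
# print(read_settings(spark.conf, WORKAROUND_CONF))
```

If the values read back in the notebook do not show `false`, the cluster was probably not restarted after the change.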

I hope this helps. Please let us know if you have any further queries.
