I am writing HiveQL code in Databricks Community Edition.

I uploaded the CSV dataset files to DBFS, created a Hive external table, and tried to load the table with data from the uploaded DBFS file.

    CREATE EXTERNAL TABLE IF NOT EXISTS data_table(
        Id STRING,
        Sponsor STRING,
        Status STRING,
        Start_Date STRING,
        Completion_Date STRING,
        Type STRING,
        Submission STRING,
        Conditions ARRAY<STRING>,
        Interventions STRING
    )
    ROW FORMAT DELIMITED FIELDS TERMINATED BY '|'
    LOCATION '/FileStore/tables/CT21/clinicaltrial_2021-1.csv'
    TBLPROPERTIES("skip.header.line.count"="2");

The result was OK.

But when I ran the query

select * from data_table

it threw an exception:

Error in SQL statement: IOException: Path: /FileStore/tables/clinicaltrial_2021/mesh.csv is a directory, which is not supported by the record reader when mapreduce.input.fileinputformat.input.dir.recursive is false.

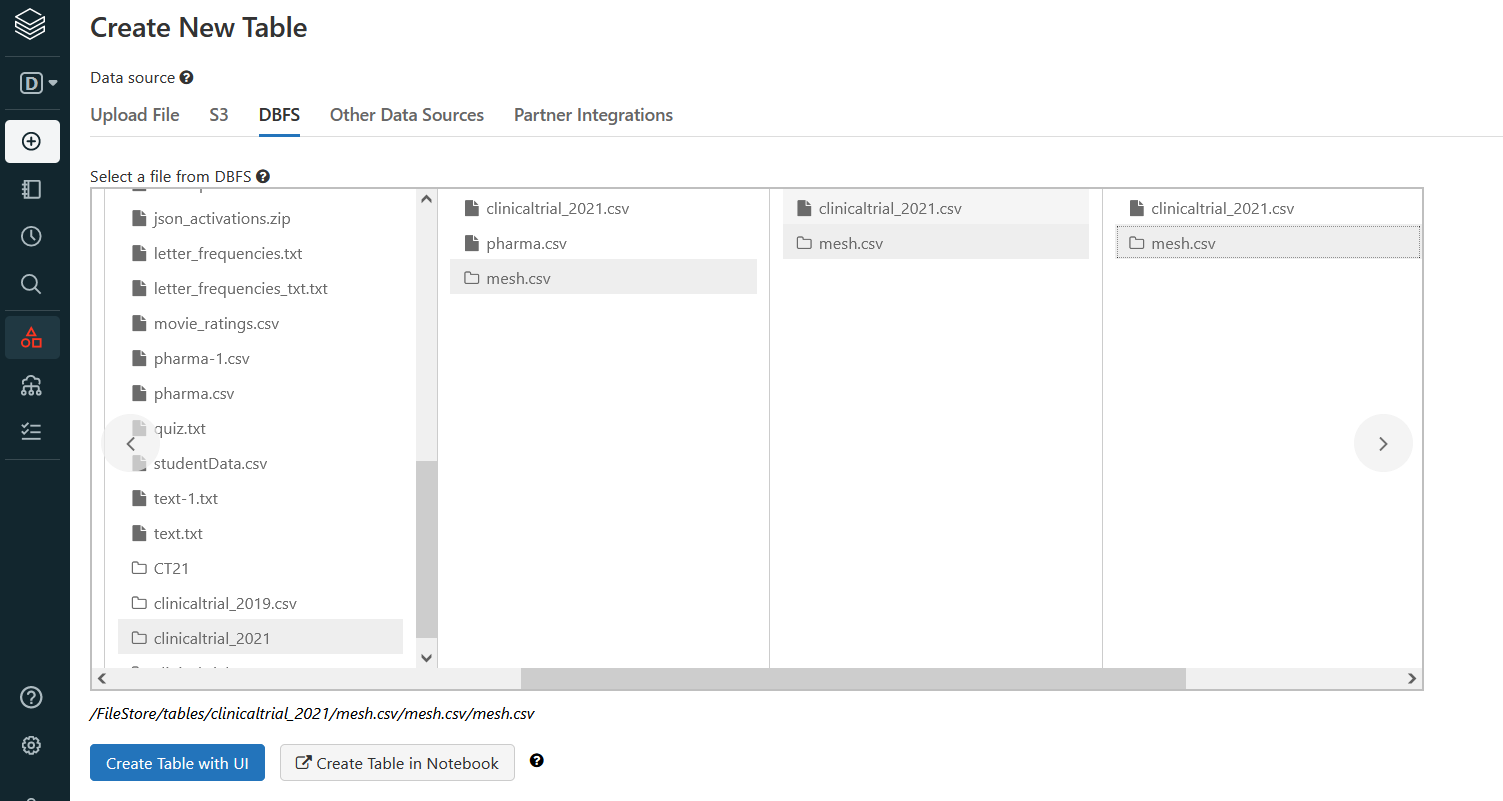

When I checked the DBFS directory, I found a new directory named mesh.csv, created by DBFS, with many subdirectories, each with the same mesh.csv name.

Any help is highly appreciated.

Hello @PRADEEPCHEEKATLA-MSFT

I wasn't able to check my email for a while. I have resolved the aforementioned error using the command:

SET mapreduce.input.fileinputformat.input.dir.recursive=True

Thank you very much for your response.
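
For anyone who hits the same exception, here is a minimal sketch of how the fix above might be applied in a Databricks SQL cell. It assumes the session accepts Hadoop properties through SET, and it reuses the data_table name from the question. The setting likely applies only to the current session, so it may need to be run again after a cluster or notebook restart.

```sql
-- Let the record reader descend into subdirectories under the table LOCATION.
-- The property is the one reported in the reply above; the lowercase value is
-- an assumption that it is parsed case-insensitively.
SET mapreduce.input.fileinputformat.input.dir.recursive=true;

-- Re-run the query that previously raised the IOException.
SELECT * FROM data_table;
```

Both statements should run in the same session, since the SELECT only picks up the property if it was set beforehand.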