Introduction to SharePoint Search Indexes for DPM Administrators

This blog introduces the basics of SharePoint Search to DPM administrators. It may seem confusing at first that a DPM blog would explain how to set up and configure another product. To protect SharePoint data using DPM, however, it is necessary to understand how the protected application is installed and configured. This is especially true with regard to Search Index database protection.

By the conclusion of this short series of blogs, you will have a greater understanding of why knowing a bit about the applications that DPM protects helps in their protection, recovery, and maintenance.

Why 2 versions of Search

Since DPM offers the capability to protect both WSS Search and MOSS Search, let’s take a moment to understand the difference before we dive into the set up and configuration aspects.

First, let’s discuss the basics of what a Search Index server is used for. It works on the same principle that Internet search engines use. Websites are “crawled” (queried for their contents) and a reference to their content is then stored in a database. Think of this as you would an index to a book. From a SharePoint perspective, this allows users to locate content in the farm without knowing where the content actually resides.
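To make the book-index analogy concrete, here is a minimal Python sketch of crawling and indexing. It is only an illustration of the idea, not how SharePoint implements search: the URLs, the page text, and the function names `build_index` and `search` are all hypothetical.

```python
from collections import defaultdict

# Hypothetical pages standing in for content a crawler would fetch from the farm.
PAGES = {
    "http://farm/sites/hr/policy.aspx": "vacation policy for all employees",
    "http://farm/sites/it/backup.aspx": "backup policy for DPM servers",
}


def build_index(pages):
    """'Crawl' each page and map every word to the set of URLs that contain it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in text.lower().split():
            index[word].add(url)
    return index


def search(index, term):
    """Return the sorted URLs whose content contains the term."""
    return sorted(index.get(term.lower(), set()))


index = build_index(PAGES)
print(search(index, "policy"))  # both pages mention "policy"
print(search(index, "DPM"))     # only the backup page mentions "DPM"
```

The point to notice is that a query only consults the index. The user never needs to know which site holds the page, which is exactly what the Search Index provides in a farm.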

“Office SharePoint Server 2007” provides two search services: “Office SharePoint Server Search” and “Windows SharePoint Services Help Search”. Each of these services can be used to crawl, index, and query content, and each service uses a separate index.

The “Office SharePoint Server Search” service is based on the search service that is provided with earlier versions of “SharePoint Products & Technologies”, but with many improvements. You should use the “Office SharePoint Server Search” service to crawl and index all content that you want to be searchable (other than the Help system).

The “Windows SharePoint Services Help Search” service is the same service provided by “Windows SharePoint Services 3.0”, although in “Windows SharePoint Services 3.0” it is called the “Windows SharePoint Services Search” service. “Windows SharePoint Services 3.0” uses this service to index site content, index “Help” content, and service queries.

Installing a Search Index Server

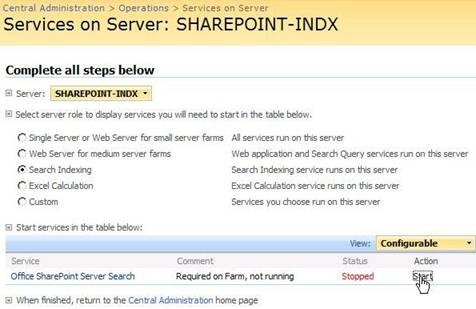

The installation of a server for Search is the same as with any other SharePoint server. Use the “Advanced” option during installation and add it to an existing farm. The piece that defines the server as a Search server is found in the “Central Administration” website under “Operations” and “Services on Server”.

An example of this screen is shown below for your convenience.

After clicking on the “Start” action, a wizard is displayed where you will need to specify some basic configuration information. In this wizard, you will select which WFEs (Web Front-End servers) will be used to crawl the content of sites in the SharePoint farm and add references to that content to the index.

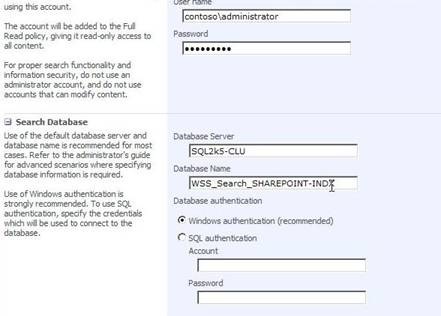

As part of the configuration, you will also need to create a database in which the index references will be stored. This is also done from within the Central Administration website.

Below is a screenshot of the information that must be specified when creating the database. When creating the database, be sure to give it a name that makes it easy to identify its purpose.

With the search database created, we need to look at the creation of a Shared Services Provider.

Shared Services Provider (SSP) Configuration

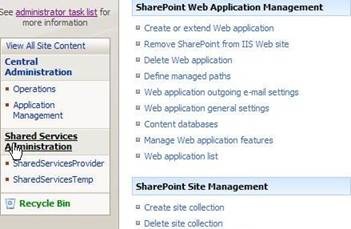

Once the services have been configured on the Search Index server and are running, you are down to the last step. An SSP (Shared Services Provider) must be created because it is the SharePoint object that provides the crawling functionality.

To start creating the Shared Services Provider, click on the Shared Services Administration link on the left side of the Central Administration website’s main page.

Begin by clicking on the New SSP link as seen below by the pointer.

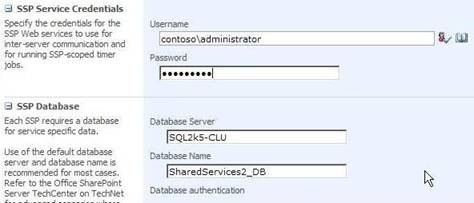

This opens a new page where you will specify the configuration information needed for SharePoint to create the provider. A database is also needed for the SSP, so give it a name that makes its purpose clear. Once the necessary information has been provided, click on the “OK” button at the bottom to allow SharePoint to create the provider.

Once complete, you should see a page like the following indicating that you have successfully created a Shared Services Provider.

Now, we need to figure out how to crawl the sites in the farm.

SSP Crawl Configuration

When the Shared Services Administration page opens for this SSP, click on the Search administration link under the Search section to begin the crawl configuration.

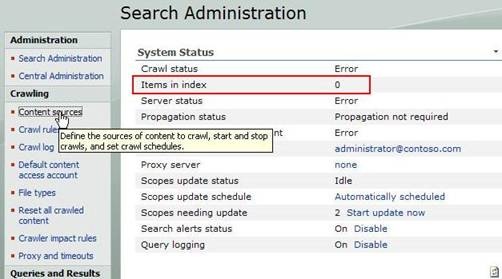

When the Search Administration window appears, notice that the Items in index value is ‘0’, which is expected because this SSP was just set up and has not yet been configured. Under the Crawling section on the left, click on the Content sources link to start the configuration.

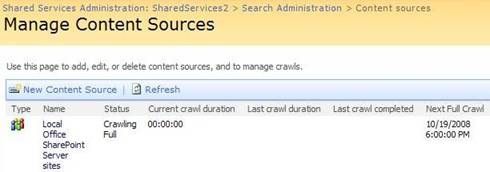

This will bring up the Manage Content Sources window.

After the Manage Content Sources window appears, there should only be a single entry in the list titled Local Office SharePoint Server sites. Click on this link as this is what will need to be configured in order to make this SSP useful.

At the top of the configuration page titled Edit Content Source, you have the option of changing the name to something more suitable. This is an optional entry.

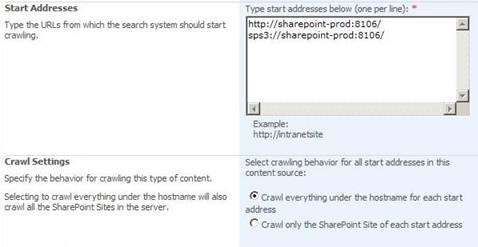

If there are other site addresses that you would like this SSP to crawl, enter them in the box as seen below. If you are unsure of the URLs of the SharePoint sites, there is an alternate location that we will discuss in a few steps that will set this up for you. You will only need to select radio buttons based on a list of sites.

Here, the settings begin to have more impact. In the Crawl Settings section, choose one of the two radio buttons, which essentially let you filter on whether the content is SharePoint in nature or not. Choosing the Crawl everything… radio button allows the crawl to capture the maximum amount of data but also opens up the searches to additional content that you may not be expecting. For a test environment, this is the best choice, provided that none of your SharePoint sites reference external sites on the Internet, because the crawl will catalog that Internet data as well.

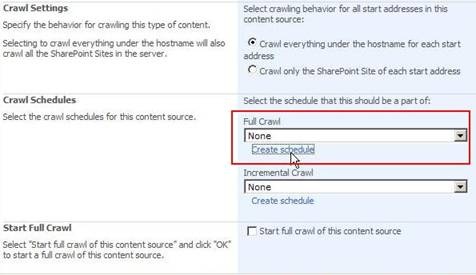

The last settings to make are for scheduling when the crawls will take place on the sites selected. The Create schedule links under the Full Crawl and Incremental Crawl sections let you configure a basic schedule for each type of crawl.

Each Create schedule link opens the following window, which offers several schedule types, each with its own settings. In a lab-style environment, the schedule can be quite lenient, with longer intervals. If you are testing or working in a rapidly changing environment, a shorter interval is recommended.
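If the difference between the two crawl types is unclear, the following Python sketch may help when choosing intervals. It assumes the usual meaning of the terms: a full crawl processes every item, while an incremental crawl processes only items changed since the previous crawl. The URLs, dates, and function names are hypothetical and do not represent SharePoint’s internals.

```python
from datetime import datetime

# Hypothetical items: URL -> last-modified timestamp.
ITEMS = {
    "http://farm/sites/hr/policy.aspx": datetime(2009, 1, 5),
    "http://farm/sites/it/backup.aspx": datetime(2009, 1, 20),
    "http://farm/sites/it/restore.aspx": datetime(2009, 2, 1),
}


def full_crawl(items):
    """A full crawl processes every item regardless of when it last changed."""
    return sorted(items)


def incremental_crawl(items, last_crawl):
    """An incremental crawl processes only items modified after the last crawl."""
    return sorted(url for url, modified in items.items() if modified > last_crawl)


last_crawl = datetime(2009, 1, 15)  # assumed time of the previous crawl
print(len(full_crawl(ITEMS)), "items in a full crawl")
print(incremental_crawl(ITEMS, last_crawl))
```

Because an incremental crawl touches less content, it can generally be scheduled more often than a full crawl.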

Click on OK to accept the schedule you have configured, and then select the Start full crawl of this content source checkbox so that when you click OK, the initial crawl will begin.

Depending upon how much configuration and modification you have done to the sites specified in the crawl list, the crawl should run in just a few minutes. The Status field should show Crawling Full when the page first loads. You can monitor the page or you can leave it and continue with your SharePoint work. Once the initial crawl is complete, however, a Search site should be set up, if one has not already been added, so that you can test the index information for accuracy.

Creating Crawl Rules

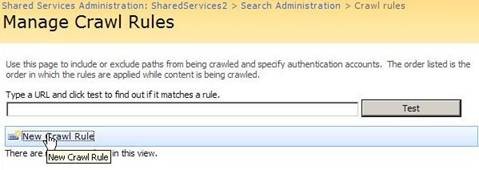

One more short configuration step is to specify the paths and authentication accounts for crawling. To open the crawl rules, click on the Crawl rules link under the Crawling section on the left side of the SSP’s Search Administration page.

Since this is a newly created SSP, there will be no rules. Click on the New Crawl Rule link to create rules for this SSP.
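Conceptually, a crawl rule pairs a path pattern with a decision about whether to crawl it and which account to use. The Python sketch below illustrates that idea only. It assumes that rules are checked in order, that the first match wins, and that URLs matching no rule are crawled by default. The class `CrawlRule`, the URLs, and the account name are hypothetical.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import Optional


@dataclass
class CrawlRule:
    path: str                      # wildcard pattern, e.g. "http://farm/sites/hr/*"
    include: bool                  # True = crawl matching URLs, False = exclude them
    account: Optional[str] = None  # hypothetical crawl account; None = default account


# Hypothetical rules, listed from most specific to least specific.
RULES = [
    CrawlRule("http://farm/sites/hr/private/*", include=False),
    CrawlRule("http://farm/*", include=True, account="CONTOSO\\svc-crawl"),
]


def match_rule(url, rules):
    """Return the first rule whose pattern matches the URL, or None (assumed ordering)."""
    for rule in rules:
        if fnmatchcase(url.lower(), rule.path.lower()):
            return rule
    return None


def should_crawl(url, rules):
    """Assumption: a URL that matches no rule is crawled by default."""
    rule = match_rule(url, rules)
    return True if rule is None else rule.include


print(should_crawl("http://farm/sites/hr/private/salaries.aspx", RULES))
print(should_crawl("http://farm/sites/it/backup.aspx", RULES))
```

Under these assumptions, order matters: placing the broad `http://farm/*` rule first would hide the more specific exclusion beneath it.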

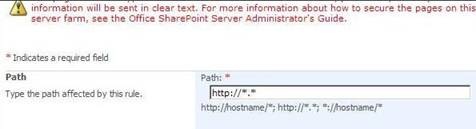

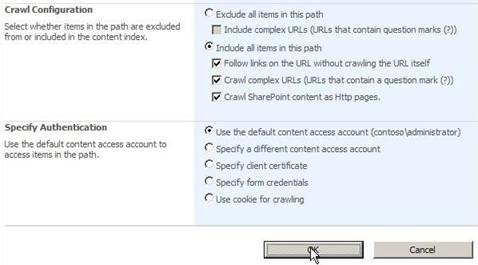

There are three sections to be configured here, and the following shows a test configuration that works. For simplicity, use these settings and click on OK to complete the configuration.

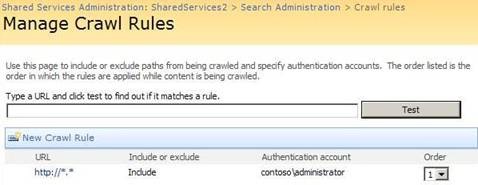

The Manage Crawl Rules page should return pretty quickly to let you know what configuration has been made. The crawl that you ran should be done by now as well.

Working under the premise that the crawl has been completed, a return to the Search Administration site should display entries like the following. Observe that the Items in index field now has a non-zero value indicating that there are entries in the index.

To supplement the high-level overview of the steps covered here, a video has been provided that will guide you from start to finish.

Video

Vic Reavis

Support Escalation Engineer

Microsoft Enterprise Platforms Support

![clip_image014[1] clip_image014[1]](https://msdntnarchive.blob.core.windows.net/media/TNBlogsFS/BlogFileStorage/blogs_technet/askcore/WindowsLiveWriter/IntroductiontoSharePointSearchIndexesfor_B459/clip_image014%5B1%5D_thumb.jpg)