Hyper-V 2012 R2 Network Architectures Series (Part 3 of 7) – Converged Networks Managed by SCVMM and PowerShell

As in the previous post about Non-Converged Network Architectures, let me start with the diagram and elaborate on my thoughts from there.

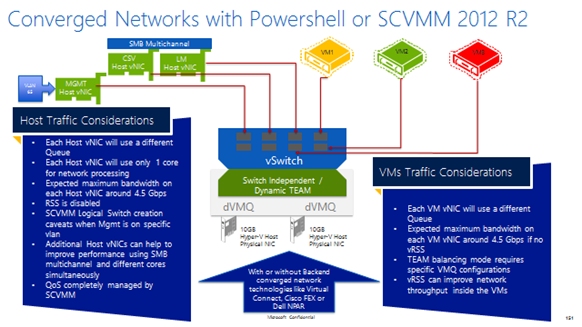

As you can see, this diagram has only 2 x 10 Gb physical network cards. This hardware configuration is becoming increasingly popular because 20 Gb of bandwidth should cover most customers' needs while simplifying the datacenter and reducing costs. However, this architecture does come with some caveats that must be mentioned to avoid potential performance issues.

So let's start with the positives of this option and leave the constraints for the end of the post.

- Simplified, centralized management from SCVMM 2012 R2. We can set up almost everything from Virtual Machine Manager 2012 R2 using the powerful Logical Switch options. Hyper-V host traffic partitioning, VM QoS and NIC Teaming are configured automatically by VMM once our Logical Switch template is applied to the Hyper-V host.

- VMM 2012 R2 can apply weight-based QoS, improving resource utilization according to need and priority. For example, we might want to guarantee a minimum bandwidth to the CSV and LM vNICs on the Hyper-V host to protect cluster reliability and stability.

- You can take advantage of SMB Multichannel across several vNICs to provide more bandwidth when needed. Each vNIC uses its own VMQ, so SMB Multichannel can spread the same traffic over more CPU cores and improve network throughput. CSV redirection and Live Migration traffic are good examples.

- You can use LACP if needed, provided both physical NICs are connected to the same physical switch (or switch stack). Whether this is desirable depends on your needs. An LACP team forces dVMQ into Min-Queues mode, so if you plan to run more VMs than there are available queues, this may not be the best decision. Alternatively, you can use a Switch Independent team with Hyper-V Port or Dynamic load balancing (which uses Sum-of-Queues mode) if you don't expect a single VM to need more than 10 Gb of throughput.
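To make the weight-based QoS point more concrete, here is a minimal Python sketch that turns relative weights into the minimum bandwidth each vNIC would be guaranteed when every vNIC is contending for the 2 x 10 Gb team. The vNIC names and weight values are hypothetical examples, not recommendations, and the model assumes the full 20 Gb of the team is available to share, which real traffic distribution across team members may not allow.

```python
"""Rough model of weight-based minimum bandwidth on a converged team.

Assumption: each weight is a relative share of the total team bandwidth,
and the shares only matter when every vNIC is contending for the link.
"""


def min_bandwidth_gbps(weights, team_gbps=20.0):
    """Return the guaranteed minimum bandwidth (Gbps) per vNIC under contention."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one weight must be positive")
    return {name: team_gbps * w / total for name, w in weights.items()}


if __name__ == "__main__":
    # Hypothetical weights for host vNICs plus a bucket for VM traffic.
    weights = {"Management": 10, "CSV": 20, "LiveMigration": 30, "VMs": 40}
    for name, gbps in min_bandwidth_gbps(weights).items():
        print(f"{name:>14}: {gbps:.1f} Gbps")
```

Because these are minimums rather than caps, a vNIC can still use idle bandwidth when the other vNICs are quiet; the weights only decide who wins when the link is saturated.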

This architecture seems really handy, right? I can manage almost all my network settings from VMM while preserving good network throughput… but let's talk about those caveats I mentioned:

- You lose RSS for the Hyper-V host network traffic. This can have a performance impact on your private cloud unless you mitigate the loss of RSS by creating several host vNICs and using SMB Multichannel across them. For example, Live Migration speed can suffer unless you take advantage of SMB Multichannel.

- Generally speaking, the team is a single point of failure. If for whatever reason the team has a problem, your entire network might be compromised, impacting host and cluster stability.

- VM deployments from the VMM library server will be processed by a single core, because the management vNIC's queue is handled by one core. This can reduce deployment and provisioning speed, which may be a minor or a major issue depending on how often you deploy VMs. One core can handle around 4 Gbps of throughput, so I don't really see this as a big constraint, but it is something to keep in mind.

- The number of available VMQs may be a concern if we plan to run a lot of VMs on this Hyper-V host. Traffic for VMs without a dedicated queue goes through the default queue, which interrupts CPU 0 by default.

- We still need to configure VMQ processor settings with PowerShell (for example, with Set-NetAdapterVmq).

- It is not possible to use SR-IOV with this configuration if the NICs are teamed at the host level.
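The queue-related caveats above (and the LACP point earlier) come down to simple arithmetic, so here is a back-of-the-envelope Python sketch. It assumes hypothetical per-NIC queue counts, that every host vNIC and VM vNIC wants one queue, and uses the post's rough figure of about 4 Gbps per core; it is an illustrative model, not a measurement or a description of how Windows allocates queues internally.

```python
"""Back-of-the-envelope VMQ capacity model for a 2 x 10 Gb converged team.

Assumptions (illustrative, not measured): per-NIC queue counts are
hypothetical, every host vNIC and VM vNIC wants one queue, and one core
handling a queue moves roughly 4 Gbps, as estimated in the post.
"""

GBPS_PER_CORE = 4.0  # rough single-core (per-queue) throughput estimate


def team_queues(queues_per_nic, mode):
    """Queues the team can hand out in Sum-of-Queues or Min-Queues mode."""
    if mode == "sum":  # e.g. Switch Independent with Hyper-V Port / Dynamic
        return sum(queues_per_nic)
    if mode == "min":  # e.g. an LACP (switch dependent) team
        return min(queues_per_nic)
    raise ValueError(f"unknown mode: {mode!r}")


def vnics_without_queue(host_vnics, vm_vnics, queues):
    """vNICs left on the default queue (serviced by CPU 0 by default)."""
    return max(0, host_vnics + vm_vnics - queues)


def estimated_gbps(vnics_used, link_gbps=20.0):
    """One core per vNIC queue, capped by the physical bandwidth."""
    return min(vnics_used * GBPS_PER_CORE, link_gbps)


if __name__ == "__main__":
    nics = [16, 16]  # hypothetical queue count per physical NIC
    for mode in ("sum", "min"):
        q = team_queues(nics, mode)
        left = vnics_without_queue(host_vnics=4, vm_vnics=40, queues=q)
        print(f"{mode}-queues: {q} queues, {left} vNICs on the default queue")
    # Live Migration over one vNIC vs. SMB Multichannel over three vNICs
    print(estimated_gbps(1), estimated_gbps(3))
```

With these made-up numbers, Min-Queues mode leaves more than twice as many vNICs on the default queue as Sum-of-Queues mode, and spreading a single traffic type over several vNICs raises its rough throughput ceiling until the physical links become the bottleneck.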

The strength of a Hyper-V converged network managed by SCVMM 2012 R2 and PowerShell is that around 90% of the required configuration can be done from VMM. However, there are some performance constraints we should be aware of before making any decision. As a general rule, I would say that this design delivers more performance than most environments today require, but in some cases we will need to consider different approaches.

Stay tuned for part 4!