Disaster: Sector “0” Blanked for Cluster CSV, VMM Incomplete Configuration

Earlier this week, I had the privilege of living through a special kind of hell: wondering what would happen if our development engineering team had to rebuild 53+ virtual machines hosted on a Failover Cluster with Cluster Shared Volumes (CSV) enabled. The first question is always “Why don’t you have backups?” We do, but let me tell you about what I believe was the perfect storm of irony.

In today’s post, I will walk you through this scenario so you can relive the unbelievable pain, and I hope you enjoy the entertainment value as much as the lessons learned. Let’s get started…

Scenario: Say goodbye to EMC AX4-5 Storage Area Network (SAN) & Hello to NetApp SAN

Our engineering development cluster has run great for 3+ years and has lived through host decommissions, failed drives, and even CSV expansions when space ran low. We’ve moved data around easily and pulled hosts in and out based on our needs. The datacenter is an interesting place, you see: hardware grows old and begins to show its age, though to EMC’s credit we didn’t really see that age. It was running and, for our needs, running well. However, it went out of support effective April 2012, and at that point we were putting our business at risk.

Enter NetApp with its great integration points with System Center 2012, and we couldn’t say no. There were other factors, but in the end we had 2 SANs racked side by side, one old and one new. The techie in me was thrilled, and we were excited to get the NetApp into production as it was bigger, faster, and newer. The LUNs were provisioned, presented to the hosts, and added to our Failover Cluster.

It’s time to migrate…

System Center 2012: VMM’s Migrate Storage Feature

With System Center 2012’s Virtual Machine Manager, you can easily migrate a VM from one storage location to another. You can do this manually or automate it using PowerShell. In our case, we decided to move manually, and this post focuses on the manual process for a single VM.

The steps were straightforward:

- Open the VMM Admin Console

- Expand the Failover Cluster in VMM’s host group, locate your Virtual Machine (VM)

- Right-click and choose Migrate Storage

- Select the storage, or in our case the CSV, location for both the configuration & virtual hard disks (VHDs) and click Next

- Select the host you’d like to host the VM (this can be the same as it is currently hosted on if you like) and click Next

- Click Move

NOTE: In some cases, you have non-highly available Virtual Machines that you’d like to move to your Highly Available infrastructure. In System Center 2012’s VMM, you can do this by using the Migrate Virtual Machine option and checking “Make this VM highly available”, which migrates both the configuration and the VHDs to the cluster.

This job will take time depending on the size and number of disks to move. Some of our VMs took 8 to 10 minutes, while others took 3 to 4 hours because they were large VMs with multiple disks running System Center 2012 platforms like Configuration Manager and Operations Manager.

For more in-depth information on the migrate storage feature, please review my blog at https://blogs.technet.com/b/chrad/archive/2009/09/07/virtualization-tip-migrating-to-san-s-after-the-fact-with-scvmm-using-r2-s-migrate-storage.aspx?CommentPosted=true that outlined the new feature when it appeared in VMM 2008 R2.

Life was good: Monday morning was pretty and 3.6 Terabytes had been migrated successfully

On Monday morning, we were nearly 75% done with the migration from the EMC to the new NetApp. Roughly 60+ Virtual Machines had been migrated, and they were all in the same state the team had left them in on Friday.

This is when you’d normally start the day with a meeting to talk about next steps. That is exactly what we did at 9:30, and we decided to provision one more LUN and add it to the cluster as an additional CSV to complete the migration off the EMC. The migration would continue the following weekend.

At 12:36 PM, I heard from some folks on my team that they were unable to access any VMs and the question was something like this -

“What did you do again this weekend, Chris?”

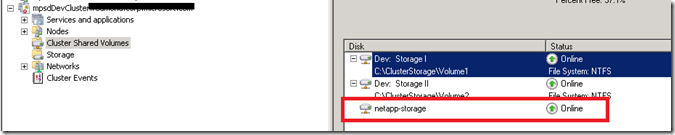

After hearing this enough times, I checked the Failover Cluster in Failover Cluster Manager and saw that nearly 75% of our Virtual Machines were offline. Looking at the event viewer, I saw that the new CSV had dismounted at 12:36, and I was shocked. The first visual indication came from checking the Cluster Shared Volumes in Failover Cluster Manager and realizing that the disk showed no indication of the volume:

As you can see, the NetApp CSV-based LUN was seen by the cluster yet had no volumes, and as a result the disk showed offline in Disk Management. Bringing it online produced a prompt to initialize the disk, which no one should do to an existing volume with data they’d like to retain, as this rewrites the partition table for an MBR or GPT-based volume.

Oh heck, what do we do now?

After working all day with NetApp and an internal Microsoft expert named James, I’m happy to say we solved this problem with no data loss whatsoever. I was @#$#% bullets until late Monday night, but I knew our team would check for any data problems in the morning, and they confirmed there were none.

James determined that Sector “0” of our CSV’s LUN was blanked and thus held no data the operating system could recognize. You might be tempted to treat this as a minor problem and simply re-initialize the disk, but doing so would blank out Sectors 1 & 2, which are very important in MBR/GPT volumes. James taught us a lot while we waited nervously to see if he could re-establish the sectors and get the cluster to recognize the volume again.
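To make “blanked” a little more concrete, here is a minimal, read-only sketch of the kind of check involved. It is not the tool or procedure James used. It assumes you already have a 512-byte copy of sector 0 in memory, and the function name `describe_sector0` is purely illustrative. It relies only on the standard layout: a valid sector 0 ends with the boot signature bytes 0x55 0xAA, and on a GPT disk the first partition entry carries type 0xEE (the protective MBR).

```python
SECTOR_SIZE = 512  # assumed logical sector size


def describe_sector0(sector: bytes) -> str:
    """Classify a raw copy of sector 0. Never writes anything."""
    if len(sector) != SECTOR_SIZE:
        raise ValueError("expected exactly one 512-byte sector")
    # A blanked sector is all zero bytes, which is what we ran into.
    if sector == bytes(SECTOR_SIZE):
        return "blank"
    # Bytes 510-511 hold the boot signature 0x55 0xAA on a valid sector 0.
    if sector[510:512] != b"\x55\xaa":
        return "no boot signature"
    # The partition table starts at offset 446; the type byte of the
    # first entry sits 4 bytes in. 0xEE marks a GPT protective MBR.
    if sector[450] == 0xEE:
        return "protective MBR (GPT disk)"
    return "MBR"
```

A sector that comes back as “blank” here is the situation where Windows offers to initialize the disk, and where you should stop and call someone.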

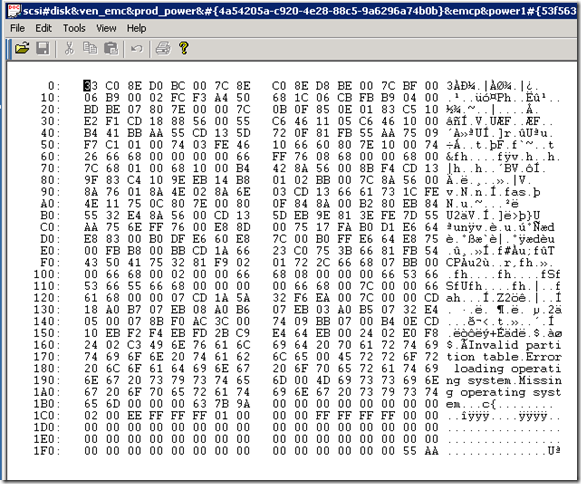

To do this, James sent us a tool called Disk Probe and started working with Go To Sector, copying sectors off to file, and taking screenshots, amazing us all the while as we prayed. In the end, he found that Sector 0 was blank and didn’t look like it “should.” For comparison, I’ve provided a sector 0 that isn’t blanked out in the screenshot below.

NOTE: This is the typical warning I’d like to share. If you are faint of heart, or pregnant, or whatever, I’d recommend you do not get this application and do anything with it. I will never touch it personally, as it allows you to edit sectors directly on the disk. James was a cool customer and obviously knew what he was doing, but if you don’t, call someone who does. You should know that James’s role at Microsoft is currently in our Customer Service & Support group, so you might get lucky and find him. Consider this your warning.
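The one step from that session that is safe to imitate is copying sectors off to a file before anything is changed. A rough sketch of that idea follows, under assumptions: `backup_leading_sectors` and both paths are hypothetical names, the source is something you can open for reading (for example a disk image), and 34 sectors is chosen because a standard GPT layout uses sector 0 for the protective MBR, sector 1 for the GPT header, and 32 sectors for the partition entries. It only reads from the source.

```python
def backup_leading_sectors(src_path, dst_path, count=34, sector_size=512):
    """Copy the first `count` sectors of src_path into a new file.

    The source is opened read-only. The destination is opened with
    mode "xb", so an existing backup file is never overwritten.
    Returns the number of bytes copied.
    """
    with open(src_path, "rb") as src:
        data = src.read(count * sector_size)
    with open(dst_path, "xb") as dst:
        dst.write(data)
    return len(data)
```

Having that file on another disk means there is always a known starting point to compare against, whatever happens next.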

After he’d done some “stuff” and saved it, the volume returned in our Failover Cluster Manager. The VMs, all 53 of them, could now start and be managed, which is when we decompressed.

Rebuilding Sector 0: Disk Signature Changes with Initialize Disk

As part of the exercise, James was able to pull key information from Sectors 1 & 2, which include the reserved partitions for Microsoft’s MBR/GPT volumes (I think). He took screenshots of Sectors 1 & 2 and then initialized the disk, which created a new disk signature. For your education, here are the disk signatures from before and after our sector 0 was blanked:

Before Sector 0 Corrupt: {B08C099D-54B4-476B-94A4-3A5B4C5ED03F}

After Sector 0 Corrupt: {5A038286-1631-40AC-A329-5BA4EC28A9C3}
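If you are curious where a value like this lives, here is a small sketch. It assumes the GUID-style signatures above are the disk GUID stored in the GPT header, which I have not confirmed for our LUN. In the standard GPT layout the header sits in sector 1, starts with the ASCII signature `EFI PART`, and keeps the disk GUID at byte offset 56 in mixed-endian form. The function names are illustrative only.

```python
import uuid


def gpt_disk_guid(sector1: bytes) -> uuid.UUID:
    """Extract the disk GUID from a raw copy of a GPT header (sector 1)."""
    if sector1[0:8] != b"EFI PART":
        raise ValueError("no GPT header signature found")
    # The 16-byte disk GUID at offset 56 is stored mixed-endian,
    # which is exactly what uuid.UUID(bytes_le=...) expects.
    return uuid.UUID(bytes_le=sector1[56:72])


def format_signature(guid: uuid.UUID) -> str:
    """Render a GUID in the {UPPERCASE} style used in this post."""
    return "{" + str(guid).upper() + "}"
```

Running this against copies of sector 1 taken before and after an Initialize Disk would show two different values, which is the kind of change the cluster notices.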

We noticed a flood of events being logged in the cluster, like the one below, which told us that the cluster was still unhappy overall.

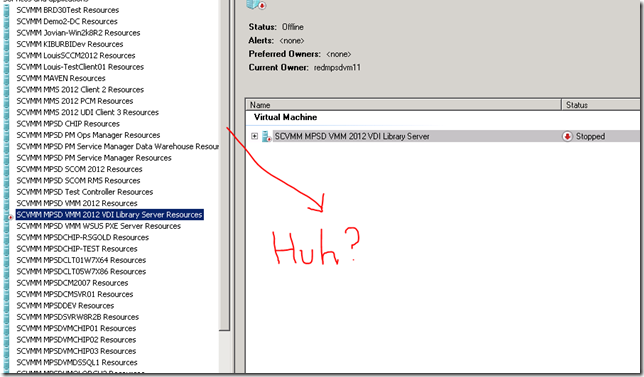

Although the VMs were running successfully, we noticed that every Virtual Machine under Services & Applications in the Failover Cluster Manager failed to show the volume resource binding like it normally does. We were unsure whether this impacted anything, and since the VMs were running, we decided to worry about it later.

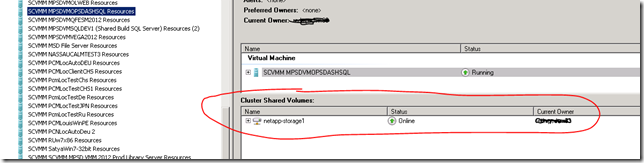

A normal view shows the resources required for the configuration such as drives, etc. I’ve shown a typical working example below in the graphic -

System Center 2012: VMM’s Incomplete VM Configuration

Because of the late hour, and having determined that the VMs were functional, we decided that the missing linkage to the Cluster Shared Volume wasn’t a problem. Only a few days later did we realize there might be a correlation between the state of the VMs in the cluster and the VMM state “Incomplete VM Configuration.” We couldn’t tell whether the disk signature was causing the issue, but thanks to the help of Hector from our VMM team we were able to determine that this was in fact a clustering issue and not a VMM one.

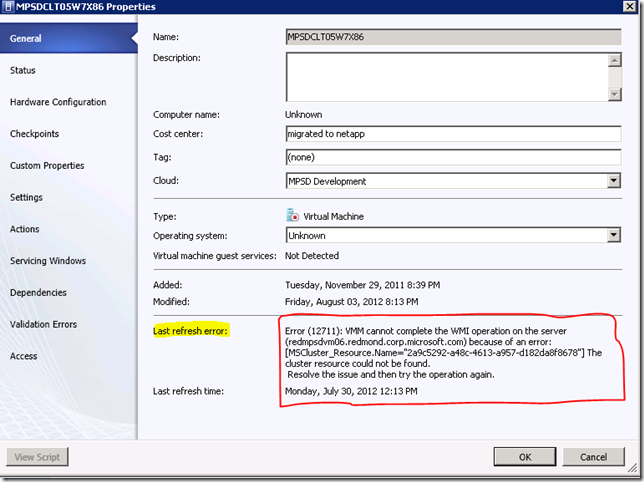

I wanted to step you through some of the VMM errors we were receiving during this to help you -

As you can see, we had an issue with MSCluster_Resource.Name=”{Guid}” and it was unhappy. The GUID was different for each VM, but the error 12711 was the same, and until this problem was corrected the VMs in VMM could not be refreshed.

Refreshing Cluster Configuration for Virtual Machines

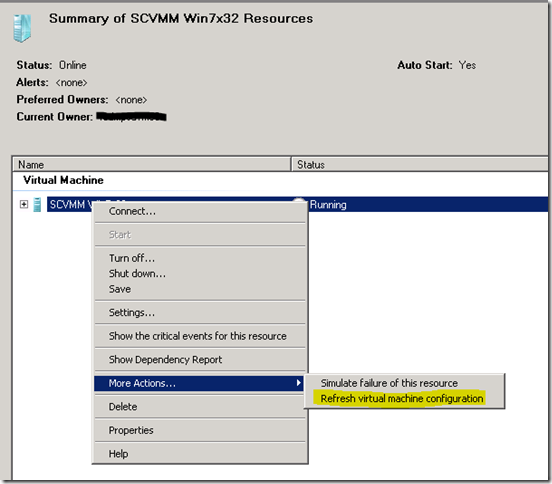

The magic bullet for getting the cluster back to a healthy state was the easy exercise of refreshing the virtual machine configuration so that the cluster would drop its cache (which was now inaccurate) and rebuild it. This lets it verify that everything is working. My major complaint is that the option is buried deep in the Failover Cluster Manager user experience, and many will not know it exists.

To refresh the configuration for a VM, do the following-

- Open Failover Cluster Manager

- Expand the cluster’s Services & Applications and click the Virtual Machine

- In the middle console, right-click on the virtual machine and select More Actions…

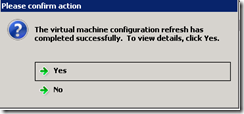

- Click Refresh virtual machine configuration

- After it completes, you will be prompted to see if you’d like to see the results – Click Yes or No

- Click off your VM (or refresh) and come back and you will now see the Disk resource binding

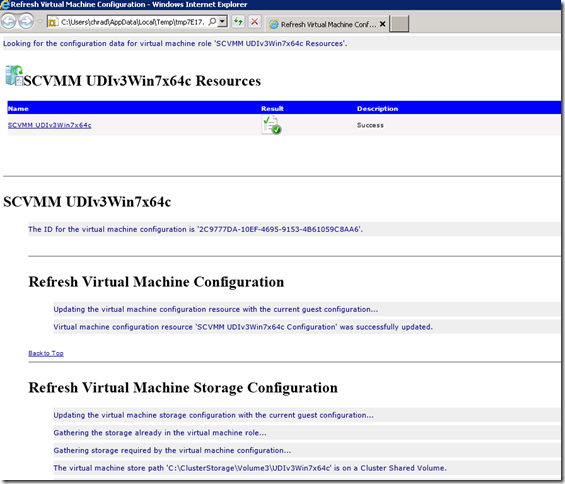

If the refresh was successful, you can view the report and it will look similar to the below. The result is that you now have a healthy VM in the cluster and you can move to fixing Virtual Machine Manager.

Virtual Machine Manager: Refresh Your Virtual Machine

Making the virtual machine happy in VMM is now so simple that it is a single right-click experience. Right-click the virtual machine and choose Refresh, and the refresh should now complete successfully. You should also refresh the entire cluster at this point, as it should now be healthy, and you are off to the races.

Summary

I hope this post was a bit entertaining while at the same time informative. As we continue this migration, we hold out hope for no more surprises, though there was a lot of learning taking place early this week. The key takeaways I hope you learned are -

- Using System Center 2012’s VMM Migrate Storage feature is super simple and easy – Use It!

- Sector “0” clearing is an exercise in futility, painful, and not for the faint of heart – Find an expert and befriend them until you leave this planet

- Failover Clusters can be unhealthy and still provide working VMs to end-users

- Refreshing a virtual machine configuration is easy; diagnosing that this is the issue, not so much

Enjoy!

Thanks,

-Chris