The server rejected the session-establishment request

Here is an interesting WCF exception you may have encountered before. In my case we were looking into a performance issue for a client.

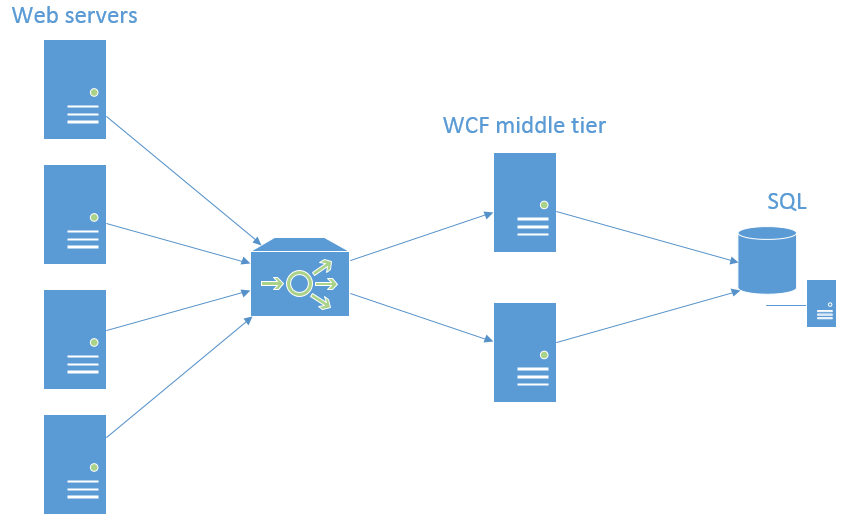

Environment

This setup is pretty common. A set of load-balanced web servers is talking to a load-balanced WCF middle tier. The middle tier interacts with the data source.

In this case, there are two WCF services: one streamed and one buffered. Both services use the net.tcp transport with binary encoding.

The Problem

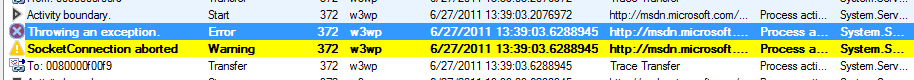

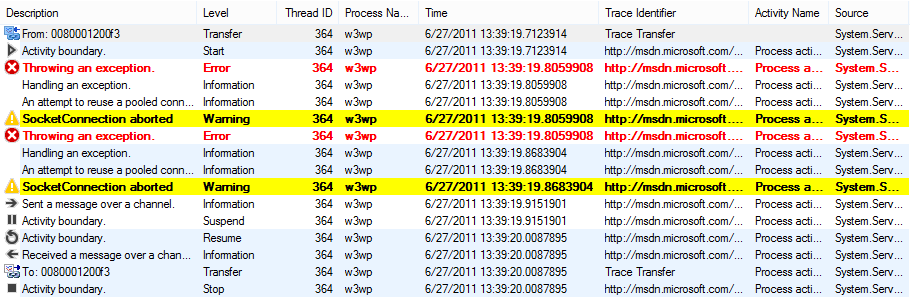

One of the symptoms that I dread the most when diagnosing WCF issues is the CommunicationException. It's a generic catch-all that gives no information as to what actually happened. You'll usually see something like this in the diagnostics logs.

The error message usually looks like this:

<Exception>

<ExceptionType>System.ServiceModel.CommunicationException, System.ServiceModel, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089</ExceptionType>

<Message>The socket connection was aborted. This could be caused by an error processing your message or a receive timeout being exceeded by the remote host, or an underlying network resource issue. Local socket timeout was '00:00:59.7348017'.</Message>

<StackTrace>...</StackTrace>

<ExceptionString>

System.ServiceModel.CommunicationException: The socket connection was aborted. This could be caused by an error processing your message or a receive timeout being exceeded by the remote host, or an underlying network resource issue. Local socket timeout was '00:00:59.7348017'. ---> System.Net.Sockets.SocketException: An existing connection was forcibly closed by the remote host

at System.Net.Sockets.Socket.Receive(Byte[] buffer, Int32 offset, Int32 size, SocketFlags socketFlags)

at System.ServiceModel.Channels.SocketConnection.ReadCore(Byte[] buffer, Int32 offset, Int32 size, TimeSpan timeout, Boolean closing)

--- End of inner exception stack trace ---

</ExceptionString>

<InnerException>

<ExceptionType>System.Net.Sockets.SocketException, System, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089</ExceptionType>

<Message>An existing connection was forcibly closed by the remote host</Message>

<StackTrace>

at System.Net.Sockets.Socket.Receive(Byte[] buffer, Int32 offset, Int32 size, SocketFlags socketFlags)

at System.ServiceModel.Channels.SocketConnection.ReadCore(Byte[] buffer, Int32 offset, Int32 size, TimeSpan timeout, Boolean closing)

</StackTrace>

<ExceptionString>

System.Net.Sockets.SocketException (0x80004005): An existing connection was forcibly closed by the remote host

at System.Net.Sockets.Socket.Receive(Byte[] buffer, Int32 offset, Int32 size, SocketFlags socketFlags)

at System.ServiceModel.Channels.SocketConnection.ReadCore(Byte[] buffer, Int32 offset, Int32 size, TimeSpan timeout, Boolean closing)

</ExceptionString>

<NativeErrorCode>2746</NativeErrorCode>

</InnerException>

</Exception>

This exception can happen for a lot of reasons. So it pays to dig around the log and look for other signs of trouble. There could be other kinds of exceptions buried in there or warnings about hitting throttling limits. In our case, there was another kind of exception mixed in that WCF eats by default.

You can see there is a sequence of events to this exception. After the exception is thrown, it is handled and a message is logged indicating that an attempt to reuse a pooled connection failed. The socket connection is aborted and then a retry is attempted. These exceptions are being eaten by WCF since you can see that after a couple attempts, the rest of the request continues. Note that this is coming from the client side. The exception itself looks like this:

<Exception>

<ExceptionType>System.ServiceModel.ProtocolException, System.ServiceModel, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089</ExceptionType>

<Message>The server at net.tcp://someserver/MyService.svc rejected the session-establishment request. </Message>

<StackTrace>

at System.ServiceModel.Channels.ConnectionUpgradeHelper.ValidatePreambleResponse(Byte[] buffer, Int32 count, ClientFramingDecoder decoder, Uri via)

at System.ServiceModel.Channels.StreamedFramingRequestChannel.SendPreamble(IConnection connection, TimeoutHelper& timeoutHelper, ClientFramingDecoder decoder, SecurityMessageProperty& remoteSecurity)

at System.ServiceModel.Channels.StreamedFramingRequestChannel.StreamedConnectionPoolHelper.AcceptPooledConnection(IConnection connection, TimeoutHelper& timeoutHelper)

at System.ServiceModel.Channels.ConnectionPoolHelper.EstablishConnection(TimeSpan timeout)

at System.ServiceModel.Channels.StreamedFramingRequestChannel.StreamedFramingRequest.SendRequest(Message message, TimeSpan timeout)

at System.ServiceModel.Channels.RequestChannel.Request(Message message, TimeSpan timeout)

at System.ServiceModel.Channels.ServiceChannel.Call(String action, Boolean oneway, ProxyOperationRuntime operation, Object[] ins, Object[] outs, TimeSpan timeout)

at System.ServiceModel.Channels.ServiceChannelProxy.InvokeService(IMethodCallMessage methodCall, ProxyOperationRuntime operation)

at System.ServiceModel.Channels.ServiceChannelProxy.Invoke(IMessage message)

at System.Runtime.Remoting.Proxies.RealProxy.PrivateInvoke(MessageData& msgData, Int32 type)

...

at System.Web.HttpApplication.SyncEventExecutionStep.System.Web.HttpApplication.IExecutionStep.Execute()

at System.Web.HttpApplication.ExecuteStep(IExecutionStep step, Boolean& completedSynchronously)

at System.Web.HttpApplication.ApplicationStepManager.ResumeSteps(Exception error)

at System.Web.HttpApplication.System.Web.IHttpAsyncHandler.BeginProcessRequest(HttpContext context, AsyncCallback cb, Object extraData)

at System.Web.HttpRuntime.ProcessRequestInternal(HttpWorkerRequest wr)

at System.Web.Hosting.ISAPIRuntime.ProcessRequest(IntPtr ecb, Int32 iWRType)

</StackTrace>

<ExceptionString>System.ServiceModel.ProtocolException: The server at net.tcp://someserver/MyService.svc rejected the session-establishment request. ---> System.ServiceModel.ProtocolException: Error while reading message framing format at position 0 of stream (state: ReadingUpgradeRecord) ---> System.IO.InvalidDataException: More data was expected, but EOF was reached.

--- End of inner exception stack trace ---

--- End of inner exception stack trace ---</ExceptionString>

<InnerException>

<ExceptionType>System.ServiceModel.ProtocolException, System.ServiceModel, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089</ExceptionType>

<Message>Error while reading message framing format at position 0 of stream (state: ReadingUpgradeRecord)</Message>

<StackTrace>

at System.ServiceModel.Channels.ConnectionUpgradeHelper.ValidatePreambleResponse(Byte[] buffer, Int32 count, ClientFramingDecoder decoder, Uri via)

at System.ServiceModel.Channels.StreamedFramingRequestChannel.SendPreamble(IConnection connection, TimeoutHelper& timeoutHelper, ClientFramingDecoder decoder, SecurityMessageProperty& remoteSecurity)

at System.ServiceModel.Channels.StreamedFramingRequestChannel.StreamedConnectionPoolHelper.AcceptPooledConnection(IConnection connection, TimeoutHelper& timeoutHelper)

at System.ServiceModel.Channels.ConnectionPoolHelper.EstablishConnection(TimeSpan timeout)

at System.ServiceModel.Channels.StreamedFramingRequestChannel.StreamedFramingRequest.SendRequest(Message message, TimeSpan timeout)

at System.ServiceModel.Channels.RequestChannel.Request(Message message, TimeSpan timeout)

at System.ServiceModel.Channels.ServiceChannel.Call(String action, Boolean oneway, ProxyOperationRuntime operation, Object[] ins, Object[] outs, TimeSpan timeout)

at System.ServiceModel.Channels.ServiceChannelProxy.InvokeService(IMethodCallMessage methodCall, ProxyOperationRuntime operation)

at System.ServiceModel.Channels.ServiceChannelProxy.Invoke(IMessage message)

at System.Runtime.Remoting.Proxies.RealProxy.PrivateInvoke(MessageData& msgData, Int32 type)

...

at System.Web.HttpApplication.SyncEventExecutionStep.System.Web.HttpApplication.IExecutionStep.Execute()

at System.Web.HttpApplication.ExecuteStep(IExecutionStep step, Boolean& completedSynchronously)

at System.Web.HttpApplication.ApplicationStepManager.ResumeSteps(Exception error)

at System.Web.HttpApplication.System.Web.IHttpAsyncHandler.BeginProcessRequest(HttpContext context, AsyncCallback cb, Object extraData)

at System.Web.HttpRuntime.ProcessRequestInternal(HttpWorkerRequest wr)

at System.Web.Hosting.ISAPIRuntime.ProcessRequest(IntPtr ecb, Int32 iWRType)

</StackTrace>

<ExceptionString>System.ServiceModel.ProtocolException: Error while reading message framing format at position 0 of stream (state: ReadingUpgradeRecord) ---> System.IO.InvalidDataException: More data was expected, but EOF was reached.

--- End of inner exception stack trace ---</ExceptionString>

<InnerException>

<ExceptionType>System.IO.InvalidDataException, System, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089</ExceptionType>

<Message>More data was expected, but EOF was reached. </Message>

<StackTrace>

at System.ServiceModel.Channels.ConnectionUpgradeHelper.ValidatePreambleResponse(Byte[] buffer, Int32 count, ClientFramingDecoder decoder, Uri via)

at System.ServiceModel.Channels.StreamedFramingRequestChannel.SendPreamble(IConnection connection, TimeoutHelper& timeoutHelper, ClientFramingDecoder decoder, SecurityMessageProperty& remoteSecurity)

at System.ServiceModel.Channels.StreamedFramingRequestChannel.StreamedConnectionPoolHelper.AcceptPooledConnection(IConnection connection, TimeoutHelper& timeoutHelper)

at System.ServiceModel.Channels.ConnectionPoolHelper.EstablishConnection(TimeSpan timeout)

at System.ServiceModel.Channels.StreamedFramingRequestChannel.StreamedFramingRequest.SendRequest(Message message, TimeSpan timeout)

at System.ServiceModel.Channels.RequestChannel.Request(Message message, TimeSpan timeout)

at System.ServiceModel.Channels.ServiceChannel.Call(String action, Boolean oneway, ProxyOperationRuntime operation, Object[] ins, Object[] outs, TimeSpan timeout)

at System.ServiceModel.Channels.ServiceChannelProxy.InvokeService(IMethodCallMessage methodCall, ProxyOperationRuntime operation)

at System.ServiceModel.Channels.ServiceChannelProxy.Invoke(IMessage message)

at System.Runtime.Remoting.Proxies.RealProxy.PrivateInvoke(MessageData& msgData, Int32 type)

...

at System.Web.HttpApplication.SyncEventExecutionStep.System.Web.HttpApplication.IExecutionStep.Execute()

at System.Web.HttpApplication.ExecuteStep(IExecutionStep step, Boolean& completedSynchronously)

at System.Web.HttpApplication.ApplicationStepManager.ResumeSteps(Exception error)

at System.Web.HttpApplication.System.Web.IHttpAsyncHandler.BeginProcessRequest(HttpContext context, AsyncCallback cb, Object extraData)

at System.Web.HttpRuntime.ProcessRequestInternal(HttpWorkerRequest wr)

at System.Web.Hosting.ISAPIRuntime.ProcessRequest(IntPtr ecb, Int32 iWRType)

</StackTrace>

<ExceptionString>System.IO.InvalidDataException: More data was expected, but EOF was reached.</ExceptionString>

</InnerException>

</InnerException>

</Exception>

ProtocolException

When sending a message over a connection, WCF will first establish the protocol by sending a few meaningful bytes. In this case, the WCF server has both a buffered service and a streamed service. These two transfer modes use different protocols. So if a connection was created by a buffered service call, added to the connection pool upon the buffered service call's completion, and then used by a streamed service call, that could explain the ProtocolException. We thought this could occur because the client side WCF connection pool for TCP is based on IP address. Since the two services were on the same server, we believed the clients were sharing the connection pool. To test our theory, we asked the client to set the connectionGroupName attribute in their client side configuration. Setting the group name allows you to isolate the connection pools. The client tried this but observed no change in behavior. The reason for this is streamed services don't use connection pools. Something else was causing the ProtocolException.

Creating a Repro

As your possible solutions become more difficult to test, the best thing to do is create a small reproduction of the issue. This can be tricky to get right. Most of the WCF repros I write take into account the following characteristics of the original service:

- Hosting - IIS, port sharing, self-host

- Binding - TCP, HTTP

- Types of services - quick responses, I/O intensive, CPU intensive

- Sync or async

- Transfer modes - buffered, streamed

In this case, there were both buffered and streamed, synchronous, I/O-bound (calling into a database) services using a TCP binding with IIS hosting (TCP and IIS implies port sharing).

This is the buffered service. The sleep is to simulate holding the thread while contacting the database. Usually database calls are quicker than this but sleeps like 1 or 2 seconds can help make problems easier to recreate and analyze.

And this is the streamed service:

Here is the configuration for the services. The custom binding used is equivalent to a nettcp binding. Using a custom binding will come in handy for trying different settings (such as the connection pool group name mentioned earlier).

On the client side I attempt to simulate load on the service by running many parallel requests:

The CorrelationManager.ActivityId is useful for grouping the events for a given request under unique ids. Otherwise when you look at the trace in svctraceviewer you'll see everything is grouped under the 00000 id.

The full solution is available at the bottom of the post.

When I tried running the code I initially had no luck reproducing the problem despite tweaking thread pool settings, the number of simultaneous requests, and the throttle settings on the services. So I tried a different approach and ran many client processes simultaneously using the following command line trick:

for /l %i in (1,1,5) do start TestClient.exe

The number 5 indicates that I want the for loop to execute 5 times. When I ran this I was able to reproduce the issue and get the exact same ProtocolException in the client side logs.

The Solution

With the repro it is now possible to tweak settings and quickly find out what's wrong. For instance, if you just run the buffered service by itself, the issue still repros meaning that it's not the combination of the buffered and streamed service. The real difference was that multiple client processes were talking to the same service. This is entirely possible when you're dealing with an environment where multiple web servers are calling into a WCF middle tier. The problem ended up being with the connection pool settings on the services and the fix is easy.

The service is keeping a pool of connections to the clients calling it. Also each client is keeping a pool of connections to the buffered service. By default, these two pools will be the same size. So one client and one server sending multiple simultaneous requests should work just fine. But when there are many clients, the service runs out of connections and starts closing down inactive ones. The client doesn't know the service closed the connection and only finds out when it gets the ProtocolException. That's why WCF eats the exception. The real problem was then that eventually all the connections would be active and after a client exhausted all the connections in the pool it would try to establish a new connection but the server would reject it, resulting in the CommunicationException. Bumping the maxOutboundConnectionsPerEndpoint fixed the issue.