"Extrapolation, Educated Guesses and Dart Boards, Oh My"

Here is another common question in the testing world: “What is the best way to take the results from our tests with a small load and estimate how the system will behave under a full load?” There are many reasons for asking this question, not least that testers often lack the equipment, environments, or time to run full-size performance tests. When this happens, people need to "figure out" the expected results. They can do this by extrapolating, by making educated guesses, or by pulling out the old dart board and throwing darts at the results.

I am fortunate enough to be able to drive full load in almost every engagement I do. This has let me see firsthand that extrapolating results is extremely tricky and often misleading. I would love to have a single answer, but the truth is that too many variables are involved. Some applications follow linear patterns, and others have enough real-world testing history to combine historical data with educated guesses, but those are still guesses. If you have to guess, you need to consider a ton of factors; I have listed a few of them at the end of the article.

Real World Testing Example 1

I once worked with a customer who had completed a round of testing about three months before the engagement I was helping with. The report from that previous round stated (paraphrased) that the work done so far, along with the metrics observed in the tests, indicated that they could add a single extra web server to the farm and reach their target throughput goal. The system had 7 web servers and was pushing 6.6 TPS at the end of the previous engagement. We started the next engagement with the same 7 web servers, and by the end of the lab we were using 11. We managed to get the customer to 6.76 TPS, which was still under the goal of 6.8 TPS.
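To put the gap in numbers, here is a minimal Python sketch that uses only the figures from this example. It assumes, purely for illustration, that the "one more server" prediction came from a naive per-server linear projection; the original report did not say how the prediction was derived. The constant and function names are hypothetical.

```python
# Figures from the example above. The linear per-server model is the naive
# assumption being illustrated (hypothetical), not a recommended method.
BASELINE_SERVERS = 7
BASELINE_TPS = 6.6
GOAL_TPS = 6.8
OBSERVED_SERVERS = 11
OBSERVED_TPS = 6.76


def linear_projection(servers: int) -> float:
    """Projected TPS if every web server contributed the same throughput."""
    return BASELINE_TPS / BASELINE_SERVERS * servers


def observed_gain_per_added_server() -> float:
    """Average TPS actually gained for each server added after the baseline."""
    return (OBSERVED_TPS - BASELINE_TPS) / (OBSERVED_SERVERS - BASELINE_SERVERS)


if __name__ == "__main__":
    print(f"Linear projection, 8 servers:   {linear_projection(8):.2f} TPS")
    print(f"Linear projection, 11 servers:  {linear_projection(11):.2f} TPS")
    print(f"Observed, 11 servers:           {OBSERVED_TPS:.2f} TPS (goal {GOAL_TPS})")
    print(f"Assumed gain per server:        {BASELINE_TPS / BASELINE_SERVERS:.3f} TPS")
    print(f"Observed gain per added server: {observed_gain_per_added_server():.3f} TPS")
```

With these figures the linear projection predicts about 7.54 TPS with 8 servers, comfortably over the 6.8 TPS goal, while the four servers actually added contributed roughly 0.04 TPS each instead of the assumed 0.94.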

Real World Testing Example 2

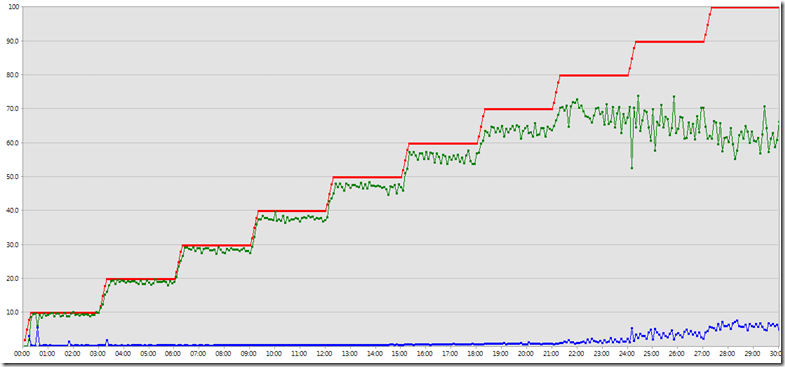

I like to use step ramp tests as part of the work in most of my customer engagements (along with all of the other typical tests), specifically to understand the behavior of an application (or a portion of the app) at various loads. The graph below shows the results from a load test I performed with a customer to understand the behavior of certain settings in their app-queue module. I chose this one because it clearly demonstrates one area where extrapolation can be very dangerous. In our case, we had enough horsepower in the rig and in the environment to drive load well past the expected load so I could easily use the results in my final report. However, what if we could have only driven load up to 50 users instead of 100?

| Counter | Instance | Category | Computer | Color | Range | Min | Max | Avg |
|---|---|---|---|---|---|---|---|---|
| User Load | _Total | LoadTest:Scenario | CONTROLLER | Red | 100 | 2 | 100 | 55 |
| Tests/Sec | _Total | LoadTest:Test | CONTROLLER | Green | 100 | 0 | 74 | 45.5 |
| Avg. Test Time | _Total | LoadTest:Test | CONTROLLER | Blue | 10 | 0.031 | 0.77 | 0.19 |

We can see that the throughput (tests/sec) gain shrinks a little on each step, but there is no indication that we will reach an absolute ceiling. In fact, we do not hit the ceiling until we reach 80 users. At 90 users and above, the queue's efficiency reverses and throughput starts to drop. Because I drove the load that far, the ceiling was easy to see; if I had stopped at 50 users, I would never have known about that limitation.
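To make the risk concrete without the customer's raw data, here is a minimal Python sketch that swaps in a synthetic curve: Neil Gunther's Universal Scalability Law, X(N) = λN / (1 + σ(N − 1) + κN(N − 1)), with made-up parameters (`LAMBDA`, `SIGMA`, `KAPPA`) chosen so throughput flattens out around 80 users and declines after that. It fits a straight line to the 10 to 50 user steps only, which is all a 50-user test would give you, and compares that projection with the synthetic curve. None of these numbers come from the test in the graph.

```python
# SYNTHETIC illustration: the USL parameters below are hypothetical, chosen only
# so the curve peaks near 80 users. They are not measurements from the graph.
LAMBDA = 1.5     # throughput per user with no contention
SIGMA = 0.02     # contention (serialization) coefficient
KAPPA = 0.00015  # coherency (crosstalk) coefficient


def usl_throughput(users: int) -> float:
    """Synthetic throughput at a given user load under the USL."""
    n = float(users)
    return LAMBDA * n / (1 + SIGMA * (n - 1) + KAPPA * n * (n - 1))


def fit_line(xs, ys):
    """Ordinary least-squares straight-line fit; returns (slope, intercept)."""
    count = len(xs)
    mean_x = sum(xs) / count
    mean_y = sum(ys) / count
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# A step ramp that only reaches 50 users, versus the full 100-user ramp
LOW_STEPS = [10, 20, 30, 40, 50]
ALL_STEPS = list(range(10, 101, 10))

slope, intercept = fit_line(LOW_STEPS, [usl_throughput(u) for u in LOW_STEPS])
peak_users = max(ALL_STEPS, key=usl_throughput)

if __name__ == "__main__":
    for users in ALL_STEPS:
        projected = slope * users + intercept
        print(f"{users:>3} users  linear {projected:6.1f}  synthetic {usl_throughput(users):6.1f}")
    print(f"Synthetic ceiling at {peak_users} users")
```

With these parameters the gain per step shrinks steadily up to 50 users without ever turning negative, much like the early steps described above, yet the straight-line projection at 100 users lands well above the synthetic curve (roughly 58 versus 34). Fitting a curved model instead only helps if you already know the right shape, which is exactly what the low-load data cannot tell you.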

The bottom line for me is that this type of testing is awesome for analyzing concrete results, but it does not necessarily provide useful information for extrapolation.

Considerations when there is no alternative

The following list shows a few things I consider when I look at application behavior under load and try to determine its limitations. It is by no means complete, and you will still need to interpret how each item is likely to behave as load increases:

- Network usage patterns:
  - overall throughput increases
  - connection pool sizes and thresholds
  - load balancer thresholds
- Web tier limitations:
  - physical resources such as CPU, disk, memory, etc.
  - web connection pool resources
  - web application pool worker resources and their thresholds
- Application limitations:
  - thread pool issues
  - deadlocking and blocking
  - off-box resource contention (see my post on Off-Box Inclusive Testing)
  - framework limitations (this applies to any type of framework, be it Microsoft, custom, or any other tool or 3rd-party app). These vary wildly and include any engine behaviors that are in play. You often do not even know that some of these behaviors exist, let alone how they will act at different loads.
  - licensing limitations (I have had tests fail because the customer did not own enough user licenses to drive to the anticipated load)
- Data tier limitations:
  - physical resources such as CPU, disk, memory, etc.
  - shared resource limitations (SAN, etc.)
  - data growth pattern behaviors
  - data maintenance and DB plan execution behaviors (index rebuilding, caching, size of data, etc.)
- Environment limitations:
  - virtual environments running on "under-powered" or "over-allocated" host systems
- Etc.
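When you have no choice but to estimate, some items in the list above are hard ceilings rather than curves: a license count or a pool size does not bend. The following minimal Python sketch, with entirely hypothetical names and numbers, checks estimated demand at the target load against such fixed limits. For the connection pool it swaps in Little's Law (average items in use = arrival rate × average hold time) to turn a request rate into concurrent connections; that estimate assumes the hold time measured at low load stays the same at full load, which the examples above suggest you should not count on.

```python
from dataclasses import dataclass


@dataclass
class HardLimit:
    """A fixed ceiling (licenses, pool size, ...) that load cannot pass."""
    name: str
    capacity: float          # what the environment allows
    demand_at_target: float  # estimated demand at the target load

    @property
    def headroom(self) -> float:
        return self.capacity - self.demand_at_target


def littles_law(arrival_rate_per_sec: float, avg_hold_time_sec: float) -> float:
    """Average number of resources in use: L = lambda * W (Little's Law)."""
    return arrival_rate_per_sec * avg_hold_time_sec


def blocked_by(limits: list[HardLimit]) -> list[str]:
    """Names of limits whose estimated demand meets or exceeds capacity."""
    return [limit.name for limit in limits if limit.headroom <= 0]


if __name__ == "__main__":
    # HYPOTHETICAL numbers for illustration only.
    target_users = 100
    limits = [
        HardLimit("user licenses", capacity=75, demand_at_target=target_users),
        # 150 requests/sec, each holding a DB connection for 0.4 s on average
        HardLimit("DB connection pool", capacity=100,
                  demand_at_target=littles_law(150, 0.4)),
    ]
    for limit in limits:
        print(f"{limit.name:<20} headroom {limit.headroom:6.1f}")
    print("Blocked by:", blocked_by(limits) or "nothing obvious")
```

A clean result only rules out the obvious ceilings. Little's Law gives an average, not a peak, so a pool with apparent headroom can still run dry under bursty load or when hold times grow.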