Super-fast Failovers with VM Guest Clustering in Windows Server 2012 Hyper-V - Become a Virtualization Expert in 20 Days! ( Part 14 of 20 )

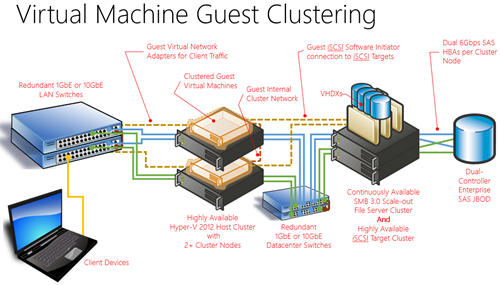

My good friend and colleague, Matt Hester, recently wrote a great article on Hyper-V Host Clustering as part of our latest article series, Become a Virtualization Expert in 20 Days! In this article, I’ll build on Matt’s cluster scenario to walk through the advantages and implementation steps for another type of clustering in a Hyper-V environment: Virtual Machine Guest Clustering. Along the way, we’ll explore some new features in Windows Server 2012 Failover Clustering that enhance Virtual Machine Guest Clustering: Virtual Fibre Channel SANs, Anti-Affinity and Virtual Machine Monitoring.

- Want to follow along as we step through Virtual Machine Guest Clustering? Follow these steps to build out a FREE Hyper-V Host Cluster upon which you can deploy one or more Virtual Machine Guest Clusters as we step through this article together.

DO IT: Step-by-Step: Build a FREE Hyper-V Server 2012 Host Cluster

What is Virtual Machine Guest Clustering?

Virtual Machine Guest Clustering allows us to extend the high availability afforded to us by Windows Server 2012 Failover Clustering directly to applications running inside a set of virtual machines that are hosted on a Hyper-V Host Cluster. This allows us to support cluster-aware applications running as virtualized workloads.

While the Hyper-V Host cluster can be running either Windows Server 2012 or our FREE Hyper-V Server 2012 enterprise-grade bare-metal hypervisor, Virtual Machine Guest Clustering requires a full copy of Windows Server 2012 to be running inside each clustered Virtual Machine.
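The Failover Clustering feature has to be installed inside each guest before the VMs can form a cluster. The sketch below assumes an elevated PowerShell session inside each Windows Server 2012 guest. `Install-GuestClusterFeature` is a hypothetical wrapper name; `Install-WindowsFeature` is the built-in Server Manager cmdlet.

```powershell
# Hypothetical helper: run INSIDE EACH guest cluster VM (elevated session).
# Installs the Failover Clustering feature plus its management tools.
function Install-GuestClusterFeature {
    $result = Install-WindowsFeature -Name Failover-Clustering -IncludeManagementTools
    if (-not $result.Success) {
        throw "Failover Clustering feature installation failed."
    }
    $result
}
```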

Why would I use Virtual Machine Guest Clustering if I already have a Hyper-V Host Cluster?

Great question! By clustering both between Hyper-V Hosts and within Virtual Machine Guests, you can improve your high availability scenarios by providing superfast failover during unplanned outages and improved health monitoring of the cluster services.

As Matt pointed out in his article, Hyper-V Host Clusters alone do provide a highly available virtualization fabric for hosting virtual machines, but if a host unexpectedly goes down, the VMs that were running on that host need to be restarted on a surviving host in the cluster. In this scenario, because VMs are completely restarted on the surviving host, the failover time includes the time it takes for the operating system and applications inside each VM to start up and complete initialization.

When cluster-aware applications are virtualized inside VMs, failover times can be dramatically reduced in the event of an unplanned outage. With Virtual Machine Guest Clustering, cluster-aware applications can fail over more quickly by moving just the active application workload, without restarting entire virtual machines. In addition, many cluster-aware applications, such as Continuously Available File Share Clustering and SQL Server 2012 AlwaysOn, provide managed client-side failovers without any end-user interruption to the application workloads or the need to restart client applications.

Should I use Hyper-V Host Clustering and Virtual Machine Guest Clustering Together?

Absolutely! You can use both types of clustering together to match your organization’s recovery time objectives ( RTO ) for business-critical application workloads. I generally recommend building the foundation for a resilient and scalable host server fabric by first deploying Hyper-V Host Clustering. This provides a standard base-level of platform high availability for all virtual machines deployed to the host cluster, regardless of whether the application workloads inside each VM support clustering. Then, for business-critical application workloads that are cluster-aware, you can enhance your availability strategy by also leveraging Virtual Machine Guest Clustering for those specific applications inside each VM.

Which Applications can I use with Virtual Machine Guest Clustering?

Lots of common applications can be leveraged with Virtual Machine Guest Clustering. In fact, as a general rule-of-thumb, if an application supports Windows Server Failover Clustering, it will be supported as part of a Virtual Machine Guest Cluster. Common application workloads with which I’ve seen particular value when configured as a Virtual Machine Guest Cluster include:

- Continuously Available File Shares

- Distributed File System Namespaces ( DFS-N )

- DHCP Server ( although, the new DHCP Failover capability in Windows Server 2012 is an attractive alternative )

- SQL Server 2012 AlwaysOn

- Exchange Mailbox Servers

- Custom Scheduled Tasks and Scripts

How should I configure Virtual Networks for my VM Guest Clusters?

Much like a physical host cluster, Virtual Machine Guest Clusters should have separate network paths for internal cluster communication vs client network communications. At a minimum, each VM in a Guest Cluster scenario should have 2 virtual network adapters defined, with the option for a third virtual network adapter if using shared iSCSI storage ( see the next section for more information on shared storage ).

Separate Virtual Networks for VM Guest Clustering

Each virtual network to which a VM Guest Cluster node is attached should be consistently configured across all Hyper-V hosts with the same name and set as an “external” virtual network in the Virtual Switch Manager within Hyper-V Manager. This will allow the VMs to communicate over these networks regardless of the host on which a VM is placed.

Tip: On the virtual network adapters to be used for internal cluster communications, do not configure an IP default gateway. This will cause the cluster service to prefer this network adapter for internal cluster communications when both virtual adapters are available.
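A minimal sketch of this network layout using the Hyper-V and TCP/IP cmdlets in Windows Server 2012. The function names, the switch names `Client` and `Cluster`, the physical NIC names and the IP address are hypothetical placeholders; the point is that switch names are identical on every host and the cluster-only adapter gets no default gateway.

```powershell
# Run on EVERY Hyper-V host. Physical NIC names are hypothetical placeholders.
# Identical switch names on every host let a guest VM run on any node.
function New-GuestClusterSwitch {
    param(
        [string]$ClientNic  = "Ethernet 1",
        [string]$ClusterNic = "Ethernet 2"
    )
    # Client switch shares its NIC with the host OS here (an assumption)
    New-VMSwitch -Name "Client"  -NetAdapterName $ClientNic  -AllowManagementOS $true
    New-VMSwitch -Name "Cluster" -NetAdapterName $ClusterNic -AllowManagementOS $false
}

# Run on the Hyper-V host that owns the VM: one virtual NIC per network.
function Add-GuestClusterAdapter {
    param([Parameter(Mandatory)][string]$VMName)
    Add-VMNetworkAdapter -VMName $VMName -SwitchName "Client"  -Name "Client"
    Add-VMNetworkAdapter -VMName $VMName -SwitchName "Cluster" -Name "Cluster"
}

# Run INSIDE each guest: address the cluster-only adapter WITHOUT -DefaultGateway.
function Set-GuestClusterIP {
    param(
        [Parameter(Mandatory)][string]$InterfaceAlias,
        [Parameter(Mandatory)][string]$IPAddress,
        [int]$PrefixLength = 24
    )
    New-NetIPAddress -InterfaceAlias $InterfaceAlias -IPAddress $IPAddress -PrefixLength $PrefixLength
}
```

For example, run `New-GuestClusterSwitch` on each host, run `Add-GuestClusterAdapter -VMName GuestNode1` while the VM is shut down (Windows Server 2012 cannot hot-add network adapters), then run `Set-GuestClusterIP -InterfaceAlias "Ethernet 2" -IPAddress 10.10.10.11` inside the guest, assuming `Ethernet 2` is the guest's cluster-only adapter.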

How can I present Shared Storage to Virtual Machine Guest Clusters?

Most clustered applications require shared storage that is accessible across all cluster nodes – whether those nodes are physical nodes, or in this case, virtual machines. To expose shared storage to clustered applications within virtual machines, two common approaches can be used in Windows Server 2012:

iSCSI Shared Storage – Each virtual machine can leverage the built-in iSCSI Software Initiator included in Windows Server 2012 to access shared iSCSI LUNs for cluster storage needs. This provides a very cost-effective way to present shared storage to virtual machines.

Don’t have iSCSI Shared Storage? Did you know that Windows Server 2012 includes a highly available iSCSI Target Server role that can be used to present shared storage from a Windows Server 2012 Failover Cluster using commodity SAS disks and server hardware? Check out the details at: Step-by-Step: Speaking iSCSI with Windows Server 2012 and Hyper-V.

Fibre Channel Storage – New in Windows Server 2012 is the ability to also leverage Fibre Channel storage within Virtual Machine Guest Clusters, supported by the new Virtual Fibre Channel HBA capabilities in Windows Server 2012 and our FREE Hyper-V Server 2012.
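For the iSCSI approach, the in-guest initiator setup can be scripted with the iSCSI cmdlets included in Windows Server 2012. This is a sketch under stated assumptions: `Connect-GuestClusterIscsi` is a hypothetical name, the portal address is a placeholder, and it would be run inside each VM that joins the guest cluster, after the target has been configured to allow each VM's initiator.

```powershell
# Hypothetical helper: run INSIDE each guest cluster VM (elevated session).
function Connect-GuestClusterIscsi {
    param([Parameter(Mandatory)][string]$PortalAddress)   # iSCSI target portal IP or name

    # The Microsoft iSCSI Initiator service is set to Manual by default
    Set-Service -Name MSiSCSI -StartupType Automatic
    Start-Service -Name MSiSCSI

    # Register the portal, then persistently connect each target not yet connected
    New-IscsiTargetPortal -TargetPortalAddress $PortalAddress | Out-Null
    Get-IscsiTarget | Where-Object { -not $_.IsConnected } |
        Connect-IscsiTarget -IsPersistent $true
}
```

For example, `Connect-GuestClusterIscsi -PortalAddress 10.0.2.50` inside each guest. Persistent connections are restored automatically when the VM restarts or moves to another host.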

I’d like to learn more about Virtual Fibre Channel Support … How does that work?

Virtual Fibre Channel support in Windows Server 2012 leverages Fibre Channel HBAs and switches that are compatible with N_Port ID Virtualization ( NPIV ). NPIV is leveraged by Windows Server 2012 Hyper-V and the FREE Hyper-V Server 2012 to define virtualized World Wide Node Names ( WWNNs ) and World Wide Port Names ( WWPNs ) that can be assigned to virtual Fibre Channel HBAs within the settings of each VM. These virtualized World Wide Names can then be zoned into the storage and masked into the LUNs that should be presented to each clustered Virtual Machine.

To use Virtual Fibre Channel in Windows Server 2012 Hyper-V or the FREE Hyper-V Server 2012, you can follow these steps:

- Install NPIV-compatible Fibre Channel HBAs in your Hyper-V host servers and connect those HBAs to NPIV-compatible Fibre Channel Switches.

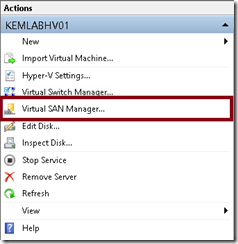

- Using Hyper-V Manager, click on the Virtual SAN Manager… host action to define a virtual Fibre Channel Switch on each Hyper-V host for each physical HBA.

Virtual SAN Manager Host Action

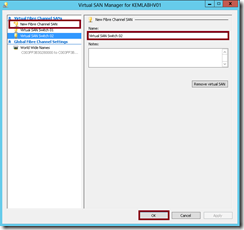

- In the Virtual SAN Manager dialog box, click New Fibre Channel SAN. In the Name: field, define a name for the Virtual Fibre Channel SAN Switch.

Virtual SAN Manager dialog box

Click the OK button to save your new Virtual SAN Switch definition. Repeat this step for each additional physical HBA on each Hyper-V Host Server.

NOTE: When defining the Virtual SAN Switch names on each Hyper-V Host, be sure to use consistent names across hosts to ensure that clustering will fail over correctly.

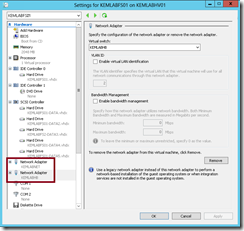

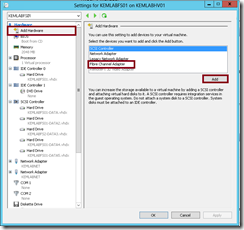

- After defining the Virtual Fibre Channel Switches on each Hyper-V Host, add new virtual Fibre Channel Adapters in the Settings of each VM.

VM Settings – Add Virtual Fibre Channel Adapters

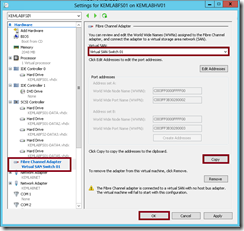

- Modify the properties of each newly added virtual Fibre Channel Adapter in the Settings of each VM to connect to the appropriate Virtual Fibre Channel Switch on the Hyper-V host.

Connect Virtual Fibre Channel HBA to Switch

- In the properties of the virtual Fibre Channel HBA ( shown in the figure above ), note that each virtual HBA is assigned two sets of World Wide Names, listed under Address Set A and Address Set B in the dialog box. To ensure proper failover clustering operation, both sets of addresses ( all four addresses ) must be zoned properly in your Fibre Channel Switches and masked properly to your Fibre Channel Storage Arrays and LUNs.

To simplify zoning and masking on your Fibre Channel SAN, click the Copy button to copy these WWNs to your clipboard for pasting into your Fibre Channel SAN management tools.

Tip: Do not use Fibre Channel WWN Auto-Discovery on your SAN to attempt to discover these WWN addresses. Doing so will only discover one set of addresses ( the set that is currently active ) and will not discover the second set of addresses assigned to each virtual HBA.

Completed! Once you’ve performed these steps for each Hyper-V Host and VM that will participate in the Virtual Machine Guest Cluster, you should have storage that you can format and use as shared storage within your cluster!
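The Virtual SAN Manager and VM Settings steps above can also be scripted with the Hyper-V module in Windows Server 2012. A minimal sketch: the function names, the `FC-SAN-` naming prefix and the VM names are hypothetical, and the automatic SAN naming assumes the physical Fibre Channel ports enumerate in the same order on every host. If they do not, name the virtual SANs explicitly so the names still match across hosts.

```powershell
# Hypothetical helpers for the Virtual Fibre Channel steps.

# Run on EVERY Hyper-V host: one virtual SAN per physical FC port, named
# FC-SAN-A, FC-SAN-B, ... ASSUMES the same port order on every host.
function New-GuestClusterVMSan {
    param([string]$Prefix = "FC-SAN-")
    $ports = @(Get-InitiatorPort -ConnectionType FibreChannel)
    for ($i = 0; $i -lt $ports.Count; $i++) {
        $name = $Prefix + [char](65 + $i)   # 65 = 'A'
        New-VMSan -Name $name -HostBusAdapter $ports[$i]
    }
}

# Run on the host that owns the VM: add one virtual FC HBA per virtual SAN.
function Add-GuestClusterFcHba {
    param(
        [Parameter(Mandatory)][string]$VMName,
        [Parameter(Mandatory)][string[]]$SanName
    )
    foreach ($san in $SanName) {
        Add-VMFibreChannelHba -VMName $VMName -SanName $san
    }
}

# List ALL FOUR WWNs (Address Set A and B) of each virtual HBA for zoning and
# masking, instead of relying on SAN auto-discovery (which sees only the active set).
function Get-GuestClusterWwn {
    param([Parameter(Mandatory)][string]$VMName)
    Get-VMFibreChannelHba -VMName $VMName | ForEach-Object {
        [pscustomobject]@{
            VMName  = $VMName
            SanName = $_.SanName
            WWNN_A  = $_.WorldWideNodeNameSetA
            WWPN_A  = $_.WorldWidePortNameSetA
            WWNN_B  = $_.WorldWideNodeNameSetB
            WWPN_B  = $_.WorldWidePortNameSetB
        }
    }
}
```

For example, run `New-GuestClusterVMSan` on each host, then `Add-GuestClusterFcHba -VMName GuestNode1 -SanName FC-SAN-A,FC-SAN-B` and `Get-GuestClusterWwn -VMName GuestNode1 | Export-Csv .\GuestNode1-wwn.csv -NoTypeInformation` to hand every WWN to whoever manages zoning and masking.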

How can I ensure the availability of the overall Virtual Machine Guest Cluster?

Another great question! To ensure availability of the overall Virtual Machine Guest Cluster, consider these additional settings, which help keep the VMs that belong to the same VM Guest Cluster on separate Hyper-V Hosts:

- Set Preferred Owners for each VM Guest Cluster member. At your Hyper-V Host Cluster, use Failover Cluster Manager to set a different Preferred Owners order in the properties of each clustered VM.

Preferred Owners for each VM on the Host Cluster

- Set the same Anti-Affinity Group Name on each VM in the same VM Guest Cluster. Using PowerShell on one of your clustered hosts, configure the same Anti-Affinity Group name for each VM that is a member of the same Guest Cluster with the following commands:

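```powershell
# Anti-affinity class names are stored as a StringCollection
$AntiAffinityGroup = New-Object System.Collections.Specialized.StringCollection
$AntiAffinityGroup.Add("MyVMCluster01") | Out-Null

# Repeat for the cluster group of each VM in the same guest cluster
(Get-ClusterGroup "MyVMName").AntiAffinityClassNames = $AntiAffinityGroup
```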

Be sure to run the last command line for each VM that is a member of your VM Guest Cluster. Setting a consistent Anti-Affinity Group Name on each VM causes the Hyper-V Host cluster to attempt to place each VM on a separate host during failover scenarios.

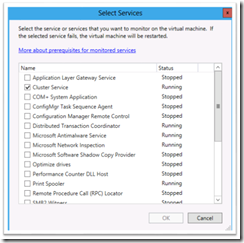

- Configure VM Monitoring for the Cluster Service inside each VM. At your Hyper-V Host Cluster, use Failover Cluster Manager to configure VM monitoring for the Cluster Service inside each VM that is a member of a VM Guest Cluster.

To configure VM Monitoring, right-click on each VM in Failover Cluster Manager and select More Actions –> Configure Monitoring from the pop-up menu.

Select Services for VM Monitoring

In the Select Services dialog box, select Cluster Service and click the OK button.

After configuring VM Monitoring within Failover Cluster Manager, configure the Cluster Service in the Services properties inside each VM to Take No Action for second and subsequent failures of the service.

Cluster Service Properties inside each VM

By configuring the Cluster Service for VM Monitoring using the steps above, the Hyper-V Host Cluster will proactively monitor the Cluster Service inside each VM that is a member of the VM Guest Cluster. If the Cluster Service stops and is not restarted after the first attempt, the Hyper-V Host Cluster will restart and/or fail over the entire VM in an attempt to return the Cluster Service to a healthy state.
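The three host-cluster settings above ( Preferred Owners, Anti-Affinity and VM Monitoring ) can be combined into one script. This sketch assumes it runs on a node of the Hyper-V Host Cluster and that each VM's cluster group carries the same name as the VM; `Get-RotatedOwner` and `Set-GuestClusterPlacement` are hypothetical helper names. The Cluster Service recovery settings inside each VM are still configured separately, as described above.

```powershell
# Hypothetical helpers, run on a node of the Hyper-V HOST cluster.

# Rotate the host list so each guest cluster VM gets a different Preferred Owners order.
function Get-RotatedOwner {
    param([string[]]$HostNode, [int]$Offset)
    $n = $HostNode.Count
    for ($j = 0; $j -lt $n; $j++) { $HostNode[($j + $Offset) % $n] }
}

function Set-GuestClusterPlacement {
    param(
        [Parameter(Mandatory)][string[]]$VMGroup,    # clustered VM group names (assumed = VM names)
        [Parameter(Mandatory)][string[]]$HostNode,   # Hyper-V host cluster nodes
        [Parameter(Mandatory)][string]$ClassName     # anti-affinity group name
    )
    # Same anti-affinity class name for every VM of this guest cluster
    $classes = New-Object System.Collections.Specialized.StringCollection
    [void]$classes.Add($ClassName)

    for ($i = 0; $i -lt $VMGroup.Count; $i++) {
        # 1. A different Preferred Owners order per VM
        Set-ClusterOwnerNode -Group $VMGroup[$i] -Owners (Get-RotatedOwner -HostNode $HostNode -Offset $i)
        # 2. The shared anti-affinity class name
        (Get-ClusterGroup -Name $VMGroup[$i]).AntiAffinityClassNames = $classes
        # 3. VM Monitoring of the Cluster Service (ClusSvc) inside the guest
        Add-ClusterVMMonitoredItem -VirtualMachine $VMGroup[$i] -Service ClusSvc
    }
}
```

For example, `Set-GuestClusterPlacement -VMGroup GuestNode1,GuestNode2 -HostNode HV01,HV02,HV03 -ClassName MyVMCluster01`, then check the result with `Get-ClusterGroup | Format-Table Name, AntiAffinityClassNames` and `Get-ClusterVMMonitoredItem -VirtualMachine GuestNode1`. Rotating the host list is just one simple way to give each VM a distinct owner order.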

Want more? Become a Virtualization Expert in 20 Days!

This month, my fellow Technical Evangelists and I are writing a new blog article series, titled Become a Virtualization Expert in 20 Days! Each day we’ll be releasing a new article that focuses on a different area of virtualization as it relates to compute, storage and/or networking. Be sure to catch the whole series at:

After you’re done reading the series, if you’d like to learn more and begin preparing for MCSA certification on Windows Server 2012, join our FREE Windows Server 2012 “Early Experts” online study group for IT Pros at: