MSF V2 CTP2 Deep Dive – Memory Based Batching

It's been quite a while since I posted about MSF v2 CTP2. As promised in my earlier posts, I will be doing a series of deep dives into the new SyncServices features added in MSF V2 CTP2. Today I am starting off with the batching feature.

One of the most common pieces of feedback we received about the peer providers shipped with MSF V1 was the inability to sync changes in batches. Customers using DbSyncProvider frequently complained of OutOfMemory exceptions when synchronizing large amounts of data across databases. The MSF workflow did support the notion of batching, but the relational peer providers did not take advantage of the feature.

Background:

When we decided to support batching we looked back at how the hub-spoke providers supported it. For hub-spoke we supported batched download of data from the server by modifying the enumeration query to return rows in batches. Even though this solved the OOM issue with large data, it had some inherent limitations. Some of the problems were:

- The batching code had to be hand coded by the user for each sync schema, which meant a higher probability of user error.

- Batching was not supported for uploads from the clients.

- There was no easy way to estimate the number of batches you would need. The solution computed batch size by dividing the current tick count by the last sent tick count for any table. This meant that the batch size was fixed even if the table had 0 changes between the last sent tick count and the current time, so the SyncAgent would return empty batches.

- Batching was based on the number of rows, which made it harder to predict when the data would be too big.

- In general, usability was hindered because the batching SP was complex and highly involved. The SP became even more complex if the anchors were not timestamp based.

One other problem unique to the peer providers was the use of SyncKnowledge to compute the changes that need to be synchronized. In hub-spoke these were simple timestamp anchors that users used to compute what had changed in a table, and each table had its own anchor which did not collide with other tables. In peer providers the SyncKnowledge represents the “knowledge” of all tables for a given sync scope. Any disparity between what the knowledge represents about a replica and the actual data means that data will not synchronize, resulting in data non-convergence in your sync topology.

Motivation for Batching:

When we designed batching we wanted to address all the above shortcomings. We also wanted to reduce the probability of user errors when computing batches. The primary purpose of batching is to avoid running out of memory when synchronizing large amounts of data. The most straightforward way to support batching would be to batch by rows. This works for most cases but does not solve the problem mentioned earlier: how does the user compute how many rows the client can handle? A desktop machine might have enough processing power to consume 10,000 rows at a time, but a handheld device might only be able to handle 100 rows. Moreover, on a desktop the sync session has to share the available memory, which means that under high load the number of rows that a sync session can handle without running out of memory rapidly declines.

The primary goal of the sync developer is to successfully sync data from one replica to another without running out of memory. Basically, the one and only goal of batching is to ensure that incremental progress can be made with the memory available on the machine. Since one of the most deterministic resources a developer has control over is the available memory, we decided to design batching around memory usage. The developer puts a limit on the maximum size of the data being synchronized. This works wonderfully well as it makes batching an on-demand operation. If row based batching were used, the system would have to send ‘N’ batches no matter how small the individual rows were. With memory based batching, if all the changes that need to be sent to the destination fit within the specified maximum size, batching is bypassed.

We also wanted batching to work for all kinds of relational stores (SQL Server, SQL Server CE and any ADO.NET capable database). We wanted to achieve all of this with as little input from the user as possible. We did not want customers to reinvent the wheel around batching logic for every sync application they wrote.

Batching Workflow:

With the motivations for batching being clear, we decided to enable batching via a simple switch on the RelationalSyncProvider. We added a new property, MemoryDataCacheSize, which users can set to limit the amount of memory (in KB) used by the synchronizing rows. The data cache limit can be set on both providers, and the runtime will use the lower size to ensure that one peer does not send data that cannot be handled by the destination. The sync runtime will use batched mode even if only one of the peers has specified a cache size.
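The "lower size wins" rule can be illustrated with a small Python sketch. This is not the MSF API: the function name is hypothetical, and treating 0 as "not specified" is an assumption made for the illustration.

```python
from typing import Optional


def effective_cache_size_kb(source_kb: int, destination_kb: int) -> Optional[int]:
    """Return the cache size (in KB) that both peers can live with.

    Assumption for this sketch: 0 means the provider did not specify a size.
    Returns None when neither peer asked for batching.
    """
    specified = [kb for kb in (source_kb, destination_kb) if kb > 0]
    if not specified:
        return None  # neither peer set a limit, so no batching
    # One specified value is enough to turn batching on; the lower one wins.
    return min(specified)


print(effective_cache_size_kb(5 * 1024, 1024))
print(effective_cache_size_kb(0, 2048))
print(effective_cache_size_kb(0, 0))
```

Picking the minimum guarantees that the enumerating peer never produces a batch larger than the smaller of the two limits.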

Batched Enumeration:

The workflow of batching is quite simple. The destination provider communicates its cache size by returning it to the SyncOrchestrator from its GetSyncBatchParameters() call. This is in turn passed to the enumerating peer in the GetChangeBatch() call. Here is the workflow of the batched enumeration process.

- If batching is enabled then start a background thread that will compute the enumeration.

- Runtime starts by querying the list of tables mentioned in the DbSyncAdapters list.

- For each table it retrieves the ADO.NET DataReader for its corresponding enum query.

- For each row, before reading the column values, it computes the size of the row.

- If current_Data_Cache_Size + Next_Row_Size <= (110% * the specified MemoryDataCacheSize), it reads the row into memory.

- If pulling in the new row would exceed that limit, it spools the current in-memory rows to a file. It then cleans up its data cache and continues reading changes.

- Batches are spooled to the user-specified directory configured through the RelationalSyncProvider.BatchingDirectory property.

- The above steps are repeated till all the tables have been enumerated.

As you can see batch files are spooled to disk only when the data exceeds the memory size specified. If all changes fit in memory then the system reverts back to non batching mode where all changes are sent to destination in memory.

The runtime will try to stuff a batch with data as long as the in-memory size of that data does not exceed the specified batch size by more than 10%. This is to guard against sending a large number of underpopulated batches.

There are times when a single row would be greater than 110% of the specified data cache size. In such cases the runtime errors out with an exception message that lists the offending table and the primary key of the row that is too big. Users would need to increase the data cache size to accommodate that row.
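The enumeration steps, the 10% tolerance and the oversized-row error described above can be sketched in Python. This is a simplified simulation, not the runtime's code: rows are reduced to (table, primary key, size) tuples, a "spooled" batch is just a list, and all names are hypothetical.

```python
from typing import Iterable, List, Tuple

Row = Tuple[str, int, int]  # (table name, primary key, row size in bytes)


class RowTooLargeError(Exception):
    """Raised when a single row cannot fit even in an otherwise empty batch."""


def enumerate_batches(rows: Iterable[Row], cache_size_bytes: int) -> List[List[Row]]:
    limit = cache_size_bytes * 110 // 100  # cache size plus the 10% tolerance
    batches: List[List[Row]] = []
    current: List[Row] = []
    current_size = 0
    for table, key, size in rows:
        if size > limit:
            # Mirrors the error that names the offending table and key.
            raise RowTooLargeError(
                f"row {key} in table {table} is {size} bytes; limit is {limit}"
            )
        if current_size + size > limit:
            batches.append(current)  # stands in for spooling to a batch file
            current, current_size = [], 0
        current.append((table, key, size))
        current_size += size
    if current:
        batches.append(current)
    # A single returned batch corresponds to the non-batched case:
    # everything fit in memory and nothing needed to be spooled.
    return batches


rows = [("OrderDetails", i, 400) for i in range(5)]
print([len(b) for b in enumerate_batches(rows, 1000)])
```

With a 1,000 byte cache the limit is 1,100 bytes, so two 400 byte rows fit in a batch and the third forces a spool.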

Since enumeration happens in the background, we employ a producer/consumer model between the main thread's GetChangeBatch() call and the background enumeration thread. The main consumer thread blocks until the producer spools a batch (or reverts to non-batched mode if all changes fit in memory) and then reports this batch information to the destination provider.
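A minimal Python sketch of that producer/consumer handoff, using a thread and a blocking queue. The function names are hypothetical and the batches are plain lists; only the blocking behaviour is meant to match the description.

```python
import queue
import threading
from typing import List, Tuple


def producer(batches: List[list], out_queue: "queue.Queue[Tuple[list, bool]]") -> None:
    """Background enumeration: hand over each batch as soon as it is ready."""
    if not batches:
        out_queue.put(([], True))  # nothing to send; still unblock the consumer
        return
    for index, batch in enumerate(batches):
        is_last = index == len(batches) - 1
        out_queue.put((batch, is_last))


def get_change_batch(out_queue: "queue.Queue[Tuple[list, bool]]") -> Tuple[list, bool]:
    """Consumer side: blocks until the producer has a batch available."""
    return out_queue.get()


def run(batches: List[list]) -> List[list]:
    handoff: "queue.Queue[Tuple[list, bool]]" = queue.Queue()
    worker = threading.Thread(target=producer, args=(batches, handoff), daemon=True)
    worker.start()
    received = []
    while True:
        batch, is_last = get_change_batch(handoff)
        received.append(batch)
        if is_last:
            break
    worker.join()
    return received


print(run([["row1", "row2"], ["row3"], ["row4"]]))
```

The queue preserves the order in which batches were produced, so the consumer sees them in the order they were enumerated.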

Batched Apply:

Relational providers use the type DbSyncContext to communicate changes between the source and the destination providers. With batching we have added three new properties to this type to facilitate working with batches. The three properties added are:

public string BatchFileName { get; set; } ==> Points to the actual spooled batch file.

public bool IsLastBatch { get; set; } ==> Set on the last batch of the session.

public bool IsDataBatched { get; set; } ==> Indicates whether the changes are being sent in batches.

When the destination receives a batched DbSyncContext it will queue the context. It will queue all batches till it receives the last batch at which point it will open a transaction, deserialize each batch, apply its contents and then finally commit the transaction. Destination provider will apply the batches in the same FIFO order it received them from the source provider.
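The queue-then-apply behaviour can be sketched in Python as follows. The class and field names are hypothetical stand-ins that loosely mirror the DbSyncContext properties, the batch file names are made up, and the "transaction" is reduced to a commit counter.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List


@dataclass
class BatchContext:
    batch_file_name: str
    is_last_batch: bool
    is_data_batched: bool = True


class Destination:
    def __init__(self) -> None:
        self.pending: Deque[BatchContext] = deque()
        self.applied: List[str] = []
        self.commits = 0

    def receive(self, context: BatchContext) -> None:
        """Queue every batch; start applying only once the last one arrives."""
        self.pending.append(context)
        if context.is_last_batch:
            self._apply_all()

    def _apply_all(self) -> None:
        # Everything below stands in for one database transaction.
        while self.pending:
            context = self.pending.popleft()  # FIFO: same order as received
            # Stand-in for deserializing the file and applying its rows.
            self.applied.append(context.batch_file_name)
        self.commits += 1  # single commit after all batches are applied


destination = Destination()
destination.receive(BatchContext("batch1.batch", is_last_batch=False))
destination.receive(BatchContext("batch2.batch", is_last_batch=False))
print(destination.applied)   # still empty, nothing applied yet
destination.receive(BatchContext("batch3.batch", is_last_batch=True))
print(destination.applied, destination.commits)
```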

Tombstone Application:

Relational providers usually apply changes in the following order: DELETES, followed by INSERTS and UPDATES. The order in which these changes are applied is usually dictated by the order in which users specify their table adapters. The adapter order assumes that related tables (PK/FK) are ordered correctly. For DELETES, the runtime applies changes in reverse adapter order so that child table DELETES are applied before parent table DELETES, which avoids RI violations. Since batches are applied in FIFO order, it's plausible that the current batch may not contain the child table DELETES. Within a given batch the runtime continues to apply DELETES in reverse order. When the child table DELETES are not in the current batch, the runtime recognizes the SQL errors for the parent tombstones and queues them up for retry. When all batches have been applied, the runtime revisits these queued-up DELETES and retries them. At this point all of the parent deletes should succeed, as the dependent child deletes should have already been applied.

Note that batching does not change any runtime logic related to conflict handling. Batching only governs the number of rows in memory at any given point in time and nothing else. The only thing you have to guard against in your ApplyChangeFailed event handler is the above-mentioned case where a PK delete fails.
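The retry behaviour for tombstones can be sketched in Python with a toy in-memory store. Everything here is a hypothetical simulation: the store, the exception and the batch layout are invented for the illustration, and the foreign key check stands in for the SQL error the runtime would see.

```python
from typing import Dict, List, Tuple


class ForeignKeyViolation(Exception):
    pass


class Store:
    """Toy parent/child store: each detail row references an order."""

    def __init__(self, orders, details: Dict[int, int]) -> None:
        self.orders = set(orders)
        self.details = dict(details)  # detail id -> order id

    def delete(self, table: str, key: int) -> None:
        if table == "Orders":
            if key in self.details.values():
                raise ForeignKeyViolation(f"order {key} still has detail rows")
            self.orders.discard(key)
        else:
            self.details.pop(key, None)


ADAPTER_ORDER = ["Orders", "OrderDetails"]  # parent first, then child


def apply_deletes(store: Store, batches: List[Dict[str, List[int]]]) -> List[Tuple[str, int]]:
    retry: List[Tuple[str, int]] = []
    for batch in batches:  # FIFO across batches
        for table in reversed(ADAPTER_ORDER):  # child deletes first within a batch
            for key in batch.get(table, []):
                try:
                    store.delete(table, key)
                except ForeignKeyViolation:
                    retry.append((table, key))  # child delete is in a later batch
    for table, key in retry:  # all batches applied, so children are gone now
        store.delete(table, key)
    return retry


store = Store(orders={1}, details={10: 1})
# The parent delete arrives one batch before the child delete.
retried = apply_deletes(store, [{"Orders": [1]}, {"OrderDetails": [10]}])
print(retried, store.orders, store.details)
```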

Batching Assumptions:

- Sync runtime has full Read/Write access to the directory specified in the BatchingDirectory property.

- MemoryDataCacheSize only applies to the memory used for reading sync rows. It does not guard against overall process memory usage.

- Batches are applied in the order they are received by the destination provider.

So, Does It Work?

The moment of truth is whether all this batching thingy works and whether it prevents the process from running out of memory. I coded up a simple scenario which syncs two tables, Orders and OrderDetails, from a SQL Server down to a CE client. The schema for the two tables is quite simple.

//Orders

// orderid - int, PK

// orderdate - datetime

//OrderDetails

// orderdetailsid - id, PK

// orderid - int

// product - varchar(max)

// quantity - int

I populated the tables with about 25,000 rows each. Each row in OrderDetails is about 8K in size and each row in Orders is about 50 bytes in size. The demo computes the max working set of the process for reporting purposes. I did one run without batching and three runs with batching at different batch sizes. Here is the comparison between the runs.

| Sync Type | Peak Working Set (MB) | Memory Use (% of non-batched) |
| --- | --- | --- |
| Non Batched | 446 | NA |
| Batched (1 MB batch size) | 47 | 10.53 |
| Batched (5 MB batch size) | 59 | 13.2 |
| Batched (10 MB batch size) | 78 | 17.45 |

As you can see, batching uses approximately 10-20% of the non-batched memory for the above sample. This should not be taken as an indication of the memory savings for all kinds of schemas. Feel free to try it with your real world schemas and I am sure you will see considerable improvements.
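For a rough sense of how many batch files the sample implies, here is a back-of-the-envelope estimate in Python. It assumes that the in-memory size of a row equals the approximate row sizes quoted above and that every batch is filled up to the 110% limit, neither of which is guaranteed, so treat the numbers as order-of-magnitude only.

```python
import math

ROWS_PER_TABLE = 25_000
ORDER_DETAILS_ROW_BYTES = 8 * 1024  # "about 8K" per row
ORDERS_ROW_BYTES = 50               # "about 50 bytes" per row


def estimated_batches(cache_size_kb: int) -> int:
    """Estimate the batch count, ignoring all per-row and per-batch overhead."""
    total_bytes = ROWS_PER_TABLE * (ORDER_DETAILS_ROW_BYTES + ORDERS_ROW_BYTES)
    limit_bytes = cache_size_kb * 1024 * 110 // 100  # cache size plus 10%
    return math.ceil(total_bytes / limit_bytes)


for size_mb in (1, 5, 10):
    print(size_mb, "MB cache:", estimated_batches(size_mb * 1024), "batches (estimate)")
```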

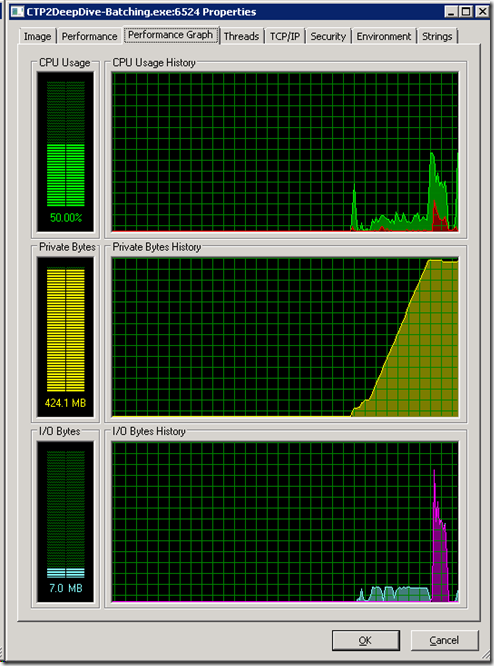

Here is the procmon output for the non-batched case. As you can see the memory utilization keeps climbing till all changes are read in memory.

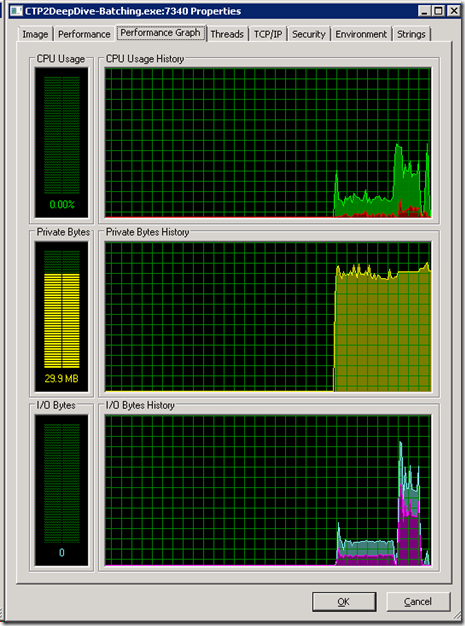

Here is the procmon for the same demo when batching is enabled. You can see that the runtime goes to 31 MB initially and then settles in to a nice pattern as files are spooled and then consumed by the destination.

Here is the MSDN doc link for the batching feature: https://msdn.microsoft.com/en-us/library/dd918908(SQL.105).aspx. Feel free to comment here if you have any specific questions.

Next: a detailed look at the batch file.

Maheshwar Jayaraman