Reducing Dropped Frames with Network Monitor 3.4

by Darren J. Fisher – Network Monitor Development Lead

Capturing network traffic is a very stressful task for most computers. With modern networks, traffic can arrive at a system at astounding rates. Most machines built these days have at least 1 Gbps network interfaces. When such a machine is connected to a network of equal or faster speed, traffic that consumes just ¼ of the interface’s capacity for 10 seconds amounts to 298 MB of data. Under heavy loads, utilization can reach ¾ or more of the interface bandwidth; that’s approaching 1 GB of data in just 10 seconds!
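The figures above can be checked with a few lines of arithmetic. This is only a sketch: it assumes the “MB” quoted in the text means 2^20 bytes, which is the reading that reproduces the 298 MB figure, and the function name is made up for illustration.

```python
# Back-of-the-envelope check of the data volumes quoted above.
LINK_BITS_PER_SECOND = 1_000_000_000  # a 1 Gbps network interface
BYTES_PER_MB = 1024 * 1024            # assumption: "MB" means 2**20 bytes


def megabytes_received(utilization: float, seconds: float) -> float:
    """Data volume in MB for a given fraction of link capacity and duration."""
    bytes_per_second = LINK_BITS_PER_SECOND / 8 * utilization
    return bytes_per_second * seconds / BYTES_PER_MB


if __name__ == "__main__":
    for utilization in (0.25, 0.75):
        volume = megabytes_received(utilization, 10)
        print(f"{utilization:.0%} utilization for 10 s: {volume:.0f} MB")
```

At ¼ utilization this gives roughly 298 MB, and at ¾ utilization roughly 894 MB, which is the “approaching 1 GB” case.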

Usually when a computer is receiving that much data, it is also doing other processing on it, such as decoding a video stream, saving a file, or rendering an image. These types of operations by themselves would test the performance of typical computers. Let’s not forget the other things your computer is doing, like scanning for viruses, drawing your desktop, running your gadgets, etc. Now add the cost of capturing every bit of the network traffic and saving it to the disk. It becomes easy to see how we might lose a packet here or there.

The latest version of Network Monitor provides very accurate statistics on how many frames it dropped during a capture. Frames are typically dropped in one of three places:

- Network Interface: Frames dropped here are a result of traffic arriving too fast for your network hardware to decode and send it to the operating system. Dropping frames here is actually pretty rare. Most network interfaces live up to their speed limits and with modern network infrastructures your connection will rarely reach those limits.

- Network Monitor Capture Driver: Without going into too many details, Network Monitor uses a kernel mode driver that monitors each network interface. When capturing, it makes a copy of every frame it sees and tries to place each copy into a memory buffer so that the Network Monitor UI, NMCap, or your API program can process it. There is a finite number of buffers. If they are all full, we must discard frames until an empty one is available.

- Network Monitor Capture Engine: This component of Network Monitor receives frames from the Network Monitor Capture Driver and attempts to save them to a temporary file on the local disk drive. If you capture long enough, you will reach a storage quota, either the one imposed by your operating system or the one configured in Network Monitor. When this happens, the engine must discard the frames as there is no space to save them.

Dropping frames is an unfortunate reality of capturing network traffic. It is close to impossible to capture 100% of traffic in 100% of capture scenarios. Even in so-called ‘typical’ scenarios, traffic rarely has a steady flow. There are always dips and spikes which apply sudden pressure like a rogue wave. When that happens, Network Monitor may be able to deal with it, depending on how it is configured.

Typical Frame Dropping Scenarios

Let’s take a closer look at a few scenarios that typically result in dropped frames, why Network Monitor drops in these scenarios, and what you can do to deal with them.

Using a High Performance Capture Filter

In this scenario, most of the traffic that arrives at the computer is noise. So the user applies a capture filter. The desired outcome is a smaller trace containing only the frames that are of interest. We also want to avoid saving frames that we will just throw away later.

Why are frames dropped in this scenario?

A good analogy for understanding why is filling a pool with a water hose. The rate at which the water comes out of the hose remains constant. Unobstructed, every drop of water will flow into the pool until the pool is full. But if you add a filter between the hose and the pool, what happens? The filter slows down the flow of water into the pool but not from the hose. Depending on the thickness of the filter, the pressure in the hose will build up fast or slowly to the point where the hose will leak.

The pool represents your disk, the hose represents the network interface, and the thickness of the filter represents the complexity of a capture filter expression.

In order to identify the frames which pass the filter condition, Network Monitor must evaluate each and every frame it sees. Only when a frame passes the condition will it be saved in the trace. This requires a third component of Network Monitor to enter the mix, the Parsing Engine.

Parsing a frame is a very slow operation. To put this into perspective, for a batch of 500 frames on a fast machine, it can take a minimum of 15 times longer to evaluate a filter on those frames than it would take to simply save them to the disk. This time factor can grow if the filter expression is complex and/or a larger set of parsers is used (thicker water filter).

Remember above we talked about the driver using memory buffers to store frames that it saw at the network interface? Well, those buffers are emptied either by saving the frames they contain to disk or by applying a filter to them first. Since the number of buffers is finite, if we do not empty them faster than they are being filled, we will drop frames. Going back to the analogy, the memory buffers represent the amount of pressure the hose can withstand. The hose will leak unless we remove the filter; it is not a matter of ‘if’ but ‘when’. How long it takes to leak depends on the thickness of the filter and the pressure limit of the hose.

The same thing happens with a high performance capture filter. If the filter is in place and the traffic is steady at a moderate rate, the driver will eventually run out of memory buffers to fill. When this happens, we drop frames.
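The hose-and-filter behavior can be illustrated with a toy model. This is a sketch under stated assumptions, not a description of how the capture driver is implemented: the tick-based loop, the function name, the figure of 500 frames per buffer, and the arrival and drain rates are all hypothetical. Only the 15x cost of filtering and the buffer counts of 16 and 128 come from the text.

```python
from typing import Optional


def ticks_until_first_drop(
    arrival_per_tick: int,
    drain_per_tick: int,
    buffer_count: int,
    frames_per_buffer: int = 500,  # hypothetical buffer capacity in frames
    max_ticks: int = 100_000,
) -> Optional[int]:
    """Return the tick on which the buffers first overflow, or None if they never do."""
    capacity = buffer_count * frames_per_buffer
    queued = 0
    for tick in range(1, max_ticks + 1):
        queued += arrival_per_tick               # frames copied in by the driver
        queued = max(0, queued - drain_per_tick)  # frames saved or filtered
        if queued > capacity:                     # no free buffer left: frames drop
            return tick
    return None


if __name__ == "__main__":
    save_rate = 1500               # hypothetical frames per tick when only saving
    filter_rate = save_rate // 15  # filtering is assumed to be 15x slower
    arrivals = 150                 # hypothetical steady traffic

    print(ticks_until_first_drop(arrivals, save_rate, buffer_count=16))
    print(ticks_until_first_drop(arrivals, filter_rate, buffer_count=16))
    print(ticks_until_first_drop(arrivals, filter_rate, buffer_count=128))
```

In this model, saving alone keeps up indefinitely, while filtering falls behind by 50 frames per tick. More buffers push the first drop further out (tick 1281 instead of tick 161) but do not prevent it, which matches the ‘when’, not ‘if’, point above.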

How can this be addressed?

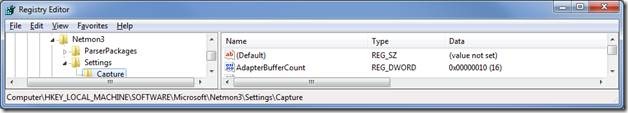

In this scenario, there is little we can do to control the rate at which traffic arrives at the computer. We also may not be able to do much about how complex the filter is. We can, however, increase the pressure limit of the hose. We do this by increasing the number of memory buffers that are available to the driver. This is done by modifying a registry key:

AdapterBufferCount can be set to any value between 4 and 128. The bigger the number, the more memory is available for buffering packets. The actual amount of memory allocated is the value of this key multiplied by 512 KB. The default setting uses 8 MB.

Be conservative when changing this value. The memory that is allocated as a result of this value comes from physical memory. For example, if your system has 1 GB of RAM installed and you use a value of 128, 64 MB of RAM will be used, which is about 6% of your total RAM. That may not seem like much until you add the RAM consumed by other OS components. Less free physical RAM leads to increased page faults (swapping), which can impair the overall performance of your system while capturing.

This value is also per network interface enabled in Network Monitor. On typical Win7 machines, there are usually 3 interfaces enabled by default. This means that with a setting of 128, you can potentially consume 192 MB of physical RAM.
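The memory cost of a given AdapterBufferCount can be worked out before changing anything. The sketch below only restates the arithmetic from the text (512 KB per buffer, a valid range of 4 to 128, one allocation per enabled interface); the function name is invented for illustration and it does not read or write the registry.

```python
BUFFER_SIZE_KB = 512                 # each buffer is 512 KB, per the text
MIN_BUFFERS, MAX_BUFFERS = 4, 128    # valid range for AdapterBufferCount


def buffer_memory_mb(adapter_buffer_count: int, interfaces: int = 1) -> float:
    """Physical memory, in MB, reserved for capture buffers."""
    if not MIN_BUFFERS <= adapter_buffer_count <= MAX_BUFFERS:
        raise ValueError(
            f"AdapterBufferCount must be between {MIN_BUFFERS} and {MAX_BUFFERS}"
        )
    return adapter_buffer_count * BUFFER_SIZE_KB * interfaces / 1024


if __name__ == "__main__":
    print(buffer_memory_mb(16))                # the 8 MB default implies 16 buffers
    print(buffer_memory_mb(128))               # 64 MB for a single interface
    print(buffer_memory_mb(128, interfaces=3)) # 192 MB with three interfaces
    share = buffer_memory_mb(128) / 1024       # fraction of 1 GB of RAM
    print(f"{share:.2%}")
```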

Additional techniques

The High Performance filtering feature has two additional levers that will allow it to yield (remove the water filter) temporarily so the capture driver can catch up. It does this by caching the frames to the disk and performing the filter operation on the cache instead.

Note: This has the drawback of using more disk space and can actually create an even more insidious situation, which is explained below.

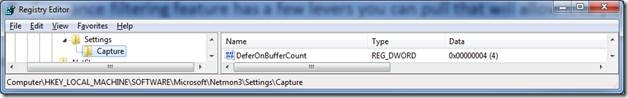

The first setting instructs the feature to turn itself off if the capture driver indicates that there are only a few buffers remaining. The setting is a registry key, DeferOnBufferCount.

When the number of full buffers is equal to or greater than this value, the High Performance filtering feature temporarily turns off. Instead of filtering frames as they arrive at the driver, they are placed in a cache and filtered later. We mentioned earlier that saving frames can be at least 15 times faster than filtering them. The time saved will allow the capture engine to process buffers faster. This in turn will make free buffers available to the driver faster. The end result is that we avoid getting into a state where dropping frames is imminent.

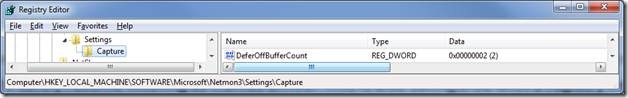

The second setting instructs the feature to turn itself back on when the number of full buffers is low enough to safely resume filtering as frames arrive. This is also configured with a registry key, DeferOffBufferCount.

“Defer” in the names of both of these keys refers to filtering the cache. Once triggered by the first setting, Network Monitor will continue to cache frames until the number of full buffers is equal to or less than the value of this key. When that happens, filtering of frames as they arrive will resume. This cycle will repeat as necessary based on the buffer conditions. DeferOnBufferCount has the highest precedence. Regardless of the off setting, if the number of buffers consumed is greater than or equal to DeferOnBufferCount, no filtering on the cache will take place.

Together, these settings allow Network Monitor to react to spikes in normal traffic flow. The value for DeferOnBufferCount should be set to not trigger deferring under normal capturing conditions. The value for DeferOffBufferCount should be set low enough so that Network Monitor does not turn off deferring during the spike.

For example, under normal traffic conditions, the capture driver has 2 buffers full on average. During a spike, as many as 10 buffers fill up. The total number of buffers is 16. Under these conditions, a good setting for DeferOnBufferCount would be 6. That will not trigger deferring if a small spike occurs where 3 or 4 buffers become full but for a large spike, it will be triggered. DeferOffBufferCount in this scenario should be set to 3. Setting this too low may keep deferring on indefinitely. Too high and oscillation will occur where the feature turns on and off repeatedly which is not desirable.
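The on/off behavior described above is a form of hysteresis, and a small state machine makes the thresholds easier to reason about. This is a sketch of the rules as stated in the text, not Network Monitor’s actual code; the function name and the sample buffer counts are hypothetical.

```python
from typing import Iterable, List


def deferring_states(
    full_buffer_counts: Iterable[int],
    defer_on: int = 6,   # DeferOnBufferCount from the example above
    defer_off: int = 3,  # DeferOffBufferCount from the example above
) -> List[bool]:
    """For each sample of full buffers, report whether filtering is deferred."""
    deferring = False
    states = []
    for full in full_buffer_counts:
        if full >= defer_on:     # the on threshold takes precedence
            deferring = True
        elif full <= defer_off:  # buffers have drained enough to resume
            deferring = False
        # between the two thresholds the previous state is kept
        states.append(deferring)
    return states


if __name__ == "__main__":
    # Hypothetical trace: normal traffic, a small spike, then a large spike.
    samples = [2, 3, 4, 2, 7, 10, 8, 5, 4, 3, 2]
    for full, deferred in zip(samples, deferring_states(samples)):
        print(f"{full:2d} full buffers -> deferring={deferred}")
```

With these values, the small spike to 4 full buffers never triggers deferring, while the large spike does and keeps it on until the count falls back to 3. The gap between the two thresholds is what prevents the rapid on/off oscillation mentioned above.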

Using a Normal (or no) Capture Filter

If a capture filter is not a High Performance filter, it is a normal filter. This type of filter is always applied to the frame cache. Filling the cache and processing the cache occur simultaneously in separate threads, which makes the cache a shared resource. We will call the thread that processes the cache a proxy. The proxy and the capture engine effectively fight over who can access the cache.

Why are frames dropped in this scenario?

We like analogies, so here is one for this scenario. Let’s revisit our pool, but this time we want to drain the pool too. Our pool is special; we cannot fill the pool and drain it at the same time. If we are filling it, we must close the drain. If we are draining it, we have to block the hose. If the water is still on while we are draining, pressure will build up in the hose. If we drain it too long while the water is still on, our hose will leak because of the pressure build up. If we turn off the water however, we can drain as long as we want until the pool is empty.

Ideally we do not want the hose to leak. So as long as the drain is closed, we are fine. But we need to open the drain occasionally otherwise our pool will overflow. The trick is to keep the drain open long enough to keep the pool from overflowing but short enough to not make the hose start leaking. Our hose can withstand some pressure but not for long.

In this example, the pool represents the cache of frames. The hose represents the capture engine which fills the cache. The water is the incoming traffic from the capture driver. The drain represents the thread which processes the cache. The pressure in the hose represents the memory buffers that the capture driver uses. You can guess what leaking represents: dropped frames.

Coming back to Network Monitor, we cannot afford to have the capture engine lose the fight over the cache for too long. However, we still need to process frames in the cache while the cache is being filled. The proxy must periodically lock the cache, remove frames from it, and then relinquish it. Frames in the cache are pending frames. Once the proxy has a frame, it can apply a filter to it or, if there is no filter, display it (Network Monitor UI only).

Note: NMCap with no filter does not use a proxy; the cache is the target capture file.

Displaying a frame and applying a filter take time. While the proxy is doing this, the cache is not locked, allowing the capture engine to fill it. Once the proxy is done with one frame, it gets another one from the cache, requiring it to be locked again for some period of time.

Going back to our analogy, if these periods of time are long, the hose will experience short rises in pressure spread over long periods of time. This is ideal because the water does not really flow at a constant rate; it dips and spikes over time (like network traffic). When it dips, the pressure is relieved.

However, if the periods of time are short, the rises in pressure will be closer together causing the pressure to build up faster. If it gets too high before the dips come, it will reach the pressure limit and leak.

This is what happens if the proxy’s processing time is too short. It will access the cache at a faster rate and starve the capture engine (proxy wins the fight too often). Starving the capture engine will cause it to slow down emptying buffers since the engine cannot empty them while the cache is locked. If the buffers are not being emptied fast enough, the capture driver will fill them all and when that happens, it will drop frames.

The ways you can cause the proxy to speed up are:

- No capture filter or display filter (UI only)

- Using the High Performance profile or Pure Capture profile

- Using a custom profile which parses frames very fast

How can this be addressed?

The proxy can also be considered an engine. Sometimes engines in cars are too powerful so the manufacturer installs a governor. The job of the governor is to keep the engine from going too fast so the drivers do not kill themselves. Our proxy also has a governor to keep it from causing dropped frames. This is actually a registry key, MaxPendingFramesPerSecond.

This controls how many frames per second the proxy can process while capturing, and therefore how many times per second it locks the cache. The default value for this setting (0xFFFFFFFF) effectively turns the governor off (unless you have a supercomputer). If you pause or stop capturing, the governor is not applied.

If you see dropped frames in one of these scenarios, set this value to a moderate number, such as 1000. If frame dropping continues, try setting lower values until the dropping stops. You can also start with a low value and increase it steadily until you see drops, then lower it slightly. The value that will work best for you depends on the processing power of your computer.
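The first tuning approach (start moderate, then lower until drops stop) can be written down as a simple search. This is a sketch of the procedure only: `drops_at` is a hypothetical callback that you would supply yourself, for example by setting MaxPendingFramesPerSecond, running a test capture, and returning the dropped-frame count reported by Network Monitor. The halving step and the floor of 50 are arbitrary choices, not recommendations from the product.

```python
from typing import Callable, Optional


def find_frame_rate_limit(
    drops_at: Callable[[int], int],  # hypothetical: dropped frames at a given limit
    start: int = 1000,               # the moderate starting value suggested above
    floor: int = 50,                 # arbitrary point at which to give up
) -> Optional[int]:
    """Halve the limit until a test capture shows no dropped frames."""
    limit = start
    while limit >= floor:
        if drops_at(limit) == 0:
            return limit
        limit //= 2  # arbitrary step: try half the previous value
    return None      # no tested value stopped the drops


if __name__ == "__main__":
    # Stand-in for real measurements: pretend drops stop at 300 or below.
    def simulated_drops(limit: int) -> int:
        return 0 if limit <= 300 else 10

    print(find_frame_rate_limit(simulated_drops))
```

With the simulated measurements, the search tries 1000 and 500, sees drops at both, and settles on 250. A real run would replace `simulated_drops` with actual test captures on your own hardware.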

Increasing the setting for AdapterBufferCount may offer some relief but it is usually temporary. If your capture is limited to a small, fixed period of time (for example, less than 5 minutes), this method may be a reliable alternative.

Conclusion

As you can see, there are multiple factors that can affect the ability of Network Monitor to capture every frame that arrives at the computer. There are also multiple settings exposed that can be used to fine tune your experience. While there is no silver bullet for perfect capture performance, many scenarios can be dealt with by applying the techniques described above. Being able to recognize why frames are being dropped is an important first step in finding the best solution.

The solutions above can be considered ‘high-tech’ solutions. There are also some very simple, low-tech steps that you can take which may also yield the results you are looking for.

Eliminate Disk Contention by Saving to Another Disk Drive

Network Monitor exposes a setting for where the frame cache is stored, available via Tools->Options from the main menu. If the default location is also a heavily used disk drive (e.g. the system disk), try a different drive if you have one.

Reduce the Processing Load on the Computer

This is obvious but often easier said than done. Try to have as few processes running as possible while capturing live traffic. Avoid unnecessary disk access. Try to have plenty of physical memory free to avoid virtual memory paging.

Use NMCap to Capture When Possible

NMCap provides the same capture features as the Network Monitor GUI application and it offers higher performance. If possible, use NMCap to capture traffic instead. The GUI application can be used to load and analyze the resulting capture file.

Use ‘weaker’ Filters

Everyone knows the joke where the guy says, “Doc, it hurts when I move my arm like this.” The doctor replies, “Well, don’t do that.” In our world the joke would go, “Microsoft, Network Monitor drops frames when I use this filter.” To which we may reply, “Well, use a different filter.”

Occasionally, Network Monitor users, especially network protocol gurus, will create capture filter expressions that attempt to be a bit too precise. Creating such a filter will yield the fewest and most desired frames but it often comes at a cost of complexity which can lead to dropped frames.

A not so obvious remedy is to use a less complex filter. This may result in more frames captured but may also result in fewer frames dropped. The ‘Display Filter’ functionality in the Network Monitor GUI can be used to see the exact frames desired with a more complex filter once the capture file is opened.