Modern Datacenter Architectural Patterns-Azure Search Tier

The Azure Search Tier design pattern details the Azure features and services required to deliver search services with predictable performance and high availability across geographic boundaries, and provides an architectural pattern for using Azure Search in a search solution.


Prepared by:

Liam Cavanagh – Microsoft

Mark Massad – Microsoft

Tom Shinder – Microsoft

Cloud Platform Integration Framework Overview and Patterns:

Cloud Platform Integration Framework – Overview and Architecture

Modern Datacenter Architecture Patterns - Multi-Site Data Tier

Modern Datacenter Architecture Patterns - Offsite Batch Processing Tier

Modern Datacenter Architecture Patterns - Global Load Balanced Web Tier

1 Overview

The Azure Search Tier design pattern details the Azure features and services required to deliver search services with predictable performance and high availability across geographic boundaries, and provides an architectural pattern for using Azure Search in a search solution. Azure Search is a “search-as-a-service” offering built within Microsoft Azure that allows developers to incorporate search capabilities into applications without having to deploy, manage or maintain the underlying infrastructure. The purpose of this pattern is to provide a repeatable solution that can be applied across different situations and designs.


1.1 Pattern Requirements and Service Description

The Azure Search Tier Architecture pattern is designed to meet the need for adding search to an application or website. Azure Search is a cloud-based search service that handles provisioning, configuration and scaling within Azure as a standard platform-as-a-service (PaaS) capability. This pattern is intentionally generic, but it is based on key concepts and factors that will influence the design and use of the service. Adopting this pattern helps ensure consistent application of principles and critical design elements when using Azure Search.

2 Architecture Pattern

This document describes a core pattern for using Azure Search, with two variations of the core to demonstrate the architectural range of the service. The core pattern consists of Azure Search and surrounding Azure services and is intended to provide guidance for creating end-to-end designs. Variations of the pattern, specifically the Shared Service and Concurrency patterns, are also included in this section to provide guidance based on different requirements, Service Level Agreements (SLAs) and other specific conditions.

2.1 Overview

One of the common challenges of search is predicting the optimal amount of infrastructure needed to meet the demands of the data being indexed and the volume of traffic to the site. Getting this wrong leads either to excess capacity, which costs more than necessary, or to too few resources to handle requests.

Azure Search takes the complexity out of setting up everything from scratch while providing architectural support to help you scale the service based on specific needs. There are options in terms of how to use the search as part of your application, whether it’s hosted in Azure or somewhere else. This pattern is intended to simplify the instantiation and use of Azure Search by establishing a reference architecture which can be repeatedly used across different scenarios and technologies.

The Azure Search Tier pattern is designed to be elastic with components and technology that can be substituted as required. These decisions fall within each customer’s design team and are defined on a case-by-case basis.

The next section describes the core pattern. This is then followed by pattern variations to help you understand use cases and tradeoffs relative to each configuration.

2.2 Core Pattern

Within the Azure Search Tier design pattern, the core pattern is the set of Azure services used to support or enable Azure Search as part of an end-to-end Solution. Azure Search is the main service in this pattern. The pattern starts with creating an Azure Search service within a subscription, affinity group and data center.

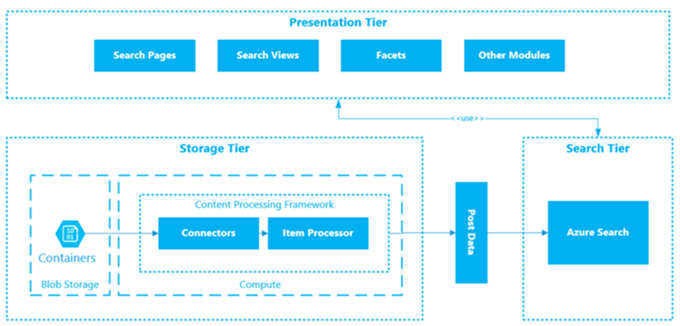

Azure Search is surrounded by a number of additional services that complete the pattern, although other arrangements are possible. Supporting services in this pattern are used for content storage, compute and user interface (UI). These services are provisioned separately and have their own patterns and guidance (beyond the scope of this document) to assist in the design and deployment of each service or group. The diagram below provides a simple illustration of which service tiers are important and how they are used in this pattern.

Each of the main service areas is described in more detail following the diagram.

Figure 1 – Core Architecture Pattern

Search Tier

The Azure Search Tier is effectively a “black box” with an API. Once provisioned within a subscription, affinity group and data center, it receives instructions for query actions and administration through its API. These instructions are either:

- Service management (e.g. index updates, number of documents, size, etc.), or

- Search queries (e.g. all documents matching some criteria), or

- Content ingestion

Each index consists of searchable fields that are optimized for search performance. The index, including its data model or index schema, must be created before it can be loaded with data or queried. Once the index is created, populating data in Azure Search automatically provides:

- A full-text searchable index of data controlled by the schema

- A categorization or clustering of data resulting from the original data

- The distribution of data across partitions and replicas to obtain required performance and capacity
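Because the schema must exist before any data is loaded, it helps to see what an index definition looks like. The sketch below is a minimal illustration, assuming the JSON field attributes (`key`, `searchable`, `filterable`, `sortable`, `facetable`, `retrievable`) and `Edm.*` types of the current Azure Search REST API; the index name and field names are hypothetical. The resulting body would be sent to the service's index-creation endpoint.

```python
import json


def field(name, edm_type, *, key=False, searchable=False, filterable=False,
          sortable=False, facetable=False, retrievable=True):
    """Return one field definition using the attribute names of the Azure Search REST API."""
    return {
        "name": name,
        "type": edm_type,
        "key": key,
        "searchable": searchable,
        "filterable": filterable,
        "sortable": sortable,
        "facetable": facetable,
        "retrievable": retrievable,
    }


def build_index_definition(index_name):
    """Build a hypothetical schema for a document library."""
    fields = [
        field("id", "Edm.String", key=True, filterable=True),
        field("title", "Edm.String", searchable=True, sortable=True),
        field("content", "Edm.String", searchable=True, retrievable=False),
        field("contentType", "Edm.String", filterable=True, facetable=True),
        field("lastModified", "Edm.DateTimeOffset", filterable=True, sortable=True),
        field("webUrl", "Edm.String"),
    ]
    key_fields = [f["name"] for f in fields if f["key"]]
    if len(key_fields) != 1:
        raise ValueError("an index needs exactly one key field")
    return {"name": index_name, "fields": fields}


print(json.dumps(build_index_definition("documents"), indent=2))
```

Marking the large `content` field as searchable but not retrievable keeps result payloads small while still supporting full-text queries; adjust these flags to match what your views actually display.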

Storage Tier

The Storage Tier serves two purposes:

- Hosting content

- Providing compute services to host the crawler

Content in this pattern is stored in Blob storage containers, while a virtual machine is used simply to run the crawler. In most cases this will be the first opportunity for substitution, as your content may reside in other forms or locations and you may have other options for running the crawler. Using Azure Storage demonstrates service interoperability, but the data could live in a database, a file share or behind an external service. The crawler could be run in Azure, on a local machine or on any other platform with compute capacity. Entries can even be added to the index manually.

Content Processing Framework

The Content Processing Framework handles the crawl, that is, loading the index with data. For this pattern it is hosted in the Storage Tier, but like the Presentation Tier, it may reside elsewhere. It is not a service in Azure Search at this time, but a feature being considered for a future release. What’s important is that it provides a set of “connectors” for accessing repositories and “item processors” for extracting data.

This framework is often generically referred to as a “crawler”. It combines multiple content streams or document collections into a searchable index, and it is where cleaning, extracting, normalizing and enrichment of content occur. This provides a structure to retrieve, parse and create indices, taxonomies and other summarizations of the original data. Content processing rules, development technology and platform selection are entirely up to the designer, but they are nonetheless an important part of the Solution.

Presentation Tier

The Presentation Tier interfaces with Azure Search and the Storage Tier by providing the user interface for search and administration. It integrates with Azure Search using an API based on OData. There are a number of development framework libraries and techniques beyond the scope of this document that you can use for this integration.

Generically speaking, components typically include “Search Views”, result pages and faceted filter methods, all supported by the Azure Search API. Each result typically references a “results link” that points to the content item, for example through a web URL. This is another opportunity for substitution: because data moves in and out of search through OData, and documents can be loaded from any location, the client could be a website on Azure Websites, a desktop application on premises or anything else that can exercise the API. There are also component libraries for the Presentation Tier that can be downloaded or purchased online from third parties.

This completes the basic definition of the core pattern: Azure Search runs with surrounding components to form a complete Solution. The remainder of this document describes variations of the core pattern and discusses how you can handle a range of anticipated scenarios.

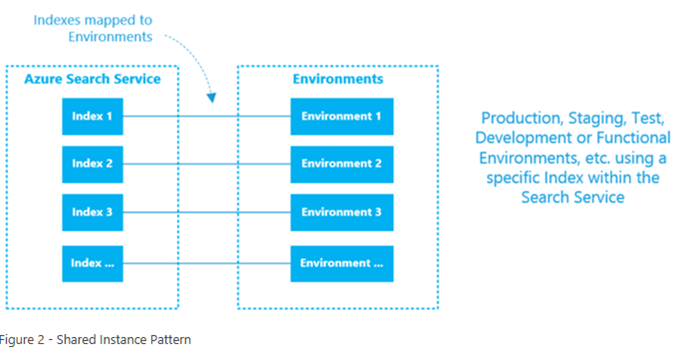

2.2.1 Shared Service Pattern

The Shared Service pattern outlines sharing one Azure Search service across multiple purposes, environments or development methodologies. It assigns a purpose at the index level of the service. Each index is independent, with its own schema, content and use case, and the Azure Search API selects the index from the request URL. The following diagram illustrates this mapping.

Note:

“Environment” implies everything outside Azure Search. This could include elements of the Core Pattern (above) or a specific case or technology platform, residing on premises, etc. In other words, only the search index becomes a mapped member of the “Environment”. This is an important distinction: the index is the primary building unit in this pattern.

Figure 2 - Shared Instance Pattern

The following comparison can help when evaluating this pattern type:

| Pros | Cons |
|---|---|
| Minimizes cost | Number of environments limited to number of index instances |
| Easiest to set up, configure and use | No redundancy |
| Single named instance of the search service, including URL | Same access keys across environments |
| Up to 25 indexes (today) | |

Table 1 – Shared Instance Pros and Cons
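The index-per-environment mapping of the Shared Service pattern can be sketched as follows. This is a minimal illustration, assuming the `https://<service>.search.windows.net/indexes/<index>/docs` URL layout of the current Azure Search REST API; the service name and the environment-to-index map are hypothetical.

```python
from urllib.parse import quote

# Hypothetical service URL and environment-to-index mapping, for illustration only.
SERVICE_URL = "https://contoso-search.search.windows.net"
ENVIRONMENT_INDEXES = {
    "dev": "catalog-dev",
    "test": "catalog-test",
    "prod": "catalog-prod",
}

# The per-service index limit quoted in this document caps how many environments fit.
MAX_INDEXES_PER_SERVICE = 25
if len(ENVIRONMENT_INDEXES) > MAX_INDEXES_PER_SERVICE:
    raise ValueError("too many environments for one shared service")


def documents_url(environment: str) -> str:
    """All environments share one service (and its keys); only the index segment differs."""
    index_name = ENVIRONMENT_INDEXES[environment]
    return f"{SERVICE_URL}/indexes/{quote(index_name)}/docs"


print(documents_url("dev"))
```

Because every environment resolves to the same host, they also share that service's access keys, which is the "Same access keys across environments" trade-off listed in Table 1.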

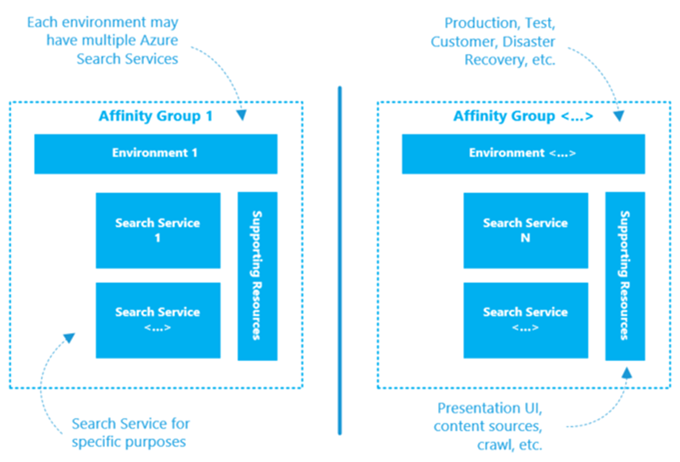

2.2.2 Concurrency Pattern

The Concurrency pattern allocates a dedicated Azure Search service to each given purpose. It segregates components and opens up the design to requirements involving larger entities (e.g. hosting environments, customers, and development principles and/or methodologies).

The term “environment” is generic and means any given situation, configuration or scenario. “Environments” can be based on the core or similar patterns, with supporting capabilities such as crawl, content, etc. The key differentiator is that this pattern includes one or more search services as members of that entity. In other words, the search service, rather than the index as in the Shared Service pattern, becomes the primary building unit.

For example, Environment 1 could be part of a production environment for a tenant service where each customer would get a dedicated search service including access keys. Environment 2 could be the same but for development or another division. Geo-Failover requirements are accommodated using the same model.

A simple illustration of this pattern is provided below:

Figure 3 - Concurrency Pattern

The following comparison can help when evaluating this pattern type:

| Pros | Cons |
|---|---|
| Dedicated capability at the service level | Cost for each search service |
| Supports Redundancy and High Availability scenarios | Governance is more involved |
| Supports larger and more complex hosting arrangements | More configuration items to support |
| Different access keys for each configuration | |

Table 2 – Concurrency Pattern Pros and Cons
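In the Concurrency pattern the unit of configuration is the search service itself. The sketch below is a hypothetical configuration model, not an Azure API: each environment records its own service name and the name of the setting that holds its own access key, and a check confirms that no two environments share either.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class SearchEnvironment:
    """One environment in the Concurrency pattern: a dedicated service with its own key."""
    name: str
    service_name: str
    api_key_env_var: str  # the key is read from configuration, never shared between environments

    @property
    def endpoint(self) -> str:
        return f"https://{self.service_name}.search.windows.net"

    def api_key(self) -> str:
        return os.environ[self.api_key_env_var]


# Hypothetical tenants and environments, each with a dedicated service.
ENVIRONMENTS = [
    SearchEnvironment("tenant-a-prod", "tenant-a-search", "TENANT_A_SEARCH_KEY"),
    SearchEnvironment("tenant-b-prod", "tenant-b-search", "TENANT_B_SEARCH_KEY"),
    SearchEnvironment("tenant-a-dev", "tenant-a-dev-search", "TENANT_A_DEV_SEARCH_KEY"),
]


def check_isolation(environments) -> bool:
    """True when every environment points at a distinct service and a distinct key setting."""
    services = [e.service_name for e in environments]
    keys = [e.api_key_env_var for e in environments]
    return len(set(services)) == len(services) and len(set(keys)) == len(keys)


print(check_isolation(ENVIRONMENTS))
```

The same model covers geo-failover: a secondary environment in another region is simply another entry with its own service and key.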

These patterns could also be combined to form a hybrid. In other words, multiple search services could be invoked with dedicated or shared support resources across the set. The open Azure Search API supports a number of configuration combinations but hybrid patterns are not part of this document.

2.3 Pattern Dependencies

The Azure Search Tier pattern does not depend on other patterns, but it does depend on key components and decisions that will shape its use as a reference. These decisions and design elements are classified as follows:

Content Sources

Content Sources define what data is available for indexing and how it will be used in search, including metadata, content types, locations, etc. This pattern depends on content in Azure Blob Storage, but content may reside in other locations and formats.

User Experience (UX)

The design of the UX includes search views, results, facets, etc. This pattern references the use of an MVC application hosted on Azure Websites to draw attention to what information needs to be displayed and what control is needed over the data (for both end users and administration). This includes ranking profiles, suggestions, paging, filtering, etc. UX controls may require additional data in the index to get the desired behavior or to enable maintenance operations. This pattern mainly depends on a solid understanding of what the Search API is capable of and on the design of your schema. There are interdependencies among all three (UX, search capability and schema), and a deficiency in any one area can significantly limit what the Solution achieves.

Index Schema

The index schema or data model determines what fields are needed and how these fields are used by the API. This is similar to developing a database schema and query API. This pattern assumes data models are application specific but uses a generic model to demonstrate range. Pay attention to data types and how the UX uses these data types.

Crawl

The crawl loads the index with data. It is an important part of the Content Processing Framework and often includes iFilters, Word Breakers, Thesaurus, Content Enhancers, etc. Open source and third party tools are available to help feed data into the index, but this pattern does not depend on them. Instead, this pattern describes the key elements and interfaces for working with Azure Search should you choose to build your own crawler.

Capacity Planning

Capacity planning determines how much capacity the search service needs. Areas of consideration include, but are not limited to:

- Queries per second

- Number of items per index

- Redundancy

- Disaster recovery

- Size

- Rate of ingestion

Azure is responsible for the provisioning and configuration of the search service; however, there are performance and capacity factors that affect economics. This pattern assumes that capacity estimation can be performed in order to determine the right number of Search Units. Pattern considerations, sizing and costs are outlined in the sections below.

Integration

Integration is based on APIs to manage the index and perform query actions. These APIs are based on REST, so there is nothing to install to integrate search into your application. Finalizing your design will depend on how you integrate search into your application, and a number of third party controls and libraries fit into this space. This pattern has a dependency on application integration and uses Microsoft platforms and development techniques to accomplish this task.

Search Unit

The Azure Search Tier pattern depends on having a solid understanding of a Search Unit. This is covered in detail in the Scale and Performance section of this document.

2.4 Azure Services

Azure Search is a standalone service within an Azure subscription. Interworking components such as load balancing, storage and traffic management are embedded in the service and transparent to developers. This pattern does, however, make use of other Azure services to complement the design and aid understanding. These components reside in the Storage Tier, but they are not required to run in Azure. The Azure services used in this pattern are:

- Azure Search – Search-as-a-Service (currently in Preview)

- Affinity Groups - established at each region to help make sure resources are co-located within a given Azure datacenter.

- Virtual Machines – used in this pattern to host the crawler

- Blob Storage – used in this pattern to host content

- Websites – used in this pattern to host a website that interacts with Search through the search API

2.5 Pattern Considerations

There are a number of service and index constraints to be aware of when implementing the Azure Search Tier pattern. These will have a strong influence on the number of search instances and configuration required for your application or service:

- Indexes – Maximum of 25 per service

- Storage – Maximum of 25 GB of storage per search unit

- Documents[1] – Maximum of 25 million per search unit

- Upload Request Size – Maximum of 16 MB per request

- URL Length – Maximum of 8KB in URL length

- QPS[2] – 15 QPS per Search Unit (replica)

These limits are discussed in more detail in later sections of this document.
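The limits above can be enforced on the client before a request is sent. A minimal sketch, assuming the limit values quoted in this list (which reflect the preview service and may have changed) and the `{"value": [...]}` upload body of the Azure Search REST API; the helper names are hypothetical.

```python
import json

# Limits quoted in this document for the preview service; confirm current values before relying on them.
MAX_UPLOAD_BYTES = 16 * 1024 * 1024  # 16 MB per request (interpreted here as MiB)
MAX_URL_BYTES = 8 * 1024             # 8 KB per URL
MAX_INDEXES = 25                     # indexes per service


def check_upload_payload(actions):
    """Serialize an upload batch and refuse it if it exceeds the per-request size limit."""
    body = json.dumps({"value": actions}).encode("utf-8")
    if len(body) > MAX_UPLOAD_BYTES:
        raise ValueError(f"batch is {len(body)} bytes; split it into smaller batches")
    return body


def check_url(url):
    """Refuse query URLs longer than the documented maximum."""
    if len(url.encode("utf-8")) > MAX_URL_BYTES:
        raise ValueError("URL too long; shorten the query or filter")
    return url


def check_index_count(index_names):
    """Refuse plans that need more indexes than one service can hold."""
    if len(index_names) > MAX_INDEXES:
        raise ValueError(f"{len(index_names)} indexes exceeds the per-service limit")
    return len(index_names)
```

Failing fast on the client keeps oversized batches and URLs from turning into rejected requests partway through a crawl.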

3 Interfaces and End Points

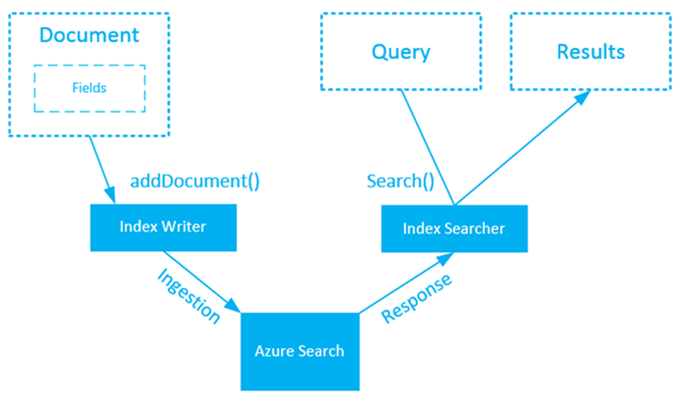

Azure Search is built on a search library that is used for indexing and search functions. It uses OData for communication and data transfer over REST APIs, which makes it easy to use from any programming language. Data placed into Azure Search is referred to as a document, which is a collection of fields, and loading data into search is called ingestion. The structure of the index is defined in JavaScript Object Notation (JSON). Queries are performed using HTTP GET, and results are returned as JSON for inclusion in your presentation.

A simple way to look at these interfaces and endpoints is as follows:

Figure 4 - Interfaces and Endpoints

Common API functions include:

- Creating and Updating an Index: Administrative functions to create indexes within the service

- Listing Indexes: Getting a list of indexes in the search service

- Getting Index Statistics: Getting statistics on each index such as number of items and storage size

- Deleting an Index: Remove an index from the service.

- Adding or Deleting Data within an Index: Maintaining the data in the index

- Search: Performing query actions in OData format for obtaining results

- Lookup: Perform lookup into Search to find a specific document based on the document key

- Count: Get a count of items matching a faceted term or results set

- Query Suggestions: Provide assistance with search query criteria (real-time as the user types)

All API calls must include an API key, either in the header or in the query string, must be issued over HTTPS and must include the API version in the query string. This applies to ingestion as well as to query actions from users. The use and handling of the API key may influence the choice between the Shared Service and Concurrency patterns.
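The three mandatory elements (API key, HTTPS, API version) can be captured in one request builder. This sketch uses only the Python standard library and builds the request without sending it. The `api-key` header name and the path layout follow the current Azure Search REST API; the `API_VERSION` value, the service URL and the key are assumptions to replace with your own.

```python
from urllib.parse import urlencode, urlsplit
from urllib.request import Request

# Assumption: replace with an api-version your service supports; the preview API may differ.
API_VERSION = "2024-07-01"


def build_request(service_url, path, api_key, method="GET", params=None, body=None):
    """Build (but do not send) a REST call carrying the three mandatory elements:
    an HTTPS URL, the api-version query parameter and the api-key header."""
    if urlsplit(service_url).scheme != "https":
        raise ValueError("requests must be issued over HTTPS")
    query = dict(params or {})
    query["api-version"] = API_VERSION
    url = f"{service_url.rstrip('/')}/{path.lstrip('/')}?{urlencode(query)}"
    headers = {"api-key": api_key}
    if body is not None:
        headers["Content-Type"] = "application/json"
    return Request(url, data=body, headers=headers, method=method)


# Hypothetical service URL and key, for illustration only.
req = build_request("https://contoso-search.search.windows.net",
                    "indexes/catalog/docs", "<api-key>", params={"search": "azure"})
print(req.full_url)
```

Passing `req` to `urllib.request.urlopen` would send it. In the current API, ingestion uses `method="POST"` with a JSON `body` against the index's `docs/index` path.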

The remainder of this section focuses on two aspects: the Crawler (Index Writer, for ingestion), should you choose to write your own, and the Index Searcher (for querying the service).

3.1 Crawler (Index Writer)

Search needs content to be meaningful to the user. Without data, the user has nothing to query against. While there are open-source projects and third party products available to crawl data, this pattern includes provisions for creating your own crawler. This is done to help you understand the complexities of a fairly simple concept and the suggested API. When using this pattern to develop a crawler, keep the following considerations in mind:

- Do you need identity and/or authorization components to access the data?

- What part of your data do you need to index?

- How will the crawler get launched?

- What constitutes a document (or item)?

- How will you know when data has changed or if it’s been deleted?

- Do you need security on the document or index?

- Do you want to index the body of the document (e.g. full-text crawl)?

Questions such as these help define the details that will influence your design when using this pattern. Once they are answered, the APIs needed to build a crawler can be described generically as follows:

- The Crawler itself

- Content processor

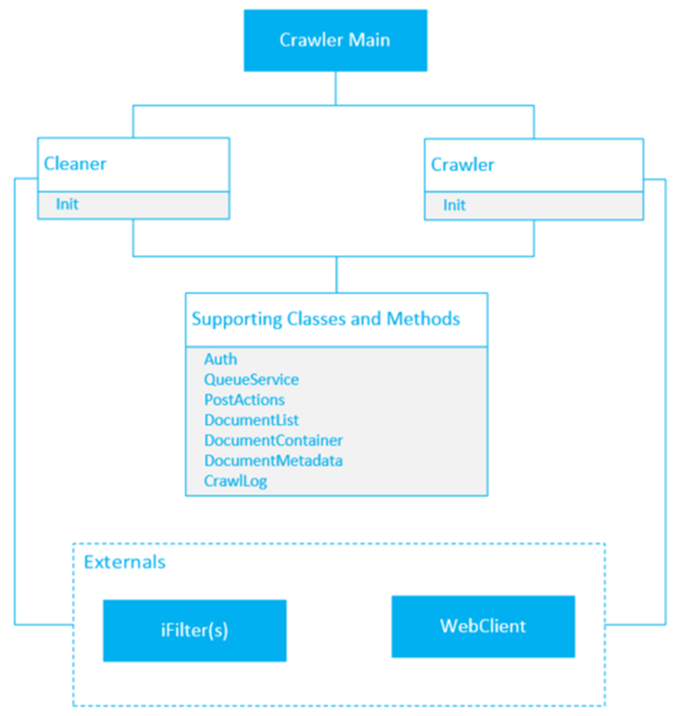

- Supporting classes

A generic class diagram showing sample methods and relationships for designing a simple crawler against file content or Binary Large Objects (BLOBs). This is being provided as a development pattern to accelerate your design.

Figure 5 - Crawler Class Pattern

3.1.1 Interface: Crawler Main

The purpose of the Crawler Main is to host the crawler - including security model, registration of connectors, iFilters and underlying objects. It handles:

- Parameters used to invoke the crawl

- Authentication used by the crawler

- Administrative information such as version, name, description, etc.

- A document processing queue seeded with starting values

- Registration of connectors or iFilters

This is the main class used to initialize the crawler and start the crawl.
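The responsibilities of Crawler Main can be sketched as a small class. All names here are hypothetical and mirror the pattern's description rather than any shipped library; authentication is omitted for brevity, and the registered extractors stand in for the iFilters mentioned above.

```python
from collections import deque
from dataclasses import dataclass, field
from pathlib import PurePosixPath
from typing import Callable, Deque, Dict, List

Extractor = Callable[[bytes], str]


@dataclass
class CrawlerMain:
    """Hypothetical host for a crawl: metadata, crawl parameters and registered extractors."""
    name: str
    version: str
    start_addresses: List[str]
    extractors: Dict[str, Extractor] = field(default_factory=dict)

    def register(self, extension: str, extractor: Extractor) -> None:
        """Register a text extractor (the role iFilters play) for a file extension."""
        self.extractors[extension.lower()] = extractor

    def seed_queue(self) -> Deque[str]:
        """Create the document processing queue, seeded with the starting addresses."""
        return deque(self.start_addresses)

    def extractor_for(self, address: str):
        """Return the extractor registered for an address's extension, or None."""
        return self.extractors.get(PurePosixPath(address).suffix.lower())


main = CrawlerMain("blob-crawler", "0.1", ["docs/readme.txt", "docs/spec.html"])
main.register(".txt", lambda data: data.decode("utf-8"))
queue = main.seed_queue()
```

A Crawler instance would then pop addresses from `queue`, skip those with no registered extractor (here `spec.html`), and pass extracted text on for batching.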

- Interface: Crawler - This is the main class that gets invoked once the crawl has started. Crawler Main creates an instance of this class, and it starts processing the queue. The queue is seeded with a start address and query. Each item being indexed is put into a DocumentContainer object and submitted for indexing through QueueService. Once data is extracted for the index, it is posted as a collection to Azure Search in size-driven batches.

- Interface: QueueService - The QueueService is a utility service provided to the crawl. These are functions that typically involve lists, item iterations, multi-threading and/or batching of data being posted to Azure Search.

- Interface: DocumentContainer - This is a container for the document, including document content and metadata. It represents an object loaded with data that typically conforms to the search schema.

- Interface: DocumentMetadata - Metadata is stored in a document container. Metadata for a document consists of a set of attributes. Some attributes are specifically named in various methods (such as ContentType, Extension, ModifiedDate and WebUrl), others are provided through name/value pair collections.

- Interface: CrawlLog - Logging is recommended to verify the results of any given crawl. The pattern suggests logging capacity and performance data, along with verbose logging of each item. It also suggests configuration parameters for controlling which events are logged and at what granularity.

- Interface: Cleaner - Cleaner is a class that checks each item in the index to help make sure it is current and has a valid WebUrl. If a file not found (404) response is returned from the check, the item is marked for deletion. Deletions are done as a batch transaction to maximize performance. The cleaner may be optional, depending on maintenance rules, and a change log, if available, could replace it; however, change logs are not included in this pattern.
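The DocumentContainer, DocumentMetadata, QueueService batching and Cleaner roles can be sketched together. This is a hypothetical illustration: the field names (`id`, `content`, `contentType`, `lastModified`, `webUrl`) assume a matching index schema, the 16 MB limit comes from the Pattern Considerations list, and the 1,000-document cap is an assumed conservative value. The `@search.action` values `upload` and `delete` follow the Azure Search REST API.

```python
import json
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, Iterator, List

MAX_UPLOAD_BYTES = 16 * 1024 * 1024      # per-request limit quoted in this document
BATCH_BUDGET = MAX_UPLOAD_BYTES - 1024   # headroom for the {"value": [...]} wrapper
MAX_BATCH_DOCS = 1000                    # assumption: conservative per-batch document cap


@dataclass
class DocumentMetadata:
    content_type: str
    extension: str
    modified_date: str                   # ISO 8601 string, e.g. "2014-08-01T00:00:00Z"
    web_url: str
    extra: Dict[str, str] = field(default_factory=dict)  # name/value pair attributes


@dataclass
class DocumentContainer:
    key: str
    content: str
    metadata: DocumentMetadata

    def to_action(self, action: str = "upload") -> dict:
        """Map the container onto one indexing action; field names must match the schema."""
        doc = {
            "@search.action": action,
            "id": self.key,
            "content": self.content,
            "contentType": self.metadata.content_type,
            "lastModified": self.metadata.modified_date,
            "webUrl": self.metadata.web_url,
        }
        doc.update(self.metadata.extra)
        return doc


def size_driven_batches(actions: Iterable[dict]) -> Iterator[List[dict]]:
    """QueueService role: group actions so each posted batch stays under both caps."""
    batch: List[dict] = []
    size = 0
    for action in actions:
        action_size = len(json.dumps(action).encode("utf-8")) + 1  # +1 for the separator
        if batch and (size + action_size > BATCH_BUDGET or len(batch) >= MAX_BATCH_DOCS):
            yield batch
            batch, size = [], 0
        batch.append(action)
        size += action_size
    if batch:
        yield batch


def cleaner_deletes(urls_by_key: Dict[str, str],
                    url_exists: Callable[[str], bool]) -> List[dict]:
    """Cleaner role: turn items whose WebUrl no longer resolves (e.g. HTTP 404) into delete actions."""
    return [{"@search.action": "delete", "id": key}
            for key, url in urls_by_key.items() if not url_exists(url)]
```

`url_exists` is left to the caller (for example, an HTTP HEAD request against the WebUrl), and the resulting delete actions can themselves be passed through `size_driven_batches` so that deletion happens as a batch transaction.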

3.2 Index Searcher

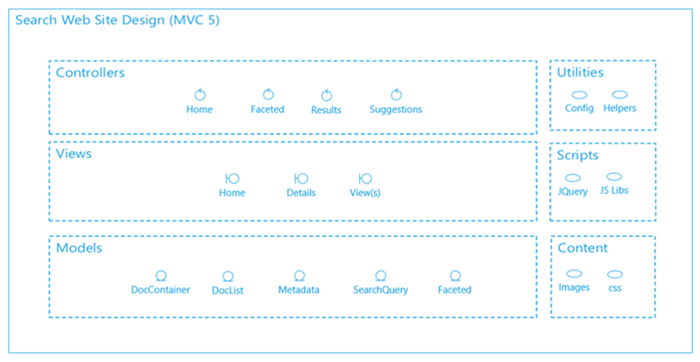

Since Azure Search is OData based, any language or framework that can call an OData API can be used to work with the service (e.g. JavaScript, .NET, Ruby, PHP, etc.). The Search UI is what people use to search, and it in turn interacts with the search service. The user experience can be interactive (with query suggestions), or it can be designed to show results only. To illustrate this pattern, a Search UI website design in MVC 5 is provided as a reference point to assist in development.

Figure 6 - Search Web Site MVC Design Pattern

The following considerations apply to the interface design:

- This pattern is based on a classic MVC 5 architecture with controllers, views, models and other components. The pattern divides the application into interconnected parts so as to separate the internal representation of data from the way information is presented to or accepted from the user. It aligns the website structure with the Search information architecture.

- Components are grouped into classic MVC containers for organization, use and maintenance.

- Models consist of application data, business rules, logic and function.

- Views are any output representation of information such as a search results page or page component.

- Controllers accept input and convert it into commands for the model, view or view model.

Note:

This is only a suggested design based on classic MVC principles. Specific coding practices and standards are expected to produce a different structure or to include additional tiers specific to your deployment.
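On the model side, a search request reduces to a set of query-string parameters. A minimal sketch, assuming the `search`, `$top`, `$skip`, `facet` and `$filter` parameter names of the Azure Search query API; the class and its fields are hypothetical and stand in for the MVC model described above.

```python
from dataclasses import dataclass, field
from typing import List, Optional
from urllib.parse import urlencode


@dataclass
class SearchRequestModel:
    """Hypothetical MVC model: what the user asked for, independent of how results are shown."""
    text: str
    page: int = 1
    page_size: int = 10
    facets: List[str] = field(default_factory=list)
    filter_expr: Optional[str] = None    # OData filter, e.g. "contentType eq 'application/pdf'"

    def query_string(self) -> str:
        """Translate the model into search parameters (names assume the Azure Search query API)."""
        params = [
            ("search", self.text or "*"),                       # "*" matches all documents
            ("$top", str(self.page_size)),                      # page size
            ("$skip", str((self.page - 1) * self.page_size)),   # offset of this page
        ]
        params += [("facet", name) for name in self.facets]     # one facet parameter per field
        if self.filter_expr:
            params.append(("$filter", self.filter_expr))
        return urlencode(params)


# A controller would populate the model from the HTTP request, e.g. page 3 of "azure" results.
model = SearchRequestModel("azure", page=3, facets=["contentType"],
                           filter_expr="contentType eq 'application/pdf'")
print(model.query_string())
```

The controller would append this string to the index's `docs` URL together with the `api-version` parameter, and the view would render the returned results and facet counts.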

4 Availability and Resiliency

Availability and resiliency in this design pattern translate primarily to index replicas and partitions. Azure handles most of this for you, and you will be able to adjust these settings in the portal to meet specific needs. With this version of the service, if higher availability is needed, multiple search instances can be created as shown in the Concurrency pattern. In this case, additional environments are set up to support high availability.

Note:

The Azure Search service is in public preview mode, therefore no SLA has been defined for this service at this time.
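When higher availability comes from multiple search instances, the client needs a way to move between them. The sketch below shows one possible client-side approach that tries services in priority order; it is an assumption of this example, not a feature of Azure Search, and `run_query` is a caller-supplied (hypothetical) function that issues the REST call against one endpoint.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def query_with_failover(endpoints: Sequence[str], run_query: Callable[[str], T]) -> T:
    """Try each search service in priority order and return the first successful result.

    `run_query` is expected to raise OSError on connection failures; urllib's
    URLError and HTTPError are both OSError subclasses.
    """
    errors = []
    for endpoint in endpoints:
        try:
            return run_query(endpoint)
        except OSError as exc:
            errors.append(f"{endpoint}: {exc}")
    raise RuntimeError("all search services failed: " + "; ".join(errors))
```

A production version would typically fail over only on connection errors, timeouts or 5xx responses, not on 4xx errors caused by a malformed query. Each instance must also be loaded with the same content, which means the crawler has to feed every service.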

5 Scale and Performance

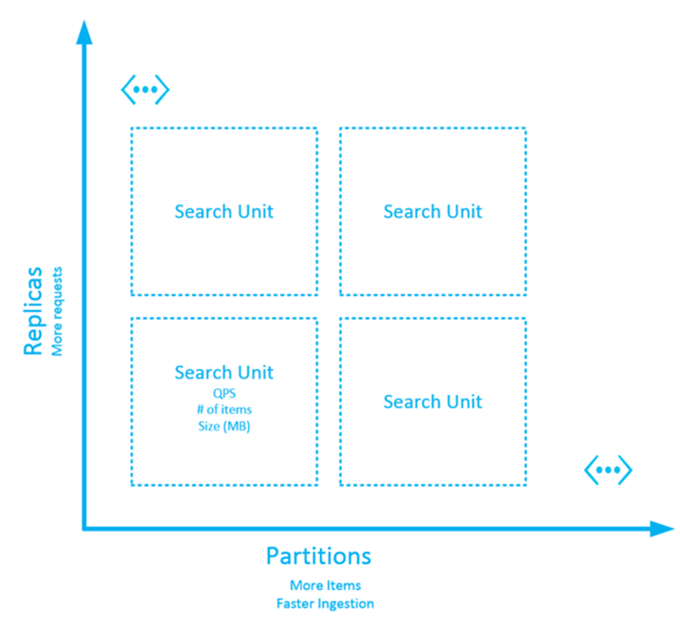

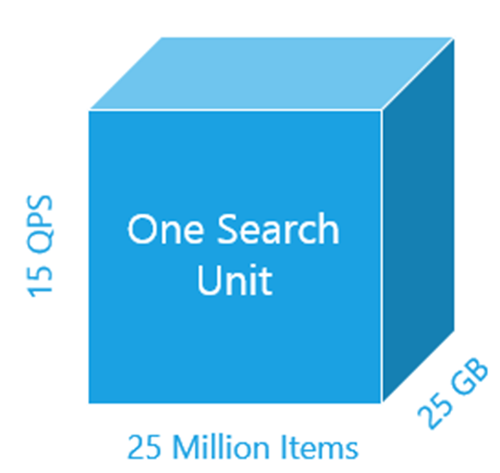

Performance and scalability can be challenging to predict and define. A number of network, hardware, software or environmental considerations can affect a system's performance. Azure Search removes much of this complexity by scaling the service based on a number of factors that make up a Search Unit. A Search Unit is a fixed unit of capability and the building block for scaling the service. Each Search Unit is designed to handle a specific capacity, based on:

- Index Size: The size of the index is key in determining the number of Search Units. In some cases, depending on content, the index can be 10-75% of the size of the original data. This is an important metric that will drive cost and arrangement.

- Ingestion Rate: Ingestion rates are usually expressed in terms of documents per hour. Search Units can be spread across index partitions to obtain higher ingestion rates.

- Queries per Second – This metric represents the number of query actions per second for results. Search Units can be stacked up into index replicas to obtain higher QPS.

- Availability – The level of availability required from the Azure Search service will potentially have an impact on the number of Search Units required.

Collectively, these factors can be represented as a two-dimensional figure based on replicas and partitions. A replica is a copy of the index that handles additional requests, and the search service automatically load balances requests across replicas. A partition holds either the entire index or, depending on size, a portion of it. Partitions are spread across different machines, which allows the search service to grow or shrink. These factors are important to understand as you design your service.

These concepts are outlined in the diagram below:

Figure 7 – Pattern considerations based on limits and areas of extensibility.

As the size of the index or the search request volume changes, the number of Search Units may change, but the layout is in most cases rectangular or square: each replica holds a copy of every partition. This is a key consideration in performance and cost.

Number of Search Units = Replicas × Partitions

Azure Search will maintain the number of Search Units, but you can control these settings in the Azure Portal to optimize the search service for your specific requirements. At the time of this writing (for reference purposes only), a Search Unit is sized approximately as follows:

One Search Unit is capable of handling:

- 15 Queries per second[3]

- 25 Million Items[4]

- 25 GB in size of Index

These factors drive cost for the Solution. If a high ingestion rate is required, additional Search Units are added, which spread the data across index partitions. See the sample sizing below and the cost factors in the Cost section.

Figure 8 – Search Unit Capacity.

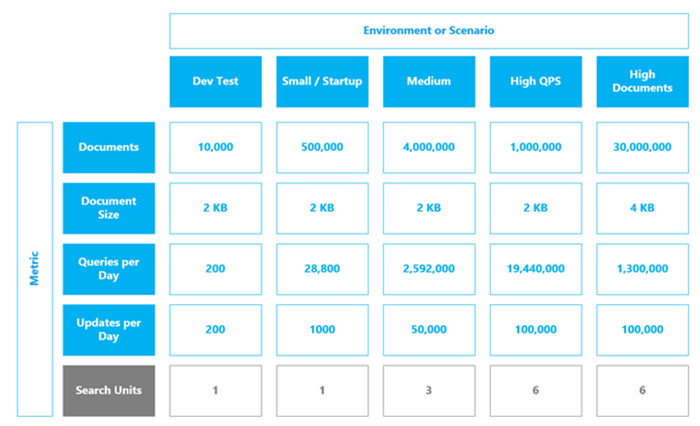

Note that the ability to control settings in Azure to optimize the configuration for specific needs will be included as part of the preview service. However, in this pattern, we are providing representative samples of how many Search Units can be anticipated in your deployment relative to various Environments/Scenarios and important metrics. The table below is provided as a reference to help you understand what you can expect for your deployment based on a range of conditions and factors.

Table 3 – Representative Sizing Factors

The final number of Search Units will depend not only on size factors but also on rate factors such as queries per second and ingestion. This will ultimately drive the number of partitions and replicas needed for deployment. As you can see, one Search Unit is capable of handling quite a number of deployment scenarios with reasonable capacity. Scenarios with high query rates and document counts are included to show how additional Search Units are employed to scale the service.
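The relationship between these factors and the Search Unit count can be sketched as a simple estimator. It assumes the per-unit figures quoted in this section (15 QPS, 25 million items, 25 GB), treats partitions as driven by data volume and replicas by query load, and does not model ingestion rate. `min_replicas` is a hypothetical knob for availability requirements. Treat the result as a rough first pass, not a sizing tool.

```python
import math

# Per-Search-Unit figures quoted in this document for the preview service (reference only).
QPS_PER_REPLICA = 15
ITEMS_PER_PARTITION = 25_000_000
GB_PER_PARTITION = 25


def estimate_search_units(peak_qps, items, index_gb, min_replicas=1):
    """Return (replicas, partitions, search_units) under a simplified model:
    partitions scale with data volume, replicas with query load, units = replicas * partitions."""
    partitions = max(1,
                     math.ceil(items / ITEMS_PER_PARTITION),
                     math.ceil(index_gb / GB_PER_PARTITION))
    replicas = max(min_replicas, math.ceil(peak_qps / QPS_PER_REPLICA))
    return replicas, partitions, replicas * partitions


# Example: 40 QPS at peak, 30 million items, 20 GB index.
print(estimate_search_units(40, 30_000_000, 20))  # (3, 2, 6)
```

In the example, 30 million items need two partitions and 40 QPS needs three replicas, so the rectangle is 3 × 2 = 6 Search Units.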

6 Cost

An important consideration when deploying any Solution within Microsoft Azure is the cost of ownership. Costs related to on-premises cloud environments typically consist of up-front investments in compute, storage and network resources, while costs related to public cloud environments such as Azure are based on granular consumption of the services and resources within them.

Costs can be broken down into two main categories:

- Cost factors

- Cost drivers

Cost factors consist of the specific Microsoft Azure services which have a unit consumption cost and are required to compose a given architectural pattern. Cost drivers are a series of configuration decisions for these services within a given architectural pattern that can increase or decrease costs. Microsoft Azure costs are divided by the specific service or capability hosted within Azure and continually updated to keep pace with the market demand.

Costs for each service are published on the Microsoft Azure pricing calculator. It is recommended that costs be reviewed regularly during the design, implementation and operation of this and other architectural patterns.

6.1 Cost Factors

Cost factors within the Azure Search Tier include pricing based on two dimensions: the number of Search Units (as outlined earlier) and the cost per GB of egress. While ingress network traffic is included in the Azure service, the egress of network traffic across an Azure virtual network carries a cost. Additional cost factors include optional services such as Application Insights for monitoring the supporting application that uses this pattern.
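The two pricing dimensions can be combined into a rough monthly estimate. This sketch takes prices as inputs; the example prices are placeholders for illustration, not real Azure rates, so take current values from the Azure pricing calculator. The function and class names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prices:
    """Unit prices are inputs; take current values from the Azure pricing calculator."""
    per_search_unit_month: float
    per_egress_gb: float


def monthly_search_cost(units_per_service, services, egress_gb, prices, optional=0.0):
    """Search Units across all services, plus egress, plus optional services
    (for example, Application Insights) passed in as a flat amount."""
    units = units_per_service * services
    return units * prices.per_search_unit_month + egress_gb * prices.per_egress_gb + optional


# Placeholder prices for illustration only; these are not real Azure rates.
prices = Prices(per_search_unit_month=100.0, per_egress_gb=0.10)
shared = monthly_search_cost(1, 1, 50, prices)     # Shared Service pattern: one service
dedicated = monthly_search_cost(1, 4, 50, prices)  # Concurrency pattern: four services
print(shared, dedicated)
```

Comparing the two calls makes the Concurrency pattern's "cost for each search service" trade-off concrete: with the same egress, Search Unit cost scales with the number of services.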

6.2 Cost Drivers

As stated earlier, cost drivers consist of the configurable options of the Azure services required when implementing an architectural pattern, which can impact the overall cost of the Solution. These configuration choices can have either a positive or a negative impact on the cost of ownership of a given Solution within Azure; however, they may also impact the overall performance and availability of the Solution, depending on the selections made by the organization.

Factors driving cost typically include capacity, redundancy and number of partitions. Cost drivers can be categorized by their level of impact (high, medium and low). Cost drivers for the Azure Search Tier architectural pattern are summarized in the table below.

Level of Impact | Cost Driver | Description
High | Search Unit | Provides 1 unit of search capability.
Medium | Size (and type) of Azure virtual machine instances | A consideration for Azure compute costs is the virtual machine instance size (and type). Instances range from low CPU and memory configurations to CPU- and memory-intensive sizes. Higher memory and CPU core allocations carry higher hourly operating costs. It is recommended that Extra Small (shared core) sizes be used only for hosting the crawler.
Medium | Blob Storage | Used to store content in Azure Blob containers.
Low | Web Site | Used to host the MVC 5 Web Site.

Note that the main cost driver will be the number of Search Units, as indicated by its High level of impact.
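Because redundancy and partitions both drive the Search Unit count, it helps to compute that count before sizing a deployment. The sketch below assumes the billing model described in later Azure Search documentation, where a service's Search Units equal replicas multiplied by partitions; confirm this against the release in use. The region names and replica/partition counts are hypothetical.

```python
def search_units(replicas: int, partitions: int) -> int:
    """Search Units for one search service, assuming SUs = replicas x partitions."""
    if replicas < 1 or partitions < 1:
        raise ValueError("replicas and partitions must both be >= 1")
    return replicas * partitions


def total_search_units(services: dict[str, tuple[int, int]]) -> int:
    """Sum Search Units across independent services, for example one per region."""
    return sum(search_units(r, p) for r, p in services.values())


# Hypothetical two-region deployment: 2 replicas x 1 partition in each region.
deployment = {"primary-region": (2, 1), "secondary-region": (2, 1)}
print(total_search_units(deployment))  # 4
```

Under this assumption, adding a second region for availability doubles the Search Unit count, which is why the region decision and the cost decision need to be made together.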

7 Operations

Cloud Platform Integration Framework (CPIF) extends the operational and management functions of Microsoft Azure, System Center and Windows Server to support managed cloud workloads. As outlined in CPIF, Microsoft Azure architectural patterns support deployment, business continuity and disaster recovery, monitoring and maintenance as part of the operations of the Azure Search architectural pattern.

The deployment of this pattern involves only services in Azure. This includes the following:

- Azure Search Service and Instance(s)

- Storage Account for hosting Blobs (for content) and a Virtual Machine (for the crawler).

- Azure Websites for providing a sample Search UI

No deployment automation is provided at this time, because all deployment is performed within the Azure Portal.

The Azure Portal includes a number of monitoring features for Azure services. These range from configuring monitoring for storage accounts to setting up instrumentation and data export in Azure Websites. Guidance for configuring monitoring, creating a monitoring dashboard and exporting data will be made available on https://azure.microsoft.com (or https://portal.azure.com) as the preview service evolves.

For Azure Search, Azure Portal extensions will be made available within https://portal.azure.com to provide visibility into, and control over, usage, capacity and configuration.

The maintenance of Azure Search predominantly consists of index schema and search data maintenance. Conceptually, this is no different from how search data is maintained within an application such as SharePoint. The critical factors include schema and data type changes and keeping the data fresh for each index and search instance.

In the current release, there is limited support for index schema updates. Schema updates that require re-indexing, such as changing field types, are not supported. While existing fields cannot be changed or deleted, new fields can be added to an existing index at any time. When a new field is added, all existing documents in the index automatically have a null value for that field. If schema additions or changes are needed, an effective strategy is to create a new field, load the content into it through the crawler, and then switch supporting resources (or the application) to use the new field. The old field can then be set to null or deleted, so that changes and swaps are handled with zero downtime.
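The additive-only rule above can be enforced in tooling before a schema change is submitted. The sketch below works on an index definition held as a Python dictionary using the `name`, `fields`, `type` and `key` properties of the Azure Search index JSON; the helper `add_field` and the field names are hypothetical. Submitting the updated definition to the service (for example with a REST `PUT` to the index URL) is outside the sketch.

```python
import copy


def add_field(index_definition: dict, new_field: dict) -> dict:
    """Return a copy of an index definition with one new field appended.

    Existing fields are never modified or removed, matching the rule that
    only new fields can be added. Documents already in the index will hold
    null for the new field until the crawler repopulates it.
    """
    existing = {f["name"] for f in index_definition.get("fields", [])}
    if new_field["name"] in existing:
        raise ValueError(f"field {new_field['name']!r} already exists; "
                         "existing fields cannot be changed")
    updated = copy.deepcopy(index_definition)  # leave the caller's copy untouched
    updated.setdefault("fields", []).append(dict(new_field))
    return updated


# Hypothetical index: replace 'title' by adding 'title_v2' and re-crawling into it.
index = {"name": "docs", "fields": [
    {"name": "id", "type": "Edm.String", "key": True},
    {"name": "title", "type": "Edm.String"},
]}
updated = add_field(index, {"name": "title_v2", "type": "Edm.String"})
print([f["name"] for f in updated["fields"]])
```

Rejecting name collisions up front turns an unsupported "change this field" request into an explicit error, steering the change toward the add-then-switch strategy.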

In the current release of Azure Search, business continuity/disaster recovery (BC/DR) is achieved using the Concurrency Pattern: separate Search Instances are created to meet BC/DR requirements. Each search instance is independent of the others, and Azure does not synchronize them. Keeping the instances consistent must therefore be accounted for in your architecture and maintenance design.
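Because Azure does not synchronize instances, the application or crawler has to apply every change to every instance. The sketch below sends one batch of documents to each search service using the Azure Search document-indexing endpoint (`/indexes/{index}/docs/index`) and the `@search.action` property. The `send` callable is a hypothetical transport that would also add the service's admin `api-key` header; the service names and API version are placeholders.

```python
from typing import Callable, Iterable

# Hypothetical transport: POSTs a JSON body to a URL and returns the HTTP status.
Sender = Callable[[str, dict], int]


def index_url(service: str, index: str, api_version: str) -> str:
    """Document-indexing endpoint for one search service."""
    return (f"https://{service}.search.windows.net/indexes/{index}"
            f"/docs/index?api-version={api_version}")


def upload_to_all(services: Iterable[str], index: str, docs: list[dict],
                  send: Sender, api_version: str) -> list[str]:
    """Send the same batch to every independent search service.

    Returns the services that did not accept the batch so they can be retried.
    Anything other than 200 (including 207, which Azure Search uses when only
    some documents succeed) is treated as a failure.
    """
    body = {"value": [{"@search.action": "upload", **doc} for doc in docs]}
    failed = []
    for service in services:
        try:
            status = send(index_url(service, index, api_version), body)
        except OSError:  # network errors count as a failed delivery
            status = None
        if status != 200:
            failed.append(service)
    return failed
```

Returning the failed services rather than raising lets a scheduled job retry only the lagging instance, which keeps the regions converging without re-sending to healthy ones.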

As Azure Search enters Preview, Azure portal extensions will be included for operational and management functions of the service. These are not available at this time, but features are anticipated to support deployment, business continuity, monitoring and maintenance of the service. This will also provide visibility and control into replicas, partitions and number of Search Units.

Outside of Azure Search, the use of this pattern can be achieved through the standard Azure Management Portal, Visual Studio and Azure PowerShell. Currently, Azure Search has its own onboarding process which will move to the Azure Portal in the near future.

For this pattern, Azure Search operations focus mainly on the index schema, content refresh and supporting resources. Azure Search supports schema changes, but you will need to re-crawl the data. Keeping the index fresh is another operational activity that needs careful planning and execution. If updates are significant, it may be easier to crawl into a new index and then update the index pointers held by supporting resources once it is ready. The same process may also work well for schema changes.
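The crawl-into-a-new-index approach needs two small pieces of bookkeeping: a naming scheme for successive indexes and a pointer that queries follow. The sketch below keeps both on the application side; the `-vN` naming convention and the `IndexPointer` class are hypothetical, and where the pointer is stored (configuration, a database row) is left open.

```python
import re


def next_index_name(current: str) -> str:
    """Derive the next versioned index name, e.g. 'catalog-v3' -> 'catalog-v4'."""
    match = re.fullmatch(r"(.+)-v(\d+)", current)
    if match is None:
        return f"{current}-v2"  # first rebuild of an unversioned index
    base, version = match.groups()
    return f"{base}-v{int(version) + 1}"


class IndexPointer:
    """Hypothetical application-side pointer to the index that queries use."""

    def __init__(self, active: str):
        self.active = active

    def swap(self, new_index: str, crawl_complete: bool) -> str:
        """Point queries at new_index only after its crawl has finished."""
        if not crawl_complete:
            raise RuntimeError(f"{new_index} is not ready; keep serving {self.active}")
        previous, self.active = self.active, new_index
        return previous  # the old index can be removed once nothing references it


pointer = IndexPointer("catalog-v1")
candidate = next_index_name(pointer.active)  # crawl into 'catalog-v2' first
retired = pointer.swap(candidate, crawl_complete=True)
print(pointer.active, retired)
```

Refusing to swap before the crawl completes is what keeps queries on a fully populated index throughout the refresh.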

8 Architecture Anti-Patterns

As with any architectural design there are both recommended practices and approaches which are not desirable. Anti-patterns for the Azure Search Tier include:

- Single region deployment for large, complex or demanding scenarios including disaster recovery

- Underestimating the demands and requirements of the crawler

Within the Azure Search architectural pattern, there are multiple decisions that could impact the availability of the entire application infrastructure or Solution that depends on this tier. In many cases, the Search Tier simply serves as a search layer for other tiers of an application, including web, business logic components and data tiers.

For these reasons, it is important to avoid decisions that impact the availability of this tier. While scalability and performance are important for any service, these configuration choices can be reviewed and revisited over time with few configuration changes and minimal impact to overall cost. Deployment to a single Azure region potentially exposes the application to the failure or inaccessibility of that region. While the loss of an entire region is unlikely, to withstand failures of this magnitude the pattern should include deployment to a second Azure region, as outlined in the sections above.
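With search services in two regions, the querying tier also needs a way to move off an unavailable region. The sketch below shows one simple option, ordered client-side failover; it is not the only approach (a DNS-level load balancer is another), and the `run_query` callable and endpoint URLs are hypothetical stand-ins for the application's real query code.

```python
from typing import Callable, Sequence

# Hypothetical query function: searches one service endpoint and returns results,
# raising OSError (for example ConnectionError) if the service is unreachable.
QueryFn = Callable[[str, str], list]


def search_with_failover(endpoints: Sequence[str], query: str,
                         run_query: QueryFn) -> tuple[str, list]:
    """Try each regional search service in order and return the first success."""
    errors = []
    for endpoint in endpoints:
        try:
            return endpoint, run_query(endpoint, query)
        except OSError as exc:  # treat network failures as "region unavailable"
            errors.append(f"{endpoint}: {exc}")
    raise RuntimeError("all search regions failed: " + "; ".join(errors))
```

Because each instance is independent, a query answered by the secondary region may briefly reflect older data; the fan-out indexing described for BC/DR keeps that gap small.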


In addition, deploying a single search service may appear on the surface to reduce costs; however, it does not protect against planned and unplanned unavailability within a given Azure datacenter or region. It is also important to make sure the crawl process is sufficiently scaled to meet the demands of maintaining the index. In addition to normal CRUD (Create, Read, Update and Delete) operations, there is a time component to handling updates and ingestion. For these reasons, the architectural anti-patterns for this tier typically focus on decisions that affect the availability of the deployed Solution and the maintenance of its data.
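The time component of ingestion can be checked with simple arithmetic before a crawler is sized. The sketch below assumes a sustained end-to-end crawler rate measured on sample data (the 500 documents per second used here is hypothetical) and a batch size of 1,000 documents, the per-batch maximum in later Azure Search documentation; confirm both for the release in use.

```python
import math


def crawl_estimate(documents: int, docs_per_second: float,
                   batch_size: int = 1000) -> tuple[int, float]:
    """Return (batches, hours) needed to push `documents` through the crawler.

    docs_per_second is the measured end-to-end rate (extraction plus indexing).
    """
    if documents < 0 or docs_per_second <= 0 or batch_size < 1:
        raise ValueError("invalid sizing inputs")
    batches = math.ceil(documents / batch_size)
    hours = documents / docs_per_second / 3600
    return batches, hours


def fits_window(documents: int, docs_per_second: float, window_hours: float) -> bool:
    """True if a full crawl finishes inside the available maintenance window."""
    return crawl_estimate(documents, docs_per_second)[1] <= window_hours


# Hypothetical: 25 million items at 500 docs/s takes about 13.9 hours.
batches, hours = crawl_estimate(25_000_000, 500)
print(batches, round(hours, 1), fits_window(25_000_000, 500, window_hours=12))
```

If the estimate exceeds the window, the options are to scale out the crawler, crawl incrementally, or use the new-index-then-swap approach so that a long crawl does not affect queries.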

[1] Number of documents is an approximation. This is based on average document size, full-text capability of crawler and extracted data. Capacity planning and sample data sets are needed for more accuracy.

[2] Number of queries per second is an approximation. This depends on the complexity of the query and the size of the result set.

[3] QPS is an approximation depending on query complexity and result set size.

[4] 25 million items per Search Unit is a relative number and is ultimately driven by the schema and attributes in a “document”.