Failover Cluster Network Design with Hyper-V: How many NICs are required?

The reliability and stability of Failover Clustering also depend “strongly” on the underlying network design and *drivers*, but that’s another story… Let’s focus on the design part here.

Windows Server 2003 based clusters (MSCS) had a hard requirement for a dedicated “HB” (heartbeat) network. Windows Server 2008 no longer has that requirement, so there is some uncertainty around network design for Windows Server 2008 Failover Clustering, especially when virtualization workloads are involved!

Heartbeat traffic.

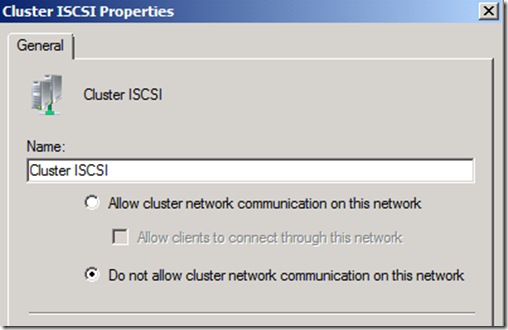

Intra-cluster communication (heartbeat traffic) now goes over every cluster network by default, unless you disable a network for cluster use, as in the case of iSCSI:

NOTE: It is a well-known best practice to disable cluster communication on iSCSI networks, so that they are dedicated to iSCSI traffic only!

The “golden” rule here is that, for a “general” Failover Cluster, Microsoft recommends at least 2 redundant network “paths” between the cluster nodes. Often, however, you will want more than this recommended minimum, either because you want additional redundancy (and/or performance) in your network connectivity (e.g. NIC Teaming) or because you use Hyper-V features such as Cluster Shared Volumes (CSV) and Live Migration (LM), which bring their own network requirements.
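To make the default behaviour and the “golden” rule concrete, here is a minimal sketch under a deliberately simplified model: each cluster network is reduced to a name plus a flag saying whether cluster communication is enabled on it. `ClusterNetwork`, `heartbeat_paths` and `meets_golden_rule` are hypothetical names for illustration only, not a Failover Clustering API, and the network names are made up. Heartbeats use every enabled network, iSCSI networks are excluded, and the rule is met when at least two paths remain.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterNetwork:
    """Hypothetical, simplified view of a cluster network."""
    name: str
    # False for networks disabled for cluster use, e.g. dedicated iSCSI networks
    cluster_communication: bool


def heartbeat_paths(networks):
    """Names of the networks that carry intra-cluster (heartbeat) traffic."""
    return [n.name for n in networks if n.cluster_communication]


def meets_golden_rule(networks, minimum=2):
    """True if at least `minimum` networks can carry cluster communication."""
    return len(heartbeat_paths(networks)) >= minimum


if __name__ == "__main__":
    nets = [
        ClusterNetwork("Management", True),
        ClusterNetwork("Heartbeat", True),
        ClusterNetwork("iSCSI", False),  # disabled for cluster communication
    ]
    print(heartbeat_paths(nets))
    print(meets_golden_rule(nets))
```

Note that the iSCSI network does not count toward the two redundant paths, because it is disabled for cluster communication.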

Depending on the workloads running on top of Failover Clustering, the number of required physical NICs can grow quickly. For example, Hyper-V Failover Clustering with Live Migration and iSCSI for VM guests needs roughly 4 physical NICs at minimum. More are of course required when you use NIC teaming for redundancy and/or performance.

Here are a few example scenarios with the “minimum recommended” number of physical NIC ports per cluster node:

Scenario 1:

Failover Cluster with 2 nodes and Hyper-V (1 dedicated virtual switch) in use, without LM/CSV

=> min. 3 physical NICs recommended => 2 cluster networks are automatically discovered and added to the cluster

Scenario 2:

Failover Cluster with 2 nodes and Hyper-V (1 dedicated virtual switch) in use, with LM/CSV

=> min. 4 physical NICs recommended => 3 cluster networks are automatically discovered and added to the cluster

Scenario 3:

Failover Cluster with 2 nodes and Hyper-V (1 dedicated virtual switch) in use, with LM/CSV and iSCSI on the host

=> min. 5 physical NICs recommended (see the iSCSI note below) => 4 cluster networks are automatically discovered and added to the cluster

Scenario 4:

Failover Cluster with 2 nodes and Hyper-V (2 dedicated virtual switches) in use, with LM/CSV and iSCSI on the host and in the guest

=> min. 6 physical NICs recommended (see the iSCSI note below) => 4 cluster networks are automatically discovered and added to the cluster

Scenario 5:

Failover Cluster with 2 nodes and Hyper-V (3 dedicated virtual switches) in use, with LM/CSV and iSCSI on the host and in the guest

=> min. 7 physical NICs recommended (see the iSCSI note below) => 4 cluster networks are automatically discovered and added to the cluster

NOTE: For iSCSI, at least 2 physical network paths are recommended for redundancy (availability). NIC TEAMING IS NOT SUPPORTED HERE; MPIO or MCS (Multiple Connections per Session) must be used for reliability and availability instead. As a best practice, you should disable “cluster communication” on the iSCSI interfaces!
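The scenario counts above can be reproduced with a small calculator. The post doesn’t spell out what each NIC is used for, so the role split below is my assumption, chosen because it matches all five scenarios: one management NIC, one heartbeat/CSV NIC, one Live Migration NIC when LM/CSV is used, one NIC per dedicated virtual switch, and the host iSCSI NIC(s). The assumption is also that dedicated virtual-switch NICs carry no host IP address and are therefore not discovered as cluster networks. `min_nics` is a hypothetical helper, not part of any Microsoft tool.

```python
def min_nics(vswitches, lm_csv, host_iscsi, iscsi_paths=1):
    """Return (physical NICs per node, cluster networks discovered).

    Assumed roles: management, heartbeat/CSV, Live Migration (if LM/CSV),
    one NIC per dedicated virtual switch, and host iSCSI NIC(s).
    """
    nics = 1                          # management network
    nics += 1                         # heartbeat / CSV network
    nics += 1 if lm_csv else 0        # dedicated Live Migration network
    nics += vswitches                 # one dedicated NIC per Hyper-V virtual switch
    nics += iscsi_paths if host_iscsi else 0  # host iSCSI path(s)
    # Assumption: dedicated vswitch NICs have no host IP, so the cluster does not
    # discover them; each iSCSI path is assumed to sit on its own subnet.
    networks = nics - vswitches
    return nics, networks


SCENARIOS = {
    1: dict(vswitches=1, lm_csv=False, host_iscsi=False),
    2: dict(vswitches=1, lm_csv=True, host_iscsi=False),
    3: dict(vswitches=1, lm_csv=True, host_iscsi=True),
    4: dict(vswitches=2, lm_csv=True, host_iscsi=True),
    5: dict(vswitches=3, lm_csv=True, host_iscsi=True),
}

if __name__ == "__main__":
    for number, params in SCENARIOS.items():
        nics, nets = min_nics(**params)
        print(f"Scenario {number}: {nics} NICs, {nets} cluster networks")
```

Following the iSCSI note and using two iSCSI paths (`iscsi_paths=2`) adds one more NIC per node to scenarios 3 to 5.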

Of course, when you use techniques like NIC teaming for networks such as “Management”, “Hyper-V switches” or “CSV”, the number of required physical NICs grows accordingly.
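As a rough sketch of how teaming multiplies the count, assume each teamed role simply needs as many physical NICs as its team has members. The role names, counts and the `nics_with_teaming` helper are hypothetical, for illustration only. The check for iSCSI encodes the note above: teaming is not supported there, so MPIO or MCS must be used instead.

```python
def nics_with_teaming(roles, teamed):
    """Physical NICs per node once some roles are teamed (simplified model).

    roles:  role name -> NICs needed without teaming
    teamed: role name -> number of team members per teamed NIC
    """
    total = 0
    for role, count in roles.items():
        members = teamed.get(role, 1)  # untouched roles keep a single NIC
        if role == "iSCSI" and members > 1:
            # NIC teaming is not supported for iSCSI; use MPIO or MCS instead
            raise ValueError("NIC teaming is not supported for iSCSI; use MPIO or MCS")
        total += count * members
    return total


if __name__ == "__main__":
    # Hypothetical role split for a scenario-2 style node (Hyper-V with LM/CSV)
    roles = {"Management": 1, "Heartbeat/CSV": 1, "Live Migration": 1, "VM switch": 1}
    # Team the management and VM switch NICs with 2 members each
    print(nics_with_teaming(roles, {"Management": 2, "VM switch": 2}))
```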

Generally, the cluster service (with “NETFT”, the network fault-tolerant component) automatically discovers each network based on its subnet and adds it to the cluster as a cluster network. iSCSI networks should generally be disabled for cluster use (cluster communication).

Further official guidance on network design for Failover Clustering environments can be found here:
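The subnet-based discovery can be illustrated with a simplified model in Python’s standard `ipaddress` module. This is a sketch, not how the cluster service is actually implemented: it only groups IP-configured host adapters by subnet, and the admin’s decision to disable cluster communication on iSCSI subnets is modelled as an input parameter. The node names, adapter names, addresses and the `discover_cluster_networks` function are hypothetical.

```python
import ipaddress


def discover_cluster_networks(adapters, iscsi_subnets=()):
    """Group host adapters into cluster networks by subnet (simplified model).

    adapters: iterable of (node, adapter_name, "ip/prefix") tuples; adapters
              without a host IP (e.g. dedicated vswitch NICs) are not listed.
    Returns {subnet: {"adapters": [...], "cluster_communication": bool}}.
    """
    iscsi = {ipaddress.ip_network(s) for s in iscsi_subnets}
    networks = {}
    for node, name, cidr in adapters:
        subnet = ipaddress.ip_interface(cidr).network
        entry = networks.setdefault(
            subnet,
            # iSCSI subnets are kept, but disabled for cluster communication
            {"adapters": [], "cluster_communication": subnet not in iscsi},
        )
        entry["adapters"].append(f"{node}/{name}")
    return networks


if __name__ == "__main__":
    adapters = [
        ("Node1", "Management", "192.168.1.11/24"),
        ("Node2", "Management", "192.168.1.12/24"),
        ("Node1", "iSCSI", "10.0.2.11/24"),
        ("Node2", "iSCSI", "10.0.2.12/24"),
    ]
    for subnet, info in sorted(discover_cluster_networks(adapters, ["10.0.2.0/24"]).items()):
        print(subnet, info["cluster_communication"], info["adapters"])
```

Both nodes’ adapters on the same subnet end up in one cluster network, which is why each role in the scenarios above needs its own subnet to show up as a separate cluster network.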

Network in a Failover Cluster

https://technet.microsoft.com/en-us/library/cc773427(WS.10).aspx

Network adapter teaming and server clustering

https://support.microsoft.com/kb/254101

Hyper-V: Live Migration Network Configuration Guide

https://technet.microsoft.com/en-us/library/ff428137(WS.10).aspx

Requirements for Using Cluster Shared Volumes in a Failover Cluster in Windows Server 2008 R2

https://technet.microsoft.com/en-us/library/ff182358(WS.10).aspx

Designating a Preferred Network for Cluster Shared Volumes Communication

https://technet.microsoft.com/en-us/library/ff182335(WS.10).aspx

Appendix A: Failover Cluster Requirements

https://technet.microsoft.com/en-us/library/dd197454(WS.10).aspx

Cluster Network Connectivity Events

https://technet.microsoft.com/en-us/library/dd337811(WS.10).aspx

Understanding Networking with Hyper-V

https://www.microsoft.com/download/en/details.aspx?displaylang=en&id=9843

Achieving High Availability for Hyper-V

https://technet.microsoft.com/en-us/magazine/2008.10.higha.aspx

Windows Server 2008 Failover Clusters: Networking (Part 1-4)

https://blogs.technet.com/askcore/archive/2010/02/12/windows-server-2008-failover-clusters-networking-part-1.aspx

https://blogs.technet.com/askcore/archive/2010/02/22/windows-server-2008-failover-clusters-networking-part-2.aspx

https://blogs.technet.com/askcore/archive/2010/02/25/windows-server-2008-failover-clusters-networking-part-3.aspx

https://blogs.technet.com/askcore/archive/2010/04/15/windows-server-2008-failover-clusters-networking-part-4.aspx

Description of what to consider when you deploy Windows Server 2008 failover cluster nodes on different, routed subnets

https://support.microsoft.com/kb/947048

Stay tuned…

Regards

Ramazan