WCF Instancing, Concurrency, and Throttling – Part 2

From a developer’s perspective, code is either thread-safe or it is not. WCF will assume your service is not thread-safe unless you tell it otherwise by setting the ConcurrencyMode property on the ServiceBehavior attribute. Your options for concurrency are Single (the default), Multiple, and Reentrant. Depending on how your implementation achieves thread safety, you can significantly increase the throughput of your service by setting the ConcurrencyMode to Multiple or Reentrant.
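As a minimal sketch of where this setting lives, the following declares a service and opts in to multi-threaded dispatch. The contract and class names (IEchoService, EchoService) are hypothetical and chosen only for illustration; the service holds no state, so it is trivially thread-safe.

```csharp
using System.ServiceModel;

// Hypothetical contract used only for illustration.
[ServiceContract]
public interface IEchoService
{
    [OperationContract]
    string Echo(string text);
}

// ConcurrencyMode.Single is the default. Specify Multiple only when the
// implementation is thread-safe; this one is, because it keeps no state.
[ServiceBehavior(ConcurrencyMode = ConcurrencyMode.Multiple)]
public class EchoService : IEchoService
{
    public string Echo(string text)
    {
        return text;
    }
}
```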

The options for concurrency are, for the most part, self-explanatory, so please follow the links above if this concept is new to you. In this post, I’m going to discuss two specific scenarios: reentrant services and PerCall/multi-threaded services.

Reentrant Services

Making your service reentrant constrains the runtime to allow only one thread of execution on your service at any point in time (that is, it is still single-threaded). However, if your service operation makes an outbound WCF call then the lock on the instance context will be released, allowing another message to be dispatched to your service while the outbound call occurs. When the outbound call completes (returns back to your service), its thread will block until it can re-acquire the lock on the instance context.

Reentrant concurrency applies when both of the following conditions hold:

1. your service is single-threaded, and

2. your service makes calls to other WCF services downstream OR your service calls back to the client through a callback contract.

If either of these conditions doesn’t apply to your service, then you would not gain anything by making your service reentrant.
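To make the callback case concrete, here is a minimal sketch of a reentrant service that calls back to its client in the middle of an operation. The names IWorkService, IProgressCallback, and WorkService are hypothetical; only the attributes and OperationContext.Current.GetCallbackChannel are WCF APIs.

```csharp
using System.ServiceModel;

// Hypothetical callback contract implemented by the client.
public interface IProgressCallback
{
    [OperationContract]
    void ReportProgress(int percent);
}

// Hypothetical duplex service contract.
[ServiceContract(CallbackContract = typeof(IProgressCallback))]
public interface IWorkService
{
    [OperationContract]
    void DoWork();
}

// Still single-threaded, but the instance context lock is released
// while an outbound WCF call is in flight.
[ServiceBehavior(ConcurrencyMode = ConcurrencyMode.Reentrant)]
public class WorkService : IWorkService
{
    public void DoWork()
    {
        IProgressCallback callback =
            OperationContext.Current.GetCallbackChannel<IProgressCallback>();

        // Outbound call: another message may be dispatched to this
        // instance context while we wait for the client to reply.
        callback.ReportProgress(50);

        // Execution resumes here only after the lock is re-acquired.
    }
}
```

Because another message can run on the same instance while the callback is outstanding, any instance state should be left consistent before the outbound call is made.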

PerCall / Multi-Threaded Services (over a binding with session)

For PerCall services, every message results in a new instance of your service, so generally there is not a concurrency issue unless you are accessing a shared resource between instances. However, there is a scenario where setting ConcurrencyMode to Multiple on a PerCall service can increase throughput to your service if the following conditions apply:

1. The client is multi-threaded and is making calls to your service from multiple threads using the same proxy.

2. The binding between the client and the service is a binding that has session (for example, netTcpBinding, wsHttpBinding w/Reliable Session, netNamedPipeBinding, etc.).

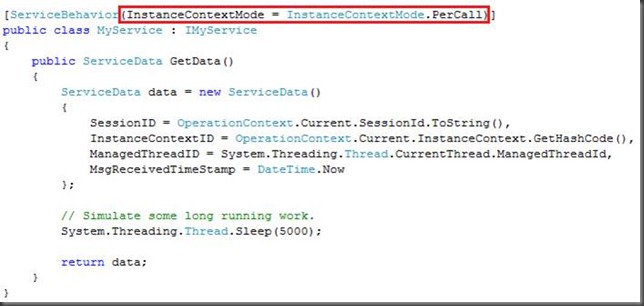

If these conditions apply and all you do is set your InstanceContextMode to PerCall, leaving the ConcurrencyMode at its default value (Single), then your multi-threaded client will not get the expected performance benefit of making concurrent calls to your service, because there is a lock on the session in the channel layer. To illustrate, consider the following service code. This service simply returns some data from the service side to the client. One piece of data to notice is the MsgReceivedTimeStamp, which indicates when the service instance actually receives the message.

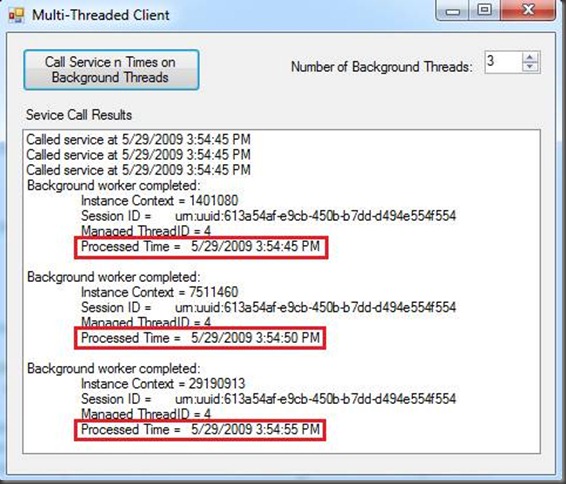
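Since the service code above appears only as a screenshot, here is a rough sketch of what such a service might look like. Only MsgReceivedTimeStamp comes from the text; the names ServiceData, ITimingService, TimingService, GetData, and InstanceHashCode are assumptions, and the five-second sleep is inferred from the timings discussed in this post.

```csharp
using System;
using System.Runtime.Serialization;
using System.ServiceModel;
using System.Threading;

// Hypothetical data contract; only MsgReceivedTimeStamp is named in the post.
[DataContract]
public class ServiceData
{
    [DataMember] public DateTime MsgReceivedTimeStamp { get; set; }
    [DataMember] public int InstanceHashCode { get; set; }
}

[ServiceContract]
public interface ITimingService
{
    [OperationContract]
    ServiceData GetData();
}

// PerCall instancing with the default ConcurrencyMode (Single).
[ServiceBehavior(InstanceContextMode = InstanceContextMode.PerCall)]
public class TimingService : ITimingService
{
    public ServiceData GetData()
    {
        var data = new ServiceData
        {
            // Recorded as soon as the message reaches the service instance.
            MsgReceivedTimeStamp = DateTime.Now,
            // Identifies the InstanceContext that handled this message.
            InstanceHashCode = OperationContext.Current.InstanceContext.GetHashCode()
        };

        // Simulate five seconds of work (assumed duration).
        Thread.Sleep(TimeSpan.FromSeconds(5));
        return data;
    }
}
```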

Next, assume we have a client application that caches a proxy to be used by multiple threads. If this client were to call the service simultaneously on 3 different threads using a BackgroundWorker, then the total response time would be about 15 seconds (5 seconds for each call). Output from such an application might look like this.

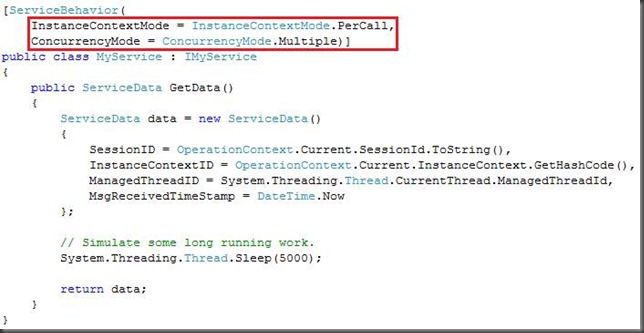
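A client along these lines could be sketched as follows. For brevity it uses plain threads rather than a BackgroundWorker, and it assumes a hypothetical ITimingService contract with a GetData operation, a NetTcpBinding endpoint, and a made-up address; none of these names come from the original code.

```csharp
using System;
using System.Diagnostics;
using System.Runtime.Serialization;
using System.ServiceModel;
using System.Threading;

// Client-side copy of a hypothetical contract.
[DataContract]
public class ServiceData
{
    [DataMember] public DateTime MsgReceivedTimeStamp { get; set; }
}

[ServiceContract]
public interface ITimingService
{
    [OperationContract]
    ServiceData GetData();
}

public static class Program
{
    public static void Main()
    {
        // Hypothetical endpoint address; netTcpBinding provides a session.
        var factory = new ChannelFactory<ITimingService>(
            new NetTcpBinding(),
            new EndpointAddress("net.tcp://localhost:8000/TimingService"));

        // One proxy shared by all threads.
        ITimingService proxy = factory.CreateChannel();

        // Open explicitly so the implicit open on first use does not
        // itself become a source of serialized calls.
        ((IClientChannel)proxy).Open();

        var stopwatch = Stopwatch.StartNew();
        var threads = new Thread[3];
        for (int i = 0; i < threads.Length; i++)
        {
            int callNumber = i + 1;
            threads[i] = new Thread(() =>
            {
                ServiceData data = proxy.GetData();
                Console.WriteLine("Call {0}: received by service at {1:HH:mm:ss.fff}",
                    callNumber, data.MsgReceivedTimeStamp);
            });
            threads[i].Start();
        }

        foreach (Thread thread in threads)
        {
            thread.Join();
        }

        Console.WriteLine("Total elapsed: {0:F1} s", stopwatch.Elapsed.TotalSeconds);

        ((IClientChannel)proxy).Close();
        factory.Close();
    }
}
```

Comparing the MsgReceivedTimeStamp values printed for each call is what reveals whether the service received the messages together or one after another.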

Clearly, this is not the behavior a client-side developer would expect. The results here are no different from what we would see if we made 3 calls to the service sequentially. What we would like to see is all 3 calls dispatched at the same time, so that the total response time is about 5 seconds (not 15 seconds). To achieve this, we can set the ConcurrencyMode to Multiple as shown here.

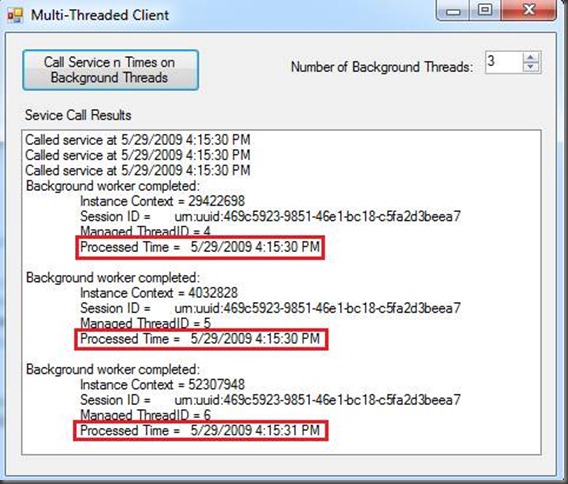
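In text form, the change amounts to one additional property on the ServiceBehavior attribute. The sketch below uses a hypothetical ITimingService contract and an assumed five-second delay; the attribute settings are the point.

```csharp
using System;
using System.ServiceModel;
using System.Threading;

// Hypothetical contract used only for illustration.
[ServiceContract]
public interface ITimingService
{
    [OperationContract]
    DateTime GetMsgReceivedTimeStamp();
}

// Still one instance per message, but messages arriving on the same
// session may now be dispatched concurrently.
[ServiceBehavior(
    InstanceContextMode = InstanceContextMode.PerCall,
    ConcurrencyMode = ConcurrencyMode.Multiple)]
public class TimingService : ITimingService
{
    public DateTime GetMsgReceivedTimeStamp()
    {
        DateTime received = DateTime.Now;

        // Simulate five seconds of work (assumed duration).
        Thread.Sleep(TimeSpan.FromSeconds(5));
        return received;
    }
}
```

Because each call still gets its own instance, instance fields are not shared between calls; only resources shared across instances, such as static fields, need explicit synchronization.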

Running the client against the new service will yield the results we’re looking for. That is, all 3 calls were dispatched to the service at roughly the same time and we still have a unique InstanceContext (or service instance) for each message as shown here.

Writing thread-safe code and applying the correct ConcurrencyMode can result in significantly improved throughput for your service. In the next post, I’ll talk about how and why you can throttle your service.