Reconfigurable Data Processing for Clouds

Along with Anil Madhavapeddy at the University of Cambridge, I've been thinking about how to apply reconfigurable computing technology (for example FPGAs) in data-centres and in cloud computing systems. For some time now, reconfigurable computing has had the potential to make a huge impact on mainstream high performance computing. We will soon have devices with a million LUTs or more of fine-grain parallel processing power. Combined with the ability to define high-bandwidth custom memory hierarchies, this offers a compelling mix of flexibility and performance. However, mainstream adoption of reconfigurable computing has been hampered by two things: the need to use and maintain specialized FPGA-based boards and clusters, and the lack of programming models that make this technology accessible to mainstream programmers. FPGAs do not enjoy first-class operating system support, and they lack the application binary interfaces (ABIs) and abstraction layers that other co-processing technologies (most notably GPUs) enjoy.

By placing FPGAs on the same blades as GPUs and multicore processors in the cloud, and offering them as a managed service with a high-level programming infrastructure, we can see a new dawn for reconfigurable computing. This would make this exciting technology available to millions of developers. They would not have to take on the overhead of buying and maintaining specialized hardware, nor invest in complex tool-chains and programming models based on the low-level details of circuit design. The major limitation on the growth potential of data-centres is now energy consumption. It is here that alternative computing resources like FPGAs can make a significant impact, allowing us to scale out cloud operations to an extent that is not possible using conventional processors alone.

Just a decade ago, it was common for a company to purchase physical machines and place them with a hosting company. As the Internet's popularity grew, companies' reliability and availability requirements also grew beyond what a single data-centre could offer. Companies such as Microsoft, Google and Amazon started building huge data-centres in the USA and Europe, with correspondingly larger energy demands. These providers had to provision for their peak load, and so had much idle capacity at other times. At the same time, researchers were examining the possibility of dividing up commodity hardware into isolated chunks of computation and storage that could be rented on demand. The XenoServers project forecast a public infrastructure spread across the world. The Xen hypervisor was developed as an open-source solution for partitioning a machine among multiple untrusted operating systems. Amazon adopted Xen to underpin its Elastic Compute Cloud (EC2), and thus became the first commercial provider of what is now dubbed "cloud computing": renting a slice of a huge data-centre to provide on-demand computation resources that can be dynamically scaled up and down according to demand.

Cloud computing brought reconfigurable computing to the software arena. Hardware resources are now dynamic, so a sudden surge in load can be absorbed by adding more virtual machines to a server pool until the surge subsides. This resulted in a wave of new data-centre components designed to scale across machines. They range from storage systems like Dynamo and Cassandra to distributed computation platforms like Google's MapReduce and Microsoft's Dryad, which have also inspired FPGA-based map-reduce idioms.

Where are the Hardware Clouds?

Cloud data-centres have been encouraging horizontal scaling by increasing the number of hosts. Vertical scaling, using more powerful individual machines, is now difficult because CPUs have shifted to multiple cores rather than simply cranking up the clock speed. IBM notes that with "data centers using 10-30 times more energy per square foot than office space, energy use doubling every 5 years, and [..] delayed capital investments in new power plants", something must change. The growth potential of these data-centres is now energy limited, and the inefficiency of the software stack is beginning to take its toll. This is an ideal time to dramatically improve the efficiency of data-centres by mapping common, large-scale tasks onto shared million-LUT FPGA boards that complement the general-purpose hardware currently installed.

Challenges

Reconfigurable FPGA technology has not made good inroads into commodity deployment, and we now consider some of the reasons. One major problem is that the CAD tools developed to date predominantly target a mode of use that is very different from what is required for reconfigurable computing in the cloud. CAD tools target an off-line scenario. The objective is to implement some function (e.g. network packet processing) in the smallest area, which reduces component cost by allowing the use of a smaller FPGA, whilst also achieving timing closure. The designed component becomes part of an embedded system (e.g. a network router) of which thousands or millions of units are made. In that setting performance and utilisation are absolutely key. Productivity has mattered less, because the one-off engineering costs are amortised over many units.

Using FPGAs for computing and co-processing has very different economics, which the mainstream vendor tools support poorly. Reconfigurable computing elements in the cloud need CAD tools that prioritise flexibility. They must also be programmable by regular mainstream programmers familiar with technologies like .NET and Java; we cannot require them to be ace Verilog programmers. Mainstream tool vendors have responded poorly to the requirements of this constituency because, until now, it has represented a very small part of the market.

Reconfigurable computing in the cloud promises to change that, and there will be new demand for genuinely high-level synthesis tools that map programs to circuits. We need high-level synthesis that works in a much broader sense than current tools do, i.e. with a focus on applications rather than compute kernels. We need to be able to compile dynamic data-structures, recursion and very heterogeneous fine-grain parallelism, and not just data-parallelism over well-behaved static arrays. The need to synthesize applications rather than matrix-based kernel operations poses new challenges for the high-level synthesis community. This new scenario also enables new models of use for such tools. One example is run-once circuits, which make no sense in the conventional embedded use model.

To achieve the vision of a reconfigurable cloud-based computing system, we need significant innovation in CAD tools to make them fit for (the new) purpose. Commercial vendor tools are closed, and the business models of current FPGA manufacturers are not aligned with this use, so we cannot expect the required support and capabilities from them. In particular, we need cloud-based reconfigurable computing models that largely abstract the underlying architecture. A computation should map to a Xilinx, an Altera or another vendor's part, because we wish to make the reconfigurable resource a commodity item. Vendors, however, have conventionally resisted commoditisation aggressively. This makes it essential for the reconfigurable computing research community to take full ownership of the programming chain. That chain starts at an unmapped netlist (perhaps against a generic library) and runs all the way to a programming bit-stream, plus the extra information required to support dynamic reconfiguration. The community already has excellent work on tools like VPR, Torc and LegUp, which can be used as a starting point for such a tool chain. A similar precedent in the software world is LLVM, which has grown into a mature framework for working on most aspects of compiler technology.

Overlay Architectures

When one implements an algorithm on a microprocessor, the fundamental task involves working out how to "drive" a fixed architecture to accomplish the desired computation. When one implements an algorithm on an FPGA, one can devise an architecture tailored to the given problem. In general, the former approach affords flexibility and rapid development at the cost of performance, while the latter affords performance at the cost of development effort. Overlay architectures offer an important intermediate step. Here one implements a logical architecture (e.g. some form of vector processor) on top of a regular reconfigurable architecture like an FPGA. Indeed, there is a healthy level of research work on soft vector processors, e.g. VESPA, VIPERS and VEGAS. This approach is particularly pertinent for cloud-based deployment of reconfigurable systems because it makes two very important contributions:

- reconfiguration time is no longer an issue, because what is downloaded to the FPGA is a "program" for the overlay architecture and not a reconfiguration bit-stream

- the semantic gap between regular programmers and the reconfigurable architecture and tools is reduced, because the overlay architecture now acts as a contract between the programmer and the low-level device
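To make the first point concrete, here is a minimal software model of an overlay, assuming a toy vector processor with three made-up opcodes (`vadd`, `vmul`, `vmax`). The function name `run_overlay` and the instruction format are hypothetical and are not the instruction set of VESPA, VIPERS or VEGAS. The overlay's datapath is fixed once. Only the instruction list changes between jobs, which is why no new bit-stream needs to be downloaded.

```python
from typing import Dict, List, Tuple

# Hypothetical instruction format for a toy vector overlay: (opcode, dst, src_a, src_b)
Instr = Tuple[str, str, str, str]


def run_overlay(program: List[Instr], regs: Dict[str, List[int]]) -> Dict[str, List[int]]:
    """Interpret a program on a software model of a fixed vector overlay.

    The set of supported opcodes stands in for the datapath that was
    synthesized onto the FPGA once; jobs only supply new instruction lists.
    """
    ops = {
        "vadd": lambda a, b: a + b,
        "vmul": lambda a, b: a * b,
        "vmax": max,
    }
    for opcode, dst, src_a, src_b in program:
        if opcode not in ops:
            # A missing operation would need a different overlay, not a new "program".
            raise ValueError(f"overlay does not support {opcode!r}")
        f = ops[opcode]
        # Every lane applies the same operation: data-parallel execution.
        regs[dst] = [f(a, b) for a, b in zip(regs[src_a], regs[src_b])]
    return regs


regs = {"v0": [1, 2, 3, 4], "v1": [10, 20, 30, 40], "v2": [0, 0, 0, 0]}
prog = [("vadd", "v2", "v0", "v1"), ("vmul", "v2", "v2", "v0")]
print(run_overlay(prog, regs)["v2"])  # [11, 44, 99, 176]
```

Swapping `prog` for a different list reuses the same "hardware". Adding a new opcode corresponds to building a new overlay, which is the expensive, bit-stream-level step.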

One could imagine a cloud-based reconfigurable computing system where a user submits a program along with the architecture required to execute it. The architecture specification could take one of three forms. It could be just an identifier for a fixed, system-provided architecture. It could be a set of acceptable architectures, which can be mixed and matched depending on resource availability and on latency and energy requirements. Or it could include an actual detailed circuit intermediate representation, which is compiled in the cloud to a specific FPGA bit-stream. Furthermore, such approaches need the support of layout specification systems like Lava.
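The three forms of architecture specification can be written down as a small data model. The sketch below is hypothetical: the class names, the `resolve` function and the architecture labels such as `"vec-4"` are illustrative, and the policy of taking the first available option in preference order is an assumption. A real scheduler would also weigh latency and energy.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class FixedArch:
    """A system-provided architecture, named by an identifier."""
    arch_id: str


@dataclass
class ArchChoice:
    """Acceptable architectures, listed in the user's order of preference."""
    options: List[str]


@dataclass
class CircuitIR:
    """A detailed circuit IR that would be compiled in the cloud to a bit-stream."""
    netlist: str  # placeholder; a real IR would be a structured representation


ArchSpec = Union[FixedArch, ArchChoice, CircuitIR]


@dataclass
class Job:
    program: bytes
    arch: ArchSpec


def resolve(job: Job, available: List[str]) -> str:
    """Decide how the architecture for a submitted job would be provided."""
    if isinstance(job.arch, FixedArch):
        if job.arch.arch_id not in available:
            raise LookupError(f"{job.arch.arch_id} is not available")
        return job.arch.arch_id
    if isinstance(job.arch, ArchChoice):
        # Assumed policy: first option that is currently available.
        for name in job.arch.options:
            if name in available:
                return name
        raise LookupError("none of the requested architectures is available")
    # A custom circuit must go through the cloud-side compilation flow.
    return "compile-to-bitstream"


print(resolve(Job(b"", ArchChoice(["vec-8", "vec-4"])), ["vec-4", "stream-1"]))  # vec-4
```

A tagged union keeps the common case cheap: the identifier and choice-set forms resolve to pre-built architectures, and only the IR form triggers the slow bit-stream compilation.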

We also note that National Instruments recently introduced the LabVIEW FPGA Cloud Compile mode, which uses remote VMs to do layout; using software VMs to bootstrap specialized hardware is particularly appealing. Related to the idea of overlay architectures is the notion of building flexible memory hierarchies out of soft logic (CoRAM and LEAP). These approaches are a promising start for managing access to shared off-chip memory for a virtualized reconfigurable computing resource in the cloud.

Domain Specific Languages (DSLs)

Can we devise one language to program heterogeneous computing systems? Absolutely not. It is far better to provide a rich infrastructure for the execution and coordination of computations (and processes) and to allow the community to innovate solutions for specific situations. For example, users may want to express data-parallel computations in OpenCL. The resulting code could execute on a multicore processor or a GPU, be compiled to gates, or run on a soft vector processor on an FPGA. We already have examples of projects that compile OpenCL, or a close variant of it, to GPUs and FPGAs. Others might want to specify a MATLAB-based computation that streams data from a SQL Server database to process images and videos. Others might want to express SQL queries that are applied to information from databases in the cloud or to data streams from widely distributed sensors. Or perhaps someone wants to use Java with library-based concurrency extensions. Rather than mandating one language and model, we should allow the community to cultivate and incubate the necessary domain specific languages. The reconfigurable cloud-based operating system should then provide the "backplane" required to execute and compose them.

A concrete way to start defining such an infrastructure is to develop a coordination and communication architecture for processes, along with a concrete representation, e.g. using an XML Schema. This representation could then be used to compose and map computations (e.g. map this kernel to a computing resource that has this capability) and to specify data-sources (e.g. stream input from this SQL Server data-source, stream output to a DRAM-based resource). A program is now a collection of communicating processes, along with a set of recipes for combining the processes to achieve the desired result. Invoking a program involves specifying non-functional requirements like "minimize latency" or "minimize energy consumption".
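The idea that one program can be placed differently depending on the non-functional requirement can be sketched as follows. This is illustrative only. Python dictionaries stand in for the XML Schema representation, `Impl` and `place` are hypothetical names, and the latency and energy figures are invented. The greedy per-process choice also ignores communication costs between processes, which a real mapper would have to model.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Impl:
    """One way of running a process on some resource (figures are made up)."""
    resource: str       # illustrative labels such as "cpu", "gpu", "fpga"
    latency_ms: float   # estimated latency
    energy_j: float     # estimated energy per invocation


def place(processes: Dict[str, List[Impl]], objective: str) -> Dict[str, str]:
    """Pick one implementation per process according to the stated requirement."""
    key = {
        "minimize latency": lambda i: i.latency_ms,
        "minimize energy consumption": lambda i: i.energy_j,
    }[objective]
    # Greedy and per-process: ignores the cost of moving data between resources.
    return {name: min(impls, key=key).resource for name, impls in processes.items()}


pipeline = {
    "decode": [Impl("cpu", 12.0, 3.0), Impl("fpga", 4.0, 0.5)],
    "filter": [Impl("gpu", 2.0, 6.0), Impl("fpga", 5.0, 1.0)],
}
print(place(pipeline, "minimize latency"))             # {'decode': 'fpga', 'filter': 'gpu'}
print(place(pipeline, "minimize energy consumption"))  # {'decode': 'fpga', 'filter': 'fpga'}
```

The same pipeline description yields two different placements. Only the requirement attached to the invocation changes, which is the separation the paragraph above argues for.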

Research Directions and Hot Topics in Clouds

Cloud computing is quite new and evolving rapidly. We now list some of the major interest areas in that community, and some of the problems that large, shared reconfigurable FPGAs could help to solve.

Operating Systems

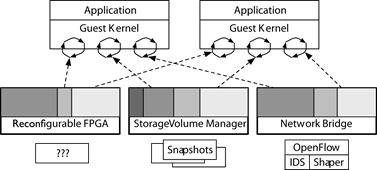

The traditional operating-system role of partitioning physical hardware is quite different when virtualised in the cloud. Hypervisors expose simple network and storage interfaces to virtual machines, and the details of physical device drivers are handled in a separate domain. Kernels that run on the cloud need only a few device drivers to work, and no longer have to support the full spectrum of physical devices. The accompanying figure illustrates the difference between managing physical devices and using a portion of that resource from a virtualised application.

Split-trust devices on virtualised platforms have a management domain that partitions physical resources, allocates portions to guests, and enforces access rights. The details of the management policy are specific to the type of resource, such as storage or networking.

The management APIs in the control domain differ across resource types. The handling of networking involves bridging and topology and integration with systems such as OpenFlow. Storage management is concerned with snapshots and de-duplication for the blocks used by VMs. However, the front-end device exposed to the guests is simple; often little more than a shared-memory structure with a request/response channel. This technique is so far mainly used for I/O channels but could be extended to actually run computation over the data, with the same high-throughput and low-latency that existing I/O systems enjoy. The guest could specify the computation required (possibly as a DSL), and the management tools would implement the details of interfacing with the physical board and managing sharing across VMs. This is simple from the programmer's perspective, and portable across different FPGA boards and tool-chains.
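The guest-facing interface described above is little more than a request/response channel over shared memory. The following software model assumes a single-producer/single-consumer ring, similar in spirit to the shared rings used by Xen split drivers but not Xen's actual API. `Ring` and `backend_poll` are hypothetical names, a Python list stands in for the shared page, and ordinary Python functions stand in for FPGA kernels. The guest names a computation, and the management side runs it and posts the result.

```python
from typing import Callable, Dict, List, Optional


class Ring:
    """Fixed-size single-producer/single-consumer ring, modelled in software.

    The producer only advances `prod` and the consumer only advances `cons`,
    so the two sides never write the same index.
    """

    def __init__(self, size: int) -> None:
        self.slots: List[Optional[object]] = [None] * size
        self.prod = 0
        self.cons = 0

    def push(self, item: object) -> bool:
        if self.prod - self.cons == len(self.slots):
            return False  # ring full; the caller must retry later
        self.slots[self.prod % len(self.slots)] = item
        self.prod += 1
        return True

    def pop(self) -> Optional[object]:
        if self.cons == self.prod:
            return None  # ring empty
        item = self.slots[self.cons % len(self.slots)]
        self.cons += 1
        return item


def backend_poll(req: Ring, rsp: Ring, kernels: Dict[str, Callable]) -> None:
    """Management-domain side: run each requested kernel and post its result."""
    while (msg := req.pop()) is not None:
        req_id, kernel, data = msg
        if not rsp.push((req_id, kernels[kernel](data))):
            raise RuntimeError("response ring full")


kernels = {"sum": sum, "max": max}  # software stand-ins for FPGA kernels
req, rsp = Ring(8), Ring(8)
req.push((1, "sum", [1, 2, 3]))
req.push((2, "max", [4, 9, 2]))
backend_poll(req, rsp, kernels)
results = [rsp.pop(), rsp.pop()]
print(results)  # [(1, 6), (2, 9)]
```

The guest sees only `push`, `pop` and kernel names. Which physical board runs `"sum"`, and how it is shared among VMs, stays inside the management domain, which is the portability argument made above.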

The availability of GPUs and programmable I/O boards has led to the development of new software architectures. Helios is a new operating system designed to simplify the task of writing, deploying and profiling code across heterogeneous platforms. It introduces "satellite kernels" that export a uniform set of OS abstractions while running independently on different resources.

Data-centre Programming

Processing large datasets requires partitioning them across many hosts, and so distributed data-flow frameworks have become popular in recent years. These frameworks expose a simple programming model and transparently handle the difficult aspects of distribution: fault tolerance, resource scheduling, synchronization and message passing. MapReduce and Dryad are two popular frameworks for certain classes of algorithms. More recently, the CIEL execution engine adds support for iterative algorithms (e.g. k-means or binomial options pricing).

These frameworks all build Directed Acyclic Graphs (DAGs), where the nodes represent data and the edges are computation over the data. The run-time schedules computation onto specific hosts and iteratively walks the DAG until a result is obtained. It can also prepare the host before a node is processed, such as by replicating some required input data from a remote host. This preparation can also include compilation. An FPGA DSL could therefore be transparently scheduled to hardware when it is available, or executed in software when it is not. The main challenge is to weigh the cost of reconfiguring an FPGA against simply executing the task in software. This is made easier because the run-time can inspect the size of the input data before scheduling. Mesos investigates how to partition physical resources across multiple frameworks operating on the same set of hosts, which is useful when considering fixed-size FPGA boards.
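The reconfigure-or-run-in-software decision can be sketched as a simple cost model driven by input size. The model is an assumption, not taken from CIEL, Dryad or Mesos. It treats the FPGA as paying a one-off reconfiguration time (skipped if the right bit-stream is already loaded) followed by a higher streaming throughput. `CostModel` and all the numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CostModel:
    reconfig_s: float        # time to load a bit-stream (hypothetical figure)
    fpga_bytes_per_s: float  # FPGA throughput once configured (hypothetical)
    cpu_bytes_per_s: float   # software throughput on the host (hypothetical)

    def fpga_time(self, n_bytes: int, already_loaded: bool) -> float:
        setup = 0.0 if already_loaded else self.reconfig_s
        return setup + n_bytes / self.fpga_bytes_per_s

    def cpu_time(self, n_bytes: int) -> float:
        return n_bytes / self.cpu_bytes_per_s

    def choose(self, n_bytes: int, already_loaded: bool = False) -> str:
        """Pick the target with the lower estimated completion time."""
        if self.fpga_time(n_bytes, already_loaded) < self.cpu_time(n_bytes):
            return "fpga"
        return "software"

    def break_even_bytes(self) -> float:
        """Input size above which a fresh reconfiguration pays for itself."""
        saving_per_byte = 1 / self.cpu_bytes_per_s - 1 / self.fpga_bytes_per_s
        if saving_per_byte <= 0:
            return float("inf")  # the FPGA is never faster per byte
        return self.reconfig_s / saving_per_byte


model = CostModel(reconfig_s=0.5, fpga_bytes_per_s=2e9, cpu_bytes_per_s=2e8)
print(model.choose(10_000_000))                       # software
print(model.choose(1_000_000_000))                    # fpga
print(model.choose(10_000_000, already_loaded=True))  # fpga
```

With these made-up figures the break-even point is 0.5 / (1/2e8 - 1/2e9), about 111 MB. Small inputs stay in software unless the bit-stream is already resident, which is why tracking what each board currently holds matters to the scheduler.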

The recent surge of new components designed specifically for data-centres also encourages research into new database models that depart from SQL and traditional ACID models. Mueller et al. programmed data processing operators on top of large FPGAs and concluded that the right computation model is essential (e.g. an asynchronous sorting network). Within these constraints, however, they achieved comparable performance with significantly improved power consumption and parallelisation. Both of these are essential to successful data-centre databases in the modern world.
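Sorting networks suit hardware because the comparator schedule is fixed and independent of the data: each stage is a rank of comparators that can all operate in parallel. The sketch below generates a bitonic sorting network, chosen here for illustration. It is not necessarily the network Mueller et al. used, and it is a synchronous software model rather than an asynchronous circuit. The function names are hypothetical.

```python
from typing import List, Tuple

Stage = List[Tuple[int, int]]


def bitonic_network(n: int) -> List[Stage]:
    """Return the comparator stages of a bitonic sorting network for n = 2**k inputs.

    Each stage is a list of (lo, hi) position pairs that can be compared in
    parallel; after a comparator fires, position lo holds the smaller value.
    """
    assert n > 0 and n & (n - 1) == 0, "n must be a power of two"
    stages: List[Stage] = []
    k = 2
    while k <= n:
        j = k // 2
        while j > 0:
            stage: Stage = []
            for i in range(n):
                partner = i ^ j
                if partner > i:
                    if i & k == 0:
                        stage.append((i, partner))  # ascending block
                    else:
                        stage.append((partner, i))  # descending block
            stages.append(stage)
            j //= 2
        k *= 2
    return stages


def apply_network(stages: List[Stage], values: List[int]) -> List[int]:
    """Simulate the network: every comparator is a compare-and-swap."""
    v = list(values)
    for stage in stages:
        for lo, hi in stage:
            if v[lo] > v[hi]:
                v[lo], v[hi] = v[hi], v[lo]
    return v


net = bitonic_network(8)
print(len(net), apply_network(net, [5, 1, 7, 3, 8, 2, 6, 4]))  # 6 [1, 2, 3, 4, 5, 6, 7, 8]
```

For 8 inputs the network has 6 stages of 4 comparators each. In hardware, the depth (number of stages) sets the latency and the total number of comparators sets the area.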

A close integration between high-level host languages and FPGAs will greatly help adoption by mainstream programmers. C and C++ are considered low-level languages in the cloud but high-level ones by FPGA programmers, which is indicative of the cultural difference between the two communities! There are a number of promising efforts that embed DSLs in C/C++ code to ease their integration. MORA is a DSL for streaming vector and matrix operations, aimed at multimedia applications. Designs can be compiled into normal executables for functional testing before being retargeted at a hardware array. MARC uses the LLVM compiler infrastructure to convert C/C++ code to FPGAs. Its performance is still lower than that of a manually optimised FPGA implementation. However, thanks to its higher-level approach, designs take significantly less effort to implement and are more portable. This quicker code/deploy/results cycle is essential for giving incremental feedback to the more casual programmer, who is renting cloud computing resources in order to save time in the first place.

Some languages now separate data-parallel processing explicitly so that they can utilise resources such as GPUs. Data Parallel Haskell integrates the full range of types available in modern languages, and allows sum types, recursive types, higher-order functions and separate compilation. Accelerator is a library to synthesise data-parallel programs written in C# directly to FPGAs. A more radical embedding is via multi-stage programming. Here programmers specify abstract algorithms in a high-level language, and these are put through a series of translation stages into the desired architecture. All of these approaches are highly relevant to reconfigurable FPGA computing in the cloud. They extend existing, familiar programming languages with the constraints required to compile subsets into hardware. The Kiwi project at Microsoft and the Liquid Metal project at IBM both provide a route from high-level concurrent programs to circuits.

Information Security

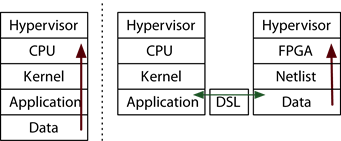

The cloud is often used to outsource processing over large datasets. The code implementing the batch processing is often written in C or Fortran, and a bug in handling input data can let attackers execute arbitrary code on the host machine (see the left side of the figure). The hypervisor layer contains the attacker inside the virtual machine. Even so, the attacker still has access to many of the local network resources and, worse, to other (possibly sensitive) datasets. Exploits are mitigated by using software privilege separation, but this places trust in the OS kernel layer instead.

Malicious data can be crafted to exploit memory errors and execute as code on a CPU (left). With an FPGA interface, it cannot execute arbitrary code, and the CPU never iterates over the data (right).

Data processing on the cloud using reconfigurable FPGAs offers a significant improvement in security by shifting that trust from software to hardware. Specialising algorithms into FPGAs entirely removes the attacker's ability to run arbitrary code, thus enforcing strong privilege separation. The application compiles its algorithms to an FPGA and never directly manipulates the data itself via the CPU. Malicious data never gets the opportunity to run on the host CPU. Instead, only a small channel exists between the OS and the FPGA to communicate results (see the right side of the figure). The conventional threat model for FPGAs is that a physical attacker can compromise the device's SRAM. When the FPGA is deployed in the cloud, the attacker cannot gain physical access, leaving few attack vectors.

Moving beyond low-level security, there is also a realisation that data contents need protection against untrusted cloud infrastructure. Encoding data processing tasks across FPGAs enforces a data-centric view of computation, distinct from coordinating computation (e.g. load balancing or fault tolerance, which cannot compromise the contents of data). Programming language researchers have mechanisms for encoding information flow constraints and, more recently, statistical privacy properties. These techniques are often too intrusive to fully integrate into general-purpose languages. They are, however, ideal for the domain-specific data-flow languages that provide the interface between general-purpose and reconfigurable FPGA computing.

Another intriguing development is homomorphic encryption, which permits computation over encrypted data without ever decrypting it. The utility of homomorphic encryption has been recognised for decades, but it has so far been extremely expensive to implement. Cloud computing revitalises the problem, as malicious providers might be secretly recording data or manipulating results. Recently, several lattice-based cryptography schemes have reduced the cost of homomorphic encryption. Lattice reduction can be significantly accelerated via FPGAs; Detrey et al. report a speedup of 2.12 for an FPGA versus a multi-core CPU of comparable cost. This points to a future where reconfigurable million-LUT FPGAs could be used to perform computation even when the cloud vendor is untrusted!

Reducing the cost of cryptography in the cloud could also have significant social impact. The Internet has seen large-scale deployment of anonymity networks such as Tor, and of Freenet for storing data. Because of the encryption requirements imposed by onion routing, access to such networks remains slow and high-latency. There have been proposals to shift the burden of anonymous routing into the cloud to fix this. However, the financial cost remains one of the key barriers to more widespread adoption of anonymity. This is symptomatic of the broader problem of improving networking performance in the cloud. Centralised control systems such as OpenFlow are rapidly gaining traction, along with high-performance implementations in hardware. Virtual networks are reconfigured much more often than physical setups (e.g. for load-balancing or fault tolerance). Services such as Tor further increase the gate requirements as computational complexity increases.

The challenge for integration into the cloud, then, is how to unify the demands of data-centric processing, language integration and network processing in a single infrastructure. Specific problems that have to be addressed, in addition to those mentioned above, include:

- The need for better OS integration, device models, and abstractions (as with split-trust in Xen described earlier).

- Without an ABI, software re-use and integration is very difficult. How can (for example) OpenSSL take advantage of an FPGA?

- Debugging and visualization support. General-purpose OSes provide a layered hypervisor/kernel/userspace/language-runtime model in which each successive layer is easier and higher-level to debug. These abstraction boundaries do not exist in current FPGAs. Staged programming, or functional testing on a general-purpose system, makes debugging easier.

- We need to develop a common set of concepts, principles and models for application execution on reconfigurable computing platforms to allow collaboration between universities and companies and to provide a solid framework to build new innovations and applications. This kind of eco-system has been sadly lacking for reconfigurable systems.

It is encouraging that cloud computing is driven by charging at a finer granularity for solving problems. Reconfigurable FPGAs have driven down the cost of many types of computation commonly found on the cloud, and thus a community-driven deployment of a cloud setup with rentable hardware would provide a focal point to "fill in the blanks" for reconfigurable FPGA computing in the cloud.

It is not clear that these goals can be achieved by a collection of parallel independent university and industry projects. What is needed is a coordinated research program involving members of the reconfigurable computing community working with each other and researchers in cloud computing to define a new vision of where we would like to go and then set standards etc. to try and achieve that goal. For this to happen we will need some kind of wide ranging joint research project proposal or standardization effort.

Call to Arms

Reconfigurable computing is at the cusp of rising up from being a niche activity accessible to only a small group of experts to becoming a mainstream computing fabric used in concert with other heterogeneous computing elements like GPUs. For this to become a reality, we need to combine some of the successes of FPGA-based research with new thinking about programming models to create a development environment for "civilian programmers". This will require collaboration between researchers in architecture, CAD tools, programming languages and types, run-time system development, web services, scripting and orchestration, re-targetable compilation, instrumentation and monitoring of heterogeneous systems, and failure management. Furthermore, reconfigurable computing in a shared cloud service context places new requirements on CAD tools and architectures that are at odds with their current requirements. Today, FPGA vendors produce architectures for use in an embedded context, to be programmed by digital design engineers.

Yesterday's programmers of reconfigurable systems were highly trained digital designers using Verilog. Today we are at the cusp of a revolution that will make tomorrow's users of reconfigurable technology regular software engineers. They will map their algorithms onto a heterogeneous mixture of computing resources to achieve currently unachievable levels of performance, to manage energy consumption, and to execute scenarios that promise an ever more interconnected world. Here we have set out a vision for a reconfigurable computing system in the cloud. We have identified important research challenges and promising research directions, and illustrated scenarios that reconfigurable computing in the cloud makes possible.