CRL verification Failure –SharePoint

Couple of weeks back, I had a chance to work with one of the customer who reported multiple issues in his farm. These issues were followed by a farm wide outage and he ended up contacting Microsoft. I got involved with this customer as part of the escalation process and following were his major concerns after the initial discussion I had with him.

1. Site slowness after every IIS Reset. The sites takes minutes to load this is been the issues from the day they setup this production farm.

2. On couple of servers in the farm the configuration cache is not getting refreshed and the OWSTimer is not working correctly. After clearing the configuration cache, if restarts the timer-service none of the files are created back by the timer.

3. Because of the issues with the configuration cache, they were forced remove one of the server from the NLB (Network Load Balancer). Unfortunately one server left in the NLB could not handle the user load during the business hours and this ended up with a farm wide outage.

Our primary objective here was to bring back the server which was moved out of NLB in working condition. We started looking at the timer job issue on the broken server. As always our starting point to dig further in to this issue was ULS logs. We enabled verbose logging and started looking at the ULS logs. Unfortunately the ULS logs were not helpful as the tracing service was not able to log much about the OWSTIMER as it was in stuck state. Basically nothing other than the following were getting logged in the ULS.

OWSTIMER.EXE (0x08E0) 0x06A4 ULS Logging Unified Logging Service 8pbc Verbose WatsonRC is currently unavailable. Error Reporting will still work properly.

OWSTIMER.EXE (0x08E0) 0x06A4 ULS Logging Unified Logging Service 8wsv High ULS Init Completed (OWSTIMER.EXE, ONETNA~1.DLL)

OWSTIMER.EXE (0x08E0) 0x06A4 Windows SharePoint Services Timer 6gey Verbose Entered VtimerStore::HrInitTimer

OWSTIMER.EXE (0x08E0) 0x06A4 Windows SharePoint Services Timer 6gfa Verbose Entered VtimerStore::HrGetISPNativeConfigurationProvider

OWSTIMER.EXE (0x08E0) 0x0C6C ULS Logging Unified Logging Service 7a8f Verbose Baseboard Manufacturer: 'Intel Corporation'

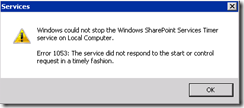

We tried to stop the OWSTIMER from service manager and we get the following error after a long wait period. So we had to always kill the respective process from the task manager.

We went back and looked at the configuration cache folder and found that couples of XML files are created in the last 30-45 minutes. This was interesting because it kind of proved us that files are getting created but either they are very slow or there could be a possibility that the some of the objects which in the configuration database (Objects Table) is not in good shape.

Since nothing from the ULS log was helping us to isolate between the object corruption and slowness, we decided to get a memory dump of the OWSTIMER.EXE as soon as we start it on the non-working server. I got couple of memory dumps and we ended up finding the following from the analysis:

ntdll!ZwWaitForSingleObject+0xa

kernel32!WaitForSingleObjectEx+0x9c

cryptnet!CryptRetrieveObjectByUrlWithTimeout+0x263

cryptnet!CryptRetrieveObjectByUrlW+0x20c

crypt32!ChainRetrieveObjectByUrlW+0x81

crypt32!CCertChainEngine::RetrieveCrossCertUrl+0x135

crypt32!CCertChainEngine::UpdateCrossCerts+0x196

crypt32!CCertChainEngine::Resync+0x1e8

crypt32!CCertChainEngine::CreateChainContextFromPathGraph+0x239

crypt32!CCertChainEngine::GetChainContext+0x8b

crypt32!CertGetCertificateChain+0x100

Most of the threads in OWSTIMER were checking the certificate revocation lists (CRLs) to verify that the signature is still valid. The problem here when loading signed assemblies, the .Net Framework checks the Internet based Certificate Revocation List to validate the signatures of the DLL that’s loaded. This kind of explained why they might be facing a slow page load after every IIS Reset. We confirmed this is the same root cause for the page load issue as well by getting a memory dump of w3wp process right after an IIS Reset.

So, what is going on here?

The problem is that when loading signed assemblies the .net Framework checks the Internet based Certificate Revocation List. As our servers have, like most secure environments, no outgoing connections to the public Internet, the connection to crl.microsoft.com times out after what appears to be 30 seconds. It probably does this a couple of times in succession, causing a delay in couple of minutes & we wait when spinning up SharePoint.

After the timeout the assembly is still loaded and the software works as expected, though very slow every time a new signed assembly is loaded for the first time, which happens a lot. The worst thing is that no entries are written to the event log and no exceptions are thrown so you are left completely in the dark about why your application is so slow.

The Workaround here is this configuration setting to disable signature verification in a .NET Framework 2.0 managed application. You can use this configuration setting in an application configuration file. To do this, add the following code to the <ApplicationName>.exe.config file for the .NET Framework 2.0 managed application,

For the timer service, we created a configuration file “owstimer.exe.config" with in bin folder and disabled the generatePublisherEvidence .

<configuration>

<runtime>

<generatePublisherEvidence enabled="false"/>

</runtime>

</configuration>

We restarted timer service and the configuration cache building completed in few seconds. The Sites started loading in few seconds after every IISReset. For more details about the Fix , please refer to https://support.microsoft.com/kb/936707/en-us .

For the site slowness after IISReset, we disabled CRL check on all the servers at ASP.net Level using same configuration in “aspnet.config" file.

Quick Ways to find out that CRL check failure are

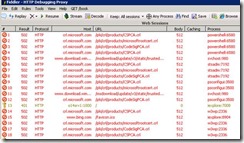

1. Collect a Fiddler trace on the Server and you would see the requests going to Crl.microsoft.com but no response is obtained.

2. Collect a Netmon Trace on the Server to see either the Name resolution failing for Crl.microsoft.com or no response for request to Crl.microsoft.com .

Here is a screenshot of a Fiddler Capture on SharePoint server which does not have access to internet & attempt was made to run SharePoint Management Shell, Stsadm Command & Launch Central Admin from Start Menu .

Here is a sample network captures taken on a SharePoint sever which is trying to access clr.microsoft.com site to check the CRL. To do so we try resolving the names crl.microsoft.com and the name resolution looks good.

312 16:35:59 13-08-2012 10.30.30.154 SERVER1 <00> DNS DNS:QueryId = 0xA724, QUERY (Standard query), Query for crl.microsoft.com of type Host Addr on class Internet

315 16:35:59 13-08-2012 SERVER1 <00> 10.30.30.154 DNS DNS:QueryId = 0xA724, QUERY (Standard query), Response - Success, 63.84.95.48, 63.84.95.73 ...

We then try to establish a TCP session on port 80 with the IP address 63.84.95.48 and we fail to establish the session.

317 16:35:59 13-08-2012 Owstimer.exe 10.30.30.154 63.84.95.48 TCP TCP:Flags=......S., SrcPort=53053, DstPort=HTTP(80), PayloadLen=0, Seq=339758504, Ack=0, Win=8192 ( ) = 8192

554 16:36:02 13-08-2012 TmProxy.exe 10.30.30.154 63.84.95.48 TCP TCP:[SynReTransmit #317]Flags=......S., SrcPort=53053, DstPort=HTTP(80), PayloadLen=0, Seq=339758504, Ack=0, Win=8192 ( ) = 8192

685 16:36:06 13-08-2012 TmProxy.exe 63.84.95.48 10.30.30.154 TCP TCP:Flags=...A.R.., SrcPort=HTTP(80), DstPort=53053, PayloadLen=0, Seq=0, Ack=339758505, Win=8192