Send log data to Azure Monitor by using the HTTP Data Collector API (deprecated)

This article shows you how to use the HTTP Data Collector API to send log data to Azure Monitor from a REST API client. It describes how to format data that's collected by your script or application, include it in a request, and have that request authorized by Azure Monitor. We provide examples for Azure PowerShell, C#, and Python.

Note

The Azure Monitor HTTP Data Collector API has been deprecated and will no longer be functional as of September 14, 2026. It's been replaced by the Logs ingestion API.

Concepts

You can use the HTTP Data Collector API to send log data to a Log Analytics workspace in Azure Monitor from any client that can call a REST API. The client might be a runbook in Azure Automation that collects management data from Azure or another cloud, or it might be an alternative management system that uses Azure Monitor to consolidate and analyze log data.

All data in the Log Analytics workspace is stored as a record with a particular record type. You format your data to send to the HTTP Data Collector API as multiple records in JavaScript Object Notation (JSON). When you submit the data, an individual record is created in the repository for each record in the request payload.

Create a request

To use the HTTP Data Collector API, you create a POST request that includes the data to send in JSON. The next three tables list the attributes that are required for each request. We describe each attribute in more detail later in the article.

Request URI

| Attribute | Property |
|---|---|
| Method | POST |
| URI | https://<CustomerId>.ods.opinsights.azure.com/api/logs?api-version=2016-04-01 |
| Content type | application/json |

Request URI parameters

| Parameter | Description |
|---|---|
| CustomerId | The unique identifier for the Log Analytics workspace. |
| Resource | The API resource name: /api/logs. |
| API Version | The version of the API to use with this request. Currently, the version is 2016-04-01. |
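As a minimal sketch, the three URI parameters above can be combined as follows. The function name `build_request_uri` and the constants are illustrative names, not part of the API.

```python
from urllib.parse import urlencode, urlunsplit

API_VERSION = "2016-04-01"   # version listed in the table above
RESOURCE = "/api/logs"       # API resource name

def build_request_uri(customer_id: str, api_version: str = API_VERSION) -> str:
    """Assemble the Data Collector endpoint for one workspace (illustrative helper)."""
    host = f"{customer_id}.ods.opinsights.azure.com"
    query = urlencode({"api-version": api_version})
    return urlunsplit(("https", host, RESOURCE, query, ""))
```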

Request headers

| Header | Description |
|---|---|
| Authorization | The authorization signature. Later in the article, you can read about how to create an HMAC-SHA256 header. |
| Log-Type | Specify the record type of the data that's being submitted. It can contain only letters, numbers, and the underscore (_) character, and it can't exceed 100 characters. |
| x-ms-date | The date that the request was processed, in RFC 1123 format. |
| x-ms-AzureResourceId | The resource ID of the Azure resource that the data should be associated with. It populates the _ResourceId property and allows the data to be included in resource-context queries. If this field isn't specified, the data won't be included in resource-context queries. |
| time-generated-field | The name of a field in the data that contains the timestamp of the data item. If you specify a field, its contents are used for TimeGenerated. If you don't specify this field, TimeGenerated defaults to the time that the message is ingested. The contents of the field should follow the ISO 8601 format YYYY-MM-DDThh:mm:ssZ. The TimeGenerated value can't be more than 2 days older than the received time or more than a day in the future. In such a case, the time that the message is ingested is used. |
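The two date-related headers can be illustrated with a short sketch. `rfc1123_date` produces a value in the RFC 1123 shape used by x-ms-date, and `effective_time_generated` models the fallback rule described for time-generated-field. Both function names are hypothetical, and treating the window boundaries as inclusive is an assumption; the service's exact boundary handling isn't stated here.

```python
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime

def rfc1123_date(moment: datetime) -> str:
    """Format a UTC datetime for the x-ms-date header, e.g. 'Mon, 04 Apr 2016 08:00:00 GMT'."""
    return format_datetime(moment.astimezone(timezone.utc), usegmt=True)

def effective_time_generated(field_value: str, received: datetime) -> datetime:
    """Model the documented rule: use the record's timestamp unless it is more than
    2 days before, or more than 1 day after, the time the message is received."""
    stamp = datetime.strptime(field_value, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
    earliest = received - timedelta(days=2)
    latest = received + timedelta(days=1)
    # Assumption: boundaries are inclusive.
    if earliest <= stamp <= latest:
        return stamp
    return received  # fall back to ingestion time
```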

Authorization

Any request to the Azure Monitor HTTP Data Collector API must include an authorization header. To authenticate a request, sign the request with either the primary or the secondary key for the workspace that's making the request. Then, pass that signature as part of the request.

Here's the format for the authorization header:

Authorization: SharedKey <WorkspaceID>:<Signature>

WorkspaceID is the unique identifier for the Log Analytics workspace. Signature is a Hash-based Message Authentication Code (HMAC) that's constructed from the request and then computed by using the SHA256 algorithm. Then, you encode it by using Base64 encoding.

Use this format to encode the SharedKey signature string:

StringToSign = VERB + "\n" +
               Content-Length + "\n" +
               Content-Type + "\n" +
               "x-ms-date:" + x-ms-date + "\n" +
               "/api/logs";

Here's an example of a signature string:

POST\n1024\napplication/json\nx-ms-date:Mon, 04 Apr 2016 08:00:00 GMT\n/api/logs

When you have the signature string, encode it by using the HMAC-SHA256 algorithm on the UTF-8-encoded string, and then encode the result as Base64. Use this format:

Signature=Base64(HMAC-SHA256(UTF8(StringToSign)))

The samples in the next sections have sample code to help you create an authorization header.
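The signing steps above can be sketched in Python with the standard library. The function name `build_signature` and its parameter names are illustrative. Decoding the shared key from Base64 before use follows the PowerShell sample in this article.

```python
import base64
import hashlib
import hmac

def build_signature(workspace_id, shared_key, date, content_length,
                    method="POST", content_type="application/json",
                    resource="/api/logs"):
    """Return the Authorization header value: 'SharedKey <WorkspaceID>:<Signature>'."""
    # Same field order as the StringToSign format shown above.
    string_to_sign = (
        f"{method}\n{content_length}\n{content_type}\n"
        f"x-ms-date:{date}\n{resource}"
    )
    key_bytes = base64.b64decode(shared_key)  # workspace keys are Base64 text
    digest = hmac.new(key_bytes, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    return f"SharedKey {workspace_id}:{signature}"
```

The `date` argument must be the same string that is sent in the x-ms-date header, and `content_length` must be the byte length of the request body.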

Request body

The body of the message must be in JSON. It must include one or more records with the property name and value pairs in the following format. The property name can contain only letters, numbers, and the underscore (_) character.

[
    {
        "property1": "value1",
        "property2": "value2",
        "property3": "value3",
        "property4": "value4"
    }
]

You can batch multiple records together in a single request by using the following format. All the records must be the same record type.

[
    {
        "property1": "value1",
        "property2": "value2",
        "property3": "value3",
        "property4": "value4"
    },
    {
        "property1": "value1",
        "property2": "value2",
        "property3": "value3",
        "property4": "value4"
    }
]
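A request body in this shape could be produced as in the following sketch. `build_body` is a hypothetical helper. It checks each property name against the rule stated above (interpreting "letters" as ASCII letters, which is an assumption) and returns the UTF-8 bytes to send.

```python
import json
import re

# Assumption: "letters" means ASCII letters.
_PROPERTY_NAME = re.compile(r"^[A-Za-z0-9_]+$")

def build_body(records):
    """Serialize a batch of same-type records as a JSON array of objects."""
    for record in records:
        for name in record:
            if not _PROPERTY_NAME.match(name):
                raise ValueError(f"invalid property name: {name!r}")
    return json.dumps(records).encode("utf-8")
```

The byte length of the returned value is what goes into the Content-Length position of the signature string.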

Record type and properties

You define a custom record type when you submit data through the Azure Monitor HTTP Data Collector API. Currently, you can't write data to existing record types that were created by other data types and solutions. Azure Monitor reads the incoming data and then creates properties that match the data types of the values that you enter.

Each request to the Data Collector API must include a Log-Type header with the name for the record type. The suffix _CL is automatically appended to the name you enter to distinguish it from other log types as a custom log. For example, if you enter the name MyNewRecordType, Azure Monitor creates a record with the type MyNewRecordType_CL. This helps ensure that there are no conflicts between user-created type names and those shipped in current or future Microsoft solutions.
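As a small illustration of the naming rule, the sketch below validates a Log-Type value against the header constraints (letters, numbers, and underscores, at most 100 characters) and derives the resulting record type. The helper name `custom_record_type` is hypothetical, and restricting "letters" to ASCII is an assumption.

```python
import re

def custom_record_type(log_type: str) -> str:
    """Return the record type that a given Log-Type header value would produce."""
    if not re.fullmatch(r"[A-Za-z0-9_]{1,100}", log_type):
        raise ValueError(f"invalid Log-Type: {log_type!r}")
    return log_type + "_CL"  # suffix appended automatically by the service
```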

To identify a property's data type, Azure Monitor adds a suffix to the property name. If a property contains a null value, the property isn't included in that record. This table lists the property data type and corresponding suffix:

| Property data type | Suffix |
|---|---|
| String | _s |
| Boolean | _b |
| Double | _d |
| Date/time | _t |
| GUID (stored as a string) | _g |

Note

String values that appear to be GUIDs are given the _g suffix and formatted as a GUID, even if the incoming value doesn't include dashes. For example, both "8145d822-13a7-44ad-859c-36f31a84f6dd" and "8145d82213a744ad859c36f31a84f6dd" are stored as "8145d822-13a7-44ad-859c-36f31a84f6dd". The only differences between this and another string are the _g in the name and the insertion of dashes if they aren't provided in the input.

The data type that Azure Monitor uses for each property depends on whether the record type for the new record already exists.

- If the record type doesn't exist, Azure Monitor creates a new one by using the JSON type inference to determine the data type for each property for the new record.

- If the record type does exist, Azure Monitor attempts to create a new record based on existing properties. If the data type for a property in the new record doesn’t match and can’t be converted to the existing type, or if the record includes a property that doesn’t exist, Azure Monitor creates a new property that has the relevant suffix.

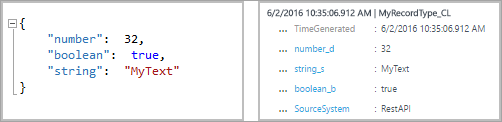

For example, the following submission entry would create a record with three properties, number_d, boolean_b, and string_s:

If you were to submit this next entry, with all values formatted as strings, the properties wouldn't change. You can convert the values to existing data types.

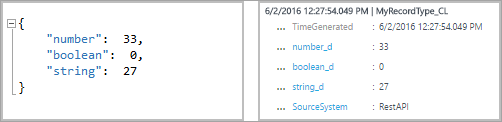

But, if you then make this next submission, Azure Monitor would create the new properties boolean_d and string_d. You can't convert these values.

If you then submit the following entry, before the record type is created, Azure Monitor would create a record with three properties, number_s, boolean_s, and string_s. In this entry, each of the initial values is formatted as a string:
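The suffix rules for a new record type can be approximated with a rough model. This sketch is not the service's actual inference logic. The function names, the timestamp pattern, and the sample payload values are all assumptions chosen to match the cases described above, and conversion against an existing record type isn't modeled.

```python
import re
import uuid

# Assumption: only ISO 8601 UTC timestamps such as 2019-09-12T20:00:00.625Z count as dates.
_ISO_UTC = re.compile(r"^\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}(\.\d+)?Z$")

def infer_suffix(value):
    """Guess the suffix a JSON value would receive in a brand-new record type."""
    if isinstance(value, bool):          # check bool first: bool is a subclass of int
        return "_b"
    if isinstance(value, (int, float)):
        return "_d"
    if isinstance(value, str):
        if _ISO_UTC.match(value):
            return "_t"
        try:
            uuid.UUID(value)             # accepts GUIDs with or without dashes
            return "_g"
        except ValueError:
            return "_s"
    raise TypeError(f"unsupported value: {value!r}")

def typed_names(record):
    """Map each property to its suffixed name; null properties are dropped."""
    return {name + infer_suffix(v): v for name, v in record.items() if v is not None}

# Hypothetical payloads for the first and last cases described above.
print(typed_names({"number": 5, "boolean": True, "string": "text"}))
print(typed_names({"number": "5", "boolean": "true", "string": "text"}))
```

Under this model, the first payload yields number_d, boolean_b, and string_s, and the all-strings payload yields number_s, boolean_s, and string_s.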

Reserved properties

The following properties are reserved and shouldn't be used in a custom record type. You'll receive an error if your payload includes any of these property names:

- tenant

- TimeGenerated

- RawData

Data limits

The data posted to the Azure Monitor Data Collector API is subject to certain constraints:

- Maximum of 30 MB per post to the Azure Monitor Data Collector API. This is a size limit for a single post. If the data for a single post exceeds 30 MB, split the data into smaller chunks and send them concurrently.

- Maximum of 32 KB for field values. If the field value is greater than 32 KB, the data will be truncated.

- Recommended maximum of 50 fields for a given type. This is a practical limit from a usability and search experience perspective.

- Tables in Log Analytics workspaces support only up to 500 columns (referred to as fields in this article).

- Maximum of 45 characters for column names.
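One way to respect the per-post limit is to split a large record list into batches before sending. This is a minimal sketch. The helper name `split_into_posts` is hypothetical, 30 MB is taken as 30 × 1024 × 1024 bytes (an assumption), and sizes are measured on the output of `json.dumps` with its default separators.

```python
import json

MAX_POST_BYTES = 30 * 1024 * 1024  # assumption: 1 MB = 1024 * 1024 bytes

def split_into_posts(records, max_bytes=MAX_POST_BYTES):
    """Group records into batches whose serialized JSON array stays within max_bytes."""
    batches, current, size = [], [], 2           # 2 bytes for the enclosing [ ]
    for record in records:
        encoded = len(json.dumps(record).encode("utf-8"))
        extra = encoded + (2 if current else 0)  # ", " separator between items
        if current and size + extra > max_bytes:
            batches.append(current)              # close the full batch
            current, size, extra = [], 2, encoded
        current.append(record)
        size += extra
    if current:
        batches.append(current)
    return batches
```

A single record that is larger than the limit still ends up in a batch of its own, so the caller would need to handle that case separately.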

Return codes

The HTTP status code 200 means that the request has been received for processing. This indicates that the operation finished successfully.

The complete set of status codes that the service might return is listed in the following table:

| Code | Status | Error code | Description |
|---|---|---|---|
| 200 | OK | | The request was successfully accepted. |
| 400 | Bad request | InactiveCustomer | The workspace has been closed. |
| 400 | Bad request | InvalidApiVersion | The API version that you specified wasn't recognized by the service. |
| 400 | Bad request | InvalidCustomerId | The specified workspace ID is invalid. |
| 400 | Bad request | InvalidDataFormat | An invalid JSON was submitted. The response body might contain more information about how to resolve the error. |
| 400 | Bad request | InvalidLogType | The specified log type contained special characters or numerics. |
| 400 | Bad request | MissingApiVersion | The API version wasn't specified. |
| 400 | Bad request | MissingContentType | The content type wasn't specified. |
| 400 | Bad request | MissingLogType | The required value log type wasn't specified. |
| 400 | Bad request | UnsupportedContentType | The content type wasn't set to application/json. |
| 403 | Forbidden | InvalidAuthorization | The service failed to authenticate the request. Verify that the workspace ID and connection key are valid. |
| 404 | Not Found | | Either the provided URL is incorrect or the request is too large. |
| 429 | Too Many Requests | | The service is experiencing a high volume of data from your account. Please retry the request later. |
| 500 | Internal Server Error | UnspecifiedError | The service encountered an internal error. Please retry the request. |
| 503 | Service Unavailable | ServiceUnavailable | The service currently is unavailable to receive requests. Please retry your request. |
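The table suggests retrying on 429, 500, and 503. A client might handle that as in the sketch below. The exponential backoff schedule, the attempt count, and all names here are assumptions rather than documented service guidance. `send` stands for any callable that posts the data and returns the HTTP status code.

```python
import time

RETRYABLE = {429, 500, 503}  # codes whose descriptions above say to retry

def should_retry(status_code):
    return status_code in RETRYABLE

def backoff_delays(attempts, base=1.0, cap=60.0):
    """Exponential delays in seconds: base, 2*base, 4*base, ... limited to cap."""
    return [min(cap, base * 2 ** i) for i in range(attempts)]

def send_with_retry(send, attempts=5, sleep=time.sleep):
    """Call send() until it returns a non-retryable status or attempts run out."""
    status = None
    for attempt, delay in enumerate(backoff_delays(attempts), start=1):
        status = send()
        if not should_retry(status) or attempt == attempts:
            return status
        sleep(delay)  # wait before the next attempt
    return status
```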

Query data

To query data submitted by the Azure Monitor HTTP Data Collector API, search for records whose Type equals the Log-Type value that you specified with _CL appended. For example, if you used MyCustomLog, query for all records of type MyCustomLog_CL.

Sample requests

In this section are samples that demonstrate how to submit data to the Azure Monitor HTTP Data Collector API by using various programming languages.

For each sample, set the variables for the authorization header by doing the following:

- In the Azure portal, locate your Log Analytics workspace.

- Select Agents.

- To the right of Workspace ID, select the Copy icon, and then paste the ID as the value of the Customer ID variable.

- To the right of Primary Key, select the Copy icon, and then paste the key as the value of the Shared Key variable.

Optionally, you can change the variables for the log type and JSON data.

# Replace with your Workspace ID
$CustomerId = "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx"

# Replace with your Primary Key
$SharedKey = "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"

# Specify the name of the record type that you'll be creating
$LogType = "MyRecordType"

# Optional name of a field that includes the timestamp for the data. If the time field is not specified, Azure Monitor assumes the time is the message ingestion time
$TimeStampField = ""

# Create two records with the same set of properties
$json = @"
[{  "StringValue": "MyString1",
    "NumberValue": 42,
    "BooleanValue": true,
    "DateValue": "2019-09-12T20:00:00.625Z",
    "GUIDValue": "9909ED01-A74C-4874-8ABF-D2678E3AE23D"
},
{   "StringValue": "MyString2",
    "NumberValue": 43,
    "BooleanValue": false,
    "DateValue": "2019-09-12T20:00:00.625Z",
    "GUIDValue": "8809ED01-A74C-4874-8ABF-D2678E3AE23D"
}]
"@

# Create the function to create the authorization signature
Function Build-Signature ($customerId, $sharedKey, $date, $contentLength, $method, $contentType, $resource)
{
    $xHeaders = "x-ms-date:" + $date
    $stringToHash = $method + "`n" + $contentLength + "`n" + $contentType + "`n" + $xHeaders + "`n" + $resource

    $bytesToHash = [Text.Encoding]::UTF8.GetBytes($stringToHash)
    $keyBytes = [Convert]::FromBase64String($sharedKey)

    $sha256 = New-Object System.Security.Cryptography.HMACSHA256
    $sha256.Key = $keyBytes
    $calculatedHash = $sha256.ComputeHash($bytesToHash)
    $encodedHash = [Convert]::ToBase64String($calculatedHash)
    $authorization = 'SharedKey {0}:{1}' -f $customerId,$encodedHash
    return $authorization
}

# Create the function to create and post the request
Function Post-LogAnalyticsData($customerId, $sharedKey, $body, $logType)
{
    $method = "POST"
    $contentType = "application/json"
    $resource = "/api/logs"
    $rfc1123date = [DateTime]::UtcNow.ToString("r")
    # $body is a byte array, so Length is the byte count that the signature requires
    $contentLength = $body.Length
    $signature = Build-Signature `
        -customerId $customerId `
        -sharedKey $sharedKey `
        -date $rfc1123date `
        -contentLength $contentLength `
        -method $method `
        -contentType $contentType `
        -resource $resource
    $uri = "https://" + $customerId + ".ods.opinsights.azure.com" + $resource + "?api-version=2016-04-01"

    $headers = @{
        "Authorization" = $signature;
        "Log-Type" = $logType;
        "x-ms-date" = $rfc1123date;
        "time-generated-field" = $TimeStampField;
    }

    $response = Invoke-WebRequest -Uri $uri -Method $method -ContentType $contentType -Headers $headers -Body $body -UseBasicParsing
    return $response.StatusCode
}

# Submit the data to the API endpoint
Post-LogAnalyticsData -customerId $customerId -sharedKey $sharedKey -body ([System.Text.Encoding]::UTF8.GetBytes($json)) -logType $logType
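For comparison, the request-assembly step of the PowerShell sample might look like this in Python, using only the standard library. This is a sketch, not an official sample. `build_post_request` and its parameters are illustrative names, and `authorization` is expected to be a precomputed header value in the SharedKey format described in the Authorization section, signed over the same `date` string and the byte length of `body`.

```python
from urllib.request import Request

def build_post_request(customer_id, authorization, date, log_type, body,
                       time_generated_field=""):
    """Build (but don't send) the POST request for a batch of records.

    body must be the UTF-8 encoded JSON payload as bytes.
    """
    uri = f"https://{customer_id}.ods.opinsights.azure.com/api/logs?api-version=2016-04-01"
    headers = {
        "Authorization": authorization,
        "Content-Type": "application/json",
        "Log-Type": log_type,
        "x-ms-date": date,
    }
    if time_generated_field:
        # Only sent when the caller names a timestamp field in the data.
        headers["time-generated-field"] = time_generated_field
    return Request(uri, data=body, headers=headers, method="POST")

# Sending is left to the caller, for example with urllib.request.urlopen(request),
# whose response object exposes the HTTP status code as response.status.
```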

Alternatives and considerations

Although the Data Collector API should cover most of your needs as you collect free-form data into Azure Monitor Logs, you might require an alternative approach to overcome some of the limitations of the API. Your options, including major considerations, are listed in the following table:

| Alternative | Description | Best suited for |
|---|---|---|
| Custom events: Native SDK-based ingestion in Application Insights | Application Insights, usually instrumented through an SDK within your application, gives you the ability to send custom data through Custom Events. | |
| Data Collector API in Azure Monitor Logs | The Data Collector API in Azure Monitor Logs is a completely open-ended way to ingest data. Any data that's formatted in a JSON object can be sent here. After it's sent, it's processed and made available in Monitor Logs to be correlated with other data in Monitor Logs or against other Application Insights data. It's fairly easy to upload the data as files to an Azure Blob Storage blob, where the files will be processed and then uploaded to Log Analytics. For a sample implementation, see Create a data pipeline with the Data Collector API. | |
| Azure Data Explorer | Azure Data Explorer, now generally available to the public, is the data platform that powers Application Insights Analytics and Azure Monitor Logs. By using the data platform in its raw form, you have complete flexibility over the cluster (role-based access control (RBAC), retention rate, schema, and so on), at the cost of management overhead. Azure Data Explorer provides many ingestion options, including CSV, TSV, and JSON files. | |

Next steps

Use the Log Search API to retrieve data from the Log Analytics workspace.

Learn more about how to create a data pipeline with the Data Collector API by using a Logic Apps workflow to Azure Monitor.
