Azure Percept Vision AI module

Important

Retirement of Azure Percept DK:

Update 22 February 2023: A firmware update for the Percept DK Vision and Audio accessory components (also known as Vision and Audio SOM) is now available. It enables the accessory components to continue functioning beyond the retirement date.

The Azure Percept public preview is evolving to support new edge device platforms and developer experiences. As part of this evolution, the Azure Percept DK and Audio Accessory, along with the associated Azure services that support the Percept DK, will be retired on March 30, 2023.

Effective March 30, 2023, the Azure Percept DK and Audio Accessory will no longer be supported by any Azure services, including Azure Percept Studio, OS updates, container updates, web stream viewing, and Custom Vision integration. Microsoft will no longer provide customer success support or any associated supporting services. For more information, see the Retirement Notice Blog Post.

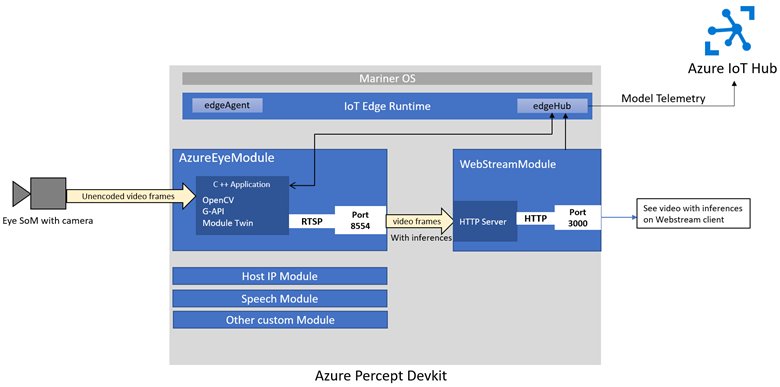

The azureeyemodule is the edge module responsible for running the AI vision workload on the Azure Percept DK. It is part of the Azure IoT suite of edge modules and is deployed to the Azure Percept DK during the setup experience. This article provides an overview of the module and its architecture.

Architecture

The Azure Percept Workload on the Azure Percept DK is a C++ application that runs inside the azureeyemodule Docker container. It uses OpenCV G-API for image processing and model execution. The azureeyemodule runs on the Mariner operating system as part of the Azure IoT suite of modules on the Azure Percept DK.

The Azure Percept Workload takes images as input and produces images and messages as output. The output images may be marked up with drawings such as bounding boxes, segmentation masks, joints, and labels. The output messages are a JSON stream of inference results that downstream tasks can ingest and use. The results are served as an RTSP stream on port 8554 of the device. They are also sent to another module running on the device, which wraps the RTSP stream in an HTTP server on port 3000. In either case, the streams are viewable only on the local network.

Caution

There is no encryption or authentication on the RTSP feeds. Anyone on the local network can view exactly what the Azure Percept Vision is seeing by entering the correct address in a web browser or RTSP media player.

The Azure Percept Workload enables several features that end users can take advantage of:

- A no-code solution for common computer vision use cases, such as object classification and common object detection.

- An advanced solution, where a developer can bring their own (potentially cascaded) trained model to the device and run it, possibly passing results to another IoT module of their own creation running on the device.

- A retraining loop that periodically grabs images from the device, retrains the model in the cloud, and pushes the newly trained model back down to the device, taking advantage of the device's ability to update and swap models on the fly.

AI workload details

The Workload application is open-sourced in the Azure Percept Advanced Development GitHub repository and consists of many small C++ modules. Some of the more important ones are:

- main.cpp: Sets up everything and then runs the main loop.

- iot: This folder contains modules that handle messages to and from the Azure IoT Edge Hub, as well as the twin update method.

- model: This folder contains modules for a class hierarchy of computer vision models.

- kernels: This folder contains modules for G-API kernels, ops, and C++ wrapper functions.

Developers can build custom modules or customize the current azureeyemodule using this workload application.

Next steps

- Now that you know more about the azureeyemodule and the Azure Percept Workload, try using your own model or pipeline by following one of these tutorials.

- Or, try transfer learning using one of our ready-made machine learning notebooks.