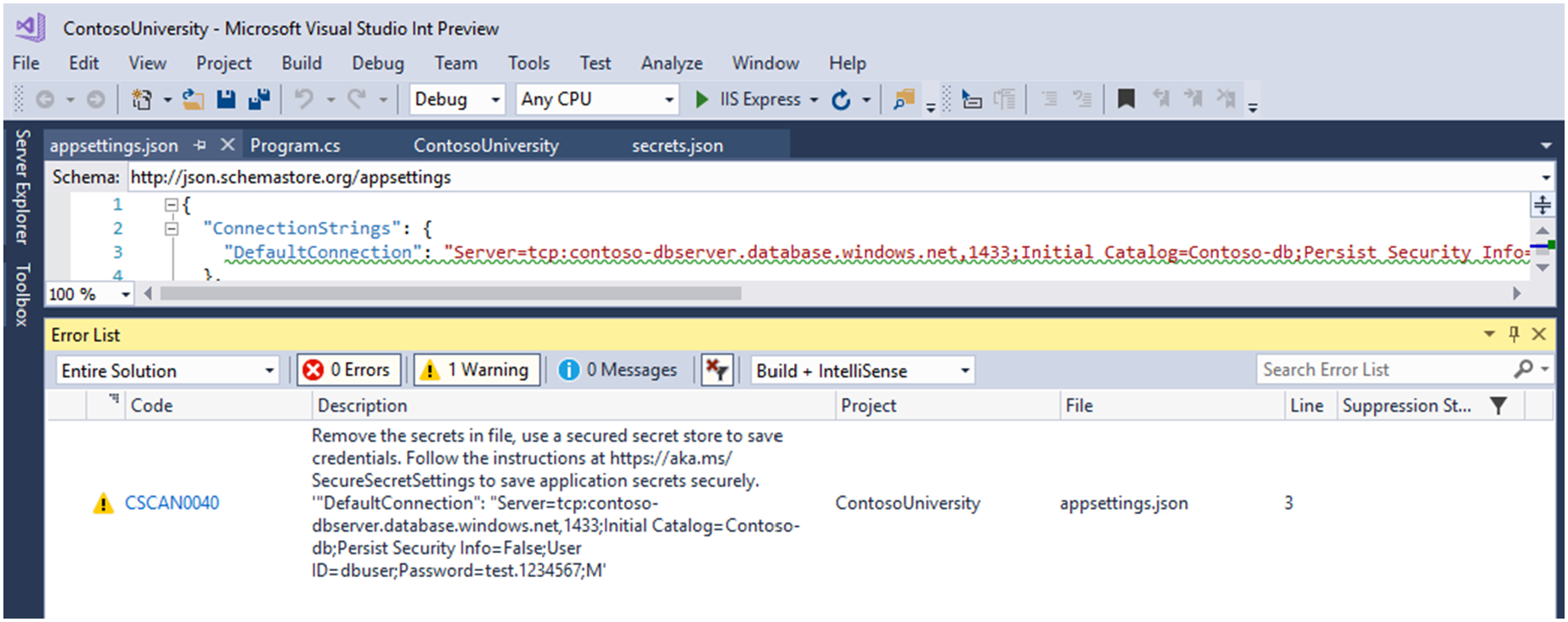

The Continuous Delivery Tools for Visual Studio extension makes it simple to automate and stay up to date on your DevOps pipeline for ASP.NET and other projects targeting Azure. The tools also help you improve your code quality and security.

This version of the extension is only available for Visual Studio 2017 RC.3 and above. A previous version of the extension was automatically installed as part of the .NET Core preview workload in RC.2. The Continuous Delivery Tools for Visual Studio is a Technology Preview DevLabs extension.

Automatically detect secret settings in project source code

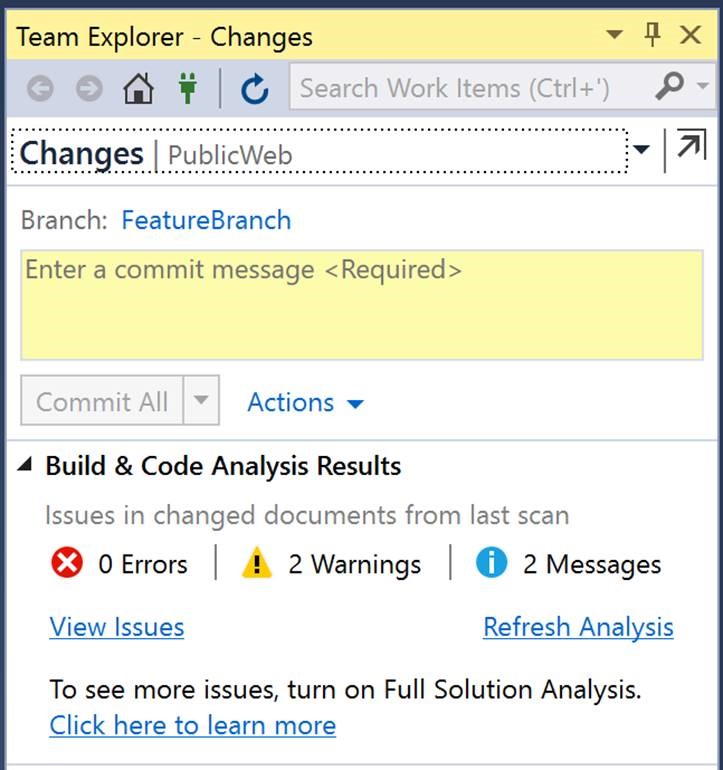

NOTE: The analyzer only works on project files that are open in Visual Studio. If you close a file, its detected results disappear.

Heads-up information on Build and Code Analysis Results on Commit

Early remediation of issues in your code is a key way to drive quality and improve your team’s productivity. With the new Build and Code Analysis Results panel, you get a heads-up reminder at commit time of issues detected by any code analysis tool that puts results in the Error List. This means you can take care of those issues before they propagate into your team’s CI/CD process, and commit with confidence.

The panel shows results both for live edit-time analysis (e.g. C#/VB Analyzers) and, via the Refresh Analysis button, for batch-style static analysis (e.g. C++ Static Analysis tools). It is supported on Visual Studio 2017 Enterprise. At present it supports code being committed to a Git repo.

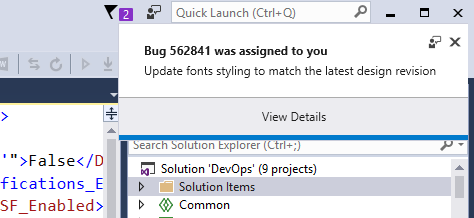

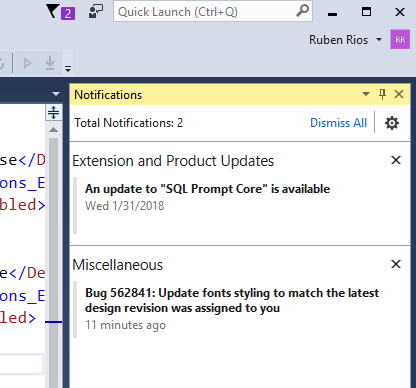

In response to your feedback, the panel now shows issue counts only for the changed files in the set you are committing, so that you can focus on the issues related to your changes. View Issues also opens the Error List filtered to just the changed files. You can tell us what you think about this new feature by filling out a short survey.

Build notifications for any CI run on Visual Studio Team Services

Things move fast when you have an automated DevOps pipeline that ships code to your customers several times a day. Thanks to the new build notifications feature, you can now learn about build issues as soon as they occur without having to switch context away from your development environment.

Build notifications are scoped to build events requested on behalf of accounts on your keychain and associated with the solution that’s currently open. As such, you don’t have to worry about being flooded with notifications, or about receiving notifications that are not relevant to you.

Work item notifications for any work item that is assigned to you

You can also stay on top of important VSTS work item assignments, thanks to the new work item notifications feature. With this feature you'll learn about new work items as soon as they are assigned to you without having to switch context away from your development environment.

You will not be bothered with multiple notifications if several items are updated at the same time. Notifications are batched, and you are presented with a single notification. Open any of your VSTS-hosted solutions in the Visual Studio IDE, and you will be notified automatically via the new toast notification mechanism.
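The batching behavior described above can be pictured with a small sketch. This is not the extension's implementation; the `WorkItemEvent` type, the `batch_notifications` function, and the message wording are all hypothetical names chosen for illustration. It only shows the general idea of collapsing several simultaneous assignment events into one notification.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class WorkItemEvent:
    """Hypothetical event: a work item was assigned or updated."""
    work_item_id: int
    title: str
    assigned_to: str


def batch_notifications(events: Iterable[WorkItemEvent], user: str) -> Optional[str]:
    """Collapse all pending events for `user` into at most one message."""
    # Keep one entry per work item so repeated updates are counted once.
    items = {}
    for event in events:
        if event.assigned_to == user:
            items[event.work_item_id] = event.title

    if not items:
        return None  # nothing relevant to this user, so no notification
    if len(items) == 1:
        item_id, title = next(iter(items.items()))
        return f"Work item {item_id} assigned to you: {title}"
    return f"{len(items)} work items were assigned to you"
```

With this shape, three events arriving together for two distinct work items assigned to the same user produce one summary message rather than three separate toasts.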

Notifications tool window integration

The new toast notification appears in the top right corner of the IDE. If you do not act on it or dismiss it, it retracts to the notification flag, indicating that it can be found in the Notifications tool window.

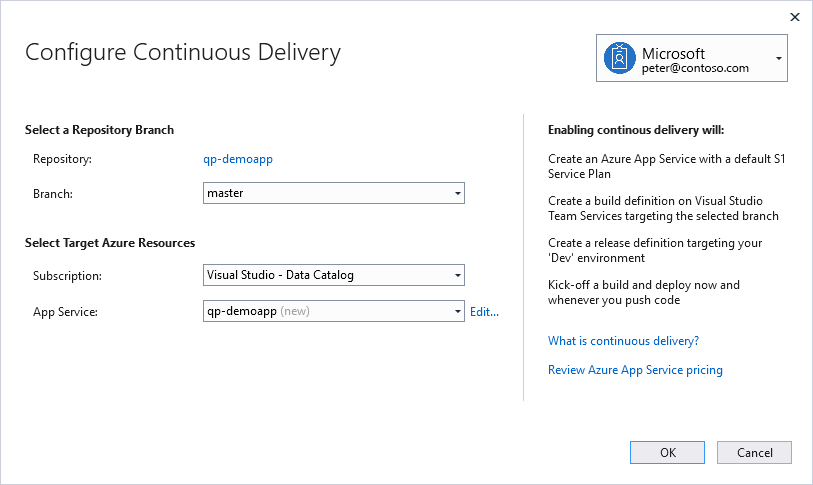

Automate the build and deployment of any ASP.NET project

From the ASP.NET project menu, click “Configure Continuous Delivery...” to set up an automated Build and Release definition that can deploy your ASP.NET project to an Azure App Service or Azure Container Service. Use it to quickly set up an automated ‘Dev’ or ‘Test’ environment. Then add additional steps like “manual checks” to configure staging and production deployments from Visual Studio Team Services.

Visual Studio Team Services will trigger a build and deployment for the source repository after every Git push operation. To learn more about how to set up and benefit from a CI/CD pipeline, check out the get started guide.

The extension allows you to configure Continuous Delivery to Azure App Services, Web Containers and Service Fabric Clusters for ASP.NET and ASP.NET Core projects under source control on GitHub or VSTS. It supports both TFVC and Git repos.

Release Notes:

Version: 0.4.211.1649

Version: 0.4.210.44124

Version: 0.4.174.2830

Version: 0.4.173.6850

Version: 0.4.132.17223

Version: 0.4.122.16101

Version: 0.4.66.9081

Version: 0.4.28.54201

Version: 0.4.9.4324

Version: 0.3.510.42958

Version: 0.3.493.58515

Version: 0.3.439.12417

Version: 0.3.438.6810

Version: 0.3.387.64656

Version: 0.3.360.58237

Version: 0.3.275.25451

Version: 0.3.274.54004

Version: 0.3.271.47084

Version: 0.3.269.62949

Version: 0.3.268.17844

Version: 0.3.216.5157

Version: 0.3.168.56876

Version: 0.3.112.7525

Version: 0.3.111.7525

Version: 0.3.105.11995

Version: 0.3.29.47597

Version: 0.3.18.52138

Version: 0.2.235.55646

Version: 0.2.189.9278

Version: 0.2.109.18451

Version: 0.2.108.1548

It’s all about feedback…

Over the coming days, weeks, and months we’ll update the extension with improvements based on your feedback, new experiences to try, and of course bug fixes. We’re interested in hearing how we can help improve the speed of delivering software to customers. If you would like to share your feedback, ping us at vsDevOps@microsoft.com.