Azure Data Explorer data ingestion overview

Data ingestion involves loading data into a table in your cluster. Azure Data Explorer ensures data validity, converts formats as needed, and performs manipulations like schema matching, organization, indexing, encoding, and compression. Once ingested, data is available for query.

Azure Data Explorer offers one-time ingestion or the establishment of a continuous ingestion pipeline, using either streaming or queued ingestion. To determine which is right for you, see One-time data ingestion and Continuous data ingestion.

Note

Data is persisted in storage according to the set retention policy.

One-time data ingestion

One-time ingestion is helpful for the transfer of historical data, filling in missing data, and the initial stages of prototyping and data analysis. This approach facilitates fast data integration without the need for a continuous pipeline commitment.

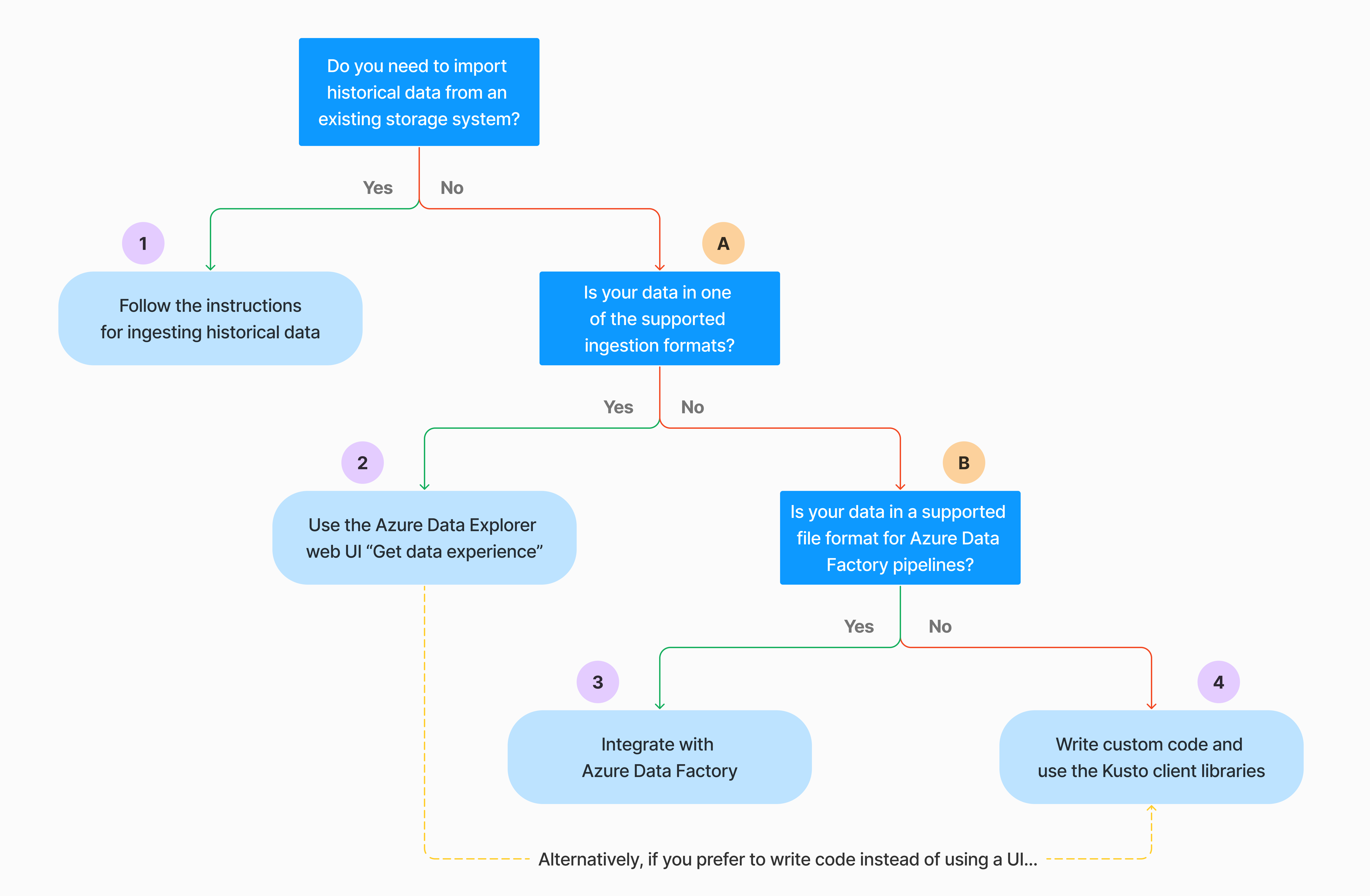

There are multiple ways to perform one-time data ingestion. Use the following decision tree to determine the most suitable option for your use case:

For more information, see the relevant documentation:

| Callout | Relevant documentation |
|---|---|
| | See the data formats supported by Azure Data Explorer for ingestion. |
| | See the file formats supported for Azure Data Factory pipelines. |
| | To import data from an existing storage system, see How to ingest historical data into Azure Data Explorer. |
| | In the Azure Data Explorer web UI, you can get data from a local file, Amazon S3, or Azure Storage. |
| | To integrate with Azure Data Factory, see Copy data to Azure Data Explorer by using Azure Data Factory. |
| | Kusto client libraries are available for C#, Python, Java, JavaScript, TypeScript, and Go. You can write code to manipulate your data and then use the Kusto Ingest library to ingest data into your Azure Data Explorer table. The data must be in one of the supported formats prior to ingestion. |

Continuous data ingestion

Continuous ingestion excels in situations demanding immediate insights from live data. For example, continuous ingestion is useful for monitoring systems, log and event data, and real-time analytics.

Continuous data ingestion involves setting up an ingestion pipeline with either streaming or queued ingestion:

- Streaming ingestion: This method provides near-real-time latency for small sets of data per table. Data is ingested in micro batches from a streaming source, initially placed in the row store, and then transferred to column store extents. For more information, see Configure streaming ingestion.

- Queued ingestion: This method is optimized for high ingestion throughput. Data is batched based on ingestion properties, and the small batches are then merged and optimized for fast query results. By default, a batch is sealed when it reaches 5 minutes, 1,000 items, or a total size of 1 GB, whichever comes first. The data size limit for a queued ingestion command is 6 GB. This method uses retry mechanisms to mitigate transient failures and follows at-least-once messaging semantics to ensure that no messages are lost in the process. For more information about queued ingestion, see Ingestion batching policy.
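The batching behavior described for queued ingestion can be illustrated with a small simulation. This is a minimal sketch, not the service's implementation: the names `Batch`, `seal_reason`, and `simulate` are hypothetical, and the sketch only checks the limits when an item arrives, whereas a real service would also seal a batch when its timer expires. The three limits are the defaults quoted above.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Default limits quoted in the text: 5 minutes, 1000 items, 1 GB.
MAX_BATCH_SECONDS = 5 * 60
MAX_BATCH_ITEMS = 1000
MAX_BATCH_BYTES = 1024 ** 3


@dataclass
class Batch:
    """Hypothetical in-memory batch; not a Kusto type."""
    opened_at: float                      # arrival time of the first item, in seconds
    sizes: List[int] = field(default_factory=list)


def seal_reason(batch: Batch, now: float) -> Optional[str]:
    """Return which limit the batch has reached, or None if it stays open."""
    if now - batch.opened_at >= MAX_BATCH_SECONDS:
        return "time"
    if len(batch.sizes) >= MAX_BATCH_ITEMS:
        return "count"
    if sum(batch.sizes) >= MAX_BATCH_BYTES:
        return "size"
    return None


def simulate(arrivals: List[Tuple[float, int]]) -> List[Tuple[str, int]]:
    """Replay (time_seconds, size_bytes) arrivals sorted by time.

    Returns (reason, item_count) for every sealed batch. A batch that is
    still open after the last arrival is not reported.
    """
    sealed: List[Tuple[str, int]] = []
    open_batch: Optional[Batch] = None
    for now, size in arrivals:
        # Seal a batch whose time window ended before this item arrived.
        if open_batch is not None and seal_reason(open_batch, now) == "time":
            sealed.append(("time", len(open_batch.sizes)))
            open_batch = None
        if open_batch is None:
            open_batch = Batch(opened_at=now)
        open_batch.sizes.append(size)
        # After adding the item, the count or size limit may have been reached.
        reason = seal_reason(open_batch, now)
        if reason is not None:
            sealed.append((reason, len(open_batch.sizes)))
            open_batch = None
    return sealed
```

For example, 1,000 small items arriving at the same moment seal one batch on the item count, while three items followed by a long pause seal a batch on time once the next item arrives.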

Note

For most scenarios, we recommend using queued ingestion as it is the more performant option.

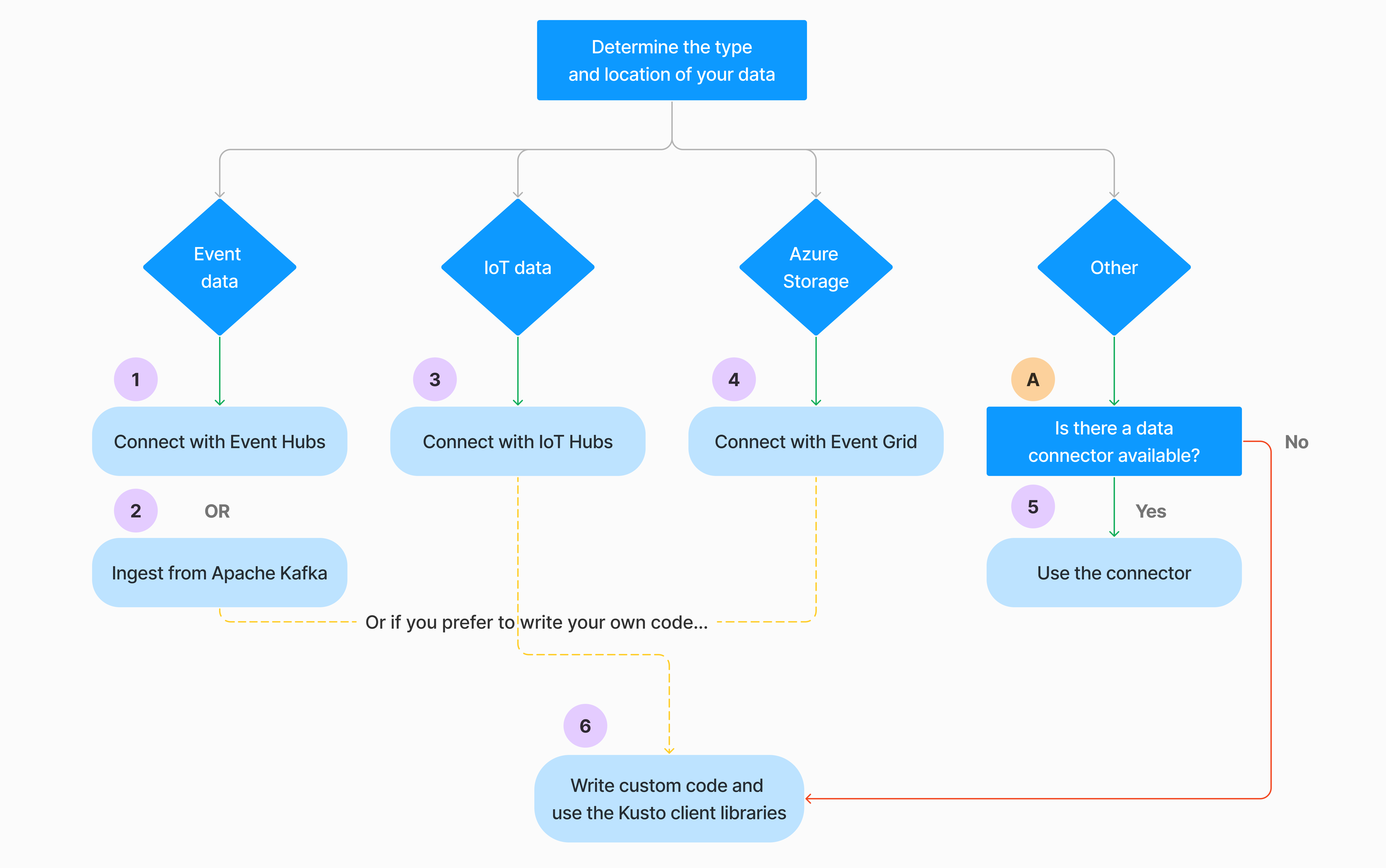

There are multiple ways to configure continuous data ingestion. Use the following decision tree to determine the most suitable option for your use case:

For more information, see the relevant documentation:

| Callout | Relevant documentation |
|---|---|
| | For a list of connectors, see Connectors overview. |
| | Create an Event Hubs data connection. Integration with Event Hubs provides services such as throttling, retries, monitoring, and alerts. |
| | Ingest data from Apache Kafka, a distributed streaming platform for building real-time streaming data pipelines. |
| | Create an IoT Hub data connection. Integration with IoT Hub provides services such as throttling, retries, monitoring, and alerts. |
| | Create an Event Grid data connection. Integration with Event Grid provides services such as throttling, retries, monitoring, and alerts. |
| | See the guidance for the relevant connector, such as Apache Spark, Apache Kafka, Azure Cosmos DB, Fluent Bit, Logstash, OpenTelemetry, Power Automate, Splunk, and more. For more information, see Connectors overview. |
| | Kusto client libraries are available for C#, Python, Java, JavaScript, TypeScript, and Go. You can write code to manipulate your data and then use the Kusto Ingest library to ingest data into your Azure Data Explorer table. The data must be in one of the supported formats prior to ingestion. |

Note

Streaming ingestion isn't supported for all ingestion methods. For support details, check the documentation for the specific ingestion method.

Direct ingestion with management commands

Azure Data Explorer offers the following ingestion management commands, which ingest data directly to your cluster instead of using the data management service. They should be used only for exploration and prototyping and not in production or high-volume scenarios.

- Inline ingestion: The .ingest inline command includes the data to ingest as part of the command text itself. This method is intended for improvised testing purposes.

- Ingest from query: The .set, .append, .set-or-append, and .set-or-replace commands indirectly specify the data to ingest as the results of a query or a command.

- Ingest from storage: The .ingest into command gets the data to ingest from external storage, such as Azure Blob Storage, that is accessible by your cluster and pointed to by the command.
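To make the three command families concrete, the following minimal sketch builds the command text for each one as a plain string. The helper function names, the table names, and the blob URL in the usage note are hypothetical and only illustrate the general shape of the commands. Running a command against a cluster (for example, through a Kusto client library) is out of scope here.

```python
from typing import List

# Verbs named in the list above for ingesting the results of a query.
QUERY_INGEST_VERBS = {".set", ".append", ".set-or-append", ".set-or-replace"}


def ingest_inline_command(table: str, csv_rows: List[str]) -> str:
    """Inline ingestion: the data travels inside the command text itself."""
    return f".ingest inline into table {table} <|\n" + "\n".join(csv_rows)


def ingest_from_query_command(table: str, query: str,
                              verb: str = ".set-or-append") -> str:
    """Ingest from query: the data is the result of a query or command."""
    if verb not in QUERY_INGEST_VERBS:
        raise ValueError(f"unsupported verb: {verb}")
    return f"{verb} {table} <|\n{query}"


def ingest_from_storage_command(table: str, blob_uri: str,
                                data_format: str = "csv") -> str:
    """Ingest from storage: the command points at data in external storage."""
    return f".ingest into table {table} ('{blob_uri}') with (format='{data_format}')"
```

As a usage example, `ingest_inline_command("Purchases", ["Shoes,1000", "Socks,20"])` yields a two-row inline command, and `ingest_from_storage_command("Purchases", "https://example.blob.core.windows.net/container/data.csv")` yields a storage-based command for a placeholder URL. In keeping with the guidance above, such commands suit exploration and prototyping rather than production pipelines.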

Compare ingestion methods

The following table compares the main ingestion methods:

| Ingestion name | Data type | Maximum file size | Streaming, queued, direct | Most common scenarios | Considerations |
|---|---|---|---|---|---|
| Apache Spark connector | Every format supported by the Spark environment | Unlimited | Queued | Existing pipeline, preprocessing on Spark before ingestion, fast way to create a safe (Spark) streaming pipeline from the various sources the Spark environment supports. | Consider the cost of the Spark cluster. For batch write, compare with the Azure Data Explorer data connection for Event Grid. For Spark streaming, compare with the data connection for Event Hubs. |
| Azure Data Factory (ADF) | Supported data formats | Unlimited. Inherits ADF restrictions. | Queued or per ADF trigger | Supports formats that are otherwise unsupported, such as Excel and XML, and can copy large files from over 90 sources, from on-premises to cloud. | This method takes relatively more time until data is ingested. ADF uploads all data to memory and then begins ingestion. |
| Event Grid | Supported data formats | 1 GB uncompressed | Queued | Continuous ingestion from Azure storage, external data in Azure storage | Ingestion can be triggered by blob renaming or blob creation actions. |
| Event Hubs | Supported data formats | N/A | Queued, streaming | Messages, events | |
| Get data experience | *SV, JSON | 1 GB uncompressed | Queued or direct ingestion | One-off, create table schema, definition of continuous ingestion with Event Grid, bulk ingestion with container (up to 5,000 blobs; no limit when using historical ingestion) | |
| IoT Hub | Supported data formats | N/A | Queued, streaming | IoT messages, IoT events, IoT properties | |
| Kafka connector | Avro, ApacheAvro, JSON, CSV, Parquet, and ORC | Unlimited. Inherits Java restrictions. | Queued, streaming | Existing pipeline, high-volume consumption from the source. | Preference can be determined by the existing use of a multiple-producer or multiple-consumer service, or by the desired level of service management. |
| Kusto client libraries | Supported data formats | 1 GB uncompressed | Queued, streaming, direct | Write your own code according to organizational needs | Programmatic ingestion is optimized for reducing ingestion costs (COGS) by minimizing storage transactions during and following the ingestion process. |
| LightIngest | Supported data formats | 1 GB uncompressed | Queued or direct ingestion | Data migration, historical data with adjusted ingestion timestamps, bulk ingestion | Case-sensitive and space-sensitive |
| Logic Apps | Supported data formats | 1 GB uncompressed | Queued | Used to automate pipelines | |
| Logstash | JSON | Unlimited. Inherits Java restrictions. | Queued | Existing pipeline, use the mature, open-source nature of Logstash for high-volume consumption from the inputs. | Preference can be determined by the existing use of a multiple-producer or multiple-consumer service, or by the desired level of service management. |
| Power Automate | Supported data formats | 1 GB uncompressed | Queued | Ingestion commands as part of a flow. Used to automate pipelines. | |

For information on other connectors, see Connectors overview.

Permissions

The following list describes the permissions required for various ingestion scenarios:

- Creating a new table requires at least Database User permissions.

- Ingesting data into an existing table, without changing its schema, requires at least Database Ingestor permissions.

- Changing the schema of an existing table requires at least Table Admin or Database Admin permissions.

For more information, see Kusto role-based access control.
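As a rough illustration of the list above, the following sketch maps each scenario to its minimum role and builds a command that grants the Database Ingestor role to an application. The scenario keys and function names are hypothetical. The command shape (`.add database ... ingestors` with an `aadapp=<application ID>;<tenant ID>` principal) is an assumption based on the Kusto security role management commands, so check it against the role-based access control documentation before use.

```python
# Minimum role per scenario, as listed above. The dictionary keys are
# hypothetical labels chosen for this sketch.
MINIMUM_ROLE = {
    "create_table": "Database User",
    "ingest_into_existing_table": "Database Ingestor",
    "change_table_schema": "Table Admin or Database Admin",
}


def minimum_role(scenario: str) -> str:
    """Look up the least-privileged role for an ingestion scenario."""
    try:
        return MINIMUM_ROLE[scenario]
    except KeyError:
        raise ValueError(f"unknown scenario: {scenario}") from None


def grant_ingestor_command(database: str, app_id: str, tenant_id: str) -> str:
    """Build a command granting Database Ingestor to an application principal.

    Assumes the '.add database <name> ingestors (<principal>)' command form.
    """
    principal = f"aadapp={app_id};{tenant_id}"
    return f".add database {database} ingestors ('{principal}')"
```

Granting only the ingestor role to an ingestion pipeline follows the least-privilege reading of the list: the pipeline can write to existing tables but can't change their schema.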

The ingestion process

The following steps outline the general ingestion process:

1. Set batching policy (optional): Data is batched based on the ingestion batching policy. For guidance, see Optimize for throughput.

2. Set retention policy (optional): If the database retention policy isn't suitable for your needs, override it at the table level. For more information, see Retention policy.

3. Create a table: If you're using the Get data experience, you can create a table as part of the ingestion flow. Otherwise, create a table prior to ingestion in the Azure Data Explorer web UI or with the .create table command.

4. Create a schema mapping: Schema mappings help bind source data fields to destination table columns. Mappings are supported for different kinds of formats, including row-oriented formats like CSV, JSON, and Avro, and column-oriented formats like Parquet. In most methods, mappings can also be precreated on the table.

5. Set update policy (optional): Certain data formats like Parquet, JSON, and Avro enable straightforward ingest-time transformations. For more intricate processing during ingestion, use the update policy. This policy automatically executes extractions and transformations on ingested data within the original table, then ingests the modified data into one or more destination tables.

6. Ingest data: Use your preferred ingestion tool, connector, or method to bring in the data.
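The preparation steps can be sketched as a short script that generates the corresponding management commands as text. This is a minimal sketch under stated assumptions: the helper names, the `Purchases` table, and the `PurchasesCsv` mapping name are hypothetical, the batching values are the defaults mentioned earlier in this article, and the update policy step is omitted. The table is created first here on the assumption that table-level policy commands need an existing table.

```python
import json
from typing import List, Tuple

Column = Tuple[str, str]  # (column name, Kusto type), for example ("Item", "string")


def create_table_command(table: str, columns: List[Column]) -> str:
    """Build a .create table command from (name, type) pairs."""
    schema = ", ".join(f"{name}:{kusto_type}" for name, kusto_type in columns)
    return f".create table {table} ({schema})"


def batching_policy_command(table: str, timespan: str, items: int, size_mb: int) -> str:
    """Build a table-level ingestion batching policy command."""
    policy = {
        "MaximumBatchingTimeSpan": timespan,
        "MaximumNumberOfItems": items,
        "MaximumRawDataSizeMB": size_mb,
    }
    return f".alter table {table} policy ingestionbatching @'{json.dumps(policy)}'"


def retention_policy_command(table: str, soft_delete: str) -> str:
    """Override the database retention policy at the table level."""
    return f".alter-merge table {table} policy retention softdelete = {soft_delete}"


def csv_mapping_command(table: str, mapping_name: str, columns: List[Column]) -> str:
    """Bind CSV ordinals 0..n-1 to the table columns, in order."""
    mapping = [
        {"Column": name, "Properties": {"Ordinal": str(ordinal)}}
        for ordinal, (name, _) in enumerate(columns)
    ]
    return (f".create table {table} ingestion csv mapping "
            f"'{mapping_name}' '{json.dumps(mapping)}'")


def setup_commands(table: str, columns: List[Column]) -> List[str]:
    """Return the preparation commands in a runnable order."""
    return [
        create_table_command(table, columns),
        batching_policy_command(table, "00:05:00", 1000, 1024),
        retention_policy_command(table, "30d"),
        csv_mapping_command(table, f"{table}Csv", columns),
    ]


if __name__ == "__main__":
    for command in setup_commands("Purchases", [("Item", "string"), ("Price", "long")]):
        print(command)
```

Once these commands have run, the final step is to ingest data with the tool, connector, or client library of your choice, referencing the mapping by name where the method supports it.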
