This article answers commonly asked questions about Azure Media Services.

Azure Media Services retirement

Where can I find more information about Azure Media Services retirement?

Developing with SDKs

Where can I find the Media Services API and SDKs?

Should I use the client SDKs or write directly to the REST API?

We don't recommend that you try to wrap the REST API for Media Services directly into your own library code. Doing so properly for production purposes would require you to implement the full Azure Resource Manager retry logic and understand how to manage long-running operations in Resource Manager APIs. The client SDKs for various languages - for example, .NET, Java, TypeScript, Python, and Ruby - handle this for you automatically, to reduce the chances of problems with retry logic or failed API calls.

Where can I find Media Services samples?

See the samples pages. There are samples for Accounts, Assets, Live Streaming, VOD Streaming, Content Protection, Encoding, Analytics, and using Players.

How does paging on large result sets (such as a list of assets) work in the API?

When you're using pagination, you should always use the next link to enumerate the collection and not depend on a particular page size. For details and examples, see Filtering, ordering, and paging entities.
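As a rough illustration of following the next link, here is a minimal sketch. It assumes that each page is a JSON object with a `value` array and an `@odata.nextLink` field, and that `fetch_page` is a caller-supplied function that performs the authenticated GET. The `fetch_page` helper and the URLs are hypothetical.

```python
from typing import Callable, Iterator, Optional


def iter_all_items(first_url: str,
                   fetch_page: Callable[[str], dict]) -> Iterator[dict]:
    """Yield every item in a paged collection by following the next link."""
    url: Optional[str] = first_url
    while url:
        page = fetch_page(url)
        # Yield whatever the service returned; never assume a page size.
        yield from page.get("value", [])
        # Assumed next-link field name; it is absent on the last page.
        url = page.get("@odata.nextLink")


# Stand-in for an authenticated HTTP GET (hypothetical URLs and data).
FAKE_PAGES = {
    "https://example.invalid/assets": {
        "value": [{"name": "asset1"}, {"name": "asset2"}],
        "@odata.nextLink": "https://example.invalid/assets?page=2",
    },
    "https://example.invalid/assets?page=2": {
        "value": [{"name": "asset3"}],
    },
}


def fake_fetch(url: str) -> dict:
    return FAKE_PAGES[url]


names = [item["name"] for item in iter_all_items("https://example.invalid/assets", fake_fetch)]
print(names)
```

The loop stops only when no next link is returned, so it keeps working if the service changes its page size.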

Accounts

How do I use a managed identity to encrypt data to Media Services?

For information about using the Azure CLI to pair Media Services with Azure Key Vault to encrypt your data, see the Use a Key Vault key to encrypt data into a Media Services account tutorial.

How do I use a managed identity to give Media Services access to a restricted storage account?

If you want Media Services to access a storage account when the storage account is configured to block requests from unknown IP addresses, follow the steps in Access storage with a Media Services managed identity.

What is the process for moving a Media Services account between subscriptions?

Security

What Azure roles can perform actions on Media Services resources?

Does Media Services support fine-grained role-based access control (RBAC)?

Media Services defines the following built-in roles:

- Media Services Account Administrator

- Media Services Media Operator

- Media Services Policy Administrator

- Media Services Streaming Endpoints Administrator

- Media Services Live Events Administrator

These roles are detailed in Azure role-based access control (Azure RBAC) for Media Services accounts.

These roles can be used to give fine-grained access to a Media Services account. To allow all access to a Media Services account, use the “Owner” or “Contributor” role. Media Services has an older API on the deprecation path that does not support fine-grained access control. Customers who require fine-grained access control should not select “Enable classic APIs” when creating a Media Services account in the portal. Customers who create accounts through the API should use the 2020-05-01 API version.

The following built-in Azure Policy definition can be used to block the creation of accounts that support the old API: "Azure Media Services accounts that allow access to the legacy v2 API should be blocked".

Assets, uploading, and storage

What is a Media Services asset?

A Media Services asset is an Azure Storage account container that's used for each video file that you upload. It has a unique identifier that's used with transforms and other operations. See Assets in Azure Media Services v3.

How do I create a Media Services asset?

Every time you want to upload a media file and do something with it, like encoding or streaming, you create an asset to store the media file and associated files. Assets are automatically created for you if you use the Azure portal. If you're not using the portal to upload files, you must create an asset first.

Encoding

What encoding formats are available with Media Services?

The common encoding formats are available with Media Services Standard Encoder. For a list of all formats, see Standard Encoder formats and codecs.

How do I create a Media Services job?

You can create a job in the Azure portal, or by using the Azure CLI, REST, or any of the SDKs. See the Media Services samples for the language that you prefer.

Can I use Media Services to create an auto-generated bitrate ladder?

Does Media Services support content-aware encoding?

Yes. Media Services can perform a two-pass analysis on a video. It can then recommend the best adaptive bitrate set, resolutions, and encoding settings based on the content of the video.

Can I use an externally encoded or existing MP4 file in Media Services?

Yes. For details and links to a sample application that shows how to upload a single-bitrate MP4 file that's pre-encoded and generate the server manifest (.ism) and client manifest (.ismc), see the answer to the question "Can I stream existing MP4 files that are pre-encoded or encoded in another solution?" in the section about packaging and delivery. That answer also describes the performance impact on the origin.

Can Media Services be used for very short-form file content encoding?

We don't recommend it. Very short content that's less than a minute or two in duration is not ideal for adaptive bitrate streaming. If you intend to stream very short-form files, we recommend that you pre-encode the content into a format that's easily streamed using a single bitrate.

Because most adaptive bitrate players need time to buffer multiple segments of video, as well as time to analyze the network bandwidth before "shifting" up or down the adaptive bitrate ladder, it's often useless to provide a lot of bitrates for content that's under 30 seconds long. By the time the player locks its heuristic algorithm on the right bitrate to play based on network conditions, the file will be done streaming.

In addition, some players default to buffering up to three segments of video. Each segment can be two to six seconds long. For very short-format videos, the player is likely to buffer and begin playback of the first selected bitrate of the adaptive bitrate set. For this reason, we recommend using a single-bitrate MP4 file, and uploading it to an asset if you need HLS or DASH manifest generation. For details on how to achieve this, see the answer to the question "Can I stream existing MP4 files that are pre-encoded or encoded in another solution?" in the section about packaging and delivery.
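The arithmetic behind this recommendation can be sketched as follows. The three-segment buffer and the segment durations come from the text above. The 30-second clip length and the function names are illustrative assumptions.

```python
def buffered_seconds(segments: int, segment_seconds: float) -> float:
    """Seconds of video held in the player's initial buffer."""
    return segments * segment_seconds


def buffered_fraction(clip_seconds: float, segments: int, segment_seconds: float) -> float:
    """Share of the clip covered by the initial buffer, capped at 100%."""
    return min(1.0, buffered_seconds(segments, segment_seconds) / clip_seconds)


# Three buffered segments of a 30-second clip, at both ends of the segment range.
for seg in (2, 6):
    share = buffered_fraction(30, 3, seg)
    print(f"{seg}-second segments: {buffered_seconds(3, seg):.0f} s buffered ({share:.0%} of the clip)")
```

With six-second segments, more than half of a 30-second clip is buffered at the first selected bitrate, which leaves little room for the player to switch bitrates.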

It's necessary to deliver the files in HLS or DASH format only if you want to benefit from the capabilities of those protocols. For single-bitrate streams, they can still offer a lot, such as faster seeking, digital rights management (DRM) support, and content that's harder (though still possible) to download via URL than a progressive-download MP4 in blob storage. Caption support for VTT and IMSC1 is another benefit. In addition, the ability to late bind additional audio renditions, or dubbings in alternate languages, makes this a valuable choice for some situations.

How do I rotate a video, subclip a video, stitch videos, or create thumbnails and sprites?

If you're looking for ways to use a Media Services encoder to rotate a video, subclip a video, stitch videos, or create thumbnails and sprites, see the code samples on the Code Samples page. Available sample languages include Node.js, Python, .NET, and Java.

Live streaming

What is a Media Services live event?

A Media Services live event is the process of ingesting live video feeds and broadcasting them through the RTMPS protocol or Smooth Streaming. For more information, see Live events and live outputs in Media Services.

How do I create a Media Services live event?

The first step is to choose an on-premises encoder. We've provided examples for creating a live event with Wirecast and OBS. If you would rather start with an overview of Media Services live events, see Live event types.

How do I do live transcription with a Media Services live event?

Azure Media Services delivers video, audio, and text in various protocols. When you publish your live stream by using MPEG-DASH or HLS/CMAF, then along with video and audio, the service delivers the transcribed text in IMSC1.1-compatible TTML. For more information, see Live transcription.

How do I monitor my live event's health?

You can monitor live events by subscribing to Azure Event Grid events. For more information, see the Event Grid event schema. You can either:

- Subscribe to the stream-level Microsoft.Media.LiveEventEncoderDisconnected events. If no reconnection comes in for a while, stop and delete your live event.

- Subscribe to the track-level heartbeat events. If all tracks have an incoming bitrate dropping to 0 or the last time stamp is no longer increasing, you can safely shut down the live event. The heartbeat events come in every 20 seconds for every track, so it might be a bit verbose.
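The heartbeat option can be sketched roughly as follows. This is a minimal sketch that assumes each heartbeat event carries `trackName`, `incomingBitrate`, and `lastTimestamp` fields under `data`. Check these names against the Event Grid event schema before relying on them. The function name and the sample values are hypothetical.

```python
from typing import Dict, Iterable


def all_tracks_idle(previous: Dict[str, int], heartbeats: Iterable[dict]) -> bool:
    """Return True when every track in this round of heartbeats looks idle.

    `previous` maps track name to the last time stamp seen in the prior round
    and is updated in place. A track counts as idle when its incoming bitrate
    is 0 or its last time stamp has not increased since the prior round.
    """
    idle = []
    for event in heartbeats:
        data = event["data"]
        name = data["trackName"]
        last_ts = int(data["lastTimestamp"])
        stalled = name in previous and last_ts <= previous[name]
        idle.append(data["incomingBitrate"] == 0 or stalled)
        previous[name] = last_ts
    # An empty round gives no evidence, so it is not treated as idle.
    return bool(idle) and all(idle)


seen: Dict[str, int] = {}
round_one = [
    {"data": {"trackName": "video", "incomingBitrate": 500000, "lastTimestamp": "100"}},
    {"data": {"trackName": "audio", "incomingBitrate": 96000, "lastTimestamp": "100"}},
]
round_two = [
    {"data": {"trackName": "video", "incomingBitrate": 0, "lastTimestamp": "100"}},
    {"data": {"trackName": "audio", "incomingBitrate": 96000, "lastTimestamp": "100"}},
]
first = all_tracks_idle(seen, round_one)
second = all_tracks_idle(seen, round_two)
```

In practice you would probably require several consecutive idle rounds before shutting down the live event, because a single round can reflect a brief encoder hiccup.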

Can I reuse the same streaming URL on restart of a live event?

No, you can't easily use the same streaming URL if you stop and start a live event. Each time you create and publish a new live output (and asset), a new streaming URL (GUID) will be used for the new locator. That way, you're sure there won't be any cache conflict in the streaming endpoint and the content delivery network (CDN). You can prepare (and know) the streaming URLs in advance because you can force a specific GUID for the streaming locator, and then decide the manifest name to use for the live output.

Let's say that you decide to use GUID 1a7ed69e-a361-433d-8a56-29c61872744f for the live output that you'll create tomorrow. When the day comes, you start the live event and create a live output. You can decide to use "conference1" for the manifest and force the GUID for the locator.

The streaming URL is predictable and is http://<youraccountname>-<azureregion>.streaming.media.azure.net/1a7ed69e-a361-433d-8a56-29c61872744f/conference1.ism/manifest.
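A minimal sketch of composing this URL ahead of time, following the pattern shown above. The account name and region code are hypothetical placeholder values, and the function name is illustrative.

```python
import uuid


def live_streaming_url(account: str, region: str, locator_id: str, manifest_name: str) -> str:
    """Compose the predictable streaming URL for a forced locator GUID."""
    # Parsing validates the GUID and normalizes it to lowercase hyphenated form.
    guid = str(uuid.UUID(locator_id))
    host = f"{account}-{region}.streaming.media.azure.net"
    return f"http://{host}/{guid}/{manifest_name}.ism/manifest"


url = live_streaming_url(
    "contoso",   # hypothetical account name
    "usw22",     # hypothetical region code
    "1a7ed69e-a361-433d-8a56-29c61872744f",
    "conference1",
)
print(url)
```

A malformed GUID raises `ValueError` before any URL is handed out, which catches copy-and-paste mistakes early.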

You can't reuse the same live output or asset multiple times. Think of the combination of the live output and asset as a tape recording. After the live output has been recorded to the asset, you can't reuse it for another recording. There will be blob conflict or overwrites if you do that again. Unless you plan to fully purge the blobs in the storage account, and purge the CDN completely, there will be problems. There will likely still be problems because the fragments are already cached downstream at the CDN or in client device caches (for example, the browser cache).

Packaging and delivery

I uploaded, encoded, and published a video. Why won't the video play when I try to stream it?

One of the most common reasons is that you don't have the streaming endpoint from which you're trying to play back in the running state.

What is a Media Services streaming endpoint?

In Media Services, a streaming endpoint represents a dynamic (just-in-time) packaging and origin service that can deliver your live and on-demand content directly to a client player app by using one of the common streaming media protocols (HLS or DASH). In addition, the streaming endpoint provides dynamic (just-in-time) encryption to industry-leading DRM systems. For more information, see Streaming endpoints (origin) in Azure Media Services.

What is a Media Services streaming locator?

To make videos available to clients for playback, you create a streaming locator and then build streaming URLs. Streaming locators are also used to apply streaming policies that contain rules for how the media files are consumed.

How do I create a Media Services streaming locator?

To build a streaming URL, you first create a streaming locator. You then concatenate the streaming endpoint's host name and the streaming locator's path.

What is a streaming policy?

Streaming policies enable you to define streaming protocols and encryption options for your streaming locators. Media Services v3 provides some predefined streaming policies. For more information, see Streaming policies.

How do I create a Media Services streaming policy?

For a list of predefined policies that you can use to get started, see Streaming policies.

How do I stream HLS-format content to Apple devices?

Make sure you have (format=m3u8-cmaf) at the end of your path (after the /manifest portion of the URL) to tell the streaming origin server to return HLS content for consumption on Apple iOS-native devices. For details, see Delivering content.
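As a small illustration, the suffix can be appended like this. The function name and the example URL are hypothetical.

```python
def with_hls_cmaf_format(manifest_url: str) -> str:
    """Append (format=m3u8-cmaf) after the /manifest portion of the URL."""
    if not manifest_url.lower().endswith("/manifest"):
        raise ValueError("expected a URL that ends with /manifest")
    return manifest_url + "(format=m3u8-cmaf)"


hls_url = with_hls_cmaf_format("https://example.invalid/locator-id/video.ism/manifest")
print(hls_url)
```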

Can I stream existing MP4 files that are pre-encoded or encoded in another solution?

Yes, the Media Services origin server (streaming endpoint) supports dynamic packaging of MP4 files to HLS or DASH streaming format. However, the content must be encoded in closed-GOP format, with short GOPs in the duration range of two to six seconds. We recommend the following settings: two-second GOPs, key frame maximum and minimum distance of two seconds, constant bitrate encoding (CBR mode). Most content in this format that's encoded through H.264 or HEVC video codec, along with AAC audio format, can be supported. Additional audio formats that are pre-encoded might also be supported, such as Dolby DD+.
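Many encoders express the key frame distance in frames rather than seconds. The following sketch converts the recommended GOP duration into a frame count. The function name is illustrative, and rounding to the nearest whole frame is an assumption about how you would handle fractional frame rates.

```python
def gop_frames(frame_rate: float, gop_seconds: float = 2.0) -> int:
    """Key frame distance in frames for a GOP of the given duration."""
    if not 2.0 <= gop_seconds <= 6.0:
        raise ValueError("GOP duration should be between two and six seconds")
    return round(frame_rate * gop_seconds)


print(gop_frames(30))   # 60 frames for two-second GOPs at 30 fps
print(gop_frames(25))   # 50 frames for two-second GOPs at 25 fps
```

For a closed, fixed-length GOP, the same value would be used for both the minimum and the maximum key frame distance.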

The key to making it work is to create an asset, upload the pre-encoded files into the asset's container by using Azure Blob Storage client SDKs, and then generate the required server manifest (.ism) and client manifest (.ismc) files. For details, see the .NET sample project in Stream existing MP4 files.

Keep in mind that there are performance implications when you use this approach, because the built-in encoder in Media Services also generates binary indexes (.mpi files) that improve the access time into the MP4 files. Without these files, the server can use slightly more CPU at high load. For more information, see Streaming an existing single bitrate MP4 file with HLS or Dash.

When you're scaling up with this approach, you should monitor the streaming endpoint's CPU load. If you're planning to go to production with a large library of MP4 files that are pre-encoded outside Media Services, file a support ticket to have your architecture reviewed and ask about ways to improve the origin server performance of pre-encoded MP4 content.

Will v2 streaming locators continue to work after February 2024?

Streaming locators created with v2 API will continue to work after our v2 API is turned off. Once the Streaming Locator data is created in the Media Services backend database, there is no dependency on the v2 REST API for streaming. We will not remove v2 specific records from the database when v2 is turned off in February 2024.

There are some properties of assets and locators created with v2 that cannot be accessed or updated using the new v3 API. For example, v2 exposes an Asset Files API that does not have an equivalent feature in the v3 API. This is not a problem for most of our customers, since it is not a widely used feature and you can still stream old locators and delete them when they are no longer needed.

After migration, you should avoid making any calls to the v2 API to modify streaming locators or assets.

Content protection

How do I deliver my media content with dynamic encryption?

Dynamic encryption is securing your media from the time it leaves your computer all the way through storage, processing, and delivery. With Media Services, you can deliver your live and on-demand content encrypted dynamically with Advanced Encryption Standard (AES-128) or any of the three major DRM systems: Microsoft PlayReady, Google Widevine, and Apple FairPlay. For more information, see Protect your content with Media Services dynamic encryption.

Should I use AES-128 clear key encryption or a DRM system?

Customers often wonder whether they should use AES encryption or a DRM system. The main difference between the two is that with AES encryption, the content key is transmitted to the client over TLS. TLS encrypts the key in transit, but the key has no additional encryption of its own (it's delivered "in the clear"). As a result, the key that's used to decrypt the content is accessible to the client player and can be viewed in plain text in a network trace on the client. AES-128 clear key encryption is suitable for use cases where the viewer is a trusted party (for example, encrypting corporate videos distributed within a company to be viewed by employees).

DRM systems like PlayReady, Widevine, and FairPlay provide an additional level of encryption on the key that's used to decrypt the content, compared to an AES-128 clear key. The content key is encrypted to a key protected by the DRM runtime in addition to any transport-level encryption that TLS provides. Decryption is handled in a secure environment at the operating system level, where it's more difficult for a malicious user to attack. We recommend DRM for use cases where the viewer might not be a trusted party and you need the highest level of security.

How do I show a video to only users who have a specific permission, without using Azure AD?

You don't have to use any specific token provider such as Azure Active Directory (Azure AD). You can create your own JWT provider (so-called Secure Token Service, or STS) by using asymmetric key encryption. In your custom STS, you can add claims based on your business logic.

Make sure that the issuer, audience, and claims all match up exactly between what's in the JWT and the ContentKeyPolicyRestriction value used in ContentKeyPolicy.
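A quick way to catch mismatches while testing is to compare a decoded token payload with the values you configured in the restriction. The sketch below is only a local sanity check, not the service's validation logic. It assumes the standard `iss` and `aud` claim names, and the function name, claim names, and values are hypothetical.

```python
def restriction_mismatches(payload: dict, issuer: str, audience: str,
                           required_claims: dict) -> list:
    """List which parts of a decoded JWT payload differ from the restriction."""
    problems = []
    if payload.get("iss") != issuer:
        problems.append("issuer")
    if payload.get("aud") != audience:
        problems.append("audience")
    for claim, expected in required_claims.items():
        # Exact, case-sensitive comparison of each required claim value.
        if payload.get(claim) != expected:
            problems.append(f"claim:{claim}")
    return problems


payload = {"iss": "https://sts.example.invalid", "aud": "my-audience", "user_tier": "premium"}
ok = restriction_mismatches(payload, "https://sts.example.invalid", "my-audience",
                            {"user_tier": "premium"})
bad = restriction_mismatches(payload, "https://sts.example.invalid/", "my-audience",
                             {"user_tier": "Premium"})
```

Here `bad` flags the issuer, because of the trailing slash, and the claim, because of the different letter case. These are the kinds of near matches that are easy to miss.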

For more information, see Protect your content by using Media Services dynamic encryption.

How and where do I get a JWT token before using it to request a license or key?

For production, you need to have a Secure Token Service (that is, a web service) that issues a JWT token upon an HTTPS request. For testing, you can use the code shown in the GetTokenAsync method defined in Program.cs.

After a user is authenticated, the player makes a request to STS for such a token and assigns it as the value of the token. You can use the Azure Media Player API.

For an example of running STS with either a symmetric key or an asymmetric key, see the JWT tool. For an example of a player based on Azure Media Player that uses such a JWT token, see the Azure media test tool. (Expand the player_settings link to see the token input.)

How do I authorize requests to stream videos with AES encryption?

The correct approach is to use Secure Token Service. In STS, depending on the user profile, add different claims (such as "Premium User," "Basic User," or "Free Trial User"). With different claims in a JWT, the user can see different contents. For different contents or assets, ContentKeyPolicyRestriction will have the corresponding RequiredClaims value.

Use Azure Media Services APIs for configuring license/key delivery and encrypting your assets (as shown in this sample).

Why does only audio play and not video when I'm using FairPlay offline mode?

This behavior seems to be by design of the sample app. When an alternate audio track is present (which is the case for HLS) during offline mode, both iOS 10 and iOS 11 default to the alternate audio track. To compensate for this behavior in FPS offline mode, remove the alternate audio track from the stream. To do this on Media Services, add the dynamic manifest filter audio-only=false. In other words, an HLS URL ends with .ism/manifest(format=m3u8-aapl,audio-only=false).
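Adding the filter to an existing HLS URL can be sketched as follows. The function name and the example URL are hypothetical, and the sketch assumes that the URL already ends with a parenthesized manifest option list.

```python
def add_audio_only_false(hls_url: str) -> str:
    """Add the audio-only=false filter to an HLS manifest URL."""
    start = hls_url.lower().rfind("/manifest(")
    if start == -1 or not hls_url.endswith(")"):
        raise ValueError("expected a URL that ends with /manifest(...)")
    if "audio-only=" in hls_url[start:]:
        return hls_url  # the filter is already present; leave the URL unchanged
    return hls_url[:-1] + ",audio-only=false)"


offline_url = add_audio_only_false(
    "https://example.invalid/locator-id/video.ism/manifest(format=m3u8-aapl)"
)
print(offline_url)
```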

Why does FairPlay offline mode still play only audio, without video, after I add audio-only=false?

Depending on the cache key design for the content delivery network, the content might be cached. Purge the cache.

What is the downloaded/offline file structure on iOS devices?

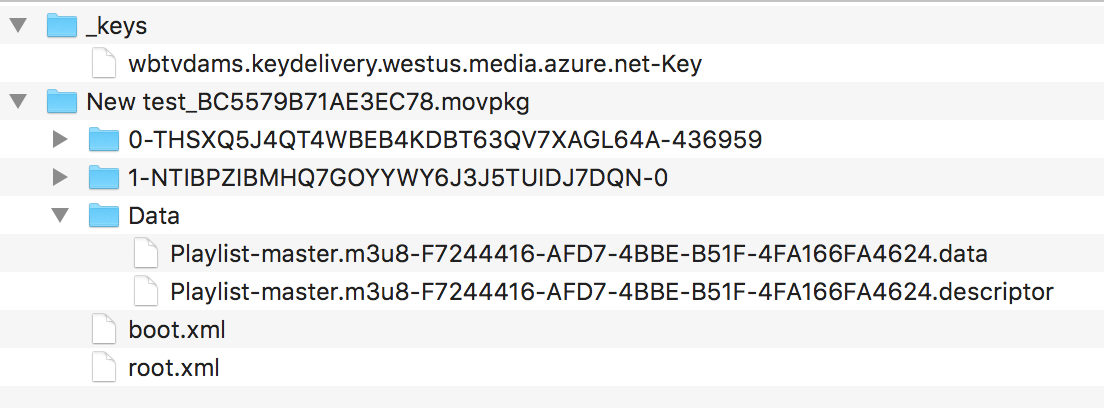

The downloaded file structure on an iOS device looks like the following screenshot. The _keys folder stores downloaded FPS licenses, with one stored file for each license service host. The .movpkg folder stores audio and video content.

The first folder with a name that ends with a dash followed by a number contains video content. The numeric value is the peak bandwidth of the video renditions. The second folder with a name that ends with a dash followed by 0 contains audio content. The third folder named Data contains the master playlist of the FPS content. Finally, boot.xml provides a complete description of the .movpkg folder content.

Here's a sample boot.xml file:

<?xml version="1.0" encoding="UTF-8"?>

<HLSMoviePackage xmlns:xsi="https://www.w3.org/2001/XMLSchema-instance" xmlns="http://apple.com/IMG/Schemas/HLSMoviePackage" xsi:schemaLocation="http://apple.com/IMG/Schemas/HLSMoviePackage /System/Library/Schemas/HLSMoviePackage.xsd">

<Version>1.0</Version>

<HLSMoviePackageType>PersistedStore</HLSMoviePackageType>

<Streams>

<Stream ID="1-4DTFY3A3VDRCNZ53YZ3RJ2NPG2AJHNBD-0" Path="1-4DTFY3A3VDRCNZ53YZ3RJ2NPG2AJHNBD-0" NetworkURL="https://example.streaming.mediaservices.windows.net/e7c76dbb-8e38-44b3-be8c-5c78890c4bb4/MicrosoftElite01.ism/QualityLevels(127000)/Manifest(aac_eng_2_127,format=m3u8-aapl)">

<Complete>YES</Complete>

</Stream>

<Stream ID="0-HC6H5GWC5IU62P4VHE7NWNGO2SZGPKUJ-310656" Path="0-HC6H5GWC5IU62P4VHE7NWNGO2SZGPKUJ-310656" NetworkURL="https://example.streaming.mediaservices.windows.net/e7c76dbb-8e38-44b3-be8c-5c78890c4bb4/MicrosoftElite01.ism/QualityLevels(161000)/Manifest(video,format=m3u8-aapl)">

<Complete>YES</Complete>

</Stream>

</Streams>

<MasterPlaylist>

<NetworkURL>https://example.streaming.mediaservices.windows.net/e7c76dbb-8e38-44b3-be8c-5c78890c4bb4/MicrosoftElite01.ism/manifest(format=m3u8-aapl,audio-only=false)</NetworkURL>

</MasterPlaylist>

<DataItems Directory="Data">

<DataItem>

<ID>CB50F631-8227-477A-BCEC-365BBF12BCC0</ID>

<Category>Playlist</Category>

<Name>master.m3u8</Name>

<DataPath>Playlist-master.m3u8-CB50F631-8227-477A-BCEC-365BBF12BCC0.data</DataPath>

<Role>Master</Role>

</DataItem>

</DataItems>

</HLSMoviePackage>
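To inspect a package like this programmatically, a small script can read the stream entries and classify each folder by its numeric suffix, as described above. This sketch parses a trimmed copy of the sample (NetworkURL attributes and other elements omitted). The function name and the output shape are illustrative.

```python
import xml.etree.ElementTree as ET

NS = {"pkg": "http://apple.com/IMG/Schemas/HLSMoviePackage"}

# Trimmed copy of the sample boot.xml above.
BOOT_XML = """
<HLSMoviePackage xmlns="http://apple.com/IMG/Schemas/HLSMoviePackage">
  <Streams>
    <Stream ID="1-4DTFY3A3VDRCNZ53YZ3RJ2NPG2AJHNBD-0" Path="1-4DTFY3A3VDRCNZ53YZ3RJ2NPG2AJHNBD-0">
      <Complete>YES</Complete>
    </Stream>
    <Stream ID="0-HC6H5GWC5IU62P4VHE7NWNGO2SZGPKUJ-310656" Path="0-HC6H5GWC5IU62P4VHE7NWNGO2SZGPKUJ-310656">
      <Complete>YES</Complete>
    </Stream>
  </Streams>
</HLSMoviePackage>
""".strip()


def describe_streams(boot_xml: str) -> list:
    """Summarize each stream folder listed in boot.xml."""
    root = ET.fromstring(boot_xml)
    streams = []
    for stream in root.findall("pkg:Streams/pkg:Stream", NS):
        path = stream.get("Path")
        # The number after the last dash is the peak bandwidth; 0 marks audio.
        peak = int(path.rsplit("-", 1)[1])
        streams.append({
            "folder": path,
            "kind": "audio" if peak == 0 else "video",
            "peak_bandwidth": peak,
            "complete": stream.findtext("pkg:Complete", default="", namespaces=NS) == "YES",
        })
    return streams


streams = describe_streams(BOOT_XML)
for entry in streams:
    print(entry["kind"], entry["peak_bandwidth"], entry["complete"])
```

A package with no entry of kind "video" would suggest that only the audio rendition was downloaded, which ties back to the audio-only behavior discussed earlier.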

How can I deliver persistent licenses (offline enabled) for some clients/users and non-persistent licenses (offline disabled) for others? Do I have to duplicate the content and use separate content keys?

Because Media Services v3 allows an asset to have multiple StreamingLocator instances, you can have:

- One ContentKeyPolicy instance with license_type = "persistent", ContentKeyPolicyRestriction with a claim on "persistent", and its StreamingLocator instance.

- Another ContentKeyPolicy instance with license_type = "nonpersistent", ContentKeyPolicyRestriction with a claim on "nonpersistent", and its StreamingLocator instance.

- Two StreamingLocator instances that have different ContentKey values.

Depending on the business logic of the custom STS, different claims are issued in the JWT token. With the token, only the corresponding license can be obtained and only the corresponding URL can be played.

What is the mapping between the Widevine and Media Services DRM security levels?

Google's Widevine DRM Architecture Overview defines three security levels. However, the Azure Media Services documentation on the Widevine license template outlines five security levels (client robustness requirements for playback).

Google Widevine defines both sets of security levels. The difference is in usage level: architecture or API. The five security levels are used in the Widevine API. The Azure Media Services Widevine license service deserializes the content_key_specs object, which contains security_level, and passes it to the Widevine global delivery service. The following table shows the mapping between the two sets of security levels.

| Security levels defined in the Widevine architecture | Security levels used in the Widevine API |

|---|---|

| Security Level 1: All content processing, cryptography, and control are performed within the Trusted Execution Environment (TEE). In some implementation models, security processing might be performed in different chips. | security_level=5: The cryptography, decoding, and all handling of the media (compressed and uncompressed) must be handled within a hardware-backed TEE. security_level=4: The cryptography and decoding of content must be performed within a hardware-backed TEE. |

| Security Level 2: Cryptography (but not video processing) is performed within the TEE. Decrypted buffers are returned to the application domain and processed through separate video hardware or software. At Level 2, however, cryptographic information is still processed only within the TEE. | security_level=3: The key material and cryptographic operations must be performed within a hardware-backed TEE. |

| Security Level 3: There's no TEE on the device. Appropriate measures can be taken to protect the cryptographic information and decrypted content on the host operating system. A Level 3 implementation might also include a hardware cryptographic engine, but that enhances only performance, not security. | security_level=2: Software cryptography and an obfuscated decoder are required. security_level=1: Software-based white-box cryptography is required. |
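The mapping in the table can be captured in a small lookup, sketched here for illustration. The dictionary and function names are hypothetical.

```python
# Widevine API security_level -> architecture security level, per the table above.
API_TO_ARCHITECTURE_LEVEL = {5: 1, 4: 1, 3: 2, 2: 3, 1: 3}


def architecture_level(api_security_level: int) -> int:
    """Return the architecture security level for a Widevine API security_level."""
    try:
        return API_TO_ARCHITECTURE_LEVEL[api_security_level]
    except KeyError:
        raise ValueError(f"unknown security_level: {api_security_level}") from None


print(architecture_level(4))   # architecture Security Level 1
```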

Monitoring

How do I monitor my Media Services resources?

Use Azure Monitor to keep track of what's happening with your Media Services resources. For more information, see Monitor Media Services. How-to guides are listed at the end of the page.

How do I monitor my Media Services live event?

Use Azure Event Grid to monitor your live event without a polling service. See Create and monitor Media Services events with Event Grid using the Azure portal.

Players

Which video players can I use with Media Services?

Media Services works with many players. See the list of compatible media players or try the third-party player samples.

High availability

Does Media Services support high availability?

For information about Media Services and high availability, see High availability with Media Services and Video on Demand (VOD).

Migrating from v2

How do I migrate from Media Services v2 to Media Services v3?

We've created a comprehensive guide for migration from v2 to v3. We're interested in knowing about your migration experience and needs, so feel free to provide feedback via GitHub issue or support ticket.

Troubleshooting

How do I find out what this error code means?

We've documented error codes in the following references: Streaming endpoint error codes, Live event error codes, and Job error codes. If you can't find answers there, please create a support ticket.

How do I reset my account credentials?

Billing and cost estimates

How much does Media Services cost?

See the Media Services pricing guide.

Quotas and limits

What quotas and limits are there for Media Services?

Compliance and customer data

Does Media Services store any customer data outside the service region?

Customers attach their own storage accounts to their Azure Media Services accounts. All asset data is stored in these associated storage accounts, and the customer controls the location and replication type of this storage.

Additional data associated with a Media Services account (including content encryption keys, token verification keys, JobInputHttp URLs, and other entity metadata) is stored in Microsoft-owned storage within the region selected for the Media Services account.

Due to data residency requirements in Brazil South and Southeast Asia, additional account data is stored in a zone-redundant fashion and is contained in a single region. For Southeast Asia, all the additional account data is stored in Singapore. For Brazil South, the data is stored in Brazil. In regions other than Brazil South and Southeast Asia, additional account data might also be stored in Microsoft-owned storage in the paired region.

Does Media Services provide high availability or data replication?

Azure Media Services is a regional service and does not provide high availability or data replication. We encourage customers who need these features to build a solution by using Media Services accounts in multiple regions. A sample that shows how to build a solution for high availability with Media Services video on demand is available as a guide.