Troubleshoot Hyper-V to Azure replication and failover

This article describes common issues that you might come across when replicating on-premises Hyper-V VMs to Azure, using Azure Site Recovery.

Enable protection issues

If you experience issues when you enable protection for Hyper-V VMs, check the following recommendations:

- Check that your Hyper-V hosts and VMs meet all requirements and prerequisites.

- If Hyper-V servers are located in System Center Virtual Machine Manager (VMM) clouds, verify that you've prepared the VMM server.

- Check that the Hyper-V Virtual Machine Management service is running on Hyper-V hosts.

- Check for issues that appear in the Hyper-V-VMMS\Admin log on the Hyper-V host. This log is located in Applications and Services Logs > Microsoft > Windows.

- On the guest VM, verify that WMI is enabled and accessible.

- Learn about basic WMI testing.

- Troubleshoot WMI.

- Troubleshoot problems with WMI scripts and services.

- On the guest VM, ensure that the latest version of Integration Services is running.

Cannot enable protection as the virtual machine is not highly available (error code 70094)

If you try to enable replication for a machine and get an error stating that replication can't be enabled because the machine isn't highly available, try the following steps:

- Restart the VMM service on the VMM server.

- Remove the virtual machine from the cluster and add it again.

The VSS writer NTDS failed with status 11 and writer specific failure code 0x800423F4

When you try to enable replication, you might get an error stating that enabling replication failed because NTDS failed. One possible cause is that the virtual machine's operating system is Windows Server 2012, not Windows Server 2012 R2. To fix this issue, try the following steps:

- Upgrade to Windows Server 2012 R2 with update 4072650 applied.

- Ensure that the Hyper-V host is also running Windows Server 2016 or later.

Replication issues

Troubleshoot issues with initial and ongoing replication as follows:

- Make sure you're running the latest version of Site Recovery services.

- Verify whether replication is paused:

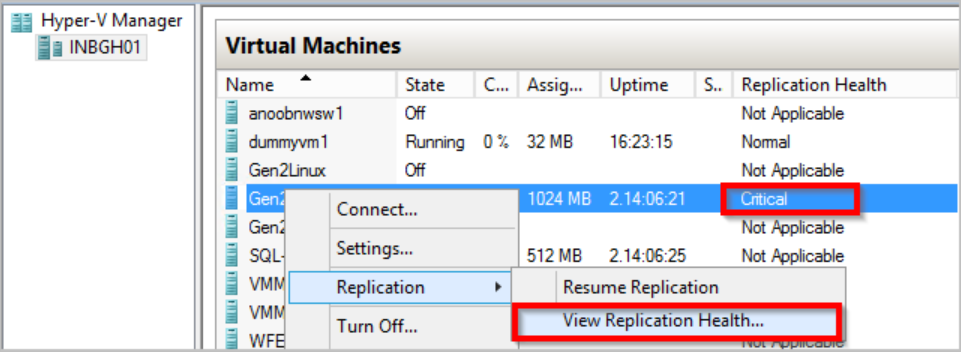

- Check the VM health status in the Hyper-V Manager console.

- If it's critical, right-click the VM > Replication > View Replication Health.

- If replication is paused, select Resume Replication.

- Check that required services are running. If they aren't, restart them.

- If you're replicating Hyper-V without VMM, check that these services are running on the Hyper-V host:

- Virtual Machine Management service

- Microsoft Azure Recovery Services Agent service

- Microsoft Azure Site Recovery service

- WMI Provider Host service

- If you're replicating with VMM in the environment, check that these services are running:

- On the Hyper-V host, check that the Virtual Machine Management service, the Microsoft Azure Recovery Services Agent, and the WMI Provider Host service are running.

- On the VMM server, ensure that the System Center Virtual Machine Manager Service is running.

- Check connectivity between the Hyper-V server and Azure. To check connectivity, open Task Manager on the Hyper-V host. On the Performance tab, select Open Resource Monitor. On the Network tab > Processes with Network Activity, check whether cbengine.exe is actively sending large volumes (MBs) of data.

- Check if the Hyper-V hosts can connect to the Azure storage blob URL. To check if the hosts can connect, select and check cbengine.exe. View TCP Connections to verify connectivity from the host to the Azure storage blob.

- Check performance issues, as described in the next section.
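The required-services check above can be sketched as a small lookup. This is only an illustration: the deployment keys (`no_vmm`, `vmm`), the role names, and the function `services_to_restart` are hypothetical, and the sketch assumes you have already collected the names of the running services by some other means (for example, from the Services console).

```python
# Services the checklist above says must be running, keyed by deployment type
# and machine role. The keys and role names are hypothetical labels.
REQUIRED_SERVICES = {
    "no_vmm": {
        "hyperv_host": [
            "Virtual Machine Management service",
            "Microsoft Azure Recovery Services Agent service",
            "Microsoft Azure Site Recovery service",
            "WMI Provider Host service",
        ],
    },
    "vmm": {
        "hyperv_host": [
            "Virtual Machine Management service",
            "Microsoft Azure Recovery Services Agent",
            "WMI Provider Host service",
        ],
        "vmm_server": ["System Center Virtual Machine Manager Service"],
    },
}


def services_to_restart(deployment, role, running):
    """Return the required services for this deployment and role that are
    missing from `running` (compared case-insensitively)."""
    required = REQUIRED_SERVICES[deployment].get(role, [])
    running_names = {name.lower() for name in running}
    return [name for name in required if name.lower() not in running_names]


# Example: two of the four services are running on a host without VMM.
running_now = ["Virtual Machine Management service", "WMI Provider Host service"]
for service in services_to_restart("no_vmm", "hyperv_host", running_now):
    print("Not running:", service)
```

The names must match the way you collected them, so adjust the strings if your inventory uses different display names.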

Performance issues

Network bandwidth limitations can affect replication. Troubleshoot issues as follows:

- Check if there are bandwidth or throttling constraints in your environment.

- Run the Deployment Planner profiler.

- After running the profiler, follow the bandwidth and storage recommendations.

- Check data churn limitations. If you see high data churn on a VM, do the following:

- Check if your VM is marked for resynchronization.

- Follow these steps to investigate the source of the churn.

- Churn can occur when the HRL log files exceed 50% of the available disk space. If this is the issue, provision more storage space for all VMs on which the issue occurs.

- Check that replication isn't paused. While replication is paused, changes continue to be written to the HRL file, which can increase its size.
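The 50% rule for HRL log files can be checked with a little arithmetic. The following is a minimal sketch, not a Site Recovery tool: the function names are hypothetical, it assumes the log files use the `.hrl` extension, and it leaves it to you to decide which figure counts as the available disk space on the volume.

```python
from pathlib import Path


def total_hrl_bytes(folder):
    """Sum the sizes of all .hrl files under `folder`, searched recursively."""
    return sum(p.stat().st_size for p in Path(folder).rglob("*.hrl") if p.is_file())


def hrl_exceeds_threshold(hrl_bytes, available_bytes, threshold=0.5):
    """Return True when the HRL files take more than `threshold` (50% by
    default) of the available disk space."""
    if available_bytes <= 0:
        # No space available at all: any HRL data is over the limit.
        return hrl_bytes > 0
    return hrl_bytes > threshold * available_bytes


# Example with made-up numbers: 60 GB of HRL files, 100 GB available.
GB = 1024 ** 3
print(hrl_exceeds_threshold(60 * GB, 100 * GB))
```

If the check returns True for a VM, the guidance above applies: provision more storage space for that VM.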

Critical replication state issues

To check replication health, connect to the on-premises Hyper-V Manager console, select the VM, and verify health.

Select View Replication Health to see the details:

- If replication is paused, right-click the VM > Replication > Resume replication.

- If a VM on a Hyper-V host configured in Site Recovery migrates to a different Hyper-V host in the same cluster, or to a standalone machine, replication for the VM isn't impacted. Just check that the new Hyper-V host meets all prerequisites, and is configured in Site Recovery.

App-consistent snapshot issues

An app-consistent snapshot is a point-in-time snapshot of the application data inside the VM. Volume Shadow Copy Service (VSS) ensures that apps on the VM are in a consistent state when the snapshot is taken. This section details some common issues you might experience.

VSS failing inside the VM

Check that the latest version of Integration Services is installed and running. To check whether an update is available, run the following command from an elevated PowerShell prompt on the Hyper-V host: Get-VM | Select-Object Name, State, IntegrationServicesState.

Check that VSS services are running and healthy:

- To check the services, sign in to the guest VM. Then open an admin command prompt, and run the following commands to check whether all the VSS writers are healthy.

- Vssadmin list writers

- Vssadmin list shadows

- Vssadmin list providers

- Check the output. If writers are in a failed state, do the following:

- Check the application event log on the VM for VSS operation errors.

- Try restarting these services associated with the failed writer:

- Volume Shadow Copy

- Azure Site Recovery VSS Provider

- After you do this, wait for a couple of hours to see if app-consistent snapshots are generated successfully.

- As a last resort, try rebooting the VM. This might resolve services that are in an unresponsive state.

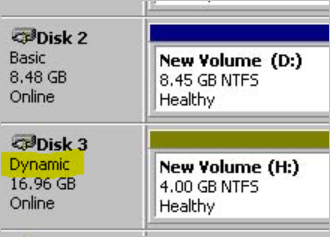
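When a VM has many writers, scanning the output of vssadmin list writers by eye is error-prone. The sketch below filters saved output for writers that need attention. It assumes English-language output in which each writer has `Writer name:`, `State:`, and `Last error:` lines; the function name and the sample text are illustrative, not captured from a real machine.

```python
import re


def unhealthy_writers(vssadmin_output):
    """Return (writer name, state, last error) for every writer whose state
    is not Stable or whose last error is not "No error"."""
    problems = []
    name = state = None
    for raw in vssadmin_output.splitlines():
        line = raw.strip()
        match = re.match(r"Writer name:\s*'(.*)'", line)
        if match:
            # A new writer entry starts here.
            name, state = match.group(1), None
        elif line.startswith("State:"):
            state = line.split(":", 1)[1].strip()
        elif line.startswith("Last error:") and name is not None:
            error = line.split(":", 1)[1].strip()
            if state is None or "Stable" not in state or error != "No error":
                problems.append((name, state, error))
            name = None
    return problems


# Illustrative sample only; real output also includes writer IDs.
sample = """
Writer name: 'System Writer'
   State: [1] Stable
   Last error: No error

Writer name: 'NTDS'
   State: [11] Failed
   Last error: Non-retryable error
"""
for writer, state, error in unhealthy_writers(sample):
    print(f"{writer}: {state} ({error})")
```

Any state other than Stable is reported, including transient ones, so treat the result as a list of writers to look at rather than a list of confirmed failures.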

Check that you don't have dynamic disks in the VM. Dynamic disks aren't supported for app-consistent snapshots. You can check in Disk Management (diskmgmt.msc).

Check that you don't have an iSCSI disk attached to the VM. This isn't supported.

Check that the Backup service is enabled. Verify that it's enabled in Hyper-V settings > Integration Services.

Make sure there are no conflicts with apps taking VSS snapshots. If multiple apps try to take VSS snapshots at the same time, conflicts can occur. For example, a conflict can occur if a backup app takes VSS snapshots at the time your replication policy schedules Site Recovery to take a snapshot.

Check if the VM is experiencing a high churn rate:

- You can measure the daily data change rate for the guest VMs, using performance counters on Hyper-V host. To measure the data change rate, enable the following counter. Aggregate a sample of this value across the VM disks for 5-15 minutes, to get the VM churn.

- Category: “Hyper-V Virtual Storage Device”

- Counter: “Write Bytes / Sec”

- This data churn rate will increase or remain at a high level, depending on how busy the VM or its apps are.

- For standard storage, Site Recovery supports an average source disk data churn of 2 MB/s.

- In addition you can verify storage scalability targets.
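The churn measurement described above comes down to averaging the sampled counter values per disk and comparing the result with the 2 MB/s figure for standard storage. The following is a minimal sketch under stated assumptions: the function names are hypothetical, the samples are "Write Bytes / Sec" readings you collected yourself over the 5-15 minute window, and 1 MB is taken as 1,048,576 bytes.

```python
MB = 1024 * 1024  # assumption: 1 MB = 1,048,576 bytes


def average_churn(samples_by_disk):
    """Return (per-disk average in MB/s, VM total in MB/s).

    `samples_by_disk` maps a disk name to its list of "Write Bytes / Sec"
    samples. The VM total is the sum of the per-disk averages.
    """
    per_disk = {
        disk: (sum(values) / len(values) / MB if values else 0.0)
        for disk, values in samples_by_disk.items()
    }
    return per_disk, sum(per_disk.values())


def disks_over_limit(samples_by_disk, limit_mb_per_s=2.0):
    """Return the disks whose average churn is above the limit, sorted by name."""
    per_disk, _ = average_churn(samples_by_disk)
    return sorted(disk for disk, rate in per_disk.items() if rate > limit_mb_per_s)


# Example with made-up samples for two disks.
samples = {"disk0": [1 * MB, 3 * MB], "disk1": [4 * MB, 4 * MB]}
per_disk, vm_total = average_churn(samples)
print(per_disk, vm_total, disks_over_limit(samples))
```

A short sampling window only approximates the daily change rate, so repeat the measurement during busy periods before drawing conclusions.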

If you're using a Linux-based server, make sure that you've enabled app-consistency on it.

Run the Deployment Planner.

VSS failing inside the Hyper-V Host

Check event logs for VSS errors and recommendations:

- On the Hyper-V host server, open the Hyper-V Admin event log in Event Viewer > Applications and Services Logs > Microsoft > Windows > Hyper-V > Admin.

- Verify whether there are any events that indicate app-consistent snapshot failures.

- A typical error is: "Hyper-V failed to generate VSS snapshot set for virtual machine 'XYZ': The writer experienced a non-transient error. Restarting the VSS service might resolve issues if the service is unresponsive."

To generate VSS snapshots for the VM, check that Hyper-V Integration Services is installed on the VM, and that the Backup (VSS) Integration Service is enabled.

- Ensure that the Integration Services VSS service/daemons are running on the guest, and are in an OK state.

- You can check this from an elevated PowerShell session on the Hyper-V host with the command Get-VMIntegrationService -VMName <VMName> -Name VSS. You can also get this information by signing in to the guest VM.

- Ensure that the Backup/VSS Integration Services on the VM are running and in a healthy state. If not, restart these services, and restart the Hyper-V Volume Shadow Copy Requestor service on the Hyper-V host server.

Common errors

| Error code | Message | Details |
|---|---|---|
| 0x800700EA | "Hyper-V failed to generate VSS snapshot set for virtual machine: More data is available. (0x800700EA). VSS snapshot set generation can fail if backup operation is in progress. Replication operation for virtual machine failed: More data is available." | Check if your VM has dynamic disk enabled. This isn't supported. |
| 0x80070032 | "Hyper-V Volume Shadow Copy Requestor failed to connect to virtual machine <./VMname> because the version does not match the version expected by Hyper-V" | Check if the latest Windows updates are installed. Upgrade to the latest version of Integration Services. |

Collect replication logs

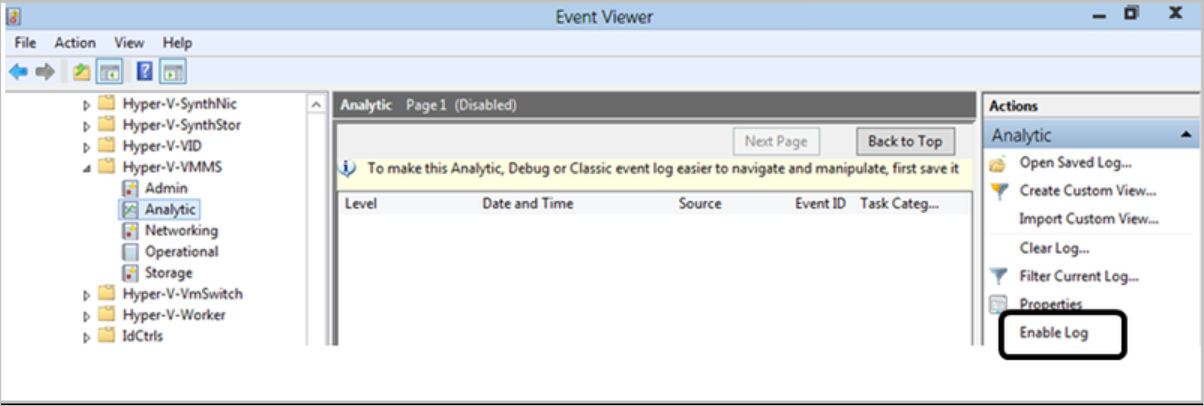

All Hyper-V replication events are logged in the Hyper-V-VMMS\Admin log, located in Applications and Services Logs > Microsoft > Windows. In addition, you can enable an Analytic log for the Hyper-V Virtual Machine Management Service, as follows:

Make the Analytic and Debug logs viewable in the Event Viewer. To make the logs available, in the Event Viewer, select View > Show Analytic and Debug Logs. The Analytic log appears under Hyper-V-VMMS.

In the Actions pane, select Enable Log.

After it's enabled, it appears in Performance Monitor, as an Event Trace Session under Data Collector Sets.

To view the collected information, stop the tracing session by disabling the log. Then save the log, and open it again in Event Viewer, or use other tools to convert it as required.

Event log locations

| Event log | Details |
|---|---|
| Applications and Services Logs/Microsoft/VirtualMachineManager/Server/Admin (VMM server) | Logs to troubleshoot VMM issues. |
| Applications and Services Logs/MicrosoftAzureRecoveryServices/Replication (Hyper-V host) | Logs to troubleshoot Microsoft Azure Recovery Services Agent issues. |
| Applications and Services Logs/Microsoft/Azure Site Recovery/Provider/Operational (Hyper-V host) | Logs to troubleshoot Microsoft Azure Site Recovery Service issues. |
| Applications and Services Logs/Microsoft/Windows/Hyper-V-VMMS/Admin (Hyper-V host) | Logs to troubleshoot Hyper-V VM management issues. |

Log collection for advanced troubleshooting

These tools can help with advanced troubleshooting:

- For VMM, perform Site Recovery log collection using the Support Diagnostics Platform (SDP) tool.

- For Hyper-V without VMM, download this tool, and run it on the Hyper-V host to collect the logs.
