IBM Db2 Azure Virtual Machines DBMS deployment for SAP workload

With Microsoft Azure, you can migrate your existing SAP application running on IBM Db2 for Linux, UNIX, and Windows (LUW) to Azure virtual machines. With SAP on IBM Db2 for LUW, administrators and developers can keep using the same development and administration tools that are available on-premises. General information about running SAP Business Suite on IBM Db2 for LUW is available on the SAP Community Network (SCN) in SAP on IBM Db2 for Linux, UNIX, and Windows.

For more information and updates about SAP on Db2 for LUW on Azure, see SAP Note 2233094.

There are various articles for SAP workload on Azure. We recommend beginning with Get started with SAP on Azure VMs and then reading about other areas of interest.

The following SAP Notes are related to SAP on Azure regarding the area covered in this document:

| Note number | Title |
|---|---|
| 1928533 | SAP Applications on Azure: Supported Products and Azure VM types |
| 2015553 | SAP on Microsoft Azure: Support Prerequisites |
| 1999351 | Troubleshooting Enhanced Azure Monitoring for SAP |
| 2178632 | Key Monitoring Metrics for SAP on Microsoft Azure |
| 1409604 | Virtualization on Windows: Enhanced Monitoring |
| 2191498 | SAP on Linux with Azure: Enhanced Monitoring |
| 2233094 | DB6: SAP Applications on Azure Using IBM DB2 for Linux, UNIX, and Windows - Additional Information |
| 2243692 | Linux on Microsoft Azure (IaaS) VM: SAP license issues |
| 1984787 | SUSE LINUX Enterprise Server 12: Installation notes |
| 2002167 | Red Hat Enterprise Linux 7.x: Installation and Upgrade |
| 1597355 | Swap-space recommendation for Linux |

As a preread to this document, review Considerations for Azure Virtual Machines DBMS deployment for SAP workload. Also review the other guides in SAP workload on Azure.

IBM Db2 for Linux, UNIX, and Windows Version Support

SAP on IBM Db2 for LUW on Microsoft Azure Virtual Machine Services is supported as of Db2 version 10.5.

For information about supported SAP products and Azure virtual machine (VM) types, refer to SAP Note 1928533.

IBM Db2 for Linux, UNIX, and Windows Configuration Guidelines for SAP Installations in Azure VMs

Storage Configuration

For an overview of Azure storage types for SAP workload, consult the article Azure Storage types for SAP workload. All database files must be stored on mounted disks of Azure block storage (Windows: NTFS; Linux: xfs, supported as of Db2 11.1, or ext3).

Remote shared volumes, such as the Azure services in the following scenarios, are NOT supported for Db2 database files:

- Microsoft Azure File Service for all guest OS.

- Azure NetApp Files for Db2 running in Windows guest OS.

The following remote shared volume scenario is supported for Db2 database files:

- Hosting the Db2 data and log files of a Linux guest OS on NFS shares hosted on Azure NetApp Files.
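A deployment script could encode the support statements above as a small lookup and use it to validate a planned layout. The following Python sketch uses a hypothetical helper. Its function name and string values are illustrative and don't belong to any SAP or Microsoft tooling. It encodes only what this section states, so always confirm against SAP Note 2233094.

```python
def db2_storage_supported(guest_os: str, storage: str, filesystem: str = "",
                          db2_version: str = "11.5") -> bool:
    """Hypothetical encoding of this section's support statements.

    guest_os:   "linux" or "windows"
    storage:    "block" (Azure disks), "azure_files", or "anf" (Azure NetApp Files)
    filesystem: only evaluated for block storage
    """
    if storage == "azure_files":
        return False                    # not supported for any guest OS
    if storage == "anf":
        return guest_os == "linux"      # NFS shares on ANF: Linux guests only
    if storage != "block":
        raise ValueError(f"unknown storage type: {storage}")
    if guest_os == "windows":
        return filesystem == "ntfs"
    major, minor = (int(part) for part in db2_version.split(".")[:2])
    if filesystem == "xfs":
        return (major, minor) >= (11, 1)  # xfs supported as of Db2 11.1
    return filesystem == "ext3"


print(db2_storage_supported("linux", "block", "xfs", "11.5.7"))  # True
print(db2_storage_supported("windows", "anf"))                   # False
```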

If you're using disks based on Azure Page BLOB Storage or Managed Disks, the statements made in Considerations for Azure Virtual Machines DBMS deployment for SAP workload apply to deployments with the Db2 DBMS as well.

As explained earlier in the general part of the document, Azure disks have quotas on IOPS and throughput. The exact quotas depend on the VM type used. A list of VM types with their quotas can be found here (Linux) and here (Windows).

As long as the current IOPS quota per disk is sufficient, it's possible to store all the database files on one single mounted disk. However, you should always separate the data files and the transaction log files onto different disks/VHDs.
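To decide whether one disk is enough, compare the per-disk quota with the workload's requirement. The following Python sketch uses a hypothetical `disks_needed` helper to estimate the smallest number of identical disks whose combined baseline IOPS and throughput meet a target. It ignores bursting and VM-level limits, and the target values in the usage are assumed examples.

```python
import math


def disks_needed(per_disk_iops: int, per_disk_mbps: int,
                 need_iops: int, need_mbps: int) -> int:
    """Smallest number of identical disks whose combined baseline
    IOPS and throughput both cover the requirement (bursting ignored)."""
    return max(1,
               math.ceil(need_iops / per_disk_iops),
               math.ceil(need_mbps / per_disk_mbps))


# Assumed example targets, using P15 disks (1,100 IOPS, 125 MB/s each).
data_disks = disks_needed(1_100, 125, need_iops=4_000, need_mbps=450)
log_disks = disks_needed(1_100, 125, need_iops=800, need_mbps=100)
print(data_disks, log_disks)  # 4 1
```

Data and log belong on different disks, so size them separately as shown.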

For performance considerations, also refer to the chapter 'Data Safety and Performance Considerations for Database Directories' in the SAP installation guides.

Alternatively, you can use Windows Storage Pools, which are only available in Windows Server 2012 and higher, as described in Considerations for Azure Virtual Machines DBMS deployment for SAP workload. On Linux, you can use LVM or mdadm to create one large logical device over multiple disks.

For Azure M-series VMs, Azure Write Accelerator can reduce the write latency of the transaction logs by multiple factors compared to Azure Premium storage alone. Therefore, you should enable Azure Write Accelerator for the VHD or VHDs that form the volume for the Db2 transaction logs. For details, see the document Write Accelerator.

IBM Db2 LUW 11.5 added support for a 4-KB sector size. However, with 11.5 you must explicitly enable the 4-KB sector size with the registry setting db2set DB2_4K_DEVICE_SUPPORT=ON, as documented in the IBM Db2 documentation.

For Db2 versions lower than 11.5, a 512-byte sector size is a prerequisite. Premium SSD disks are 4-KB native and provide 512-byte emulation. Ultra disk uses a 4-KB sector size by default, but you can enable a 512-byte sector size when you create the Ultra disk. Details are available in Using Azure ultra disks.
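The sector-size rules above can be expressed as a compatibility check. This Python sketch is illustrative only. The parameter `db2_4k_device_support=True` stands for having set `db2set DB2_4K_DEVICE_SUPPORT=ON`, and the disk-type strings are hypothetical labels.

```python
from __future__ import annotations


def db2_allowed_sector_sizes(db2_version: str,
                             db2_4k_device_support: bool = False) -> set[int]:
    """Logical sector sizes (bytes) usable for Db2 storage paths."""
    major, minor = (int(part) for part in db2_version.split(".")[:2])
    if (major, minor) >= (11, 5) and db2_4k_device_support:
        return {512, 4096}
    return {512}  # versions below 11.5, or 11.5 without DB2_4K_DEVICE_SUPPORT=ON


def disk_logical_sector_size(disk_type: str, ultra_sector_size: int = 4096) -> int:
    if disk_type == "premium_ssd":
        return 512                # 4-KB native, exposed with 512-byte emulation
    if disk_type == "ultra_disk":
        return ultra_sector_size  # 4096 by default; 512 only if set at creation
    raise ValueError(f"unknown disk type: {disk_type}")


def sector_size_compatible(db2_version: str, disk_type: str,
                           ultra_sector_size: int = 4096,
                           db2_4k_device_support: bool = False) -> bool:
    return (disk_logical_sector_size(disk_type, ultra_sector_size)
            in db2_allowed_sector_sizes(db2_version, db2_4k_device_support))


print(sector_size_compatible("10.5", "ultra_disk"))                         # False
print(sector_size_compatible("10.5", "ultra_disk", ultra_sector_size=512))  # True
```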

On Windows, if you use Storage Pools for the Db2 storage paths of the log_dir, sapdata, and saptmp directories, you must specify a physical disk sector size of 512 bytes. When using Windows Storage Pools, you must create the storage pools manually through the command-line interface using the parameter -LogicalSectorSizeDefault. For more information, see New-StoragePool.

Recommendation on VM and disk structure for IBM Db2 deployment

IBM Db2 for SAP NetWeaver Applications is supported on any VM type listed in SAP Note 1928533. The recommended VM families for running an IBM Db2 database are Eds_v4/Eas_v4/Es_v3, and M/M_v2-series for large multi-terabyte databases. Enabling M-series Write Accelerator can improve the write performance of the IBM Db2 transaction log disks.

The following tables show baseline configurations for various sizes and uses of SAP on Db2 deployments, from small to large. The tables are based on Azure Premium storage. However, Azure Ultra disk is also fully supported with Db2 and can be used instead. To define an Ultra disk configuration, use the values for capacity, burst throughput, and burst IOPS. You can limit the IOPS for /db2/<SID>/log_dir to around 5,000 IOPS.
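The figures in the tables below follow from simple arithmetic: striping N identical disks multiplies capacity, IOPS, and throughput by N. The following Python sketch reproduces that arithmetic from the per-disk Premium SSD values used in the tables. The optional VM caps are hypothetical parameters that stand in for the VM-level quotas mentioned earlier. Look up the real limits for your VM size.

```python
from __future__ import annotations

from dataclasses import dataclass

# Per-disk Premium SSD values as used in the tables:
# (size GB, IOPS, MB/s, burst IOPS, burst MB/s).
# P30 and P40 have no credit-based bursting, so burst equals baseline.
PREMIUM_SSD = {
    "P6": (64, 240, 50, 3_500, 170),
    "P10": (128, 500, 100, 3_500, 170),
    "P15": (256, 1_100, 125, 3_500, 170),
    "P20": (512, 2_300, 150, 3_500, 170),
    "P30": (1_024, 5_000, 200, 5_000, 200),
    "P40": (2_048, 7_500, 250, 7_500, 250),
}


@dataclass(frozen=True)
class StripeSet:
    size_gb: int
    iops: int
    mbps: int
    burst_iops: int
    burst_mbps: int


def stripe_set(tier: str, disks: int,
               vm_iops_cap: int | None = None,
               vm_mbps_cap: int | None = None) -> StripeSet:
    """Aggregate `disks` identical disks striped into one volume.

    Striping scales all values linearly; the optional VM caps (hypothetical
    inputs) limit what the VM can actually drive.
    """
    size, iops, mbps, b_iops, b_mbps = (v * disks for v in PREMIUM_SSD[tier])
    if vm_iops_cap is not None:
        iops, b_iops = min(iops, vm_iops_cap), min(b_iops, vm_iops_cap)
    if vm_mbps_cap is not None:
        mbps, b_mbps = min(mbps, vm_mbps_cap), min(b_mbps, vm_mbps_cap)
    return StripeSet(size, iops, mbps, b_iops, b_mbps)


# sapdata of the "Small SAP system" configuration: 4 x P15
print(stripe_set("P15", 4))
# StripeSet(size_gb=1024, iops=4400, mbps=500, burst_iops=14000, burst_mbps=680)
```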

Extra small SAP system: database size 50 - 200 GB: example Solution Manager

| VM Name / Size | Db2 mount point | Azure Premium Disk | # of Disks | IOPS | Throughput [MB/s] | Size [GB] | Burst IOPS | Burst Throughput [MB/s] | Stripe size | Caching |
|---|---|---|---|---|---|---|---|---|---|---|
| E4ds_v4 | /db2 | P6 | 1 | 240 | 50 | 64 | 3,500 | 170 | | |
| vCPU: 4 | /db2/<SID>/sapdata | P10 | 2 | 1,000 | 200 | 256 | 7,000 | 340 | 256 KB | ReadOnly |
| RAM: 32 GiB | /db2/<SID>/saptmp | P6 | 1 | 240 | 50 | 128 | 3,500 | 170 | | |
| | /db2/<SID>/log_dir | P6 | 2 | 480 | 100 | 128 | 7,000 | 340 | 64 KB | |
| | /db2/<SID>/offline_log_dir | P10 | 1 | 500 | 100 | 128 | 3,500 | 170 | | |

Small SAP system: database size 200 - 750 GB: small Business Suite

| VM Name / Size | Db2 mount point | Azure Premium Disk | # of Disks | IOPS | Throughput [MB/s] | Size [GB] | Burst IOPS | Burst Throughput [MB/s] | Stripe size | Caching |
|---|---|---|---|---|---|---|---|---|---|---|
| E16ds_v4 | /db2 | P6 | 1 | 240 | 50 | 64 | 3,500 | 170 | | |
| vCPU: 16 | /db2/<SID>/sapdata | P15 | 4 | 4,400 | 500 | 1,024 | 14,000 | 680 | 256 KB | ReadOnly |
| RAM: 128 GiB | /db2/<SID>/saptmp | P6 | 2 | 480 | 100 | 128 | 7,000 | 340 | 128 KB | |
| | /db2/<SID>/log_dir | P15 | 2 | 2,200 | 250 | 512 | 7,000 | 340 | 64 KB | |
| | /db2/<SID>/offline_log_dir | P10 | 1 | 500 | 100 | 128 | 3,500 | 170 | | |

Medium SAP system: database size 500 - 1000 GB: small Business Suite

| VM Name / Size | Db2 mount point | Azure Premium Disk | # of Disks | IOPS | Throughput [MB/s] | Size [GB] | Burst IOPS | Burst Throughput [MB/s] | Stripe size | Caching |
|---|---|---|---|---|---|---|---|---|---|---|
| E32ds_v4 | /db2 | P6 | 1 | 240 | 50 | 64 | 3,500 | 170 | | |
| vCPU: 32 | /db2/<SID>/sapdata | P30 | 2 | 10,000 | 400 | 2,048 | 10,000 | 400 | 256 KB | ReadOnly |
| RAM: 256 GiB | /db2/<SID>/saptmp | P10 | 2 | 1,000 | 200 | 256 | 7,000 | 340 | 128 KB | |
| | /db2/<SID>/log_dir | P20 | 2 | 4,600 | 300 | 1,024 | 7,000 | 340 | 64 KB | |
| | /db2/<SID>/offline_log_dir | P15 | 1 | 1,100 | 125 | 256 | 3,500 | 170 | | |

Large SAP system: database size 750 - 2000 GB: Business Suite

| VM Name / Size | Db2 mount point | Azure Premium Disk | # of Disks | IOPS | Throughput [MB/s] | Size [GB] | Burst IOPS | Burst Throughput [MB/s] | Stripe size | Caching |
|---|---|---|---|---|---|---|---|---|---|---|
| E64ds_v4 | /db2 | P6 | 1 | 240 | 50 | 64 | 3,500 | 170 | | |
| vCPU: 64 | /db2/<SID>/sapdata | P30 | 4 | 20,000 | 800 | 4,096 | 20,000 | 800 | 256 KB | ReadOnly |
| RAM: 504 GiB | /db2/<SID>/saptmp | P15 | 2 | 2,200 | 250 | 512 | 7,000 | 340 | 128 KB | |
| | /db2/<SID>/log_dir | P20 | 4 | 9,200 | 600 | 2,048 | 14,000 | 680 | 64 KB | |
| | /db2/<SID>/offline_log_dir | P20 | 1 | 2,300 | 150 | 512 | 3,500 | 170 | | |

Large multi-terabyte SAP system: database size 2 TB+: Global Business Suite system

| VM Name / Size | Db2 mount point | Azure Premium Disk | # of Disks | IOPS | Throughput [MB/s] | Size [GB] | Burst IOPS | Burst Throughput [MB/s] | Stripe size | Caching |
|---|---|---|---|---|---|---|---|---|---|---|
| M128s | /db2 | P10 | 1 | 500 | 100 | 128 | 3,500 | 170 | | |
| vCPU: 128 | /db2/<SID>/sapdata | P40 | 4 | 30,000 | 1,000 | 8,192 | 30,000 | 1,000 | 256 KB | ReadOnly |
| RAM: 2,048 GiB | /db2/<SID>/saptmp | P20 | 2 | 4,600 | 300 | 1,024 | 7,000 | 340 | 128 KB | |
| | /db2/<SID>/log_dir | P30 | 4 | 20,000 | 800 | 4,096 | 20,000 | 800 | 64 KB | Write Accelerator |
| | /db2/<SID>/offline_log_dir | P30 | 1 | 5,000 | 200 | 1,024 | 5,000 | 200 | | |

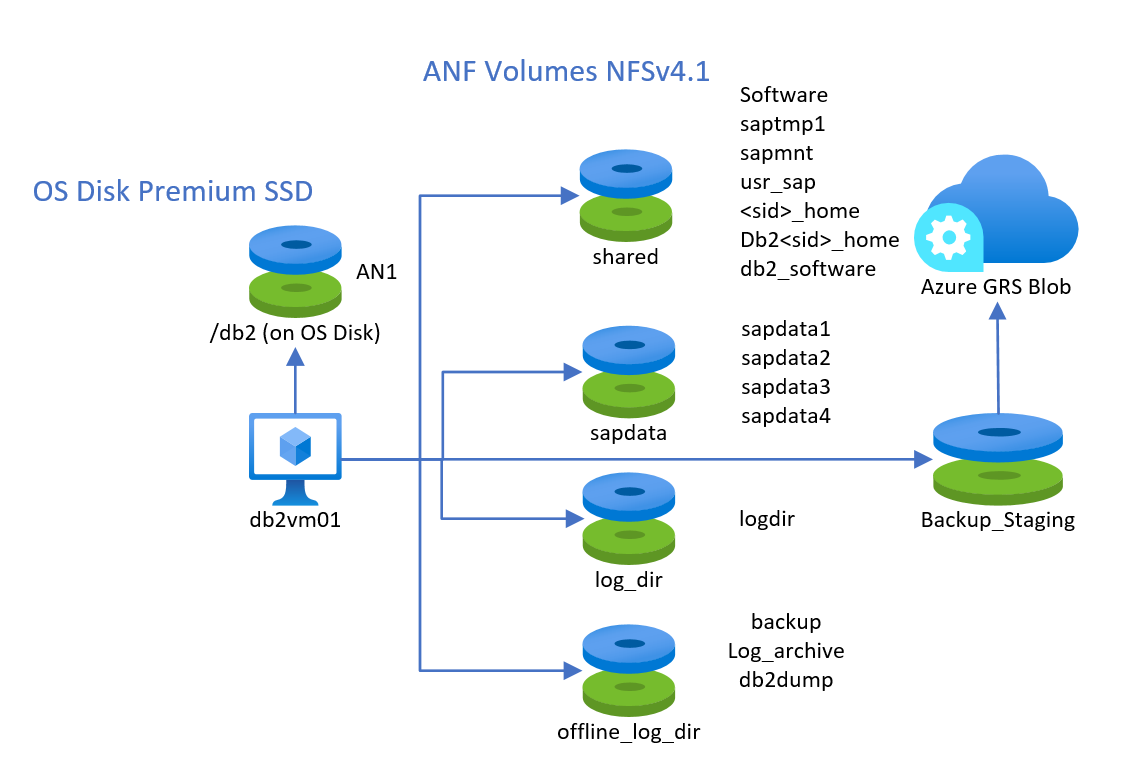

Using Azure NetApp Files

NFS v4.1 volumes based on Azure NetApp Files (ANF) are supported with IBM Db2 hosted in a SUSE or Red Hat Linux guest OS. You should create at least four different volumes:

- One shared volume for saptmp1, sapmnt, usr_sap, <sid>_home, db2<sid>_home, db2_software

- One data volume for sapdata1 to sapdatan

- One log volume for the redo log directory

- One volume for the log archives and backups

A fifth, optional ANF volume could hold longer-term backups, which you snapshot and store in Azure Blob storage.

The configuration could look as shown here:

Choose the performance tier and the size of the ANF volumes based on your performance requirements. However, we recommend the Ultra performance level for the data and log volumes. Mixing block storage and shared storage types for the data and log volumes isn't supported.
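The rules above can be checked before deployment: separate data and log volumes, no mixing of block and NFS storage between them, and the Ultra recommendation. The following is a minimal Python sketch. `Volume` and `check_data_log_layout` are hypothetical names, and the sketch reports the Ultra recommendation as a warning rather than an error.

```python
from typing import NamedTuple


class Volume(NamedTuple):
    name: str                # e.g. ANF volume "db2data" or an LVM volume
    kind: str                # "block" (Azure disks) or "nfs" (Azure NetApp Files)
    service_level: str = ""  # ANF service level such as "Ultra"; empty for disks


def check_data_log_layout(data: Volume, log: Volume) -> list:
    findings = []
    if data.name == log.name:
        findings.append("ERROR: data and log files must be on separate volumes")
    if data.kind != log.kind:
        findings.append("ERROR: mixing block and NFS storage for data and log isn't supported")
    for role, vol in (("data", data), ("log", log)):
        if vol.kind == "nfs" and vol.service_level != "Ultra":
            findings.append(f"WARN: {role} volume: Ultra service level is recommended")
    return findings


print(check_data_log_layout(Volume("db2data", "nfs", "Ultra"),
                            Volume("db2log", "nfs", "Ultra")))  # []
print(check_data_log_layout(Volume("lv_sapdata", "block"),
                            Volume("db2log", "nfs", "Premium")))
```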

Regarding mount options, mounting those volumes could look like the following (replace <SID> and <sid> with the SID of your SAP system):

```bash
# Set the NFSv4 ID-mapping domain to the Azure NetApp Files default domain
vi /etc/idmapd.conf
```

```
# Example
[General]
Domain = defaultv4iddomain.com

[Mapping]
Nobody-User = nobody
Nobody-Group = nobody
```

```bash
# Shared volume: create the local mount points and the directories on the volume
mount -t nfs -o rw,hard,sync,rsize=262144,wsize=262144,sec=sys,vers=4.1,tcp 172.17.10.4:/db2shared /mnt
mkdir -p /db2/Software /db2/<SID>/saptmp /usr/sap/<SID> /sapmnt/<SID> /home/<sid>adm /db2/db2<sid> /db2/<SID>/db2_software
mkdir -p /mnt/Software /mnt/saptmp /mnt/usr_sap /mnt/sapmnt /mnt/<sid>_home /mnt/db2_software /mnt/db2<sid>
umount /mnt

# Data volume
mount -t nfs -o rw,hard,sync,rsize=262144,wsize=262144,sec=sys,vers=4.1,tcp 172.17.10.4:/db2data /mnt
mkdir -p /db2/<SID>/sapdata/sapdata1 /db2/<SID>/sapdata/sapdata2 /db2/<SID>/sapdata/sapdata3 /db2/<SID>/sapdata/sapdata4
mkdir -p /mnt/sapdata1 /mnt/sapdata2 /mnt/sapdata3 /mnt/sapdata4
umount /mnt

# Log volume
mount -t nfs -o rw,hard,sync,rsize=262144,wsize=262144,sec=sys,vers=4.1,tcp 172.17.10.4:/db2log /mnt
mkdir /db2/<SID>/log_dir
mkdir /mnt/log_dir
umount /mnt

# Backup volume: backups, log archives (offline_log_dir), and db2dump
mount -t nfs -o rw,hard,sync,rsize=262144,wsize=262144,sec=sys,vers=4.1,tcp 172.17.10.4:/db2backup /mnt
mkdir /db2/<SID>/backup
mkdir /mnt/backup
mkdir /db2/<SID>/offline_log_dir /db2/<SID>/db2dump
mkdir /mnt/offline_log_dir /mnt/db2dump
umount /mnt
```

Note

The mount options hard and sync are required.
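A mistyped option list is easy to miss, so a deployment script might validate the options before it writes /etc/fstab. The following Python sketch checks for `hard`, `sync`, and `vers=4.1` and builds an fstab line. The sketch mounts a subdirectory of the volume (here `/db2log/log_dir`) directly at its final mount point. That is an assumed pattern, not one this section prescribes, so adapt the paths to your layout.

```python
REQUIRED_FLAGS = {"hard", "sync"}


def parse_mount_options(opts: str) -> dict:
    """Split 'a,b=c' into {'a': '', 'b': 'c'}."""
    parsed = {}
    for item in opts.split(","):
        key, _, value = item.partition("=")
        parsed[key] = value
    return parsed


def nfs_option_problems(opts: str) -> list:
    parsed = parse_mount_options(opts)
    problems = [f"missing {flag}" for flag in sorted(REQUIRED_FLAGS - set(parsed))]
    if parsed.get("vers") != "4.1":
        problems.append("vers=4.1 expected")
    if "soft" in parsed:
        problems.append("soft conflicts with hard")
    return problems


def fstab_entry(server: str, export_path: str, mount_point: str, opts: str) -> str:
    problems = nfs_option_problems(opts)
    if problems:
        raise ValueError("; ".join(problems))
    # fstab fields: device, mount point, file system type, options, dump, fsck pass
    return f"{server}:{export_path} {mount_point} nfs {opts} 0 0"


DOC_OPTS = "rw,hard,sync,rsize=262144,wsize=262144,sec=sys,vers=4.1,tcp"
print(fstab_entry("172.17.10.4", "/db2log/log_dir", "/db2/<SID>/log_dir", DOC_OPTS))
```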

Backup/Restore

The backup/restore functionality for IBM Db2 for LUW is supported in the same way as on standard Windows Server Operating Systems and Hyper-V.

Make sure that you have a valid database backup strategy in place.

As in bare-metal deployments, backup/restore performance depends on how many volumes can be read in parallel and what the throughput of those volumes is. In addition, the CPU consumption of backup compression can play a significant role on VMs with up to eight CPU threads. Therefore, you can assume the following:

- The fewer disks used to store the database devices, the lower the overall read throughput

- The fewer CPU threads in the VM, the more severe the impact of backup compression

- The fewer targets (stripe directories, disks) the backup is written to, the lower the throughput
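A rough estimate shows how these factors interact. The slowest stage sets the pace, whether that is reading the database disks, writing the targets, or compressing on the CPU. This Python sketch is a simplified model. It ignores the reduced write volume that compression produces, and `cpu_limit_mbps` is a hypothetical input that you'd measure yourself.

```python
def backup_hours(db_size_gb: float, read_mbps: float, write_mbps: float,
                 cpu_limit_mbps: float = float("inf")) -> float:
    """Rough backup duration: the slowest stage sets the pace.

    read_mbps:      combined throughput of the disks holding the database
    write_mbps:     combined throughput of all backup targets
    cpu_limit_mbps: assumed compression rate of the VM (measure it yourself)
    Simplification: the smaller write volume after compression is ignored.
    """
    bottleneck = min(read_mbps, write_mbps, cpu_limit_mbps)
    return db_size_gb * 1024 / bottleneck / 3600


# 1,024 GB database on 4 x P30 (800 MB/s read)
print(round(backup_hours(1024, 800, 200), 2))  # one P30 target: 1.46
print(round(backup_hours(1024, 800, 800), 2))  # four striped P30 targets: 0.36
```

The example uses the sapdata layout of the Large configuration (4 x P30). It shows that a single backup target can become the bottleneck even when the database disks are fast.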

To increase the number of targets to write to, you can use or combine two options, depending on your needs:

- Striping the backup target volume over multiple disks to improve the IOPS throughput on that striped volume

- Using more than one target directory to write the backup to
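For the second option, the Db2 BACKUP DATABASE command accepts a comma-separated list of target directories after TO. The following Python sketch assembles such a command string. The helper name and the directory paths are hypothetical. You'd run the result with the Db2 command line processor, for example `db2 "<command>"`.

```python
def db2_backup_command(db_name: str, target_dirs: list, online: bool = True,
                       compress: bool = True, parallelism: int = 0) -> str:
    """Assemble a Db2 BACKUP DATABASE command that writes to several targets."""
    if not target_dirs:
        raise ValueError("at least one target directory is required")
    parts = [f"BACKUP DATABASE {db_name}"]
    if online:
        parts.append("ONLINE")
    parts.append("TO " + ", ".join(target_dirs))  # backup is spread over all targets
    if parallelism:
        parts.append(f"PARALLELISM {parallelism}")
    if compress:
        parts.append("COMPRESS")
    if online:
        parts.append("INCLUDE LOGS")
    return " ".join(parts)


cmd = db2_backup_command("<SID>", ["/db2/<SID>/backup1", "/db2/<SID>/backup2"])
print(f'db2 "{cmd}"')
# db2 "BACKUP DATABASE <SID> ONLINE TO /db2/<SID>/backup1, /db2/<SID>/backup2 COMPRESS INCLUDE LOGS"
```

On VMs with eight or fewer CPU threads, consider testing with `compress=False`, given the CPU cost of compression described above.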

Note

Db2 on Windows doesn't support the Windows VSS technology. As a result, the application-consistent VM backup of the Azure Backup service can't be used for VMs in which the Db2 DBMS is deployed.

High Availability and Disaster Recovery

Linux Pacemaker

Important

For Db2 versions 11.5.6 and higher, we highly recommend the integrated solution using Pacemaker from IBM.

- Integrated solution using Pacemaker

- Alternate or additional configurations available on Microsoft Azure: Db2 high availability disaster recovery (HADR) with Pacemaker is supported on both SLES and RHEL. This configuration enables high availability of IBM Db2 for SAP. Deployment guides:

  - SLES: High availability of IBM Db2 LUW on Azure VMs on SUSE Linux Enterprise Server with Pacemaker

  - RHEL: High availability of IBM Db2 LUW on Azure VMs on Red Hat Enterprise Linux Server

Windows Cluster Server

Microsoft Cluster Server (MSCS) isn't supported.

Db2 high availability disaster recovery (HADR) is supported. If the virtual machines of the HA configuration have working name resolution, the setup in Azure doesn't differ from a setup done on-premises. We don't recommend relying on IP resolution only.
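Name resolution is preferred over plain IP addresses, so the HADR host parameters can reference host names. This Python sketch builds an `UPDATE DB CFG` command for the standard Db2 HADR parameters: `HADR_LOCAL_HOST`, `HADR_LOCAL_SVC`, `HADR_REMOTE_HOST`, `HADR_REMOTE_SVC`, `HADR_REMOTE_INST`, and `HADR_SYNCMODE`. The host names, ports, instance name, and `NEARSYNC` default are assumptions. Rejecting IP literals outright is a stricter choice than the recommendation above.

```python
import ipaddress


def is_ip_literal(host: str) -> bool:
    try:
        ipaddress.ip_address(host)
    except ValueError:
        return False
    return True


def hadr_cfg_command(db_name: str, local_host: str, local_svc: int,
                     remote_host: str, remote_svc: int, remote_inst: str,
                     syncmode: str = "NEARSYNC") -> str:
    """Build the HADR part of a database configuration update (sketch)."""
    for host in (local_host, remote_host):
        if is_ip_literal(host):
            raise ValueError(f"{host} is an IP address; use a resolvable host name")
    return (f"UPDATE DB CFG FOR {db_name} USING "
            f"HADR_LOCAL_HOST {local_host} HADR_LOCAL_SVC {local_svc} "
            f"HADR_REMOTE_HOST {remote_host} HADR_REMOTE_SVC {remote_svc} "
            f"HADR_REMOTE_INST {remote_inst} HADR_SYNCMODE {syncmode}")


# Hypothetical hosts, ports, and instance name
cmd = hadr_cfg_command("<SID>", "db2-node1", 51012, "db2-node2", 51012, "db2<sid>")
print(f'db2 "{cmd}"')
```

After both nodes are configured, HADR is typically started with `START HADR ON DATABASE <SID> AS STANDBY` on the standby first and then `START HADR ON DATABASE <SID> AS PRIMARY` on the primary.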

Don't use Geo-Replication for the storage accounts that store the database disks. For more information, see the document Considerations for Azure Virtual Machines DBMS deployment for SAP workload.

Accelerated Networking

For Db2 deployments on Windows, we highly recommend using the Azure functionality of Accelerated Networking as described in the document Azure Accelerated Networking. Also consider recommendations made in Considerations for Azure Virtual Machines DBMS deployment for SAP workload.

Specifics for Linux deployments

As long as the current IOPS quota per disk is sufficient, it's possible to store all the database files on one single disk. However, you should always separate the data files and the transaction log files onto different disks.

If the IOPS or I/O throughput of a single Azure VHD isn't sufficient, you can use LVM (Logical Volume Manager) or mdadm to create one large logical device over multiple disks, as described in the document Considerations for Azure Virtual Machines DBMS deployment for SAP workload.
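A striped LVM volume for sapdata could be created as follows. This Python sketch only generates the commands (pvcreate, vgcreate, lvcreate, mkfs.xfs) so that you can review them before running anything. The device, volume group, and logical volume names are hypothetical placeholders. The stripe sizes come from the tables above: 256 KB for sapdata, 128 KB for saptmp, and 64 KB for log_dir. xfs requires Db2 11.1 or later.

```python
# Stripe sizes per Db2 directory, as listed in the baseline tables
STRIPE_SIZE_KB = {"sapdata": 256, "saptmp": 128, "log_dir": 64}


def lvm_striped_volume(devices: list, vg: str, lv: str, role: str) -> list:
    """Return the LVM/mkfs commands for one striped volume (not executed)."""
    if len(devices) < 2:
        raise ValueError("striping needs at least two disks")
    disks = " ".join(devices)
    return [
        f"pvcreate {disks}",
        f"vgcreate {vg} {disks}",
        # one stripe per disk, stripe size from the table, use all free space
        f"lvcreate --stripes {len(devices)} --stripesize {STRIPE_SIZE_KB[role]}k "
        f"--extents 100%FREE --name {lv} {vg}",
        f"mkfs.xfs /dev/{vg}/{lv}",
    ]


# Hypothetical device names for the four sapdata disks
for command in lvm_striped_volume(["/dev/sdc", "/dev/sdd", "/dev/sde", "/dev/sdf"],
                                  "vg_sapdata", "lv_sapdata", "sapdata"):
    print(command)
```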

For the disks containing the Db2 storage paths for your sapdata and saptmp directories, you must specify a physical disk sector size of 512 bytes.

Other

All other general areas, like Azure Availability Sets or SAP monitoring, apply to deployments of VMs with the IBM database as well. These general areas are described in Considerations for Azure Virtual Machines DBMS deployment for SAP workload.

Next steps

Read the article:
