Anomaly detection

In this hands-on exercise you will be modifying the logic used to generate an IoT Alert to be more dynamic by using the Anomaly Detection preview feature of Azure Stream Analytics. Currently, the IoT Alert is generated when the temperature from the device reaches the hard-coded limit. Using the anomaly detection capabilities, you will be modifying the stream job queries to detect both an upward and downward trend in the temperature. This type of detection would be ideal where you want early notification of a change from an expected state but not have a hard-coded value that indicates when it becomes a problem.

Exercise 1: Building new query

In this exercise, you will be building a new query to replace the existing Azure Stream Analytics job query. You will be building this new query incrementally, so you can see what the parts do. This lab is designed to work with your physical device, if you don't have a physical device you can alter the query to use fields from the simulated device.

Task 1: Add anomaly data collection to Azure

To save the detail scores generated from our anomaly detection we need to create another collection in the Azure Cosmos DB we used in the prior hands on unit. We are doing this to make it easy to see the values generated from the anomaly detection operator.

Go to your Azure portal, select Resource Groups and open the Resource Group you created when you deployed Connected Field Service.

Open the Azure Cosmo DB you created.

Select Data Explorer, click on the ... button of the Telemetry database and select New Container.

Enter AnomalyData for Container ID, enter DeviceID for Partition Key and click OK.

Task 2: Add new output

In this task, you will add a new Output for the AnomalyData collection.

Close the Azure Cosmos DB blade.

Locate and open the Stream Analytics Job.

Click Stop. The Stream Analytics Job must be stopped before you can change its query and input/outputs.

Click Yes.

After the Job stops, select Output, click + Add, and select Cosmo DB.

Enter AnomalyDB for Output Alias, select your Subscription, select the Cosmo DB you created, select the Database you created, enter AnomalyData for Container Name, and click Save.

Each exercise consists of a scenario and learning objectives, the scenario describes the purpose of the exercises, while the objectives are listed and have bullet points.

Task 3: Prepare and build the new query

Select Query.

Copy the existing query and save it in case you want to reference it later.

Clear the existing query, we are going to build a new one step by step.

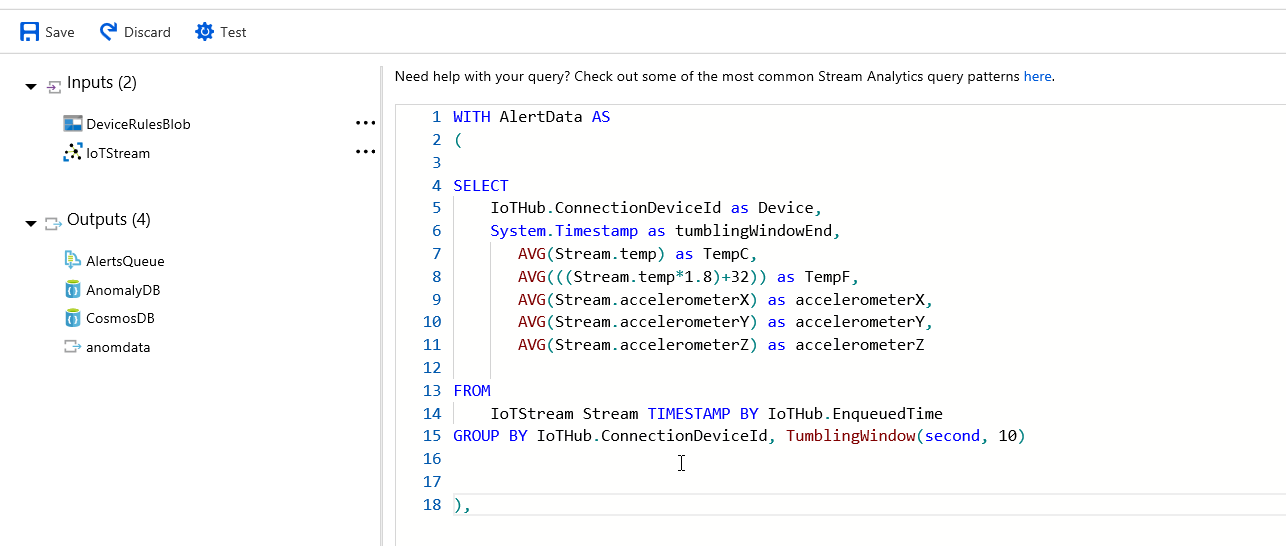

Paste the below snippet in the Query editor.

WITH AlertData AS ( SELECT IoTHub.ConnectionDeviceId as Device, System.Timestamp as tumblingWindowEnd, AVG(Stream.temp) as TempC, AVG(((Stream.temp*1.8)+32)) as TempF, AVG(Stream.accelerometerX) as accelerometerX, AVG(Stream.accelerometerY) as accelerometerY, AVG(Stream.accelerometerZ) as accelerometerZ FROM IoTStream Stream TIMESTAMP BY IoTHub.EnqueuedTime GROUP BY IoTHub.ConnectionDeviceId, TumblingWindow(second, 10) ),

Note

This will process the raw data off the device and group into 10 second tumbling windows that will be evaluated for anomalies. We choose to use averages for the data in the window, you could use whatever aggregation that made sense for your scenario.

Paste the following just after the prior query, this will use the data from the last query and further augment it.

FillInMissingValuesStep AS ( SELECT System.Timestamp AS hoppingWindowEnd, TopOne() OVER (ORDER BY tumblingWindowEnd DESC) AS lastEvent FROM AlertData GROUP BY HOPPINGWINDOW(second, 300, 5) ),Note

To help ensure uniformity of data so we don't have any gaps, we've chosen to fill the gaps by taking the last event in every hop window.

Paste the following step to do the actual anomaly scoring.

AnomalyDetectionStep AS ( SELECT lastevent.Device as lastEventDevice, hoppingWindowEnd, lastEvent.tumblingWindowEnd as lastTumblingWindowEnd, lastEvent.TempC as lastEventTempC, lastEvent.TempF as lastEventTempF, lastEvent.accelerometerY as lastEventaccelerometerY, lastEvent.accelerometerX as lastEventaccelerometerX, lastEvent.accelerometerZ as lastEventaccelerometerZ, system.timestamp as anomalyDetectionStepTimestamp, ANOMALYDETECTION(lastEvent.TempC) OVER (PARTITION BY lastevent.Device LIMIT DURATION(mi, 2)) as scores FROM FillInMissingValuesStep ),Note

This query uses the ANOMALYDETECTION operator on the TempC value. It is done on each device and is measured over a two-minute duration. This causes training of the anomaly detection to be device specific and could accommodate for differences in baseline temperatures at each device location. The output from this results in scores being added to the output for evaluation in the next query.

Paste the following on to the query you are building.

AnomalyDetectionFilter AS ( SELECT lastEventDevice as DeviceId, CAST(GetRecordPropertyValue(scores, 'BiLevelChangeScore') as float) as BiLevelChangeScore, CAST(GetRecordPropertyValue(scores, 'SlowPosTrendScore') as float) as SlowPosTrendScore, CAST(GetRecordPropertyValue(scores, 'SlowNegTrendScore') as float) as SlowNegTrendScore, lastEventTempC as Reading, 'Tempature' as ReadingType, 'Trend Up ' as Threshold, 'EventToken' as EventToken, lastTumblingWindowEnd as time FROM AnomalyDetectionStep WHERE CAST(GetRecordPropertyValue(scores, 'SlowPosTrendScore') as float) >= 10 union SELECT lastEventDevice as DeviceId, CAST(GetRecordPropertyValue(scores, 'BiLevelChangeScore') as float) as BiLevelChangeScore, CAST(GetRecordPropertyValue(scores, 'SlowPosTrendScore') as float) as SlowPosTrendScore, CAST(GetRecordPropertyValue(scores, 'SlowNegTrendScore') as float) as SlowNegTrendScore, lastEventTempC as Reading, 'Tempature' as ReadingType, 'Trend Down ' as Threshold, 'EventToken' as EventToken, lastTumblingWindowEnd as time FROM AnomalyDetectionStep WHERE CAST(GetRecordPropertyValue(scores, 'SlowNegTrendScore') as float) >= 10 )Note

This does the evaluation of both the SlowPosTrendScore and SlowNegTrendScore. The value you are checking is the sensitivity. It could start being meaningful at 3.25, but we choose to wait till it was more significant at 10. In the real world you would tune that to your particular scenario. Note also we have fields selected for Reading, ReadingType, Threshold and EventToken. This is data that is expected by the Logic App for any message we output to the AlertsQueue which you will be doing in the next query. If you don't maintain these fields you need to modify that Logic App to not expect them.

Paste the snippet after the last snippet.

SELECT * INTO AlertsQueue FROM AnomalyDetectionFilter data WHERE LAG(data.DeviceID) OVER (PARTITION BY data.DeviceId, CAST(data.Reading as bigint), data.ReadingType LIMIT DURATION(minute, 1)) IS NULLNote

This does the actual insert into AlertsQueue which will be picked up by the Logic App to create the IoT Alert Record. Note we are keeping the LAG operator in the where to limit how often we put messages into the queue to only when the same device for a rounded temperature has new data within the minute.

To insure we log the same detail data from the device add back the following query.

SELECT IoTHub.ConnectionDeviceId as DeviceID, humidity, temp, pressure, magnetometerX, magnetometerY, magnetometerZ, accelerometerX, accelerometerY, accelerometerZ, gyroscopeX, gyroscopeY, gyroscopeZ Into CosmosDB FROM IoTStream TIMESTAMP BY IoTHub.EnqueuedTimeAdd the following. This will save off the scores from the anomaly detection operator, so you can easily see the data via the Azure Cosmos DB Data Explorer.

SELECT lasteventdevice as DeviceID, hoppingwindowend, lasteventtempc, lasteventtempf, lasteventaccelerometery, lasteventaccelerometerx, lasteventaccelerometerz, anomalydetectionsteptimestamp, scores Into AnomalyDB FROM AnomalyDetectionStepSave the Query.

Click Yes.

Select the Overview tab and click Start.

Select Now and click Start again.

Make sure the job stats successfully.

Exercise 2: Testing the query

In this exercise, you will attempt to cause the temperature on your physical device to be changed to test the anomaly detection.

Each exercise consists of a scenario and learning objectives, the scenario describes the purpose of the exercises, while the objectives are listed and have bullet points.

Task 1: Test the query

Now is the challenging part, you need something to slowly change the temperature the device reads. Ice packs work well, as do cans of compressed air. A hair dryer or a hand warmer might work as well. Just make sure to protect your device so you don't get it wet or otherwise damage it.

Use one of the tools mentioned to change the temperature of your device.

Close the Stream Analytics blade.

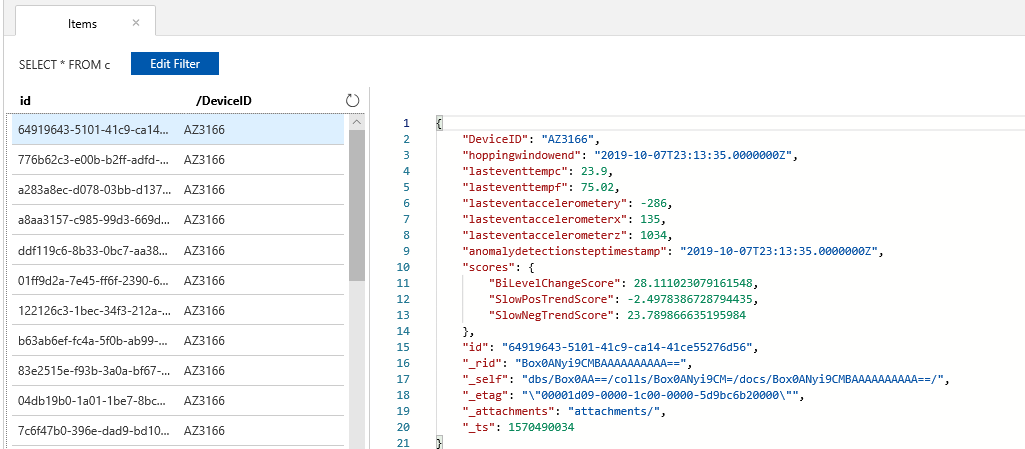

Open the Azure Cosmos DB.

Select Data Explorer, expand the AnomalyData, and select Items.

You should get list of alerts. Click on one of the alerts.

You should see sensor information from your device.

Navigate to Power Apps and make sure you are in the correct environment.

Select Apps and click to open the Connected Field Service application.

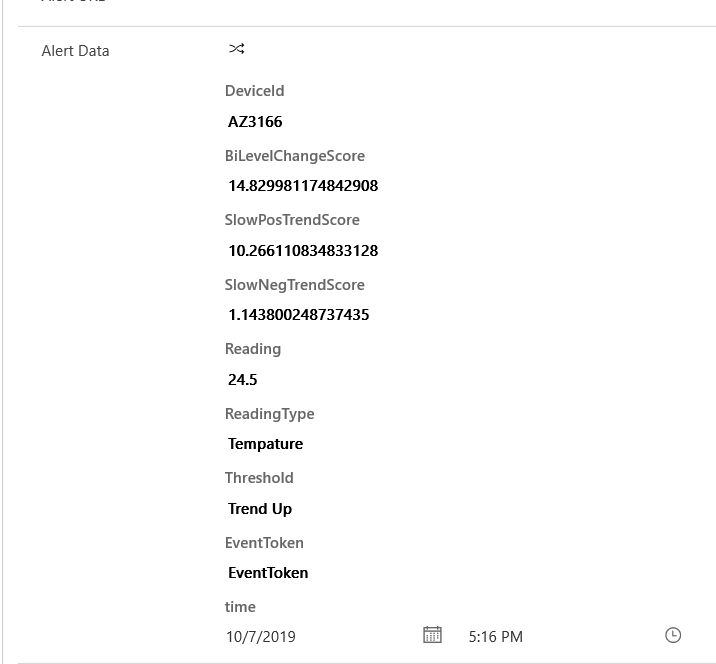

Select IoT Alerts.

Open one of the alerts.

Go to the Alert Data section and you should see sensor information from your device.