Changes to automatic update statistics in SQL Server – traceflag 2371

When SQL Server introduced automatic update statistics with SQL Server 7.0 in 1998, the basic design was to keep track of the number of changes to a table. When the number of changes on a table exceeded a certain percentage threshold an automatic update of the statistics would be executed.

Over the next releases up to SQL Server 2005, several changes were made to the principal data collected in the statistics and the asynchronous update of statistics was introduced. In addition, optimizations were included where SQL Server tracks the changes on a per column level in order to update selective statistics of a table only. For more details, the most comprehensive overview on the subject of Statistics as used by the Query Optimizer can be found here: https://msdn.microsoft.com/en-us/library/dd535534(SQL.100).aspx

In the releases of SQL Server 2008 and 2008 R2, no major changes were initially performed. Some of the fixed thresholds we established in the time of developing SQL Server 7.0 like the threshold of modifications applied which would trigger an automatic update statistics never got changed. The value chosen at that time was 20% and remained like that in all SQL Server releases on the market. This means for today’s SQL Server releases, if a column of a table would have been exposed to modifications which exceeded 20% of the # of rows in the table, an automatic update statistics would be triggered. The update is executed against the index B-Trees including that column as leading column in the index or to the column statistics of such a column. Indexes not containing the column as leading column are not experiencing an update of the statistics. Another restriction decided as well in the time frame of SQL Server 7.0 development was meant to avoid small tables frequently getting updated in statistics by requiring at least 500 rows to be in the static table (6 rows in temp tables).

How did the automatic update statistics work with SAP applications

SAP immediately embraced automatic update statistics and tens of thousands of customers used it for many years with SAP Netweaver based applications very successfully. This is in marked contrast to other RDBMS where regular jobs must be scheduled to update statistics on tables. Therefore the basic recommendation by SAP is to fully leverage this SQL Server functionality. Smaller issues around requiring at least 500 rows in a table only appeared with SAP BW. Processes to supplement additional update statistics in SAP BW were implemented and documented with SAP OSS Note #849062 – ‘Optimizer statistics for InfoCubes in BW’. However with SAP customer systems increasing in size, 64 bit support and a general trend to utilize Intel based systems for larger and larger line of business applications, the number of customers operating SAP ERP or BW systems with some tables containing several billion rows increased. We here and there encountered situations where the automatic update statistics fixed thresholds of 20% required the number of changed rows in a table to be many hundreds of millions before triggering another automatic update statistics. As a result some customers reported update statistics taking place extremely rarely and with that outdated statistics sometimes resulting in query plans which were not optimal.

One way out

In SQL Server 2008 R2 SP1 and in the next release of SQL Server, we released a trace flag which will change the fixed rate of the 20% threshold for update statistics into a dynamic percentage rate. The higher the number of rows in a table, the lower the threshold will become to trigger an update of the statistics. For example, if the trace flag is activated it means an update statistics will be triggered on a table with 1 billion rows when 1 million changes occur. If the trace flag is not activated, then the same table with 1 billion records would need 200 million changes before an update statistics is triggered. In order to activate this new logic, you need to enable traceflag 2371. As a result the threshold to trigger update statistics will be calculated based on the number of rows in the table. However, the table still needs to have a minimum of 500 rows as the minimum row threshold did not change. As before the update of the statistics will apply the default sample rate which is dynamic and based on the # of rows.

More details of functionality under trace flag 2371

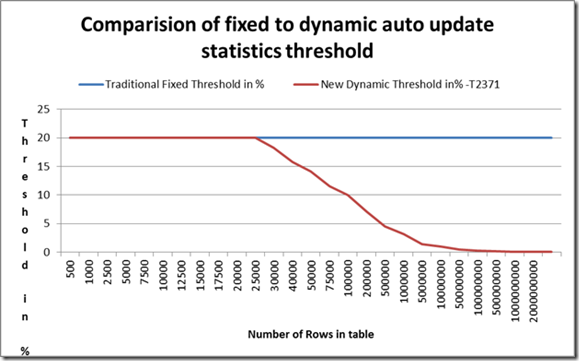

In the graph below, you can see how the new formula works. With smaller tables, the threshold will still be around a 20% percentage. It only is when you exceed 25,000 rows per table where the dynamic rule will kick in and where, with an increasing # of rows, the percentage of records changed is becoming lower and lower. E.g. in a table with 100,000 rows, the threshold to trigger update statistics will already be down to 10%. In a table with 1,000,000 rows it only will take changes of around 3.2% to trigger automatic update statistics doing its job.

In a table with 10,000,000 or 50,000,000 rows, less than 1% or 0.5% of changes are needed to trigger a statistics update. Or a table with 100,000,000 rows requires only around 0.31% changes to trigger update statistics. Means the most important tables in the SAP schemas experiencing most of the changes will experience statistics update rates which are 20 to 60 times more frequent and with that should catch changes like a new fiscal month, a new fiscal years within a few days instead like before missing out on those changes for a few weeks or a few months.

Consequences of enabling the traceflag

As we showed above update statistics is getting executed way more often against the tables which experience frequent changes. The downside is that updating the statistics results in recompilation of the queries accessing the table. This again can increase the risk of getting a different query plan for the next executions of queries against those tables. On the other side, the dynamic threshold to trigger update statistics should address issues encountered like not finding a new month or new fiscal year for month end reporting in the statistics and hence choosing a sub-optimal plan.

Since we just released this new functionality, we have no recommendation yet on how or whether to use this new functionality for SAP applications in general. Microsoft itself is testing this new traceflag very successfully for a few months already and will deploy it with the new SQL Denali version into their productive SAP ERP system in mid of November 2011. However we would encourage SAP customers who encounter issues with queries using outdated statistics or customers who use the procedures described in OSS note #1558087 to give this new functionality a try. The functionality as described got implemented into Service Pack 1 of SQL Server 2008 R2 and will also be available in the next release of SQL Server (currently known as Denali) under the same traceflag. There are no plans to down-port this functionality in earlier releases of SQL Server like SQL Server 2008 or 2005.