This article provides an overview of the Azure database solutions described in the Azure Architecture Center.

Apache®, Apache Cassandra®, and the Hadoop logo are either registered trademarks or trademarks of the Apache Software Foundation in the United States and/or other countries. No endorsement by the Apache Software Foundation is implied by the use of these marks.

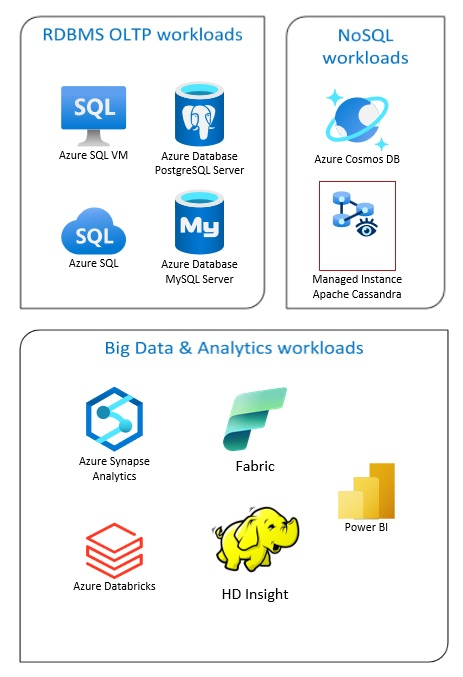

Azure database solutions include traditional relational database management systems (RDBMS and OLTP), big data and analytics workloads (including OLAP), and NoSQL workloads.

RDBMS workloads include online transaction processing (OLTP) and online analytical processing (OLAP). Data from multiple sources in the organization can be consolidated into a data warehouse. You can use an extract, transform, load (ETL) process or an extract, load, transform (ELT) process to move and transform the source data. For more information about RDBMS databases, see Explore relational databases in Azure.
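ETL and ELT differ mainly in where the transformation runs. The following minimal sketch contrasts the two orderings. It uses Python's built-in `sqlite3` in-memory database as a stand-in for a data warehouse. The source rows, table names, and cleanup rules are hypothetical. A production pipeline would normally use a managed data pipeline orchestration service rather than hand-written code like this.

```python
import sqlite3

# Hypothetical raw records from a source system (illustrative data only).
SOURCE_ROWS = [
    {"order_id": 1, "amount": "19.90", "region": " north "},
    {"order_id": 2, "amount": "5.00", "region": "SOUTH"},
]


def transform(row):
    """Normalize one record: amount as a number, region trimmed and lowercased."""
    return (row["order_id"], float(row["amount"]), row["region"].strip().lower())


def run_etl(conn):
    """ETL: transform in the pipeline first, then load only clean rows."""
    conn.execute("CREATE TABLE sales (order_id INTEGER, amount REAL, region TEXT)")
    clean_rows = [transform(r) for r in SOURCE_ROWS]
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", clean_rows)


def run_elt(conn):
    """ELT: load raw rows as-is, then transform inside the target store with SQL."""
    conn.execute("CREATE TABLE raw_sales (order_id INTEGER, amount TEXT, region TEXT)")
    conn.executemany(
        "INSERT INTO raw_sales VALUES (:order_id, :amount, :region)", SOURCE_ROWS
    )
    # The transformation runs in the warehouse; raw_sales is kept for reprocessing.
    conn.execute(
        """CREATE TABLE sales AS
           SELECT order_id,
                  CAST(amount AS REAL) AS amount,
                  LOWER(TRIM(region)) AS region
           FROM raw_sales"""
    )


def totals_by_region(conn):
    """A simple analytical query over the loaded sales table."""
    return conn.execute(
        "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
    ).fetchall()


if __name__ == "__main__":
    for pipeline in (run_etl, run_elt):
        conn = sqlite3.connect(":memory:")
        pipeline(conn)
        print(pipeline.__name__, totals_by_region(conn))
```

Both pipelines produce the same `sales` table. The design choice is whether the cleanup logic lives in the pipeline (ETL) or runs as SQL inside the target store after the raw data lands (ELT). ELT also keeps the raw copy available for later reprocessing.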

A big data architecture is designed to handle the ingestion, processing, and analysis of large or complex data. Big data solutions typically involve large amounts of relational and nonrelational data, which traditional RDBMS systems aren't well suited to store. These solutions typically use data lakes, delta lakes, and lakehouses. For more information, see Analytics architecture design.

NoSQL databases are also called nonrelational or non-SQL databases. These names highlight the fact that they can handle huge volumes of rapidly changing, unstructured data. Unlike relational (SQL) databases, they don't store data in tables, rows, and columns. For more information about NoSQL databases, see NoSQL data and What are NoSQL databases?
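To make the contrast concrete, here is a minimal sketch that stores the same hypothetical order in two ways:

- As rows in relational tables, using Python's built-in `sqlite3` as a stand-in for a relational database.
- As a single JSON document, which is the shape that document-oriented NoSQL stores such as Azure Cosmos DB for NoSQL work with.

The order data and field names, including the `giftWrap` flag, are assumptions for illustration. The sketch doesn't call any Azure API.

```python
import json
import sqlite3

# Hypothetical order with nested line items (illustrative data only).
order = {
    "id": "order-1",
    "customer": "Contoso",
    "items": [
        {"sku": "A-100", "qty": 2},
        {"sku": "B-200", "qty": 1},
    ],
}

# Relational model: the order is split across two fixed-schema tables,
# and reassembling it requires a join.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id TEXT PRIMARY KEY, customer TEXT)")
conn.execute("CREATE TABLE order_items (order_id TEXT, sku TEXT, qty INTEGER)")
conn.execute("INSERT INTO orders VALUES (?, ?)", (order["id"], order["customer"]))
conn.executemany(
    "INSERT INTO order_items VALUES (?, ?, ?)",
    [(order["id"], item["sku"], item["qty"]) for item in order["items"]],
)
rows = conn.execute(
    """SELECT o.id, o.customer, i.sku, i.qty
       FROM orders o JOIN order_items i ON i.order_id = o.id
       ORDER BY i.sku"""
).fetchall()

# Document model: the whole order is one self-contained JSON document.
# A new field (the hypothetical "giftWrap" flag) is added to this one
# document without changing any table schema.
document = dict(order, giftWrap=True)
serialized = json.dumps(document)

print(rows)        # one row per line item, produced by the join
print(serialized)  # the whole order as a single nested document
```

The join rebuilds the order from fixed-schema rows. The document keeps related data together and accepts a new field without a schema change. Which shape fits better depends on the access patterns of your workload.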

This article provides resources to help you learn more about Azure databases. It outlines paths for implementing architectures that meet your needs, and best practices to keep in mind as you design your solutions.

You can design many different architectures to meet your database needs. We also provide solution ideas for you to build on, and they include links to all the components you need.

Learn about databases on Azure

As you start thinking about possible architectures for your solution, it's vital to choose the correct data store. If you're new to databases on Azure, the best place to start is Microsoft Learn. This free online platform provides videos and tutorials for hands-on learning. Microsoft Learn offers learning paths based on your job role, such as developer or data analyst.

You can start with a general description of the different databases in Azure and how they're used. You can also browse the Azure data modules and Choose a data storage approach in Azure. These articles help you understand your options among Azure data solutions and why some solutions are recommended in specific scenarios.

Here are some Learn modules that you might find useful:

- Design your migration to Azure

- Deploy Azure SQL Database

- Explore Azure database and analytics services

- Secure your Azure SQL Database

- Azure Cosmos DB

- Azure Database for PostgreSQL

- Azure Database for MySQL

- SQL Server on Azure VMs

Path to production

To find useful options for working with relational data, consider these resources:

- To learn about resources for gathering data from multiple sources and how to apply data transformations within data pipelines, see Analytics in Azure.

- To learn about OLAP, which organizes large business databases and supports complex analysis, see Online analytical processing.

- To learn about OLTP systems, which record business interactions as they occur, see Online transaction processing.

A nonrelational database doesn't use the tabular schema of rows and columns. For more information, see Nonrelational data and NoSQL.

To learn about data lakes, which hold large amounts of data in its native, raw format, see Data lakes.

A big data architecture can handle the ingestion, processing, and analysis of data that's too large or complex for traditional database systems. For more information, see Big data architectures and analytics.

A hybrid cloud is an IT environment that combines public cloud and on-premises datacenters. For more information, see Extend on-premises data solutions to the cloud, or consider Azure Arc combined with Azure databases.

Azure Cosmos DB is a fully managed NoSQL database for modern app development. For more information, see Azure Cosmos DB resource model.

To learn about your options for transferring data to and from Azure, see Transfer data to and from Azure.

Best practices

Review these best practices when you design your solutions.

| Best practice | Description |
|---|---|
| Data management patterns | Data management is the key element of cloud applications. It influences most quality attributes. |
| Transactional Outbox pattern with Azure Cosmos DB | Learn how to use the Transactional Outbox pattern for reliable messaging and guaranteed delivery of events. |
| Distribute data globally with Azure Cosmos DB | To achieve low latency and high availability, some applications need to be deployed in datacenters that are close to their users. |
| Security in Azure Cosmos DB | Security best practices help prevent, detect, and respond to database breaches. |
| Continuous backup with point-in-time restore in Azure Cosmos DB | Learn about the point-in-time restore feature of Azure Cosmos DB. |
| Achieve high availability with Azure Cosmos DB | Azure Cosmos DB provides multiple features and configuration options for achieving high availability. |
| High availability for Azure SQL Database and SQL Managed Instance | The database shouldn't be a single point of failure in your software architecture. |
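The Transactional Outbox pattern listed in the table ensures that a state change and the event that announces it succeed or fail together. The following is a minimal, store-agnostic sketch of the idea in Python. It uses the built-in `sqlite3` module as a stand-in for the transactional store. The `orders` and `outbox` tables, the event shape, and the `place_order` and `relay_outbox` helpers are all hypothetical.

In Azure Cosmos DB, the atomic write would instead target items that share a logical partition key, for example through a transactional batch. A relay such as a change feed processor would then publish the events. See the linked article for the Azure Cosmos DB specifics.

```python
import json
import sqlite3

# sqlite3 stands in for the transactional data store (assumption for illustration).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id TEXT PRIMARY KEY, status TEXT)")
conn.execute(
    "CREATE TABLE outbox (seq INTEGER PRIMARY KEY AUTOINCREMENT,"
    " event TEXT, published INTEGER DEFAULT 0)"
)


def place_order(order_id):
    """Hypothetical helper: write the business change and its event in ONE transaction.

    Either both rows are committed or neither is, so an event is never lost
    and never emitted for an order that wasn't saved."""
    with conn:  # commits on success, rolls back if an exception is raised
        conn.execute("INSERT INTO orders VALUES (?, 'placed')", (order_id,))
        event = json.dumps({"type": "OrderPlaced", "orderId": order_id})
        conn.execute("INSERT INTO outbox (event) VALUES (?)", (event,))


def relay_outbox(publish):
    """Hypothetical relay: send pending events in order, then mark each one published.

    If publish() raises, the row stays unpublished and is retried on the next run.
    This gives at-least-once delivery."""
    pending = conn.execute(
        "SELECT seq, event FROM outbox WHERE published = 0 ORDER BY seq"
    ).fetchall()
    for seq, event in pending:
        publish(json.loads(event))
        with conn:
            conn.execute("UPDATE outbox SET published = 1 WHERE seq = ?", (seq,))


if __name__ == "__main__":
    sent = []
    place_order("order-1")
    place_order("order-2")
    relay_outbox(sent.append)  # list.append stands in for a message broker client
    print(sent)
```

An event is marked published only after `publish` returns. A crash between those two steps therefore causes a resend on the next run rather than a lost event, so downstream consumers should handle duplicates.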

Technology choices

You can use many different technologies with Azure databases. These articles help you choose the best technologies for your needs.

- Choose a data store

- Choose an analytical data store in Azure

- Choose a data analytics technology in Azure

- Choose a batch processing technology in Azure

- Choose a big data storage technology in Azure

- Choose a data pipeline orchestration technology in Azure

- Choose a search data store in Azure

- Choose a stream processing technology in Azure

Stay current with databases

See Azure updates to keep current with Azure database technology.

Related resources

These architectures use database technologies.

Here are some other resources:

- Adatum Corporation scenario for data management and analytics in Azure

- Lamna Healthcare scenario for data management and analytics in Azure

- Optimize administration of SQL Server instances

- Relecloud scenario for data management and analytics in Azure

Example solutions

These solution ideas are some of the example approaches that you can adapt to your needs.

- Data cache

- Enterprise data warehouse

- Messaging

- Serverless apps using Azure Cosmos DB

Similar database products

If you're familiar with Amazon Web Services (AWS) or Google Cloud, see the following comparisons: