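Export telemetry from Application Insights

Want to keep your telemetry longer than the standard retention period, or process it in some specialized way? Continuous export is designed for this. Events shown in the Application Insights portal can be exported in JSON format to storage in Azure. From there, you can download the data and write whatever code you need to process it.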

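Important

- On February 29, 2024, continuous export will be deprecated and will no longer be part of Application Insights.
- When you migrate to a workspace-based Application Insights resource, you must use diagnostic settings to export telemetry. All workspace-based Application Insights resources must use diagnostic settings.
- Diagnostic settings export might increase costs. For more information, see Diagnostic settings-based export.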

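Before you set up continuous export, consider some alternatives:

- The "Export" button at the top of a "Metrics" or "Search" tab lets you send tables and charts to an Excel spreadsheet.
- Log Analytics provides a powerful query language for telemetry. It can also export results.
- If you want to explore your data in Power BI, you can do that without continuous export, provided that you've migrated to a workspace-based resource.
- The Data Access REST API lets you access your telemetry programmatically.
- You can also set up continuous export through PowerShell.

After continuous export copies your data to storage, where it can stay as long as you like, the data is still available in Application Insights for the usual retention period.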

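Supported regions

Continuous export is supported in the following regions:

- Southeast Asia
- Canada Central
- Central India
- North Europe
- UK South
- Australia East
- Japan East
- Korea Central
- France Central
- East Asia
- West US
- Central US
- East US 2
- South Central US
- West US 2
- South Africa North
- North Central US
- Brazil South
- Switzerland North
- Australia Southeast
- UK West
- Germany West Central
- Switzerland West
- Australia Central 2
- UAE Central
- Brazil Southeast
- Australia Central
- UAE North
- Norway East
- Japan West

Note

Continuous export continues to work for applications in East US and West Europe if the export was configured before February 23, 2021. New continuous export rules can't be configured on any application in East US or West Europe, regardless of when the application was created.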

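Continuous export advanced storage configuration

Continuous export doesn't support the following Azure Storage features or configurations:

- Use of Azure Virtual Network or Azure Storage firewalls with Azure Blob Storage.
- Azure Data Lake Storage Gen2.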

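Create a continuous export

Note

An application can't export more than 3 TB of data per day. If more than 3 TB per day is exported, the export is disabled. To export without a limit, use diagnostic settings-based export.

In the Application Insights resource for your app, under "Configure" on the left, open "Continuous export" and select "Add".

Choose the telemetry data types you want to export.

Create or select the Azure storage account where you want to store the data. For more information on storage pricing options, see the pricing page.

Select "Add" > "Export destination" > "Storage account". Then either create a new store or choose an existing one.

Warning

By default, the storage location is set to the same geographical region as your Application Insights resource. If you store in a different region, you might incur transfer charges.

Create or select a container in the storage.

Note

After you've created the export, newly ingested data begins to flow to Azure Blob Storage. Continuous export only transmits new telemetry that's created or ingested after continuous export was enabled. Any data that existed before continuous export was enabled isn't exported. Retroactively exporting previously created data with continuous export isn't supported.

There can be a delay of about an hour before data appears in the storage.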

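After the first export finishes, you'll find the following structure in your Blob Storage container. (This structure varies depending on the data you're collecting.)

| Name | Description |
|---|---|
| Availability | Reports availability web tests. |
| Event | Custom events generated by TrackEvent(). |
| Exceptions | Reports exceptions in the server and in the browser. |
| Messages | Sent by TrackTrace and by the logging adapters. |
| Metrics | Generated by metric API calls. |
| PerformanceCounters | Performance counters collected by Application Insights. |
| Requests | Sent by TrackRequest. The standard modules use requests to report server response time, measured at the server. |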

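Edit continuous export

Select "Continuous export" and select the storage account to edit.

Stop continuous export

To stop the export, select "Disable". When you select "Enable" again, the export restarts with new data. You won't get the data that arrived in the portal while export was disabled.

To stop the export permanently, delete it. Doing so doesn't delete your data from storage.

Can't add or change an export?

To add or change exports, you need Owner, Contributor, or Application Insights Contributor access rights. Learn about roles.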

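What events do you get?

The exported data is the raw telemetry we receive from your application, with added location data derived from the client IP address.

Data that has been discarded by sampling isn't included in the exported data.

Other calculated metrics aren't included. For example, we don't export average CPU utilization, but we do export the raw telemetry from which the average is computed.

The data also includes the results of any availability web tests that you have set up.

Note

If your application sends a lot of data, the sampling feature might operate and send only a fraction of the generated telemetry. Learn more about sampling.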

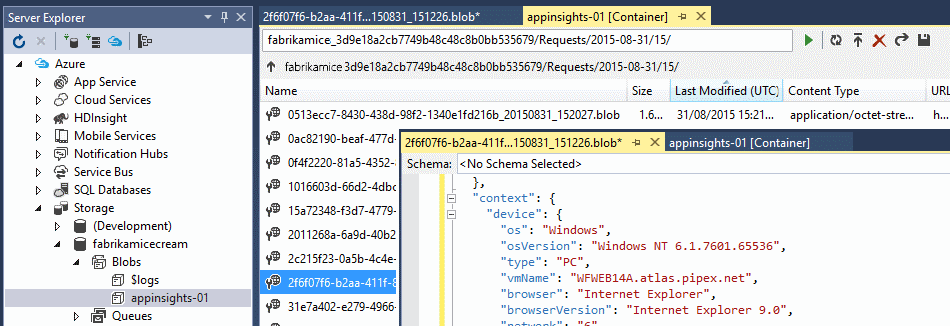

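Inspect the data

You can inspect the storage directly in the portal. Select "Home" on the leftmost menu. At the top, where it says "Azure services", select "Storage accounts". Select the storage account name, and on the "Overview" page select "Services" > "Blobs". Finally, select the container name.

To inspect Azure Storage in Visual Studio, select "View" > "Cloud Explorer". If you don't have that menu command, you need to install the Azure SDK. Open the "New Project" dialog, expand Visual C#/Cloud, and select "Get Microsoft Azure SDK for .NET".

When you open your blob store, you'll see a container with a set of blob files. The URI of each file is derived from your Application Insights resource name, its instrumentation key, and the telemetry type, date, and time. The resource name is all lowercase, and the instrumentation key omits dashes.

The date and time are UTC and indicate when the telemetry was deposited in the store, not when it was generated. For this reason, code that downloads the data can move linearly through the data.

Here's the form of the path:

    $"{applicationName}_{instrumentationKey}/{type}/{blobDeliveryTimeUtc:yyyy-MM-dd}/{blobDeliveryTimeUtc:HH}/{blobId}_{blobCreationTimeUtc:yyyyMMdd_HHmmss}.blob"

Where:

- blobCreationTimeUtc is the time when the blob was created in the internal staging storage.
- blobDeliveryTimeUtc is the time when the blob is copied to the export destination storage.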
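As an illustration of the path format above, the following Python sketch splits a blob path into its parts. The function name, the returned dictionary keys, and the sample path are hypothetical. The regular expression also assumes that an instrumentation key without dashes is 32 hexadecimal characters, which the format string itself doesn't state.

```python
import re
from datetime import datetime, timezone

# Hypothetical pattern that mirrors the documented path format:
# {applicationName}_{instrumentationKey}/{type}/{yyyy-MM-dd}/{HH}/{blobId}_{yyyyMMdd_HHmmss}.blob
# Assumption: the dash-free instrumentation key is 32 hex characters.
BLOB_PATH = re.compile(
    r"^(?P<app>[^/]+)_(?P<ikey>[0-9a-fA-F]{32})/"
    r"(?P<type>[^/]+)/"
    r"(?P<date>\d{4}-\d{2}-\d{2})/(?P<hour>\d{2})/"
    r"(?P<blob_id>[^/]+)_(?P<created>\d{8}_\d{6})\.blob$"
)


def parse_blob_path(path):
    """Split an exported blob path into its documented components."""
    match = BLOB_PATH.match(path)
    if match is None:
        raise ValueError(f"Path does not follow the export format: {path}")
    # Folder date and hour come from blobDeliveryTimeUtc.
    delivered = datetime.strptime(
        f"{match['date']} {match['hour']}", "%Y-%m-%d %H"
    ).replace(tzinfo=timezone.utc)
    # The file name suffix comes from blobCreationTimeUtc.
    created = datetime.strptime(match["created"], "%Y%m%d_%H%M%S").replace(
        tzinfo=timezone.utc
    )
    return {
        "application_name": match["app"],
        "instrumentation_key": match["ikey"],
        "telemetry_type": match["type"],
        "delivered_utc": delivered,
        "created_utc": created,
        "blob_id": match["blob_id"],
    }


# Made-up path, used only to exercise the parser.
sample = (
    "myapp_0123456789abcdef0123456789abcdef/Requests/"
    "2016-03-21/10/abc123_20160321_100545.blob"
)
print(parse_blob_path(sample))
```

Because the folder date and hour reflect delivery time, sorting by `delivered_utc` is one way to walk the blobs in the order they arrived in storage.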

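Data format

The data is formatted so that:

- Each blob is a text file that contains multiple \n-separated rows. It contains the telemetry processed over a time period of roughly half a minute.
- Each row represents a telemetry data point, such as a request or a page view.
- Each row is an unformatted JSON document. To view the rows, open the blob in Visual Studio and select "Edit" > "Advanced" > "Format File".

Time durations are in ticks, where 10,000 ticks = 1 ms. For example, these values show a time of 1 ms to send a request from the browser, 3 ms to receive it, and 1.8 seconds to process the page in the browser:

    "sendRequest": {"value": 10000.0},
    "receiveRequest": {"value": 30000.0},
    "clientProcess": {"value": 17970000.0}

For a detailed data model reference for the property types and values, see Application Insights export data model.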
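The tick arithmetic described above can be sketched in a few lines of Python. The helper name `ticks_to_ms` is hypothetical. The only fact taken from the text is that 10,000 ticks equal 1 ms.

```python
TICKS_PER_MILLISECOND = 10_000  # from the text: 10,000 ticks = 1 ms


def ticks_to_ms(ticks):
    """Convert an exported duration value from ticks to milliseconds."""
    return ticks / TICKS_PER_MILLISECOND


# The three values from the example above.
timings = {
    "sendRequest": {"value": 10000.0},
    "receiveRequest": {"value": 30000.0},
    "clientProcess": {"value": 17970000.0},
}

for name, metric in timings.items():
    print(f"{name}: {ticks_to_ms(metric['value'])} ms")
```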

Process the data

On a small scale, you can write some code to pull apart your data and read it into a spreadsheet. For example:

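    using System.Collections.Generic;
    using System.IO;
    using System.Linq;
    using Newtonsoft.Json;

    // Place this method inside a class. It reads every exported .blob file
    // under folderName and yields one deserialized object per non-empty row
    // (each row is one JSON document).
    private IEnumerable<T> DeserializeMany<T>(string folderName)
    {
        var files = Directory.EnumerateFiles(folderName, "*.blob", SearchOption.AllDirectories);
        foreach (var file in files)
        {
            using (var fileReader = File.OpenText(file))
            {
                string fileContent = fileReader.ReadToEnd();
                // Rows are separated by \n; skip blank rows.
                IEnumerable<string> entities = fileContent.Split('\n').Where(s => !string.IsNullOrWhiteSpace(s));
                foreach (var entity in entities)
                {
                    yield return JsonConvert.DeserializeObject<T>(entity);
                }
            }
        }
    }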

For a larger code sample, see using a worker role.
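For readers who work in Python, the same idea can be sketched as follows. This is a minimal sketch, not an official sample: it assumes the blobs have already been downloaded to a local folder, and the function name `read_exported_items` is hypothetical.

```python
import json
from pathlib import Path


def read_exported_items(folder):
    """Yield one parsed JSON document per non-empty row of every .blob file.

    Assumes the exported blobs were already downloaded under `folder`.
    """
    for blob_file in sorted(Path(folder).rglob("*.blob")):
        with blob_file.open(encoding="utf-8") as handle:
            for line in handle:
                # Rows are separated by \n; skip blank rows.
                if line.strip():
                    yield json.loads(line)
```

Reading row by row, instead of loading a whole file into memory, keeps memory use flat. Calling `list(read_exported_items("some/local/folder"))` collects every row when the data set is small.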

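Delete your old data

You're responsible for managing your storage capacity and deleting old data if necessary.

Regenerate your storage key

If you change the key to your storage, continuous export stops working. You'll see a notification in your Azure account.

Select the "Continuous export" tab and edit your export. Edit the "Export destination" value, but leave the same storage selected. Select "OK" to confirm.

Continuous export restarts.

Export samples

On larger scales, consider HDInsight Hadoop clusters in the cloud. HDInsight provides various technologies for managing and analyzing big data. You can use it to process data that's been exported from Application Insights.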

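Frequently asked questions

This section provides answers to common questions.

Can I get a one-time download of a chart?

Yes. At the top of the tab, select "Export data".

I set up an export, but why is there no data in my store?

Has Application Insights received any telemetry from your app since you set up the export? Only new data is exported.

Why do I get an access denied error when I try to set up an export?

If the account is owned by your organization, you must be a member of the Owners or Contributors group.

Can I export straight to my own on-premises store?

No. Our export engine currently only works with Azure Storage.

Is there any limit to the amount of data you put in my store?

No. We keep pushing data in until you delete the export. We stop if we hit the outer limits for Blob Storage, but that limit is huge. It's up to you to control how much storage you use.

How many blobs should I see in the storage?

- For every data type you selected to export, a new blob is created every minute, if data is available.
- For applications with high traffic, extra partition units are allocated. In this case, each unit creates a blob every minute.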
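As a rough illustration of the two rules above, this Python sketch computes an upper bound on the number of blobs created per day. The function and parameter names are hypothetical. The real number can be lower, because a blob is only created when data is available.

```python
MINUTES_PER_DAY = 24 * 60


def max_blobs_per_day(data_types, partition_units=1):
    """Upper bound: one blob per minute, per data type, per partition unit."""
    return data_types * partition_units * MINUTES_PER_DAY


# Three exported data types on a single partition unit.
print(max_blobs_per_day(3))
# The same three data types when two partition units are allocated.
print(max_blobs_per_day(3, partition_units=2))
```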

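I regenerated the key to my storage or changed the name of the container, but why doesn't the export work now?

Edit the export and select the "Export destination" tab. Leave the same storage selected as before, and select "OK" to confirm. Export restarts. If the change was made within the past few days, you won't lose data.

Can I pause the export?

Yes. Select "Disable".

Code samples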

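Diagnostic settings-based export

Diagnostic settings export is preferred because it provides extra features:

- Azure Storage accounts with virtual networks, firewalls, and private links.
- Export to Azure Event Hubs.

Diagnostic settings export further differs from continuous export in the following ways:

- Updated schema.
- Telemetry data is sent as it arrives instead of in batched uploads.

Important

Extra costs might be incurred because of an increase in calls to the destination, such as a storage account.

To migrate to diagnostic settings export:

- Enable diagnostic settings on classic Application Insights.
- Configure your data export: from within your Application Insights resource, select "Diagnostic settings" > "Add diagnostic setting".
- Verify that your new data export is configured the same as your continuous export.

Note

If you want to store diagnostic logs in a Log Analytics workspace, there are two points to consider to avoid seeing duplicate data in Application Insights:

- The destination can't be the same Log Analytics workspace that your Application Insights resource is based on.
- The Application Insights user can't have access to both workspaces. Set the Log Analytics access control mode to "Requires workspace permissions". Through Azure role-based access control, ensure the user only has access to the Log Analytics workspace the Application Insights resource is based on.

These steps are necessary because Application Insights accesses telemetry across Application Insights resources, including Log Analytics workspaces, to provide complete end-to-end transaction operations and accurate application maps. Because diagnostic logs use the same table names, duplicate telemetry can be displayed if the user has access to multiple resources that contain the same data.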

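Application Insights export data model

This table lists the properties of telemetry sent from the Application Insights SDKs to the portal. You'll see these properties in data output from continuous export. They also appear in property filters in Metrics Explorer and Diagnostic Search.

Points to note:

- [0] in these tables denotes a point in the path where you have to insert an index, but it isn't always 0.
- Time durations are in tenths of a microsecond, so 10000000 == 1 second.
- Dates and times are UTC, and are given in the ISO format yyyy-MM-DDThh:mm:ss.sssZ.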
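To illustrate the date format noted above, here is a small Python sketch that parses an exported UTC timestamp. The function name `parse_event_time` is hypothetical. Exported values can carry seven fractional digits (for example, 2016-03-21T10:05:45.7334717Z), while Python's `%f` directive accepts at most six, so this sketch truncates the extra digits. That truncation is a choice made here, not part of the data model.

```python
from datetime import datetime, timezone


def parse_event_time(value):
    """Parse an exported UTC timestamp such as 2016-03-21T10:05:45.7334717Z."""
    if not value.endswith("Z"):
        raise ValueError(f"Expected a UTC timestamp ending in Z: {value}")
    body = value[:-1]
    if "." in body:
        seconds, fraction = body.split(".", 1)
        # %f accepts at most six digits, so truncate and pad to microseconds.
        fraction = fraction[:6].ljust(6, "0")
    else:
        seconds, fraction = body, "000000"
    parsed = datetime.strptime(f"{seconds}.{fraction}", "%Y-%m-%dT%H:%M:%S.%f")
    return parsed.replace(tzinfo=timezone.utc)


print(parse_event_time("2016-03-21T10:05:45.7334717Z").isoformat())
```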

Example

    // A server report about an HTTP request
    {
      "request": [
        {
          "urlData": { // derived from 'url'
            "host": "contoso.org",
            "base": "/",
            "hashTag": ""
          },
          "responseCode": 200, // Sent to client
          "success": true, // Default == responseCode<400
          // Request id becomes the operation id of child events
          "id": "fCOhCdCnZ9I=",
          "name": "GET Home/Index",
          "count": 1, // 100% / sampling rate
          "durationMetric": {
            "value": 1046804.0, // 10000000 == 1 second
            // Currently the following fields are redundant:
            "count": 1.0,
            "min": 1046804.0,
            "max": 1046804.0,
            "stdDev": 0.0,
            "sampledValue": 1046804.0
          },
          "url": "/"
        }
      ],
      "internal": {
        "data": {
          "id": "7f156650-ef4c-11e5-8453-3f984b167d05",
          "documentVersion": "1.61"
        }
      },
      "context": {
        "device": { // client browser
          "type": "PC",
          "screenResolution": { },
          "roleInstance": "WFWEB14B.fabrikam.net"
        },
        "application": { },
        "location": { // derived from client ip
          "continent": "North America",
          "country": "United States",
          // last octet is anonymized to 0 at portal:
          "clientip": "168.62.177.0",
          "province": "",
          "city": ""
        },
        "data": {
          "isSynthetic": true, // we identified source as a bot
          // percentage of generated data sent to portal:
          "samplingRate": 100.0,
          "eventTime": "2016-03-21T10:05:45.7334717Z" // UTC
        },
        "user": {
          "isAuthenticated": false,
          "anonId": "us-tx-sn1-azr", // bot agent id
          "anonAcquisitionDate": "0001-01-01T00:00:00Z",
          "authAcquisitionDate": "0001-01-01T00:00:00Z",
          "accountAcquisitionDate": "0001-01-01T00:00:00Z"
        },
        "operation": {
          "id": "fCOhCdCnZ9I=",
          "parentId": "fCOhCdCnZ9I=",
          "name": "GET Home/Index"
        },
        "cloud": { },
        "serverDevice": { },
        "custom": { // set by custom fields of track calls
          "dimensions": [ ],
          "metrics": [ ]
        },
        "session": {
          "id": "65504c10-44a6-489e-b9dc-94184eb00d86",
          "isFirst": true
        }
      }
    }
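The annotated sample above contains // comments, which standard JSON parsers reject. The following Python sketch therefore works on a trimmed, comment-free copy of the same record and shows how the [0] index and the duration units come together. The function name `summarize_request` and the keys of the summary it returns are hypothetical.

```python
import json

TICKS_PER_SECOND = 10_000_000  # 10000000 == 1 second

# Trimmed, comment-free copy of the sample request record.
ROW = """
{"request": [{"name": "GET Home/Index", "responseCode": 200, "success": true,
              "count": 1, "durationMetric": {"value": 1046804.0}, "url": "/"}],
 "context": {"data": {"samplingRate": 100.0,
                      "eventTime": "2016-03-21T10:05:45.7334717Z"},
             "operation": {"id": "fCOhCdCnZ9I="}}}
"""


def summarize_request(record, index=0):
    """Pick a few fields from request[index]; the index isn't always 0."""
    request = record["request"][index]
    return {
        "name": request["name"],
        "response_code": request["responseCode"],
        "success": request["success"],
        "duration_seconds": request["durationMetric"]["value"] / TICKS_PER_SECOND,
        "operation_id": record["context"]["operation"]["id"],
    }


print(summarize_request(json.loads(ROW)))
```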

Context

All types of telemetry are accompanied by a context section. Not all of these fields are transmitted with every data point.

| `Path` | Type | Notes |
|---|---|---|
| context.custom.dimensions [0] | object [ ] | Key-value string pairs set by the custom properties parameter. Key max length is 100, value max length is 1024. If there are more than 100 unique values, the property can be searched but can't be used for segmentation. Maximum of 200 keys per ikey. |
| context.custom.metrics [0] | object [ ] | Key-value pairs set by the custom measurements parameter and by TrackMetrics. Key max length is 100. Values can be numeric. |
| context.data.eventTime | string | UTC |
| context.data.isSynthetic | boolean | Request appears to come from a bot or web test. |
| context.data.samplingRate | number | Percentage of telemetry generated by the SDK that is sent to the portal. Range 0.0-100.0. |
| context.device | object | Client device |
| context.device.browser | string | IE, Chrome, ... |
| context.device.browserVersion | string | Chrome 48.0, ... |
| context.device.deviceModel | string | |
| context.device.deviceName | string | |
| context.device.id | string | |
| context.device.locale | string | en-GB, de-DE, ... |
| context.device.network | string | |
| context.device.oemName | string | |
| context.device.os | string | |
| context.device.osVersion | string | Host OS |
| context.device.roleInstance | string | ID of server host |
| context.device.roleName | string | |
| context.device.screenResolution | string | |
| context.device.type | string | PC, Browser, ... |
| context.location | object | Derived from clientip. |
| context.location.city | string | Derived from clientip, if known |
| context.location.clientip | string | Last octet is anonymized to 0. |
| context.location.continent | string | |
| context.location.country | string | |
| context.location.province | string | State or province |
| context.operation.id | string | Items that have the same operation id are shown as Related Items in the portal. Usually the request id. |
| context.operation.name | string | URL or request name |
| context.operation.parentId | string | Allows nested related items. |
| context.session.id | string | Id of a group of operations from the same source. A period of 30 minutes without an operation signals the end of a session. |
| context.session.isFirst | boolean | |
| context.user.accountAcquisitionDate | string | |
| context.user.accountId | string | |
| context.user.anonAcquisitionDate | string | |
| context.user.anonId | string | |
| context.user.authAcquisitionDate | string | Authenticated user |
| context.user.authId | string | |
| context.user.isAuthenticated | boolean | |
| context.user.storeRegion | string | |
| internal.data.documentVersion | string | |
| internal.data.id | string | Unique id that's assigned when an item is ingested to Application Insights |

Events

Custom events generated by TrackEvent().

| `Path` | Type | Notes |
|---|---|---|
| event [0] count | integer | 100/(sampling rate). For example, 4 => 25%. |
| event [0] name | string | Event name. Max length 250. |
| event [0] url | string | |
| event [0] urlData.base | string | |
| event [0] urlData.host | string | |
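Because count is described as 100/(sampling rate), one exported row with count 4 stands for roughly four events that the app generated. The following Python sketch sums count across rows to estimate the original volume. The function name is hypothetical, and treating a missing count as 1 is an assumption, not documented behavior.

```python
def estimate_original_count(items, telemetry_type="event"):
    """Estimate how many items were generated before sampling.

    Sums the `count` field (100 / sampling rate) over every entry of the
    given telemetry type. Assumption: a missing `count` is treated as 1.
    """
    total = 0
    for item in items:
        for entry in item.get(telemetry_type, []):
            total += entry.get("count", 1)
    return total


# Made-up rows: two events exported at 25% sampling, one at 100%.
rows = [
    {"event": [{"name": "checkout", "count": 4}]},
    {"event": [{"name": "checkout", "count": 4}]},
    {"event": [{"name": "login", "count": 1}]},
]
print(estimate_original_count(rows))
```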

Exceptions

Reports exceptions in the server and in the browser.

| `Path` | Type | Notes |
|---|---|---|
| basicException [0] assembly | string | |
| basicException [0] count | integer | 100/(sampling rate). For example, 4 => 25%. |
| basicException [0] exceptionGroup | string | |
| basicException [0] exceptionType | string | |
| basicException [0] failedUserCodeMethod | string | |
| basicException [0] failedUserCodeAssembly | string | |
| basicException [0] handledAt | string | |
| basicException [0] hasFullStack | boolean | |
| basicException [0] id | string | |
| basicException [0] method | string | |
| basicException [0] message | string | Exception message. Max length 10k. |
| basicException [0] outerExceptionMessage | string | |
| basicException [0] outerExceptionThrownAtAssembly | string | |
| basicException [0] outerExceptionThrownAtMethod | string | |
| basicException [0] outerExceptionType | string | |
| basicException [0] outerId | string | |
| basicException [0] parsedStack [0] assembly | string | |
| basicException [0] parsedStack [0] fileName | string | |
| basicException [0] parsedStack [0] level | integer | |
| basicException [0] parsedStack [0] line | integer | |
| basicException [0] parsedStack [0] method | string | |
| basicException [0] stack | string | Max length 10k |
| basicException [0] typeName | string | |

Trace messages

Sent by TrackTrace and by the logging adapters.

| `Path` | Type | Notes |
|---|---|---|
| message [0] loggerName | string | |
| message [0] parameters | string | |
| message [0] raw | string | The log message, max length 10k. |
| message [0] severityLevel | string | |

Remote dependency

Sent by TrackDependency. Used to report performance and usage of calls to dependencies in the server and AJAX calls in the browser.

| `Path` | Type | Notes |
|---|---|---|
| remoteDependency [0] async | boolean | |
| remoteDependency [0] baseName | string | |
| remoteDependency [0] commandName | string | For example, "home/index" |
| remoteDependency [0] count | integer | 100/(sampling rate). For example, 4 => 25%. |
| remoteDependency [0] dependencyTypeName | string | HTTP, SQL, ... |
| remoteDependency [0] durationMetric.value | number | Time from the call to completion of the response by the dependency |
| remoteDependency [0] id | string | |
| remoteDependency [0] name | string | URL. Max length 250. |
| remoteDependency [0] resultCode | string | From the HTTP dependency |
| remoteDependency [0] success | boolean | |
| remoteDependency [0] type | string | Http, Sql, ... |
| remoteDependency [0] url | string | Max length 2000 |
| remoteDependency [0] urlData.base | string | Max length 2000 |
| remoteDependency [0] urlData.hashTag | string | |
| remoteDependency [0] urlData.host | string | Max length 200 |

Requests

Sent by TrackRequest. The standard modules use this to report server response time, measured at the server.

| `Path` | Type | Notes |
|---|---|---|
| request [0] count | integer | 100/(sampling rate). For example, 4 => 25%. |
| request [0] durationMetric.value | number | Time from the request arriving to the response. 1e7 == 1s |
| request [0] id | string | Operation id |
| request [0] name | string | GET/POST + URL base. Max length 250 |
| request [0] responseCode | integer | HTTP response sent to the client |
| request [0] success | boolean | Default == (responseCode < 400) |
| request [0] url | string | Not including host |
| request [0] urlData.base | string | |
| request [0] urlData.hashTag | string | |
| request [0] urlData.host | string | |

Page view performance

Sent by the browser. Measures the time to process a page, from the user initiating the request to display complete (excluding async AJAX calls).

Context values show the client OS and browser version.

| `Path` | Type | Notes |
|---|---|---|
| clientPerformance [0] clientProcess.value | integer | Time from the end of receiving the HTML to displaying the page. |
| clientPerformance [0] name | string | |
| clientPerformance [0] networkConnection.value | integer | Time taken to establish a network connection. |
| clientPerformance [0] receiveRequest.value | integer | Time from the end of sending the request to receiving the HTML in reply. |
| clientPerformance [0] sendRequest.value | integer | Time taken to send the HTTP request. |
| clientPerformance [0] total.value | integer | Time from starting to send the request to displaying the page. |
| clientPerformance [0] url | string | URL of this request |
| clientPerformance [0] urlData.base | string | |
| clientPerformance [0] urlData.hashTag | string | |
| clientPerformance [0] urlData.host | string | |
| clientPerformance [0] urlData.protocol | string | |

Page views

Sent by trackPageView() or stopTrackPage.

| `Path` | Type | Notes |
|---|---|---|
| view [0] count | integer | 100/(sampling rate). For example, 4 => 25%. |
| view [0] durationMetric.value | integer | Value optionally set in trackPageView() or by startTrackPage() - stopTrackPage(). Not the same as the clientPerformance values. |
| view [0] name | string | Page title. Max length 250 |
| view [0] url | string | |
| view [0] urlData.base | string | |
| view [0] urlData.hashTag | string | |
| view [0] urlData.host | string | |

Availability

Reports availability web tests.

| `Path` | Type | Notes |
|---|---|---|
| availability [0] availabilityMetric.name | string | availability |
| availability [0] availabilityMetric.value | number | 1.0 or 0.0 |
| availability [0] count | integer | 100/(sampling rate). For example, 4 => 25%. |
| availability [0] dataSizeMetric.name | string | |
| availability [0] dataSizeMetric.value | integer | |
| availability [0] durationMetric.name | string | |
| availability [0] durationMetric.value | number | Duration of the test. 1e7==1s |
| availability [0] message | string | Failure diagnostic |
| availability [0] result | string | Pass/Fail |
| availability [0] runLocation | string | Geo source of the HTTP request |
| availability [0] testName | string | |
| availability [0] testRunId | string | |
| availability [0] testTimestamp | string | |

Metrics

Generated by TrackMetric().

The metric value is found in context.custom.metrics[0].

For example:

    {
      "metric": [ ],
      "context": {
        ...
        "custom": {
          "dimensions": [
            { "ProcessId": "4068" }
          ],
          "metrics": [
            {
              "dispatchRate": {
                "value": 0.001295,
                "count": 1.0,
                "min": 0.001295,
                "max": 0.001295,
                "stdDev": 0.0,
                "sampledValue": 0.001295,
                "sum": 0.001295
              }
            }
          ]
        }
      }
    }
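As a sketch of how the custom metric values in the record above might be read, the following Python function flattens context.custom.metrics into a name-to-value mapping. The function name is hypothetical, and the sample record is a trimmed copy of the example with the elided fields left out.

```python
def custom_metric_values(item):
    """Map each custom metric name to its `value` field.

    Walks context.custom.metrics, where every array entry is an object
    keyed by metric name.
    """
    values = {}
    metrics = item.get("context", {}).get("custom", {}).get("metrics", [])
    for entry in metrics:
        for name, metric in entry.items():
            values[name] = metric["value"]
    return values


# Trimmed copy of the example record; elided fields are omitted.
record = {
    "metric": [],
    "context": {
        "custom": {
            "dimensions": [{"ProcessId": "4068"}],
            "metrics": [{"dispatchRate": {"value": 0.001295, "count": 1.0}}],
        }
    },
}
print(custom_metric_values(record))
```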

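About metric values

Metric values, both in metric reports and elsewhere, are reported with a standard object structure. For example:

    "durationMetric": {
      "name": "contoso.org",
      "type": "Aggregation",
      "value": 468.71603053650279,
      "count": 1.0,
      "min": 468.71603053650279,
      "max": 468.71603053650279,
      "stdDev": 0.0,
      "sampledValue": 468.71603053650279
    }

Currently, although this might change in the future, in all values reported from the standard SDK modules, count==1 and only the name and value fields are useful. The only case where they would be different is if you write your own TrackMetric calls in which you set the other parameters.

The purpose of the other fields is to allow metrics to be aggregated in the SDK, to reduce traffic to the portal. For example, you could average several successive readings before sending each metric report. Then you would calculate the min, max, standard deviation, and aggregate value (sum or average) and set count to the number of readings represented by the report.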
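The aggregation described above can be sketched in Python as follows. This isn't SDK code. The function name is hypothetical, the average is used as the aggregate value (the text allows sum or average), and the population standard deviation is an assumption, because the text doesn't say which variant is expected.

```python
import statistics


def aggregate_readings(name, readings):
    """Collapse several successive readings into one metric-style object.

    Assumptions: `value` is the average of the readings and `stdDev` is
    the population standard deviation.
    """
    if not readings:
        raise ValueError("At least one reading is required")
    return {
        "name": name,
        "value": statistics.mean(readings),
        "count": float(len(readings)),
        "min": min(readings),
        "max": max(readings),
        "stdDev": statistics.pstdev(readings),
    }


# Three made-up readings collapsed into a single report.
print(aggregate_readings("queueLength", [1.0, 2.0, 3.0]))
```

With a single reading, the result has count 1.0, equal min, max, and value, and a stdDev of 0.0, which matches the shape of the durationMetric example above.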

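In the tables above, we've omitted the rarely used fields count, min, max, stdDev, and sampledValue.

Instead of pre-aggregating metrics, you can use sampling if you need to reduce the volume of telemetry.

Time durations

Except where otherwise noted, durations are represented in tenths of a microsecond, so that 10000000.0 means 1 second.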