Azure NetApp Files performance benchmarks for Linux

This article describes the performance benchmarks that Azure NetApp Files delivers for Linux.

Linux scale-out

This section describes performance benchmarks for Linux workload throughput and workload IOPS.

Linux workload throughput

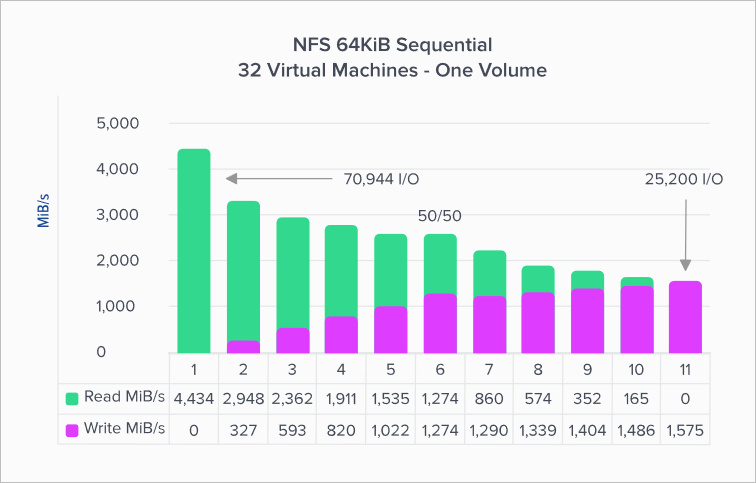

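The following graph represents a 64-kibibyte (KiB) sequential workload and a 1 TiB working set. It shows that a single Azure NetApp Files volume can handle between ~1,600 MiB/s of pure sequential writes and ~4,500 MiB/s of pure sequential reads.

The graph steps from pure read to pure write in 10% decrements. It demonstrates what you can expect when using different read/write ratios (100%:0%, 90%:10%, 80%:20%, and so on).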
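The read/write sweep described above could be scripted. The following is a minimal sketch, assuming fio generates the load (as it does in the scale-up tests later in this article). The mount path `/mnt/anf`, the job names, the I/O engine, and the 60-second runtime are hypothetical placeholders, not the settings used for the published results.

```python
def fio_mix_commands(directory="/mnt/anf", block_size="64k", size="1t", step=10):
    """Build one fio command line per read/write mix, from 100% read to 0% read.

    All defaults are illustrative assumptions, not the benchmark's real settings.
    """
    commands = []
    for read_pct in range(100, -1, -step):
        args = [
            "fio",
            f"--name=seq_mix_{read_pct}r",   # hypothetical job name
            f"--directory={directory}",      # hypothetical mount point
            "--rw=rw",                       # mixed sequential reads and writes
            f"--rwmixread={read_pct}",       # percentage of the mix that is reads
            f"--bs={block_size}",            # 64-KiB I/O size
            f"--size={size}",                # 1 TiB working set
            "--direct=1",                    # bypass the client page cache
            "--ioengine=libaio",
            "--time_based",
            "--runtime=60",
        ]
        commands.append(" ".join(args))
    return commands


if __name__ == "__main__":
    for cmd in fio_mix_commands():
        print(cmd)
```

Generating the command lines instead of running them keeps the sketch safe to execute anywhere; each line can be reviewed and then run on a client that has the volume mounted.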

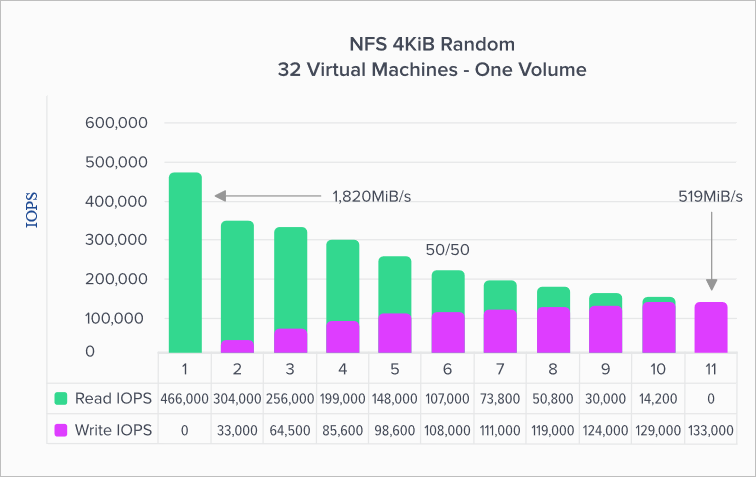

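Linux workload IOPS

The following graph represents a 4-kibibyte (KiB) random workload and a 1 TiB working set. The graph shows that an Azure NetApp Files volume can handle between ~130,000 pure random write IOPS and ~460,000 pure random read IOPS.

The graph steps from pure read to pure write in 10% decrements. It demonstrates what you can expect when using different read/write ratios (100%:0%, 90%:10%, 80%:20%, and so on).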
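To compare the IOPS figures with the throughput figures from the previous section, it can help to convert between the two using the I/O size. The sketch below is plain arithmetic on the numbers quoted in this article; the function names are illustrative.

```python
KIB_PER_MIB = 1024


def iops_to_mib_per_s(iops, io_size_kib):
    """Throughput in MiB/s implied by an IOPS figure at a fixed I/O size."""
    return iops * io_size_kib / KIB_PER_MIB


def mib_per_s_to_iops(mib_per_s, io_size_kib):
    """Operations per second implied by a throughput figure at a fixed I/O size."""
    return mib_per_s * KIB_PER_MIB / io_size_kib


if __name__ == "__main__":
    # 4-KiB random workload from this section
    print(iops_to_mib_per_s(460_000, 4))  # 1796.875 MiB/s of random reads
    print(iops_to_mib_per_s(130_000, 4))  # 507.8125 MiB/s of random writes
    # 64-KiB sequential workload from the throughput section
    print(mib_per_s_to_iops(4_500, 64))   # 72000.0 read operations per second
    print(mib_per_s_to_iops(1_600, 64))   # 25600.0 write operations per second
```

The conversion shows why the two sections report different metrics: the 4-KiB random workload issues far more operations per second while moving less data than the 64-KiB sequential workload.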

Linux scale-up

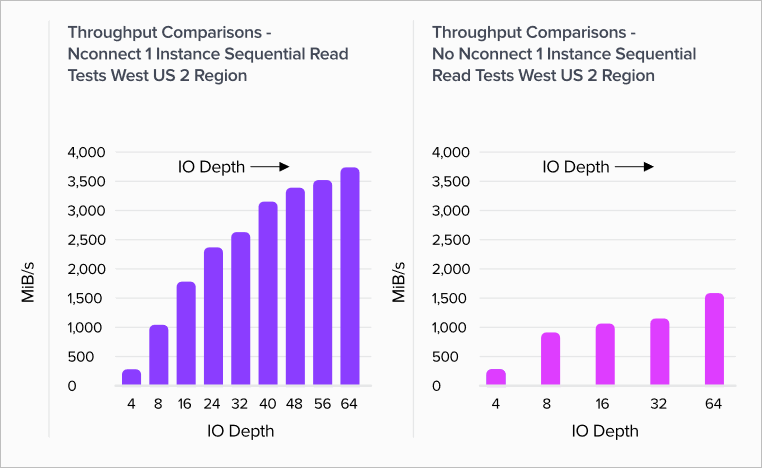

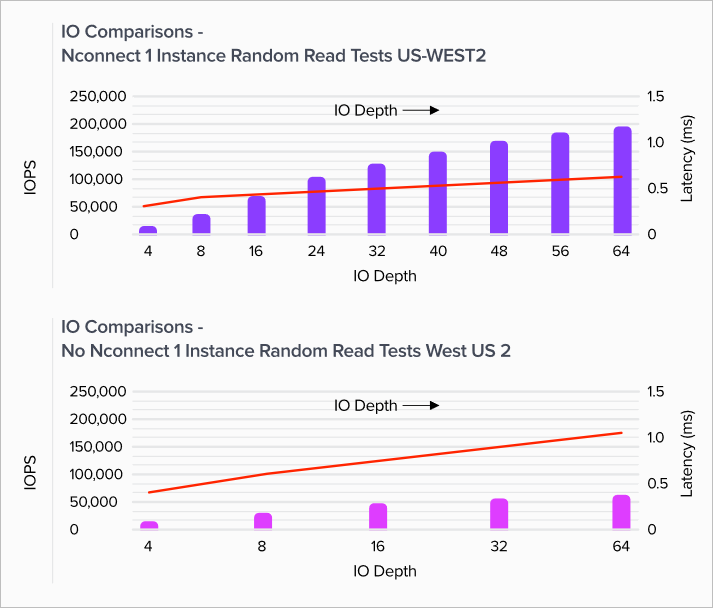

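The graphs in this section show the validation testing results for the nconnect client-side mount option with NFSv3. For more information, see the nconnect section of Linux mount options.

The graphs compare the advantages of nconnect against a volume mounted without nconnect. In the graphs, FIO generated the workload from a single D32s_v4 instance in the us-west2 Azure region using a 64-KiB sequential workload, which was the largest I/O size that Azure NetApp Files supported at the time of testing. Azure NetApp Files now supports larger I/O sizes. For more information, see the rsize and wsize section of Linux mount options.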
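For illustration, the two mount variants being compared might look like the output of the sketch below. This is an assumption-laden example, not the exact command used in the tests: the server address, export path, mount point, the `hard` and `tcp` options, and the nconnect value of 8 are all hypothetical. The 1 to 16 range check reflects the limit documented for the Linux NFS client's `nconnect` option.

```python
def nfs_mount_command(server, export, mount_point, nconnect=None, io_size_kib=64):
    """Build an NFSv3 mount command, optionally with the nconnect option.

    rsize/wsize are set to io_size_kib (64 KiB matches the tested I/O size).
    """
    if nconnect is not None and not 1 <= nconnect <= 16:
        raise ValueError("nconnect must be between 1 and 16")
    io_bytes = io_size_kib * 1024
    options = [
        "rw",
        "hard",
        "vers=3",
        "tcp",
        f"rsize={io_bytes}",
        f"wsize={io_bytes}",
    ]
    if nconnect is not None:
        # Open several TCP connections to the same server for this mount
        options.append(f"nconnect={nconnect}")
    option_string = ",".join(options)
    return f"sudo mount -t nfs -o {option_string} {server}:{export} {mount_point}"


if __name__ == "__main__":
    # Hypothetical server address, export, and mount point
    print(nfs_mount_command("10.0.0.4", "/vol1", "/mnt/anf"))
    print(nfs_mount_command("10.0.0.4", "/vol1", "/mnt/anf", nconnect=8))
```

The only difference between the two printed commands is the trailing `nconnect=8` option, which is the variable the graphs in this section isolate.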

Linux read throughput

The following graphs show 64-KiB sequential reads of ~3,500 MiB/s with nconnect, roughly 2.3 times the throughput without nconnect.

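Linux write throughput

The following graphs show sequential writes. They indicate that nconnect has no noticeable benefit for sequential writes. 1,500 MiB/s is roughly both the upper limit for sequential writes to the volume and the egress limit of the D32s_v4 instance.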

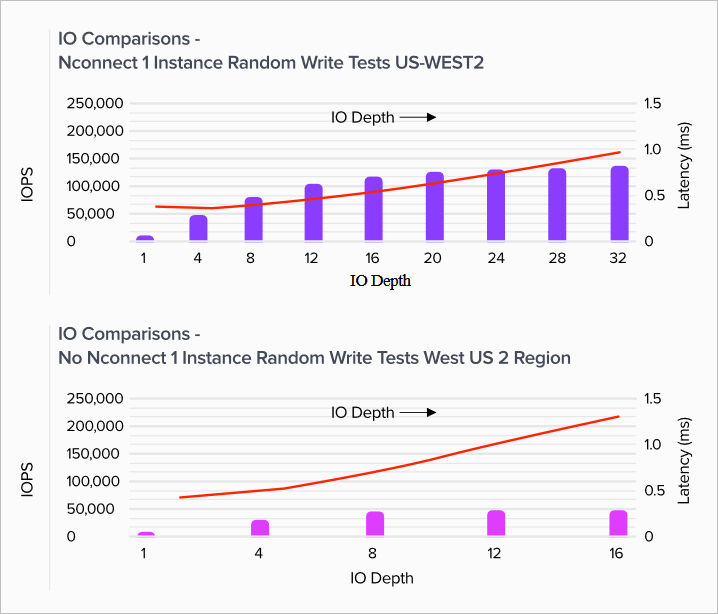

Linux read IOPS

The following graphs show 4-KiB random reads of ~200,000 read IOPS with nconnect, roughly 3 times the read IOPS without nconnect.

Linux write IOPS

The following graphs show 4-KiB random writes of ~135,000 write IOPS with nconnect, roughly 3 times the write IOPS without nconnect.
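The multipliers quoted in this section imply approximate results for a mount without nconnect. The sketch below back-calculates them from the rounded figures above; the outputs are estimates derived from "roughly 2.3X" and "roughly 3X", not measured values.

```python
# (result with nconnect, approximate speedup over a mount without nconnect)
RESULTS = {
    "64-KiB sequential read (MiB/s)": (3_500, 2.3),
    "4-KiB random read (IOPS)": (200_000, 3.0),
    "4-KiB random write (IOPS)": (135_000, 3.0),
}


def implied_baseline(with_nconnect, speedup):
    """Estimate the result without nconnect from the quoted speedup factor."""
    return with_nconnect / speedup


if __name__ == "__main__":
    for name, (value, speedup) in RESULTS.items():
        baseline = implied_baseline(value, speedup)
        print(f"{name}: ~{baseline:,.0f} without nconnect")
```

Sequential writes are left out because the article reports no noticeable nconnect benefit for them.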